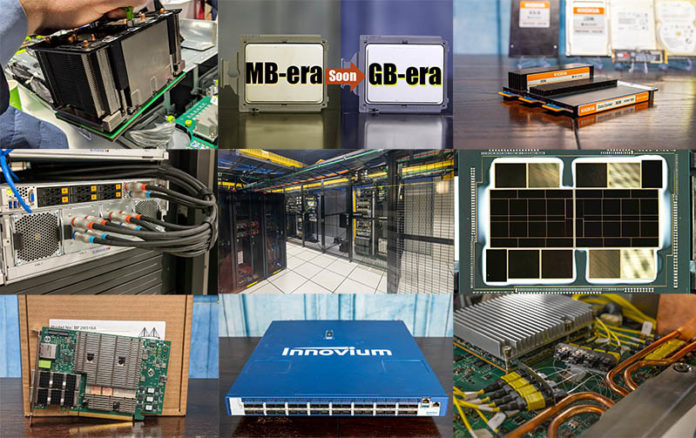

Over the past few months, regular readers will have noticed that we have been running a series looking at the key technologies that will impact our readers in the next few quarters. The transition of server architecture in 2022 is going to be quite unlike what we saw in the Xeon E5 era from ~2012 to 2017, and one could argue that era extended through Skylake/Naples to 2019. The fact that we have had around seven years of relatively low innovation means that there are now folks with 9-10 years of experience who have never seen a massive shift in server architecture. Our Planning for Servers in 2022 and Beyond series has been focused on showing just how much will change in the very near future.

CPUs and Interconnects in 2022 and Beyond

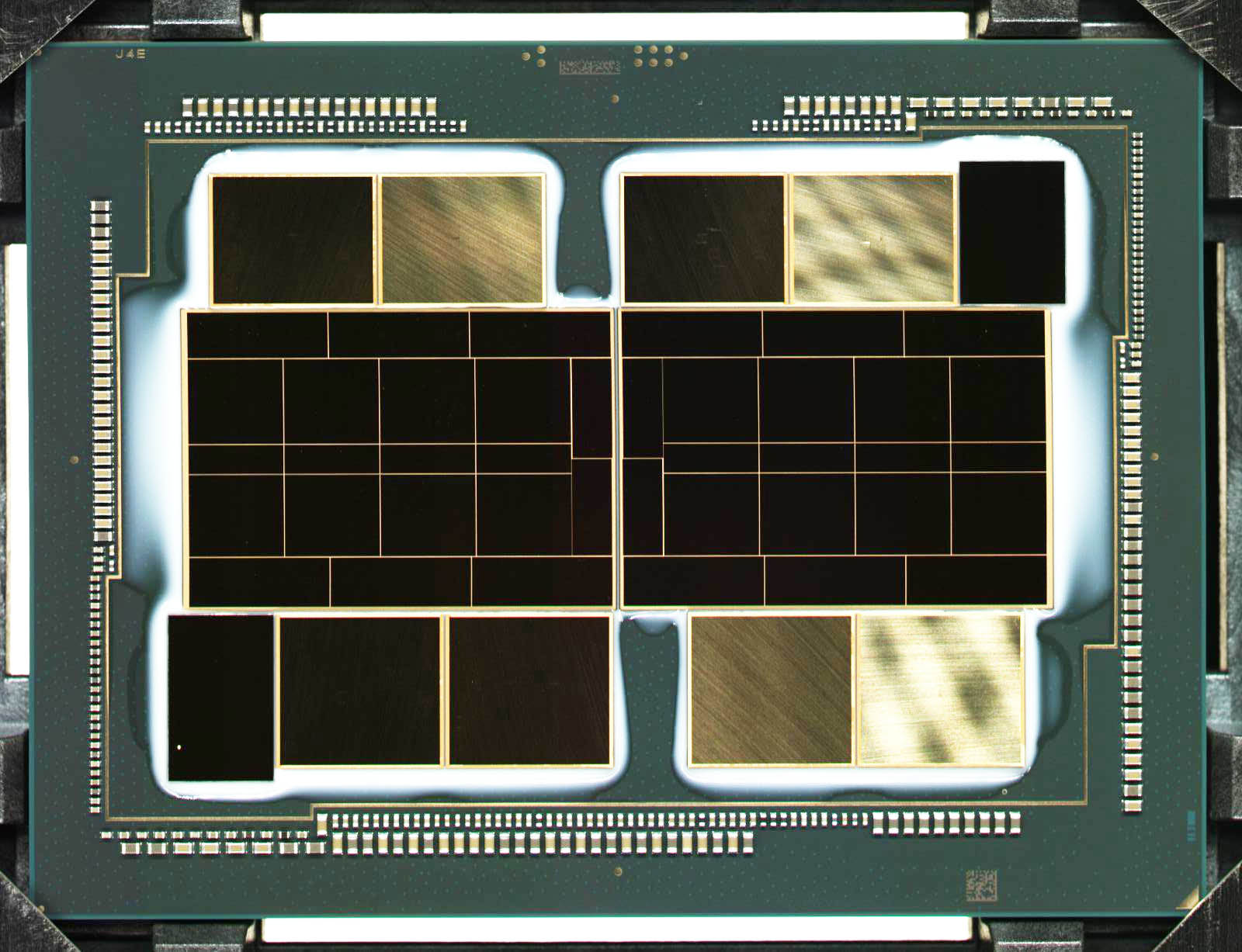

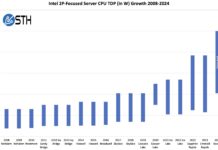

The relatively well-known changes to server CPUs in 2022 will be the DDR5 transition along with higher core counts. We have covered the move to chiplets and more advanced packaging for some time and these technologies will help drive much of the industry’s change over the next few years.

We also focused on some of the less-discussed changes. One of them is simply the increase in onboard “close” memory on CPUs that we will see in different forms starting really in 2022. We discussed this in Server CPUs Transitioning to the GB Onboard Era.

We also discussed the transition to Arm servers and how that ecosystem is evolving in our review of a modern Arm server platform: Ampere Altra Wiwynn Mt. Jade Server Review The Most Significant Arm Server. This will be a big change and will have a slightly different focus than many of the x86 servers we have seen to date.

Moving beyond the chips themselves, CXL will become an enormous force in the industry starting in 2022 and will pick up further as we get to CXL 2.0 and beyond. You can check out our piece Compute Express Link or CXL What it is and Examples for more on that.

The CPU, and perhaps more importantly the role of the CPU in servers, is going to change drastically over the next few quarters. After CXL we will get Gen-Z, and we have already started seeing systems with prototypes for it, as shown in our Gen-Z in Dell EMC PowerEdge MX piece.

Storage Trends

One of the biggest trends we are going to see is the EDSFF shift to the various E1 and E3 form factors. This is the shift away from form factors designed around rotating disks and toward something designed for PCIe/CXL devices, including flash storage. EDSFF allows for more power and cooling for higher-power devices, whether those are traditional SSDs, computational storage devices, or even AI accelerators. You can read more about that in our E1 and E3 EDSFF to Take Over from M.2 and 2.5 in SSDs article.

We also discussed Intel Optane persistent memory and how that will change a bit in future generations, including why Micron shifted its focus away from DIMM-attached 3D XPoint. You can read about that in Glorious Complexity of Intel Optane DIMMs and Micron Exiting 3D XPoint.

There are still a few more storage pieces we want to cover. We covered SAS4 a number of times, but we felt these are going to be the more impactful changes.

Networking and Fabric

On the networking side, the industry over the last 12 months or so has coalesced around the DPU terminology. We have a bit about why DPUs are needed and how to determine if a device is a DPU in What is a DPU A Data Processing Unit Quick Primer.

We also have the STH NIC Continuum Framework that we are using to evaluate different types of network devices. The DPU space, for example, has many types of devices and although in the lab we have mostly shown the Mellanox-NVIDIA BlueField-2 in STH articles, we also have done hands-on with the Fungible DPU-based Storage Cluster.

Networking is an area that must continually evolve to faster speeds in order to deal with not just bigger servers, but also faster-connected devices with technologies such as 5G at the edge. We have done a number of AI platform and O-RAN platform reviews at STH, but it is always fun to look at the switches. One example is Inside an Innovium Teralynx 7-based 32x 400GbE Switch. This week we covered Marvell Acquiring Innovium, a deal that recognizes this trend and platform.

Looking beyond these 32x 400GbE switches, we are going to transition to an era of needing silicon photonics and co-packaged optics. That will have to happen perhaps not in 2022, but we are only a few years away.

We have also been covering the transition to 2.5GbE for the campus edge, but that coverage is less focused on traditional servers. We have also looked at some of the accelerators for 5G infrastructure since that is an entire space with a massive amount of investment.

AI Accelerators

The AI accelerator market will move to the OAM form factor that we started covering in 2019 in Facebook OCP Accelerator Module OAM Launched and Facebook Zion Accelerator Platform for OAM.

We are seeing OAM as a major force in the AI accelerator industry in 2022 and beyond even if there are some very cool alternatives out there such as making one large chip as Cerebras Systems does.

At the edge, we now have almost a dozen articles focused on using cards like the NVIDIA T4 or PCIe-based A100 modules for edge inferencing in traditional servers, as well as in mobile/ruggedized and O-RAN-style form factors. Since a huge application is going to be video analytics, we have started covering more video/edge AI platforms such as the Xilinx Kria KV260 FPGA-based Video AI Development Kit. These platforms will be part of a tidal shift in data flowing from the edge to the core of networks. AI inference will be a feature in almost every server in the very near future, from the cloud to the edge.

Power and Cooling

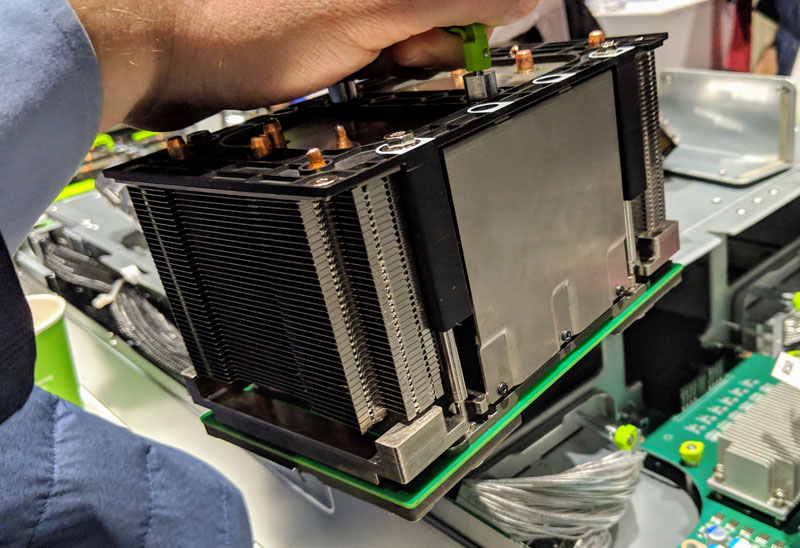

We recently looked at some of the liquid cooling options in Liquid Cooling Next-Gen Servers Getting Hands-on with 3 Options.

The next generation of servers will certainly use more power and require more cooling so we have started covering those solutions. They are a major reason we are working on a massive behind-the-scenes upgrade.

Final Words

The purpose of this Friday piece was simply to discuss some of the biggest changes that we have been covering on STH that will happen in 2022 and beyond. These are the immediate changes as the pace of innovation in the data center speeds up. For our readers who feel more comfortable in the slower pace of innovation from 2012 to 2017 or 2019, starting next year we are going to see massive shifts. The implication of this is that planning needs to happen today to ensure facilities are ready to take on the new operating models in 2022 and beyond.

Some of our keen readers will also notice that we have focused on doing videos for many of these big 2022 and beyond topics. There are still several on the to-do list and some that we have covered and not featured above, but there is also limited time until the next generation arrives. The point is: get ready for a wild ride.
