Last week I had the chance to talk to Kurtis Bowman of Dell EMC and Barry McAuliffe of HPE about Compute Express Link or CXL. Both are also big proponents of Gen-Z another cache coherent interconnect consortium. This week, I saw Kurtis at Dell Technologies World in Las Vegas where the company was demonstrating Gen-Z in the PowerEdge MX. I wanted to take a few minutes and talk about both the Gen-Z demo as well as the CXL discussion.

Dell EMC PowerEdge MX Running Gen-Z

In our Dell EMC PowerEdge MX review, we noted that it is a chassis designed for the Gen-Z future. At Dell Technologies World, Dell EMC showed off the Gen-Z future. Here is Gen-Z replacing one of the fabric modules in the PowerEdge MX and an external server outside of the chassis reading/ writing the same memory.

I may have called this “the most expensive paint demo” but it showed shared memory updating. I was also promised another demo later this year.

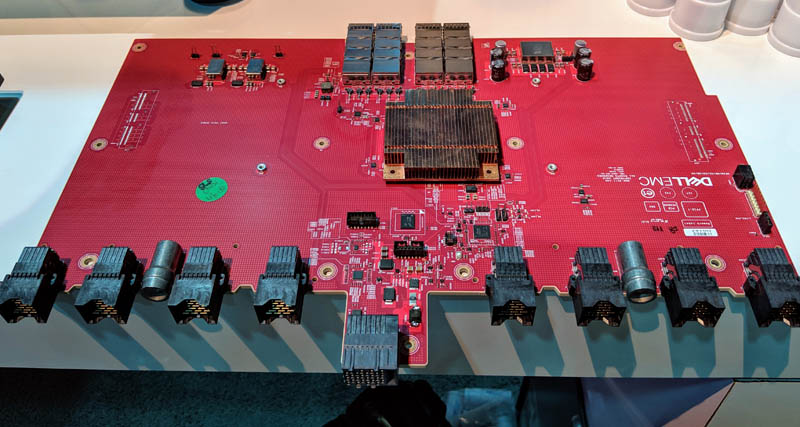

In a particular treat, I was able to see one of the prototype fabric modules.

One can see external cabling at the rear. Under the middle heatsink, one can see a Xilinx FPGA that makes Gen-Z possible. One can also see the connectors that connect this PCB to the individual nodes in the PowerEdge MX’s “no midplane” design. You can read more about that in our review.

Clarifying Gen-Z and CXL Domains

A lot of folks were confused by the fact that we now have two cache coherent protocols Gen-Z and CXL. Speaking with Kurtis and Barry was intriguing as representatives from the two largest server vendors had a very similar view.

There is still a lot of work to do, but this model offered made a lot of sense. Gen-Z was created with PHYs that were focused on chassis-to-chassis communication. CXL 1.0 is based on a PCIe Gen5 physical layer. PCIe works well in a chassis, but struggles going to longer ranges. High-end external PCIe cabling like what we saw with the Facebook Zion Accelerator Platform for OAM shows just how intense even short PCIe cables can get.

If one then thinks of CXL as the protocol for in-chassis hardware such as local memory, storage, and accelerators and Gen-Z as chassis-to-chassis, one can understand how these co-exist and why Dell EMC and HPE are pushing both protocols. In the PowerEdge MX (MX7000) demo, we are seeing Gen-Z going from chassis to chassis.

When I asked Kurtis and Barry about where the first implementations we are going to see CXL, I was told that expect it in the early 2020’s we should start seeing accelerators start to use CXL as that was a primary focus for the CXL 1.0 spec. A CXL 1.1 spec is likely to come out soon to clarify early questions the consortium has received.

Looking further into the future, I asked about how long until we see memory controllers being on the far side of the bus from the CPU. Memory controllers take up a lot of silicon on modern CPUs and the idea of using a high-speed link is one that has been around for some time. Barry and Kurtis were a bit less definitive here saying we may start to see this in the DDR5 generation.

Another key learning from our discussion is that as of our discussion there were 57 members of CXL but only 38 on their current member page. We were told that beyond the big names in the industry on the public member page, there are some very large names that are not publicly listed at this time.

Final Words

We are now seeing systems designed for future Gen-Z support start to demo Gen-Z. The industry wants cache coherent interconnects across devices. It seems like Gen-Z is gaining momentum and maturity at the same time a complimentary Compute Express Link is rapidly adding consortium members.

For users, ultimately Gen-Z and CXL have the potential to increase efficiency and create a more agile infrastructure. Tomorrow’s infrastructure will certainly be more modular than we see today and these consortiums will help drive a more diverse hardware ecosystem.

If only Intel were to come out with a CPU that had an Integrated FPGA for I/O on PCIe pins so an external FPGA was not required for these prototypes.

Memory on CXL/GenZ really only makes sense in the case are massive shared memory pool or massive aux memory pool. And by massive, you are looking at basically a full base board filled with DIMMs. Doesn’t make sense in any other case due to the massive latency increases along with significant bandwidth limitations. Realistically, if you were going to do it, you would want something that also supported NV memory in mass quantities.

So the real question is what use cases require/want on the order of 256+ TB of memory. And does the combination of those use cases have enough of a market to justify the investment? I can’t think of any good use cases, off the top of my head, outside of massive in memory DBs which is an extremely limited market at that scale. Esp since all the external market drivers are heavily shared nothing based (almost the whole point in cloud scale computing as an example).