At OCP Summit 2019, Facebook showed off a new accelerator platform. Dubbed the Facebook Zion, the platform is the first to accept the new OCP Accelerator Module form factor, or “OAM”. We have more on the modules here: Facebook OCP Accelerator Module OAM Launched. The Zion platform combines an array of eight CPUs and eight accelerators with high-speed interconnects. In this article, we are going to go over what that system looks like, and share some perspective we got from the company and from hands-on time with the platform’s hardware.

Facebook Zion Overview

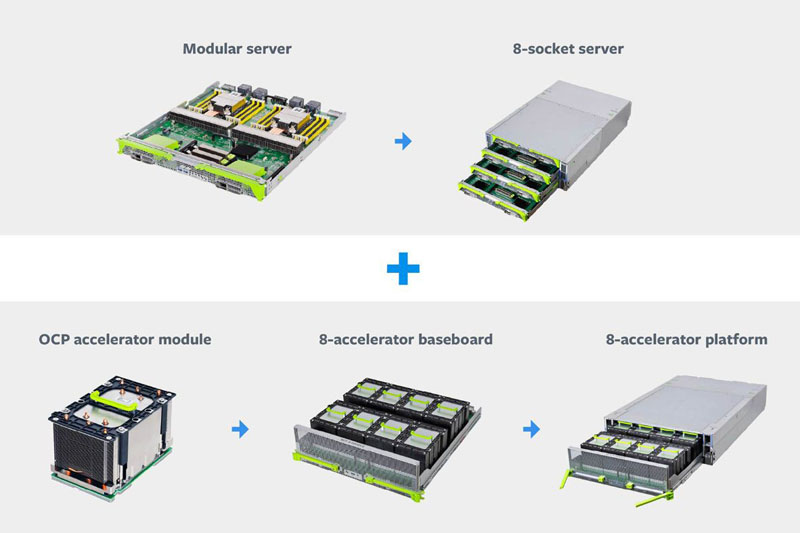

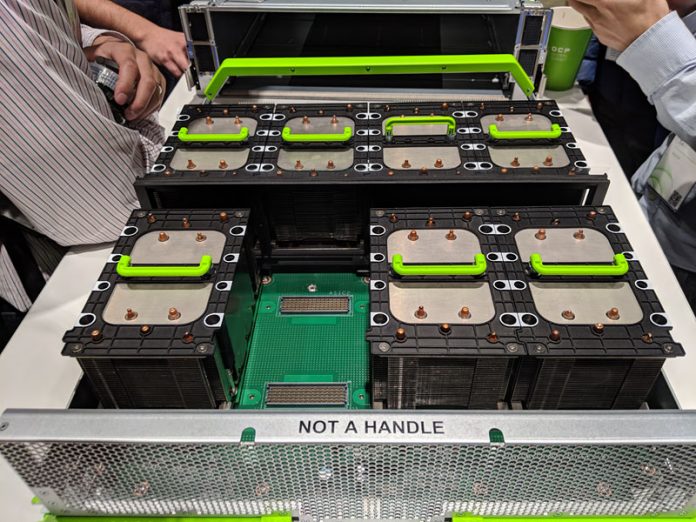

The Facebook Zion comprises eight CPUs and eight accelerators. The platform is disaggregated insofar as the server CPUs are in modular sleds and the accelerators sit in a separate modular system.

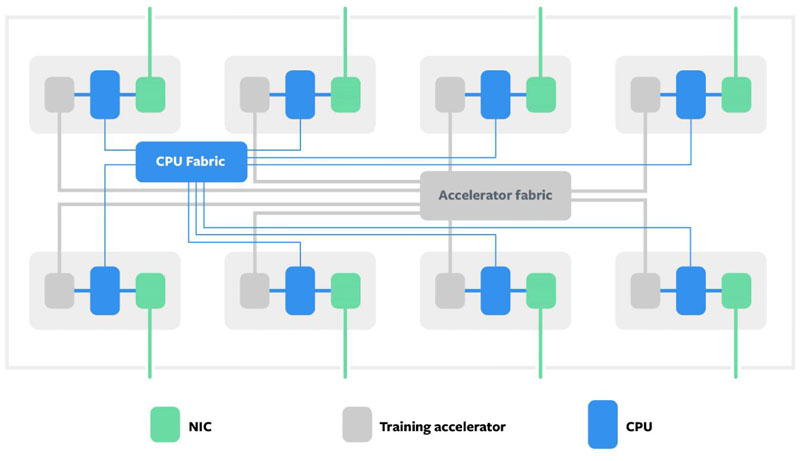

The eight CPUs sit on four dual-socket servers. These are then connected to the accelerator platform that houses the accelerators. Everything is tied together using separate fabrics for the network, the CPUs, and the accelerators.

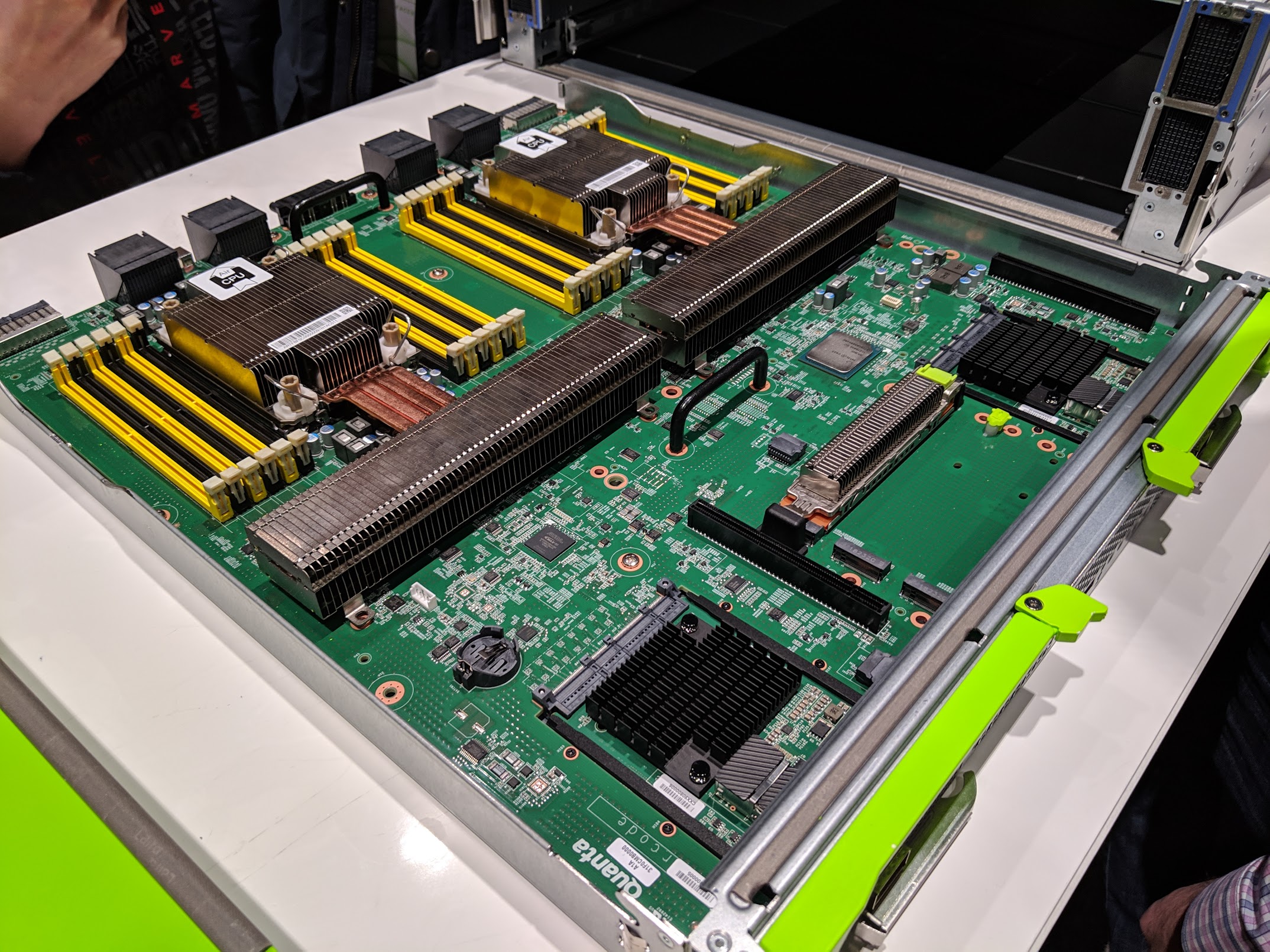

The server module itself is a modular dual-socket solution. Facebook had LGA3647 Intel Xeon Skylake/Cascade Lake solutions on display at the show. One can see the server with large heatsinks, twelve DIMMs per CPU, and OCP NIC 3.0 modules.

Four of these dual-socket servers fit in a chassis, giving eight sockets' worth of compute, memory, and network fabric connectivity.

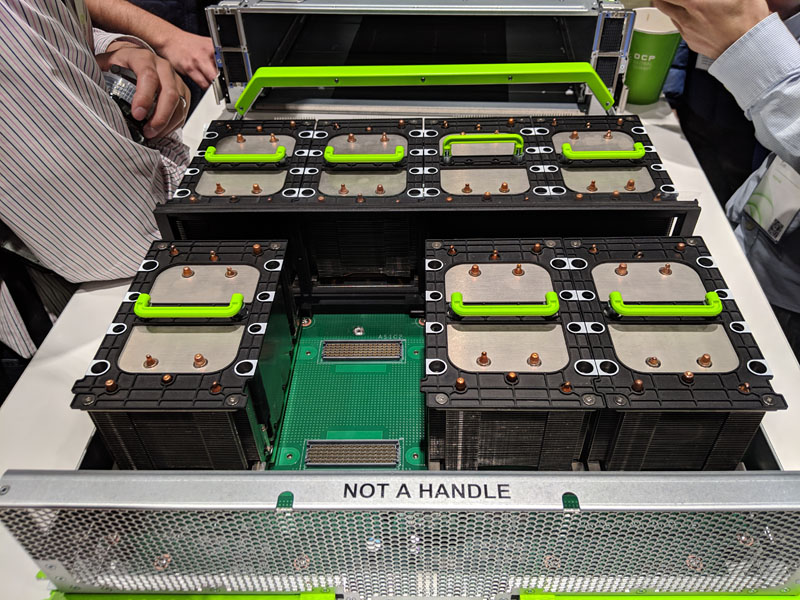

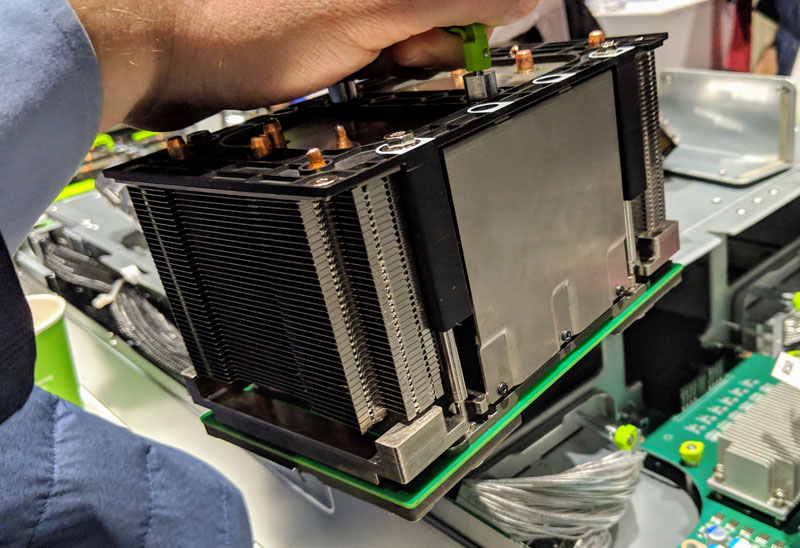

Accelerators are housed in a separate chassis. This chassis is designed for the unique thermal requirements of accelerators. The particular chassis on display was designed for air cooling. One can see the air gaps between the accelerators are minimized. Along the top of the heatsink is an interlocking plastic cover that also serves as a keying solution to keep the heatsinks aligned in the chassis for proper airflow.

Each OAM is designed for around 450W on air cooling. As a result, each module carries an absolutely massive heatsink that weighs many pounds.
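To put the per-module figure in context, here is a rough back-of-the-envelope sketch of the accelerator power an eight-OAM shelf would need to cool. It uses only the "around 450W" and eight-accelerator figures from this article. The function name is our own, and the result ignores fans, retimers, and power conversion losses, so treat it as a lower bound rather than a shelf specification.

```python
# Figures taken from the article.
OAM_POWER_W = 450    # "around 450W" per module on air cooling
ACCELERATORS = 8     # eight accelerators in a Zion system


def accelerator_power_w(modules: int = ACCELERATORS,
                        per_module_w: int = OAM_POWER_W) -> int:
    """Approximate power drawn by the accelerators alone, in watts.

    Hypothetical helper for illustration; excludes fans, cabling,
    and power-delivery losses.
    """
    return modules * per_module_w


if __name__ == "__main__":
    total = accelerator_power_w()
    print(f"{ACCELERATORS} x {OAM_POWER_W} W = {total} W ({total / 1000:.1f} kW)")
```

That is roughly 3.6kW of accelerator heat in one air-cooled chassis, which helps explain the size of the heatsinks.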

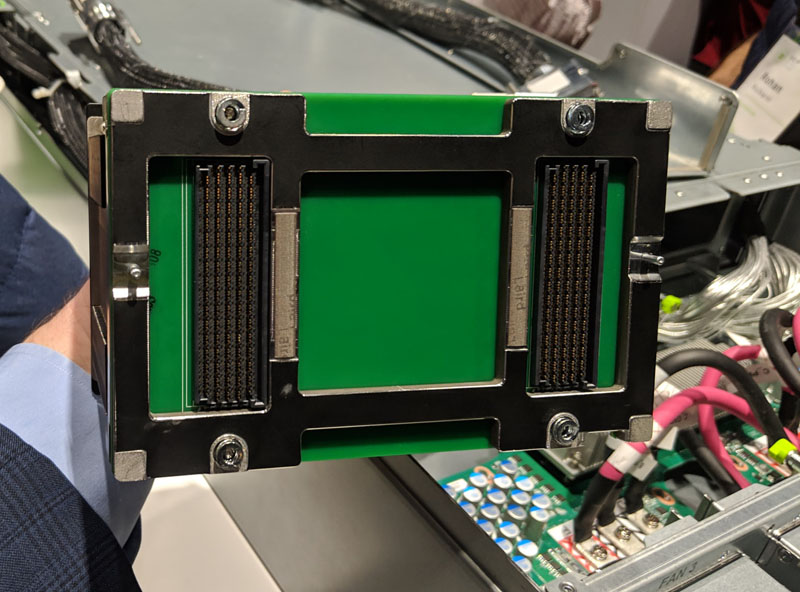

Underneath the mechanical assembly, one can see the OAM connectors. These provide power and data to the accelerators. While they look much like NVIDIA SXM2/3 connectors, they are designed to work across vendors instead of being NVIDIA specific. The OAM spec also includes connectivity for accelerator-to-accelerator communication.

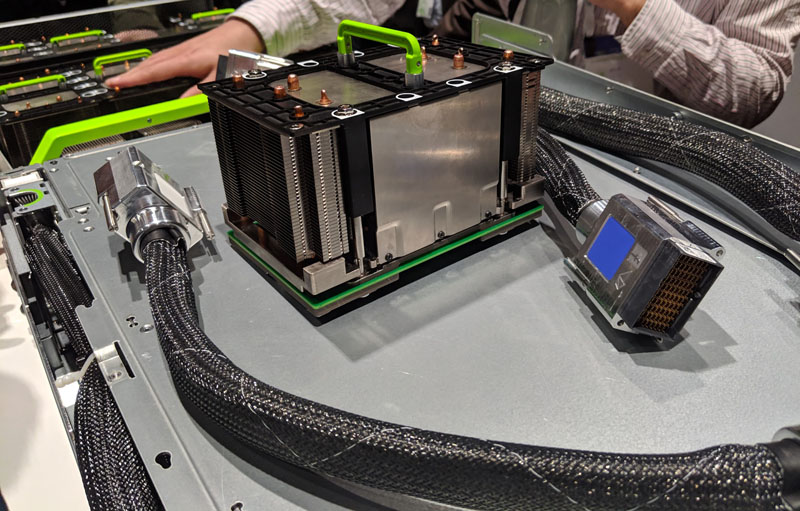

One item that is noticeable in the booth is the cabling. While the OAM heatsink is huge, the PCIe cabling does not look out of place.

To maintain signal quality between the OAM platform and the compute servers, Facebook is using a number of cables that route internally, as well as from the OAM shelf to the CPU compute nodes.

Here is a sense of the scale of these connectors using a standard business card.

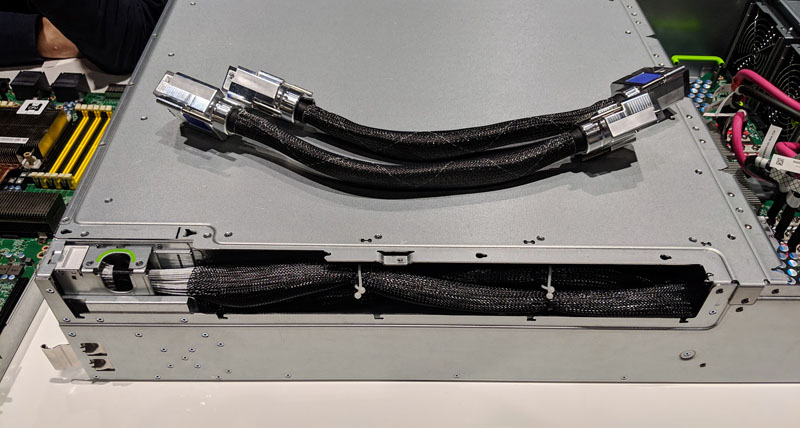

Zion cabling was custom designed by Facebook. We were told each of these connectors carries eight PCIe x16 links, or 128 lanes, between chassis. That allows Facebook to maintain high density while also minimizing cabling complexity. For some perspective, each of these 128-lane cables carries more PCIe lanes than the CPUs in a modern dual-socket Intel Xeon Scalable server expose (96).
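The lane comparison above is simple arithmetic, sketched below. The eight x16 links per cable come from what we were told at the show. The 48 lanes per CPU figure is the published number for Skylake and Cascade Lake Xeon Scalable parts, not something Facebook stated. The bandwidth helper assumes the links run at PCIe 3.0 rates (8 GT/s with 128b/130b encoding), which matches the CPUs on display but was not confirmed for the cables themselves. All function names are our own.

```python
# From the article: each cable carries eight x16 links.
LINKS_PER_CABLE = 8
LANES_PER_LINK = 16

# Assumption (not from the article): 48 PCIe 3.0 lanes per
# Skylake / Cascade Lake Xeon Scalable CPU.
LANES_PER_CPU = 48
SOCKETS_PER_SERVER = 2


def cable_lanes(links: int = LINKS_PER_CABLE,
                lanes_per_link: int = LANES_PER_LINK) -> int:
    """Total PCIe lanes carried by one Zion cable."""
    return links * lanes_per_link


def server_lanes(sockets: int = SOCKETS_PER_SERVER,
                 lanes_per_cpu: int = LANES_PER_CPU) -> int:
    """Total CPU-attached PCIe lanes in one server."""
    return sockets * lanes_per_cpu


def pcie3_gbytes_per_s(lanes: int) -> float:
    """Raw per-direction PCIe 3.0 bandwidth in GB/s.

    Assumes 8 GT/s per lane and 128b/130b line encoding;
    protocol overhead is ignored.
    """
    bits_per_s = lanes * 8e9 * (128 / 130)
    return bits_per_s / 8 / 1e9


if __name__ == "__main__":
    print("lanes per cable:       ", cable_lanes())
    print("lanes per 2S server:   ", server_lanes())
    print(f"cable GB/s (Gen3, raw): {pcie3_gbytes_per_s(cable_lanes()):.1f}")
```

Under those assumptions, one cable carries about a third more lanes than a full dual-socket server's CPUs provide, and on the order of 126 GB/s of raw bandwidth in each direction.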

Final Words

The Facebook Zion platform is nothing if not impressive. Having built and reviewed 8x GPU systems, like the Gigabyte G481-S80 8x NVIDIA Tesla GPU Server using NVIDIA SXM2 modules, it is easy to see how this is a step forward in the infrastructure. Beyond Facebook, companies such as Microsoft and Baidu have signed on to the OAM form factor. With major CSPs adopting OAM, SXM2/3 may have limited time left in the market as volume will likely follow the new OAM form factor. As such, we expect to see more designs, and possibly more use of the Zion platform elsewhere.

Always wondered at what length of PCIe extension problems start to appear… Also heard of tunneled PCI attempts which ended in failure. Accelerator fabric here is NVLink?

Linus did a test once; he started to notice artifacts on the screen at about 3 meters with PCIe 3.0 x16.

@necr I had a buddy who took care of an IBM Power based large NUMA shared memory machine a while back. It was multiple chassis in a single 42U rack; each chassis had a couple of CPUs and a bunch of RAM sticks. He told me it used PCIe3 to link the machines together to achieve a global address space. The PCIe3 was running across really nice looking black & copper ribbon cables on the front of the rack. So PCIe3 could go 42U.