In every era, there is a server design that is aspirational in their design. The NVIDIA DGX-1 was perhaps that server of its generation. What NVIDIA did with the DGX-1 was create a server that was a headliner in terms of performance, but they did something further, they allowed server partners to innovate atop of the base design. Gigabyte did that with its variant, we are going to call a “DGX-1.5”. The Gigabyte G481-S80 is an 8x Tesla GPU server that supports NVLink. We call it a DGX-1.5 since it uses the newer Intel Xeon Scalable architecture. That means there are a number of improvements under the hood that make it a generational improvement over the NVIDIA DGX-1.

There is a lot going on with the Gigabyte G481-S80 so we are going to start with a basic server hardware overview followed by the process of diving into the hardware during the actual build. We are going to show off the resulting topology of the system as that should be a major factor in your deep learning server design. After that is complete, we will shift our focus to performance, management, and power consumption before our summary. This is a huge review, so take some time to read through the details.

Gigabyte G481-S80 SXM2 Tesla GPU Server Overview

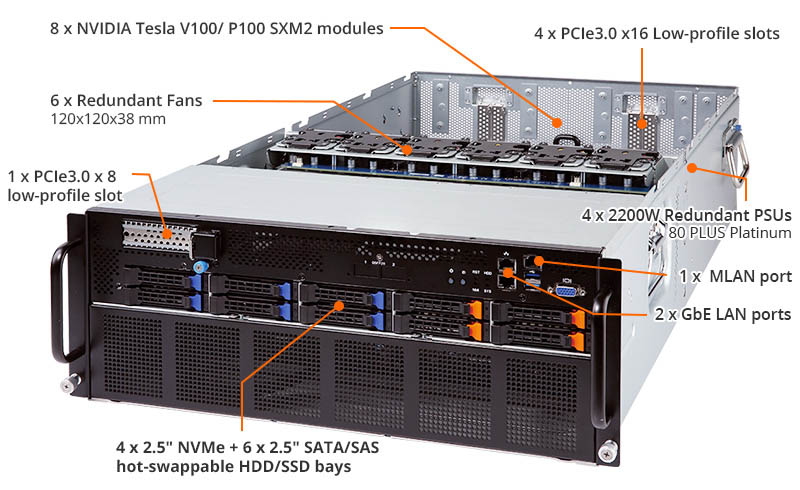

The Gigabyte G481-S80 is a 4U server that is focused around GPU compute. Key features include supporting dual Intel Xeon Scalable CPUs which also means 6 channel memory support. That can add over 50% more CPU memory bandwidth and additional cores for data conditioning in a data science workflow. Another benefit is that the system has more PCIe lanes for fast NVMe storage. We were able to use both traditional NAND NVMe and Optane U.2 drives in the system’s 4x front panel NVMe bays. Servers like DeepLearning11 with 10x NVIDIA GTX 1080 Ti’s typically utilized either all SATA or 1-2 NVMe devices for storage.

Here is Gigabyte’s overview of the machine:

Rounding out storage there is a PCIe 3.0 x8 slot up front for the additional 6x SAS/ SATA bays or adding additional NVMe storage. The front panel is extremely interesting as it also has the management and 1GbE provisioning network ports along with the local KVM cart VGA and USB connectivity. In our lab racks, this meant that we needed to source KVM cart power from the rear of the rack while bringing a KVM cart to the cold aisle.

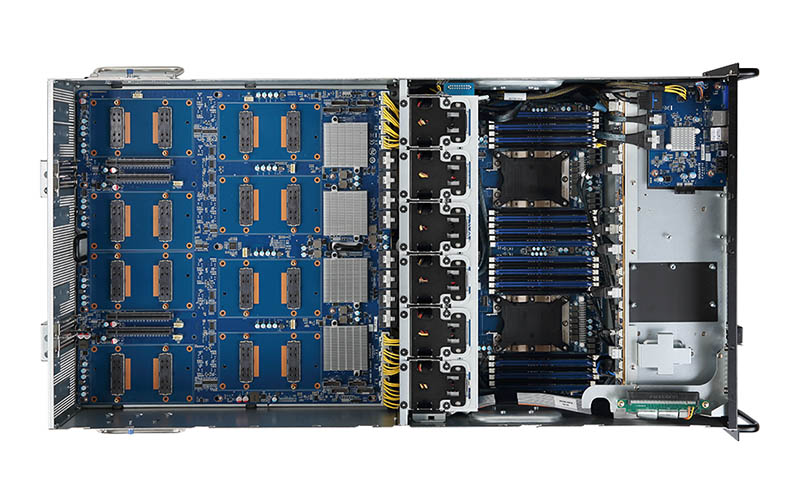

Instead of being built around a PCIe GPU architecture as found in many workstations, it uses a special SXM2 GPUs. The NVIDIA SXM2 form factor being used here is the DGX-1 form factor. NVIDIA’s 10kW 16-GPU DGX-2/ HGX-2 uses a different type of SXM2 module. SXM2 allows for NVLink communication across GPUs which greatly speeds up GPU to GPU transfers versus traditional PCIe solutions.

Our Gigabyte G481-S80 supports both Tesla P100 and Tesla V100 generation NVLink. As part of our research on this piece, you can generally use Tesla P100 SXM2 modules in Tesla V100 motherboards just fine. Putting Tesla V100 cards in Tesla P100 NVLink motherboards can be problematic.

The rear of the chassis has four low profile expansion slots for the four PCIe 3.0 x16 internal slots. Here you are going to put Mellanox ConnectX-4 or later networking either for EDR Infiniband 100GbE, or both. We are going to have more on that during this review, but given how popular Mellanox is in these applications, they could almost put their names on these slots. Networking in the rear also includes a hot swap tray with an OCP mezzanine card slot next to the power supplies.

Beyond the networking, there are four 2.2kW power supplies to provide redundant power. In our testing, this array is large enough that it can handle a failure of an A or B power supply. These power supplies also have C20 plugs due to their power rating so you need to pick your C19/ C20 PDU infrastructure accordingly when you deploy these in racks.

One quick note, there are five pairs of holes covered up in the picture above. This is clearly meant for liquid cooling applications and these holes are meant for tubes. These machines generate a lot of heat and so liquid cooling with rack level or facility heat exchangers we can see as a common application.

Gigabyte has a video online showing the overview of the machine using a rendered image which makes showing off the interior components easier.

Next, we are going to look at the process of building our DeepLearning12 around the Gigabyte G481-S80.

Gromacs would be a nice benchmark to see.

Thanks for doing more than AI benches. I’ve sent you an e-mail through the contact form on a training set we use a Supermicro 8 GPU 1080 Ti system for. I’d like to see comparison data from this Gigabyte system.

Another thorough review

It’s too bad that NVIDIA doesn’t have a $2-3K GPU for these systems. Those P100’s you use start in the $5-6K each GPU range and the V100’s are $9-10K each.

At $6K per GPU that’s $48K per GPU, or two single root PCIe systems. Add another $6K for Xeon Gold, $6K for the Mellanox cards, $5K for RAM, $5K for storage and you’re at $70K as a realistic starting price.

Regarding the power supplies, when you said 4x 2200W redundant, it means that you can have two out of the four power supplies to fail right?

I’m asking this because I’m might be running out of C20 power socket in my rack and I want to know if I can plug only two power supplies.

Sorry, my question’s reply is in the marketing video.

They are 2+N power supplies.

Interested if anyone has attempted a build using 2080Tis? Or if anyone at STH would be interested. The 2080 Ti appears to show much greater promise in deep-learning than it’s predecessor (1080Ti), and some sources seem to state the Turing architecture is able to perform better with FP16 without using a single many tensorcores as former Volta architecture. Training tests on tensorflow done by server company lambda also show great promise for the 2080Ti.

Since 2080Ti support 2-way 100Gb/sec bidirectional NVlink, I’m curious if there are any 4x, 8x (or more?) 2080ti builds that could be done by linking each pair of cards with nvlink, and using some sort of mellanox gpu direct connectX device to link the pairs. Mellanox’s new connectX-5 and -6 are incredibly fast as well. If a system like that is possible, I feel it’d be a real challenger in terms of both compute-speed and bandwidth to the enterprise-class V100 systems currently available.

Cooling is a problem on the 2080 Ti’s. We have some in the lab but the old 1080 Ti Founder’s edition cards were excellent in dense designs.

The other big one out there is that you can get 1080 Ti FE cards for half the price of 2080 Ti’s which in the larger 8x and 10x GPU systems means you are getting 3 systems for the price of two.

It is something we are working on, but not 100% ready to recommend that setup yet. NVIDIA is biasing features for the Tesla cards versus GTX/ RTX.