STH TensorFlow GAN Training

We are going to have a follow-up piece, *hopefully* using MLperf. Our original intent was to use the entire MLperf suite for benchmarking. Little did we realize that many of the benchmarks released to date do not take advantage of multiple GPUs out of the box. While the beta MLperf v0.5 works well on single GPUs, many of its models do not scale to multiple GPUs using NVIDIA NCCL. That matters because training on a single GPU or a single system is quaint, though acceptable for learning. Crunching larger models requires faster interconnects.

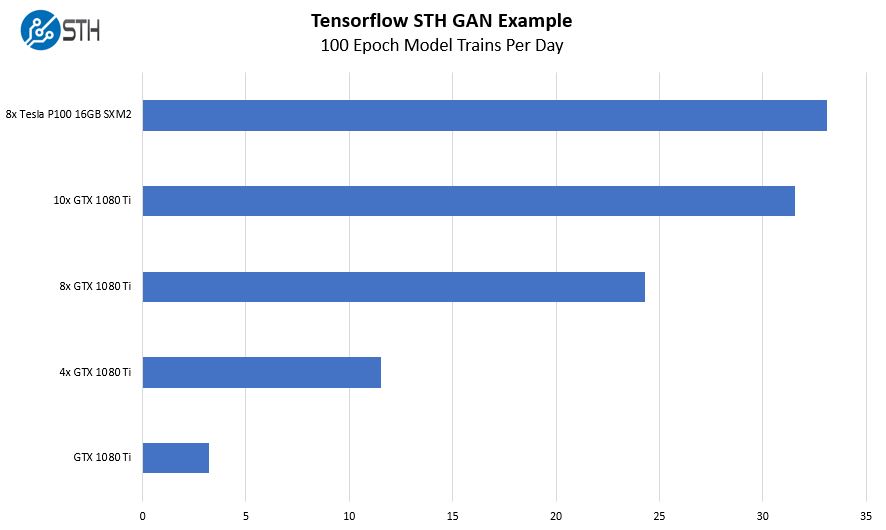

We took our sample TensorFlow Generative Adversarial Network (GAN) image training test case and ran it on single cards, then stepped up to the 8x GPU system. We expressed our results in terms of training cycles per day.
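
Results are expressed in training cycles per day. As a minimal sketch, assuming each training cycle is timed in wall-clock seconds, the conversion and the usual scaling-efficiency check (measured multi-GPU rate divided by N times the single-GPU rate) look like this. The function names and timings below are hypothetical illustrations, not STH's harness or measurements.

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400 seconds in a day


def cycles_per_day(seconds_per_cycle: float) -> float:
    """Convert a measured wall-clock time per training cycle into cycles per day."""
    if seconds_per_cycle <= 0:
        raise ValueError("seconds_per_cycle must be positive")
    return SECONDS_PER_DAY / seconds_per_cycle


def scaling_efficiency(multi_gpu_rate: float, single_gpu_rate: float, num_gpus: int) -> float:
    """Fraction of ideal linear speedup achieved; 1.0 means perfect scaling."""
    return multi_gpu_rate / (single_gpu_rate * num_gpus)


if __name__ == "__main__":
    # Hypothetical timings for illustration only, not STH results.
    single = cycles_per_day(600.0)  # 600 s per cycle on one GPU
    eight = cycles_per_day(90.0)    # 90 s per cycle on eight GPUs
    print(f"1 GPU:  {single:.0f} cycles/day")
    print(f"8 GPUs: {eight:.0f} cycles/day")
    print(f"Scaling efficiency: {scaling_efficiency(eight, single, 8):.1%}")
```

An efficiency well below 1.0 on eight GPUs is the symptom described above for models that do not scale across cards.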

Here is a view of what we miss when we only look at single-card NVIDIA GeForce GTX 1080 Ti vs. Tesla P100 figures. This is an example of where the NVIDIA Tesla P100 16GB SXM2's NVLink architecture, plus its stronger performance, yields a tangible benefit.

We are going to have more training benchmarks in our subsequent DeepLearning12 piece, where we will start using MLperf and other tools. For now, we wanted to provide some continuity between this review and our previous DeepLearning10 and DeepLearning11 reviews.

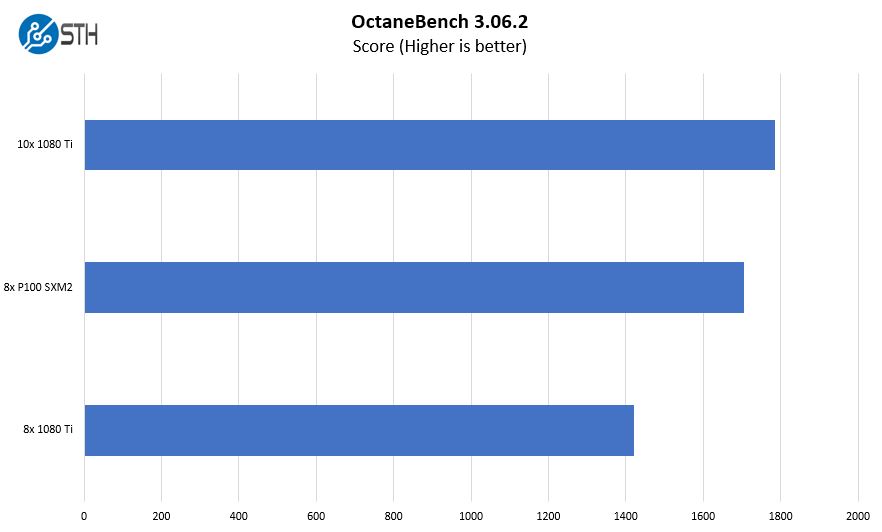

OTOY OctaneBench 3.06.2

We added rendering to the benchmark suite for the Gigabyte G481-S80 review to show another aspect readers often ask us about.

Here the performance is good, but it is not going to sway someone to buy an 8x Tesla SXM2 machine over a GeForce GTX 1080 Ti machine. At the same time, if you are running mixed workloads, the Tesla P100s show excellent performance. In a Kubernetes cluster or cloud, rendering is another task the Gigabyte G481-S80 will handle well.

The Dark Horse: VDI

This ended up as a really interesting reader request. We are not going to do VDI performance testing of the 8x Tesla P100 16GB solution. We will note that, with the appropriate Quadro vDWS or GRID licensing, you can support anywhere from two power users with four GPUs each to sixty-four GPU-accelerated VMs with 2GB each.
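
The sixty-four figure follows from simple framebuffer arithmetic. As a minimal sketch, assuming each VM receives a fixed 2GB slice of a single card's memory and that slices do not span cards (actual vGPU profile availability depends on NVIDIA's licensing and drivers), the range works out as follows. The helper names are hypothetical.

```python
def vms_per_card(card_memory_gb: int, profile_gb: int) -> int:
    """Number of fixed-size framebuffer slices that fit on one card."""
    return card_memory_gb // profile_gb


def total_vms(num_cards: int, card_memory_gb: int, profile_gb: int) -> int:
    """Total VMs across all cards, assuming slices never span cards."""
    return num_cards * vms_per_card(card_memory_gb, profile_gb)


if __name__ == "__main__":
    # 8x Tesla P100 16GB, as in the system reviewed here.
    print(total_vms(8, 16, 2))  # 8 cards * (16 GB / 2 GB) = 64 VMs
    # The other extreme: whole GPUs dedicated to power users.
    gpus_per_power_user = 4
    print(8 // gpus_per_power_user)  # 2 power users with 4 GPUs each
```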

Again, if you are running infrastructure where you have VDI by day and want to train on nights and weekends, the Gigabyte G481-S80 is a really interesting option. Consumer-level GPUs do not have this capability, and many VDI-specific GPUs lack the larger NVLink implementations that are useful for training. If you are considering a VDI deployment, this system may be worth a look.

Next, we are going to look at networking and storage performance. We will then show some of the management features and follow that discussion with power consumption before wrapping up the review.

GROMACS would be a nice benchmark to see.

Thanks for doing more than AI benches. I've sent you an e-mail through the contact form about a training set we run on a Supermicro 8x GPU 1080 Ti system. I'd like to see comparison data from this Gigabyte system.

Another thorough review

It's too bad that NVIDIA doesn't have a $2-3K GPU for these systems. Those P100s you use start in the $5-6K range per GPU, and the V100s are $9-10K each.

At $6K per GPU, that's $48K for the eight GPUs, or the cost of two single-root PCIe systems. Add another $6K for Xeon Gold, $6K for the Mellanox cards, $5K for RAM, and $5K for storage, and you're at $70K as a realistic starting price.
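
The estimate above is a straight sum. This sketch simply totals the commenter's rough figures (eight GPUs at $6K plus the listed components); it covers only the items the comment names and is not a quote for any specific configuration.

```python
# Rough USD figures taken from the comment above; only the listed items are included.
GPU_PRICE = 6_000
NUM_GPUS = 8
other_components = {
    "Xeon Gold CPUs": 6_000,
    "Mellanox cards": 6_000,
    "RAM": 5_000,
    "Storage": 5_000,
}

gpu_total = GPU_PRICE * NUM_GPUS
system_total = gpu_total + sum(other_components.values())

print(f"GPUs:   ${gpu_total:,}")
print(f"System: ${system_total:,}")
```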

Regarding the power supplies: when you said 4x 2200W redundant, does that mean two of the four power supplies can fail?

I'm asking because I might be running out of C20 power sockets in my rack, and I want to know if I can plug in only two power supplies.

Sorry, the answer to my question is in the marketing video.

They are 2+N power supplies.
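
As a minimal sketch of the redundancy question, assuming "2+N" means two 2200W supplies must be present to carry the full load and any additional supplies are redundant (check Gigabyte's documentation for the actual policy and any throttling behavior), the margin works out as follows. The helper names are hypothetical.

```python
PSU_WATTS = 2200
REQUIRED = 2  # assumption: "2+N" read as two supplies needed for full load


def redundancy_margin(installed: int, required: int = REQUIRED) -> int:
    """How many supplies can fail or stay unplugged before the system is underpowered."""
    return max(installed - required, 0)


def survives(installed: int, failures: int, required: int = REQUIRED) -> bool:
    """True if the supplies left after `failures` still meet the required count."""
    return installed - failures >= required


if __name__ == "__main__":
    print(redundancy_margin(4))   # 2 of 4 supplies can fail
    print(redundancy_margin(2))   # 0: runs on two, but with no redundancy
    print(REQUIRED * PSU_WATTS)   # 4400 W from the required pair
```

Under this reading, plugging in only two supplies should power the system but leaves no margin for a supply or circuit failure.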

Has anyone attempted a build using 2080 Tis? Or would anyone at STH be interested? The 2080 Ti appears to show much greater promise in deep learning than its predecessor (the 1080 Ti), and some sources state that the Turing architecture can perform better at FP16 without using as many Tensor Cores as the former Volta architecture. TensorFlow training tests done by server company Lambda also show great promise for the 2080 Ti.

Since the 2080 Ti supports 2-way NVLink at 100GB/s bidirectional, I'm curious whether 4x, 8x (or more?) 2080 Ti builds could be done by linking each pair of cards with NVLink and using some sort of Mellanox GPUDirect-capable ConnectX device to link the pairs. Mellanox's new ConnectX-5 and ConnectX-6 are incredibly fast as well. If a system like that is possible, I feel it'd be a real challenger, in both compute speed and bandwidth, to the enterprise-class V100 systems currently available.

Cooling is a problem on the 2080 Tis. We have some in the lab, but the older 1080 Ti Founders Edition cards were excellent in dense designs.

The other big issue is that you can get 1080 Ti FE cards for half the price of 2080 Tis, which in the larger 8x and 10x GPU systems means you are getting three systems for the price of two.

It is something we are working on, but we are not 100% ready to recommend that setup yet. NVIDIA is biasing features toward the Tesla cards versus GTX/RTX.