Gigabyte G481-S80 Power Consumption

We installed the server in our data center on 208V 30A Schneider Electric APC PDUs. We measured power with the Intel Xeon Gold 6138 CPUs installed and saw some great figures:

- Idle: 0.79kW

- 70% Load: 2.61kW

- 100% Load: 3.32kW

- Peak: 3.49kW

Note these results were taken using a 208V Schneider Electric / APC PDU at 17.7C and 71% RH. Our testing window shown here had a +/- 0.3C and +/- 2% RH variance. Ambient temperature and humidity are important because they greatly influence server power consumption, especially in densely populated GPU servers such as this.

Overall, this is excellent power consumption, actually lower than we saw in some of our 1080 Ti based systems. At the same time, this is around 1kW/U, so you are likely going to want to spec 40kW racks. Not all data centers can handle this power, especially facilities in office buildings that were built for lower-power compute racks.
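As a rough illustration of the rack math above, here is a minimal Python sketch that converts the measured figures into kW per rack unit and counts how many servers fit in a rack power budget. The 4U chassis height is our assumption for this sketch (it is not stated in this section), and the function names are purely illustrative.

```python
import math

# Measured wall power from the list above, in kW.
MEASURED_KW = {"idle": 0.79, "70% load": 2.61, "100% load": 3.32, "peak": 3.49}

CHASSIS_U = 4          # assumption: 4U chassis height (not stated in this section)
RACK_BUDGET_KW = 40.0  # the 40kW rack figure from the text


def kw_per_u(server_kw, chassis_u=CHASSIS_U):
    """Power density of one server in kW per rack unit."""
    return server_kw / chassis_u


def servers_per_rack(server_kw, rack_budget_kw=RACK_BUDGET_KW):
    """Whole servers that fit in the rack power budget (ignores rack space)."""
    return math.floor(rack_budget_kw / server_kw)


if __name__ == "__main__":
    for label, kw in MEASURED_KW.items():
        print(f"{label:>9}: {kw_per_u(kw):.2f} kW/U, "
              f"{servers_per_rack(kw)} servers per {RACK_BUDGET_KW:.0f}kW rack")
```

Under these assumptions the peak figure works out to roughly 0.87kW/U. The sketch only looks at the power budget; physical rack space, PDU circuit limits, and cooling headroom are not modeled.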

We also want to point out that power consumption varied greatly in our testing. We had workloads where the 16GB of HBM2 memory was in use and GPU utilization across the 8x GPUs was pegged at 95%, yet we saw power consumption in only the 1.4kW-1.5kW range. This is important if you are in a facility with metered power and are trying to budget. Assuming peak load at all times is not realistic for most installations.
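To show why this matters for metered power budgeting, here is a small Python sketch comparing monthly energy use at the 3.49kW peak against the 1.4kW-1.5kW range we saw on those workloads. The 730-hour month, the 1.45kW midpoint, and the price per kWh are all assumptions for illustration, not measured or quoted values.

```python
HOURS_PER_MONTH = 730  # assumption: 8760 hours per year / 12


def monthly_kwh(avg_kw, hours=HOURS_PER_MONTH):
    """Energy used in a month at a constant average draw, in kWh."""
    return avg_kw * hours


def monthly_cost(avg_kw, price_per_kwh, hours=HOURS_PER_MONTH):
    """Metered cost for a month at a constant average draw."""
    return monthly_kwh(avg_kw, hours) * price_per_kwh


if __name__ == "__main__":
    PRICE_PER_KWH = 0.10  # hypothetical rate; substitute your facility's price
    scenarios = {
        "peak at all times": 3.49,          # measured peak from this review
        "observed 95% GPU workload": 1.45,  # assumed midpoint of 1.4kW-1.5kW
    }
    for label, kw in scenarios.items():
        kwh = monthly_kwh(kw)
        cost = monthly_cost(kw, PRICE_PER_KWH)
        print(f"{label}: {kwh:.1f} kWh/month, {cost:.2f} per month")
```

Budgeting on the peak figure in this sketch gives more than twice the monthly energy of the observed workload, which is the gap to keep in mind when sizing a metered contract.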

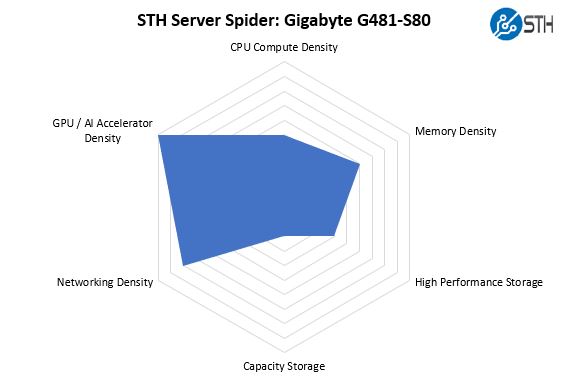

STH Server Spider: Gigabyte G481-S80

This is the second review in which we use the STH Server Spider. Our goal is to give a quick visual depiction of the types of parameters a server is targeted at.

The focus of the Gigabyte G481-S80 is simple: it is designed to be a GPU compute server. One of the interesting parts of the server is that it has a relatively dense storage configuration. It is also designed for high-end networking with a standard configuration having over 400Gbps of networking.

Final Words

We really like this platform. Unlike solutions built around PCIe switches, the Gigabyte G481-S80 achieves a balance. Storage and networking capabilities are well beyond what most PCIe GPU systems offer, and that is a big deal. Moving from one system to many systems is where one is quickly presented with challenges feeding the GPUs. As a “DGX-1.5” class system, one has NVLink plus a well-conceived PCIe complex that makes the system work. Looking ahead, Cascade Lake-SP and Optane Persistent Memory will turn the Gigabyte G481-S80 into an absolutely tantalizing upgrade over NVIDIA’s DGX-1.

As you might be able to tell from this article, there is a follow-up being worked on. We did not want to publish MLPerf v0.5 results as the suite needs time to mature. We also have a few other benchmarks we want to present and a few comparison points headed into the lab this quarter. Stay tuned for DeepLearning12 content featuring the Gigabyte G481-S80.

We are also going to make a somewhat unprecedented offer. If you have a self-contained Dockerfile and want some training or other performance data, get in touch and we will see if we can schedule running it on the machine.

Gromacs would be a nice benchmark to see.

Thanks for doing more than AI benches. I’ve sent you an e-mail through the contact form on a training set we use a Supermicro 8 GPU 1080 Ti system for. I’d like to see comparison data from this Gigabyte system.

Another thorough review

It’s too bad that NVIDIA doesn’t have a $2-3K GPU for these systems. Those P100’s you use start in the $5-6K each GPU range and the V100’s are $9-10K each.

At $6K per GPU that’s $48K for the eight GPUs, or two single root PCIe systems. Add another $6K for Xeon Gold, $6K for the Mellanox cards, $5K for RAM, $5K for storage and you’re at $70K as a realistic starting price.

Regarding the power supplies, when you said 4x 2200W redundant, does that mean two of the four power supplies can fail?

I’m asking because I might be running out of C20 power sockets in my rack, and I want to know if I can plug in only two power supplies.

Sorry, the answer to my question is in the marketing video.

They are 2+N power supplies.

Interested if anyone has attempted a build using 2080 Tis? Or if anyone at STH would be interested. The 2080 Ti appears to show much greater promise in deep learning than its predecessor (1080 Ti), and some sources seem to state the Turing architecture is able to perform better with FP16 without using as many Tensor Cores as the former Volta architecture. Training tests on TensorFlow done by server company Lambda also show great promise for the 2080 Ti.

Since the 2080 Ti supports 2-way 100GB/sec bidirectional NVLink, I’m curious if there are any 4x, 8x (or more?) 2080 Ti builds that could be done by linking each pair of cards with NVLink, and using some sort of Mellanox GPUDirect ConnectX device to link the pairs. Mellanox’s new ConnectX-5 and -6 are incredibly fast as well. If a system like that is possible, I feel it’d be a real challenger in terms of both compute speed and bandwidth to the enterprise-class V100 systems currently available.

Cooling is a problem on the 2080 Ti’s. We have some in the lab but the old 1080 Ti Founder’s edition cards were excellent in dense designs.

The other big one out there is that you can get 1080 Ti FE cards for half the price of 2080 Ti’s which in the larger 8x and 10x GPU systems means you are getting 3 systems for the price of two.

It is something we are working on, but we are not 100% ready to recommend that setup yet. NVIDIA is biasing features for the Tesla cards versus GTX/RTX.