The CXL 3.1 spec was recently announced. The new spec has additional fabric improvements for scale-out CXL, new trusted execution environment enhancements, and improvements for memory expanders. A lot has happened since our "Compute Express Link or CXL What it is and Examples" piece, so let us get to it. Here is the old CXL Taco video for the introduction.

CXL 3.1 Specification Aims for Big Topologies

CXL 3.1 has a number of big changes under the hood, largely to address what happens when teams build larger CXL systems and topologies.

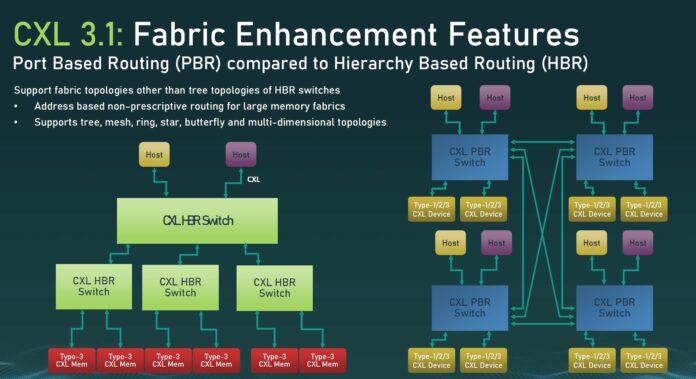

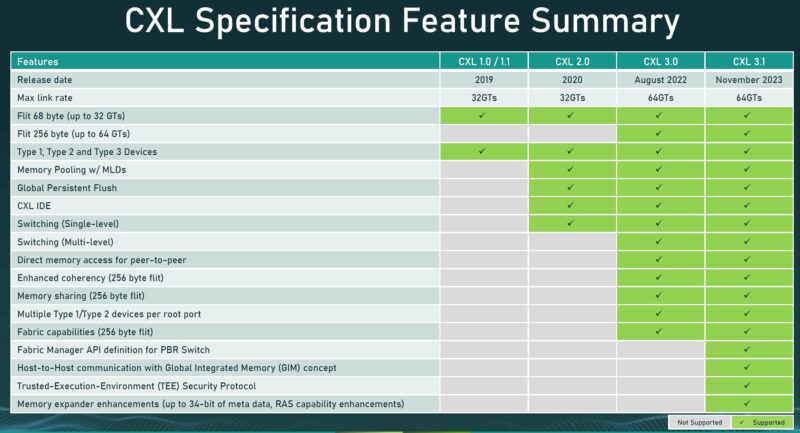

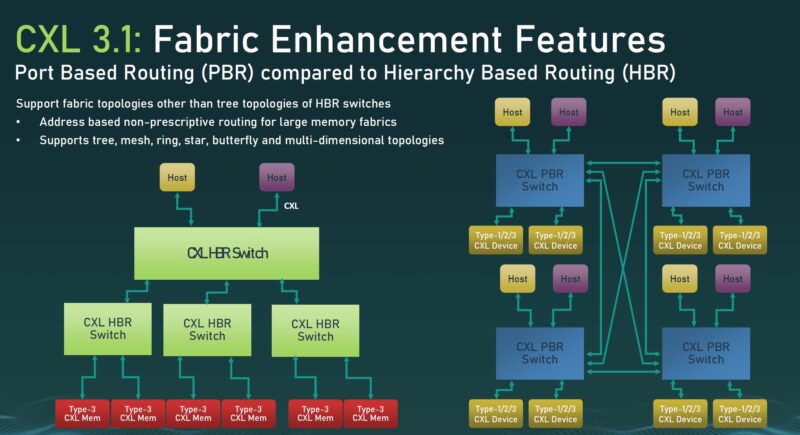

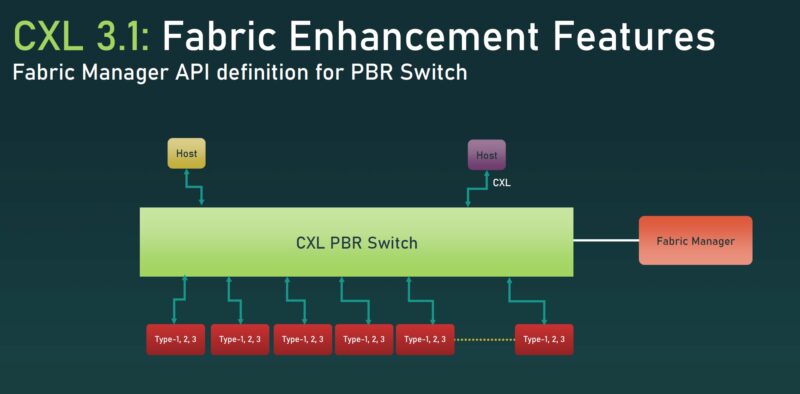

CXL 3 brings things like port-based routing (PBR), which is different from hierarchy-based routing, the latter being closer to a PCIe tree topology. PBR is needed to facilitate larger topologies and any-to-any communication.
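To make the topology difference concrete, here is a toy sketch. It is not the spec's routing mechanism, just a breadth-first search over two hypothetical link lists: one is a strict tree (as in a PCIe-style hierarchy), and the other adds a single switch-to-switch cross-link of the kind a non-tree fabric can use. The node names (`root`, `sw0`, `sw1`, `accel`, `mem`) are made up for illustration.

```python
from collections import deque


def shortest_path(links, src, dst):
    """Breadth-first search over undirected links; returns the node path or None."""
    adj = {}
    for a, b in links:
        adj.setdefault(a, set()).add(b)
        adj.setdefault(b, set()).add(a)

    prev = {src: None}  # also serves as the visited set
    queue = deque([src])
    while queue:
        node = queue.popleft()
        if node == dst:
            path = []
            while node is not None:
                path.append(node)
                node = prev[node]
            return path[::-1]
        for nxt in sorted(adj.get(node, ())):
            if nxt not in prev:
                prev[nxt] = node
                queue.append(nxt)
    return None


# Hypothetical tree: one root over two leaf switches (hierarchy-style).
tree_links = [("root", "sw0"), ("root", "sw1"), ("sw0", "accel"), ("sw1", "mem")]

# Hypothetical fabric: the same devices plus a direct switch-to-switch link.
fabric_links = tree_links + [("sw0", "sw1")]

tree_path = shortest_path(tree_links, "accel", "mem")
fabric_path = shortest_path(fabric_links, "accel", "mem")

print("tree:  ", " -> ".join(tree_path))
print("fabric:", " -> ".join(fabric_path))
```

In the tree, accelerator-to-memory traffic has to climb to the root and come back down. With the cross-link, the path skips the root entirely, which is the kind of any-to-any communication that larger topologies need.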

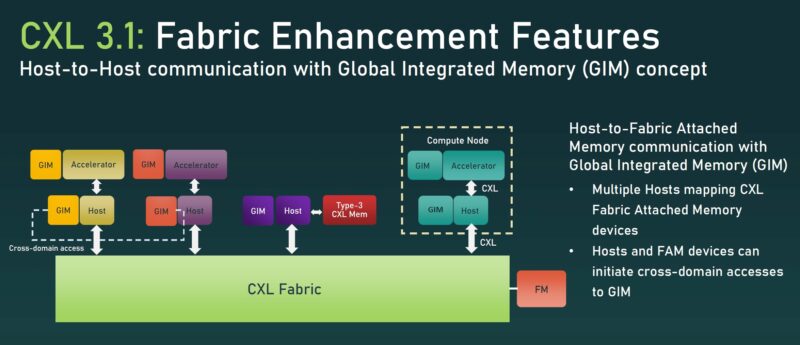

One of the CXL 3.1 enhancements is support for host-to-host communication over a CXL fabric using Global Integrated Memory (GIM).

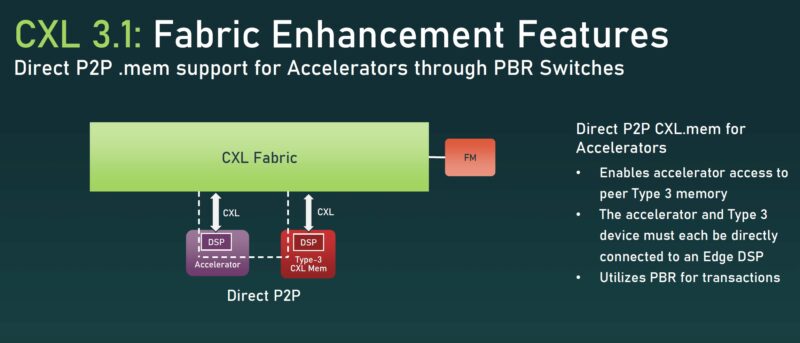

Another big one is direct P2P support for CXL.mem memory transactions. With all of the discussion about GPU memory capacity being a limiter for AI, this is the type of use case where one could add CXL memory and accelerators onto a CXL switch and have the accelerators directly use Type-3 CXL memory expansion devices.

There is also a Fabric Manager API definition for the port-based routing CXL switch. The fabric manager might end up being a key CXL ecosystem battleground since it will need to track a lot of what is going on in the cluster.

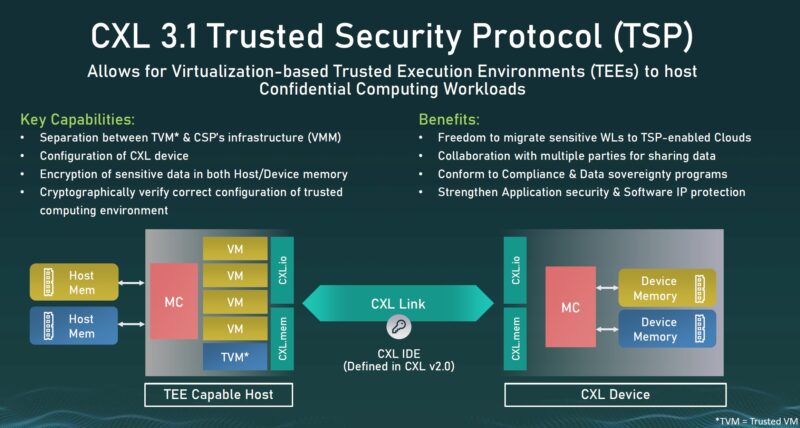

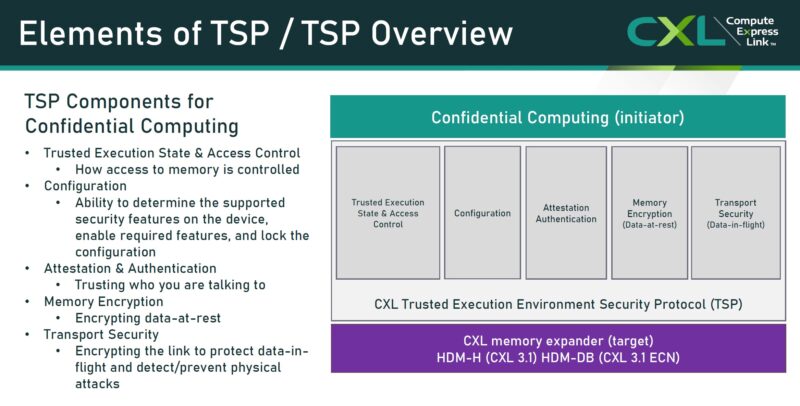

The CXL 3.1 TEE Security Protocol (TSP) is the next step in handling security on the platform. Imagine a cloud provider with multi-tenant VMs sharing devices that are connected via CXL.

As a result, confidential computing, a hot topic in today’s cloud VMs, needs to extend past the confines of a server to the devices that are attached to the fabric.

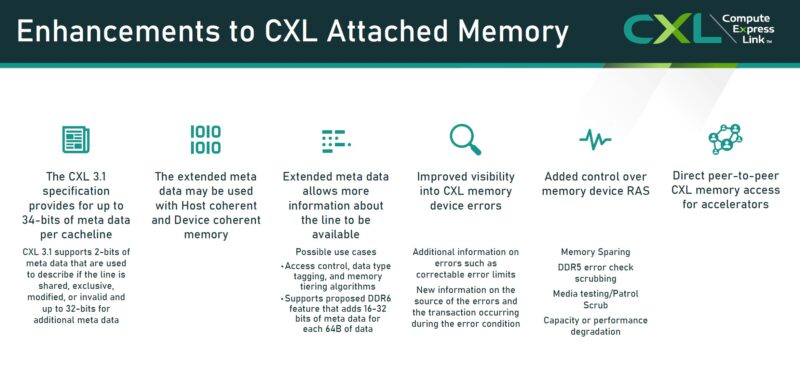

CXL-attached memory is also getting a number of RAS features, along with additional bits for metadata. Again, this is important for ensuring reliability as the topologies get bigger.

There are a surprising number of new features in CXL 3.1.

Final Words

CXL 3.0/3.1 is still far enough out in terms of products that our sense is most companies will adopt CXL 3.1 over CXL 3.0 when we see products hit the market. That said, my question to the CXL folks at Supercomputing 2023 was whether CXL 3.1 is designed to be big enough. Currently, the specification is designed for a few thousand CXL devices to be connected, yet we have AI clusters today being built with tens of thousands of accelerators. In the CXL world, there may be more attached CXL devices than today’s accelerators, so my question is whether CXL will need to scale up as well.
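For a rough sense of that gap, here is some back-of-the-envelope arithmetic. It assumes the 12-bit port-based routing ID space commonly cited for CXL 3.x fabrics (4,096 IDs), which lines up with the "few thousand" figure above. The 30,000-device cluster is a hypothetical stand-in for "tens of thousands," not a number from any vendor.

```python
# Assumption: a 12-bit PBR ID space, as commonly cited for CXL 3.x fabrics.
PBR_ID_BITS = 12
MAX_FABRIC_IDS = 2 ** PBR_ID_BITS


def fabrics_needed(devices, ids_per_fabric=MAX_FABRIC_IDS):
    """Smallest number of separate fabrics needed to give every device an ID."""
    return -(-devices // ids_per_fabric)  # ceiling division


# Hypothetical cluster size standing in for "tens of thousands" of devices.
cluster_devices = 30_000

print(f"IDs per fabric: {MAX_FABRIC_IDS}")
print(f"Fabrics needed for {cluster_devices} devices: {fabrics_needed(cluster_devices)}")
```

Under those assumptions, a cluster of that size would not fit in a single fabric and would need to be split across several, before even counting memory expanders or switches that may also consume IDs.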

There is still a lot of work to be done, but the good news is that we will start to see CXL support pick up in 2024 with use cases that are not just experimental, but also useful in production.