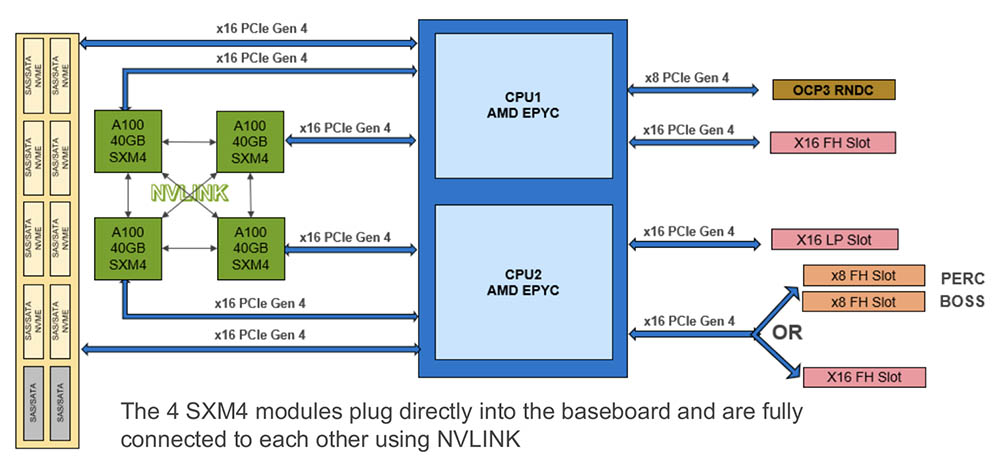

Dell EMC PowerEdge XE8545 Topology

The Dell EMC PowerEdge XE8545 is a fairly interesting server. There are a number of ways to look at a server’s topology, but this diagram is especially useful because it shows the basic layout of the PCIe lanes from front to back.

Each CPU has sixteen PCIe Gen4 lanes dedicated to the front drive bays. Those sixteen lanes support four 2.5″ NVMe SSDs per CPU, for a total of eight drives in the system. If you saw our internal overview, these lanes come from the configurable links that can be used either for socket-to-socket connections or as PCIe lanes.

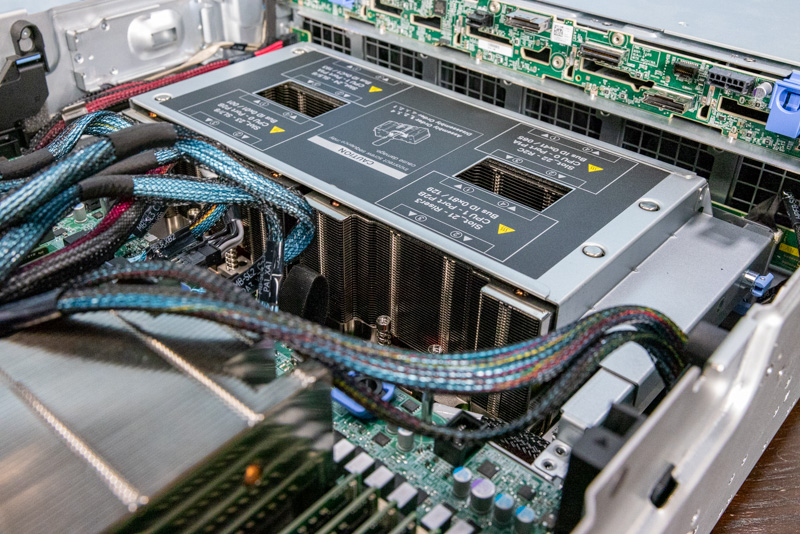

As you can see, each CPU connects to two of the GPUs, and the GPUs themselves are directly connected to each other via NVLink. Note that this diagram shows the 40GB A100 module, but the 80GB 500W module is also an option.

On the rear, each CPU gets a PCIe Gen4 x16 slot. CPU1 also gets the OCP NIC 3.0 port, while CPU2 gets either an additional x16 slot or two x8 slots.

If you are doing a quick count, you are probably at 152 PCIe lanes by now, with eight lanes unaccounted for on CPU1. That is still a bigger topology than we can get with Intel Xeon Ice Lake, but it is not the full 160 PCIe lanes. The remaining lanes are used for features such as the non-OCP NICs, SATA for the front bays, and so forth. Those were not in the diagram, but since AMD EPYC CPUs do not have a PCH for lower-value I/O, we typically see systems allocate lanes to those functions.
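To make that quick count concrete, here is a small Python tally of the lanes shown in the diagram. It is a sketch under stated assumptions: each GPU is assumed to use an x16 host link, and the OCP NIC 3.0 port is treated as x8. Neither width is spelled out above; they are simply the widths that reproduce the 152-lane total with eight lanes left on CPU1. The dictionary labels and the `tally` helper are hypothetical names for illustration.

```python
# Hypothetical tally of the XE8545 PCIe lane budget from the topology diagram.
# ASSUMPTIONS: x16 host link per GPU; OCP NIC 3.0 treated as x8 (the width
# implied by a 152-lane total with 8 lanes left over on CPU1).
LANES_PER_CPU = 80  # 160 usable Gen4 lanes split across two EPYC sockets

allocations = {
    "CPU1": {
        "front NVMe (4 drives x4)": 16,
        "GPU links (2 x16)": 32,
        "rear x16 slot": 16,
        "OCP NIC 3.0 (assumed x8)": 8,
    },
    "CPU2": {
        "front NVMe (4 drives x4)": 16,
        "GPU links (2 x16)": 32,
        "rear x16 slot": 16,
        "x16 slot or 2x x8 slots": 16,
    },
}


def tally(allocs: dict[str, dict[str, int]]) -> tuple[int, dict[str, int]]:
    """Return the total lanes shown and the lanes left over on each CPU."""
    total = 0
    leftover = {}
    for cpu, links in allocs.items():
        used = sum(links.values())
        total += used
        leftover[cpu] = LANES_PER_CPU - used
    return total, leftover


if __name__ == "__main__":
    total, leftover = tally(allocations)
    print(f"lanes shown in diagram: {total}")  # 152
    print(f"unaccounted per CPU: {leftover}")  # {'CPU1': 8, 'CPU2': 0}
```

The leftover lanes on CPU1 are where functions outside the diagram, such as the non-OCP NICs and front-bay SATA, would plausibly land.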

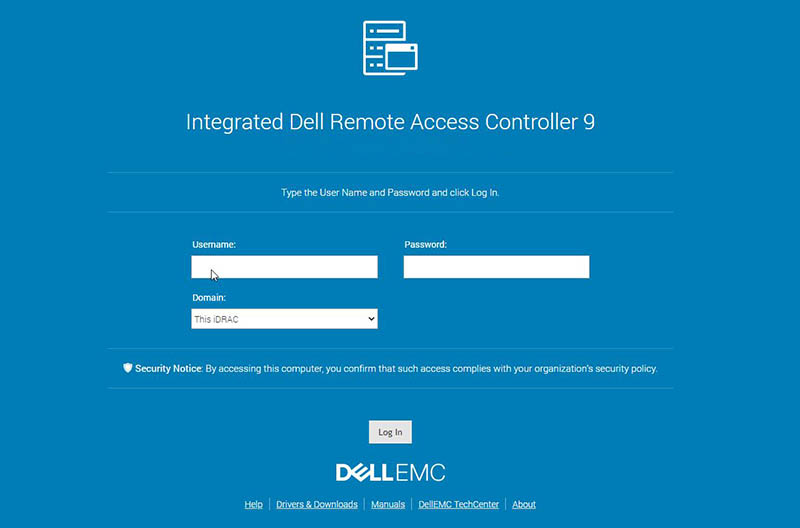

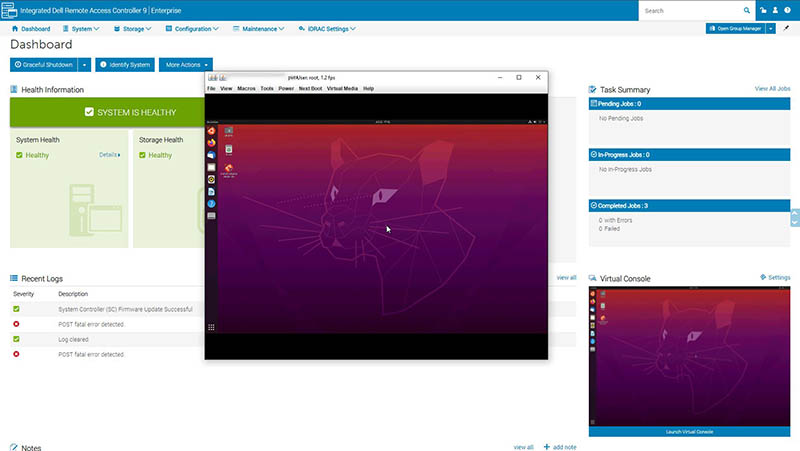

iDRAC 9 Management

One of the biggest features of the PowerEdge XE8545 is that it supports iDRAC. For those unaware, iDRAC is Dell’s server-level management solution, and its BMC is called the iDRAC controller. Those in the Dell ecosystem likely already know iDRAC well.

We are not going to spend much time on the iDRAC solution here. It is effectively similar to what we saw in our Dell EMC PowerEdge R7525 review, just with monitoring for the GPUs and the different fan and drive configurations. One notable difference between the XE8545 and competitive systems is that many GPU systems are designed to maximize GPU/$ and performance/$. As such, outside of HPE and Lenovo, GPU systems tend to include features such as iKVM support by default. With Dell, one needs to upgrade the iDRAC license to get that.

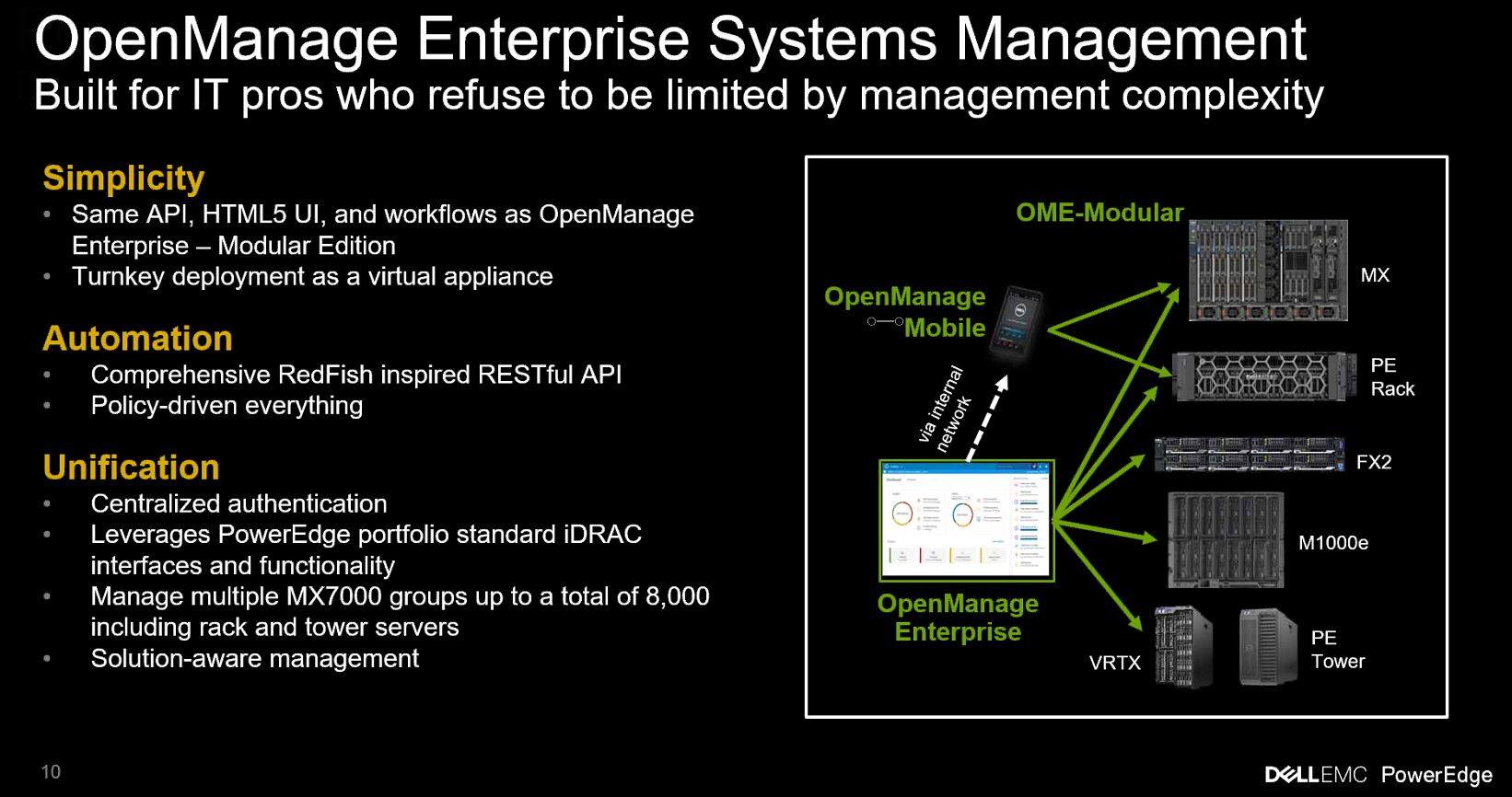

For most Dell shops, the big benefit is being able to manage the XE8545 with OpenManage, the same management platform that can manage everything from the Dell EMC PowerEdge XE7100 storage platform to the Dell EMC PowerEdge MX and the Dell EMC PowerEdge C6525.

For organizations deploying the XE8545, a major benefit is the ability to manage this GPU-based system alongside their other servers.

Next, let us look at performance and features.

Dell EMC PowerEdge XE8545 Performance

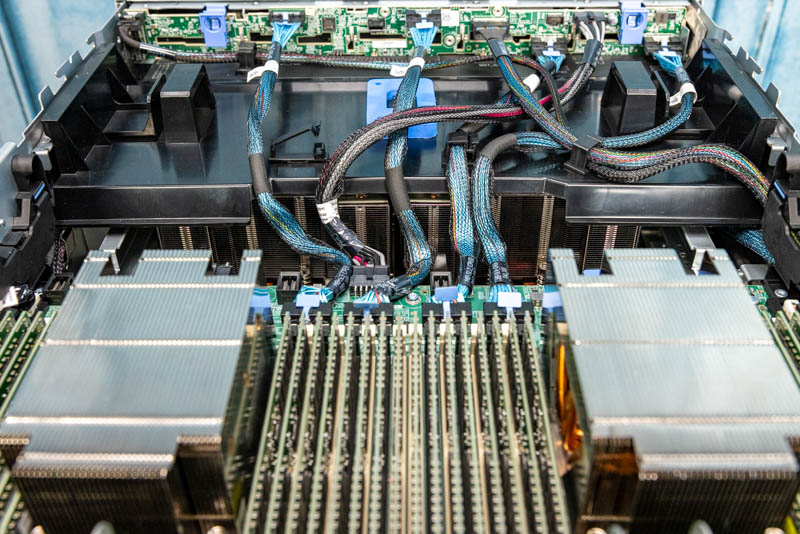

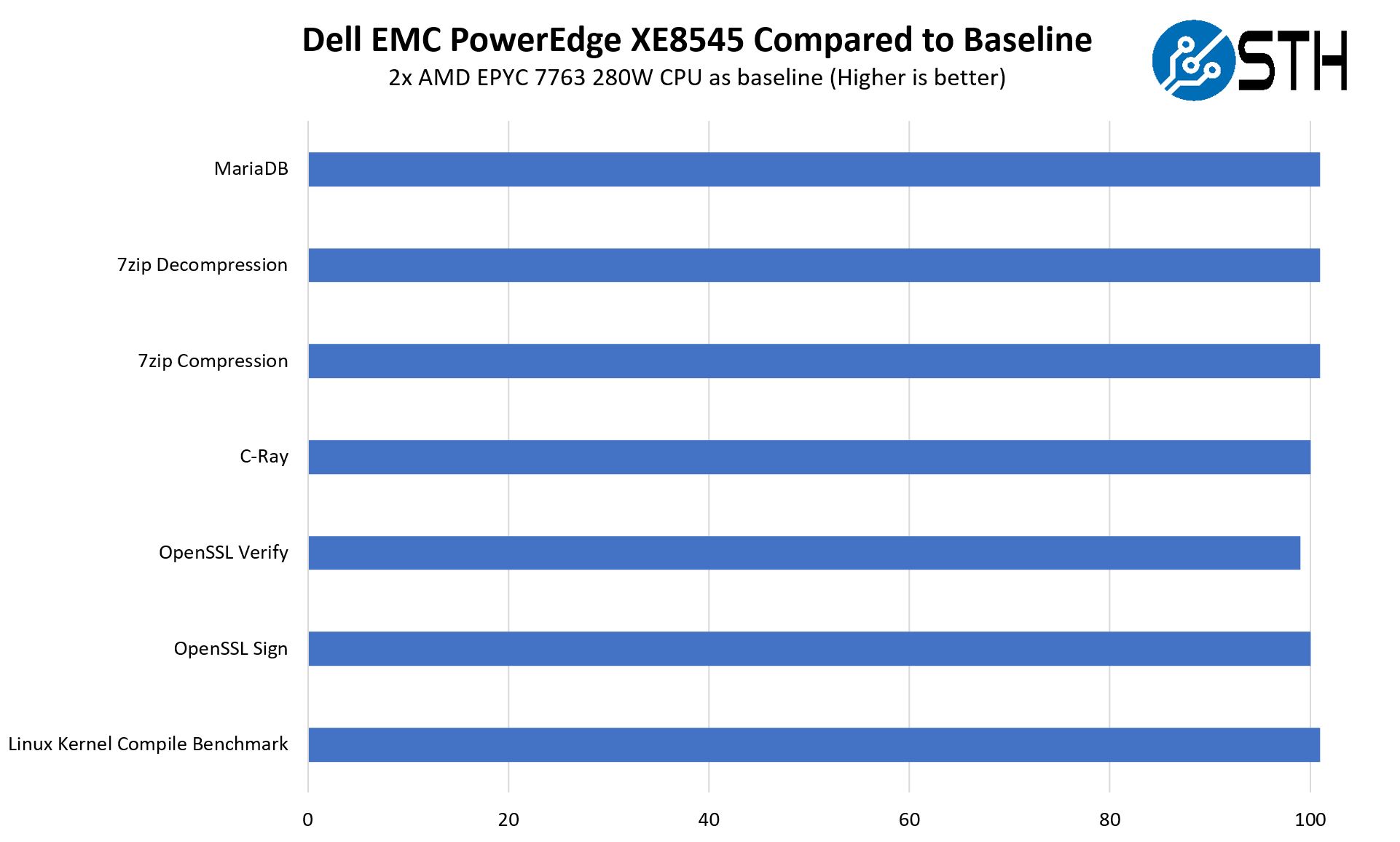

In terms of performance, we have reviewed many systems with the AMD EPYC 7763 at this point. As a result, we used the existing data from our formal EPYC 7763 review as a baseline to validate CPU performance with these top-end 280W CPUs and to see whether the system could keep them as cool as a standard server does.

The net result is that performance was close enough to our baseline that we would not call this system either faster or slower than a standard server. The system appears to have kept the CPUs cool even with a 27.1°C ambient temperature in the data center’s cold aisle.

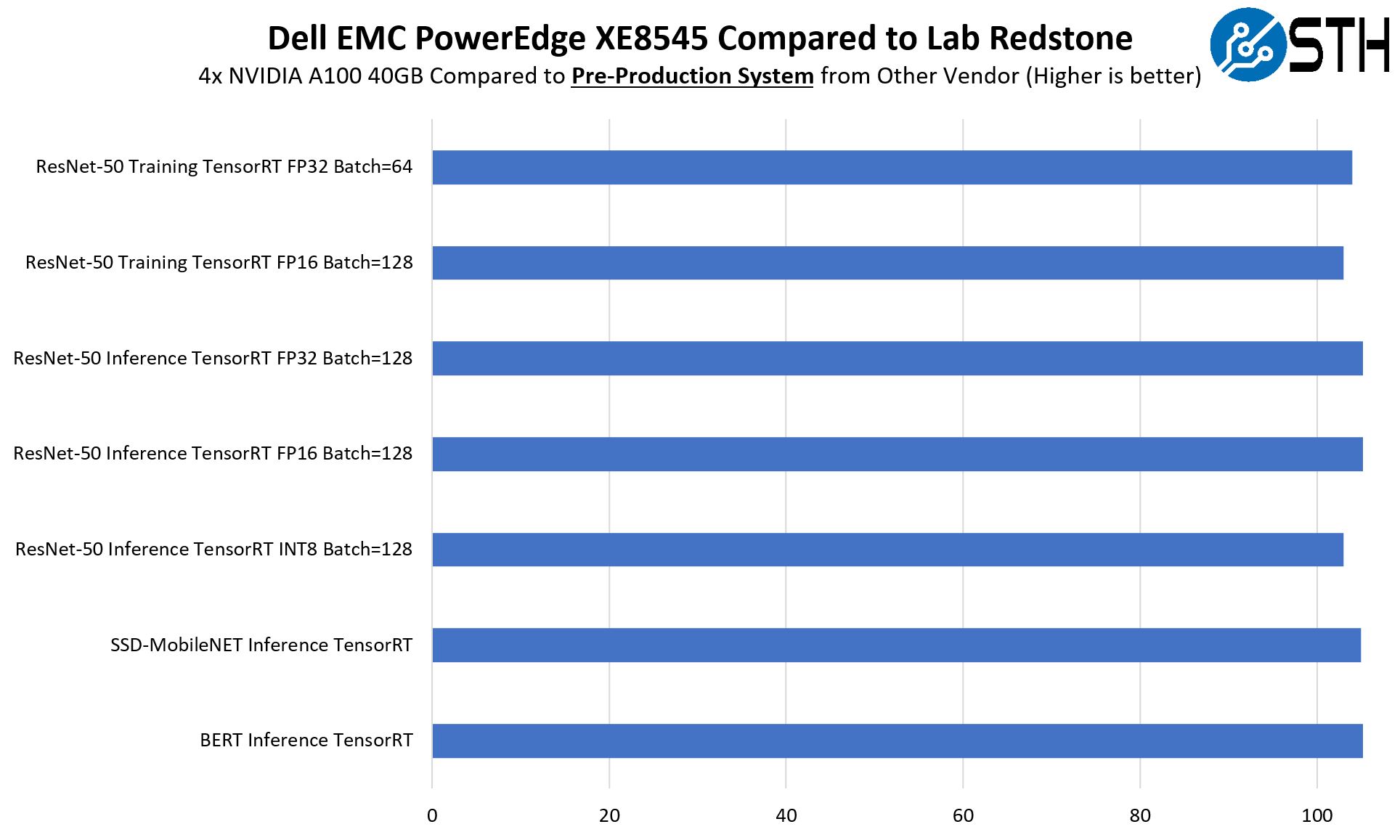

On the GPU performance side, we had more of a struggle. We had another prototype NVIDIA Redstone system, but we cannot share details of it since it is going back for a round of revisions. Hopefully, we can review the final system soon. The other challenge is that most other vendors want us to review either the lower-end 4x A100 PCIe configurations or the higher-end 8x A100 Delta platforms, so our comparison set here was very limited.

The Dell EMC machine ended up faster than the prototype, but frankly, this is a meaningless chart. We would expect a Dell system to perform better than a prototype, and without a meaningful comparison point, we are just showing numbers without context. What it does show is that Dell is doing a great job on the cooling side. If you want a bigger comparison set, we suggest looking at the MLPerf Training v1.0 results.

Overall, Dell EMC’s cooling solution may look a little funny, but it appears to be working well.

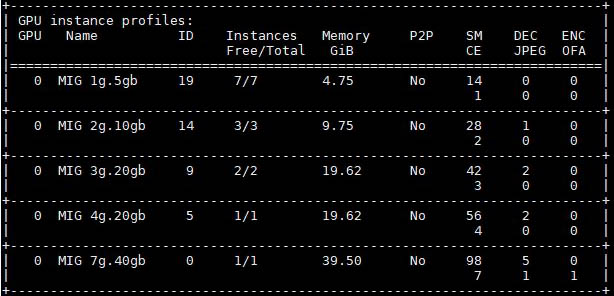

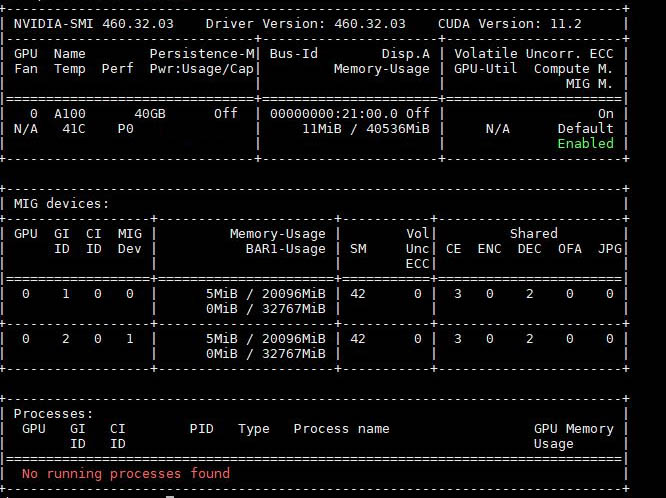

It is also worth pointing out that the solution supports NVIDIA MIG, or Multi-Instance GPU. With the NVIDIA A100, including the SXM4 modules, one can “split” the large GPU into multiple instance sizes, with up to seven instances in total.

Here is an example of splitting a 40GB A100 into two 20GB MIG instances.
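As a rough way to reason about which splits are possible, here is a simplified Python capacity check for a 40GB A100. It assumes NVIDIA’s published A100 40GB profile sizes (seven compute slices and eight 5GB memory slices per GPU) and shows two 3g.20gb instances as one combination that yields two 20GB instances. It is only a sketch: the real driver also enforces placement rules, so a `True` here is necessary but not sufficient. The `fits` helper and the dictionary are hypothetical names.

```python
# Simplified MIG capacity check for a 40GB A100.
# ASSUMPTION: only total compute slices (7) and memory slices (8 x 5GB) are
# checked; real MIG placement rules are stricter than this.
A100_40GB_PROFILES = {
    # profile name: (compute slices, memory slices)
    "1g.5gb": (1, 1),
    "2g.10gb": (2, 2),
    "3g.20gb": (3, 4),
    "4g.20gb": (4, 4),
    "7g.40gb": (7, 8),
}
COMPUTE_SLICES = 7
MEMORY_SLICES = 8


def fits(requested: list[str]) -> bool:
    """Return True if the requested profiles fit within one GPU's slices."""
    compute = sum(A100_40GB_PROFILES[p][0] for p in requested)
    memory = sum(A100_40GB_PROFILES[p][1] for p in requested)
    return compute <= COMPUTE_SLICES and memory <= MEMORY_SLICES


print(fits(["3g.20gb", "3g.20gb"]))  # True: 6/7 compute, 8/8 memory
print(fits(["1g.5gb"] * 7))          # True: 7/7 compute, 7/8 memory
print(fits(["4g.20gb", "4g.20gb"]))  # False: needs 8 compute slices
```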

The 80GB module opens up an especially interesting use case. Some inference workloads do not scale well on a single large GPU. Instead, one uses multiple lower-power GPUs, such as the venerable NVIDIA Tesla T4, for inference. With MIG, one can split an 80GB A100 GPU into seven instances that can outperform a T4. Across the four GPUs in this system, that yields a total of 28 GPU instances with ~10-11GB of memory each. For some PowerEdge XE8545 buyers, the ability to create 28 instances, each with 4 cores/8 threads and its own inferencing GPU instance, while still leaving 16 cores free, will be an intriguing use case enabled by the NVIDIA A100 and 64-core AMD EPYC 7003 Milan CPUs.
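The arithmetic behind that 28-instance configuration can be checked with a few lines of Python. This is a back-of-the-envelope sketch assuming two 64-core EPYC 7003 CPUs, four A100 80GB GPUs each split into seven MIG instances, and 4 CPU cores paired with every instance; the memory figure is an upper bound, since NVIDIA’s smallest A100 80GB profile (1g.10gb) exposes 10GB.

```python
# Back-of-the-envelope check of the MIG inference partitioning described above.
# ASSUMPTIONS: 2x 64-core EPYC 7003, 4x A100 80GB, 7 MIG instances per GPU,
# and 4 CPU cores (8 threads) assigned to each MIG instance.
GPUS = 4
MIG_INSTANCES_PER_GPU = 7
GPU_MEMORY_GB = 80
SOCKETS = 2
CORES_PER_CPU = 64
CORES_PER_INSTANCE = 4

instances = GPUS * MIG_INSTANCES_PER_GPU
# Even share of GPU memory; the actual 1g.10gb profile exposes 10GB.
mem_per_instance_gb = GPU_MEMORY_GB / MIG_INSTANCES_PER_GPU
cores_left = SOCKETS * CORES_PER_CPU - instances * CORES_PER_INSTANCE

print(instances, round(mem_per_instance_gb, 1), cores_left)  # 28 11.4 16
```

The gap between the even share (about 11.4GB) and the 10GB profile is why the per-instance memory is best quoted as a range like ~10-11GB.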

Next, let us wrap up with our power consumption, STH Server Spider, and final words.
