I bet you thought we forgot about Arc in our Intel Architecture Day coverage! While the Xe architecture will of course be present in HPC applications thanks to Ponte Vecchio, Intel is also releasing Arc, a gaming-focused GPU line built on Xe HPG, which shares the same fundamental architecture. While STH is not a gaming-focused site, we still want to cover this GPU because we suspect it will end up pulling double duty as a workstation-class card as well.

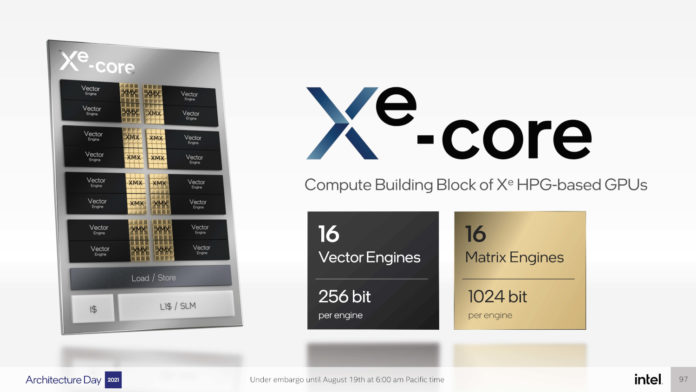

Intel’s first consumer Arc GPU will be based on Alchemist, Intel’s codename for the first generation of these new GPUs. Alchemist is built from the Xe-core, the fundamental building block of Intel’s Xe range of products.

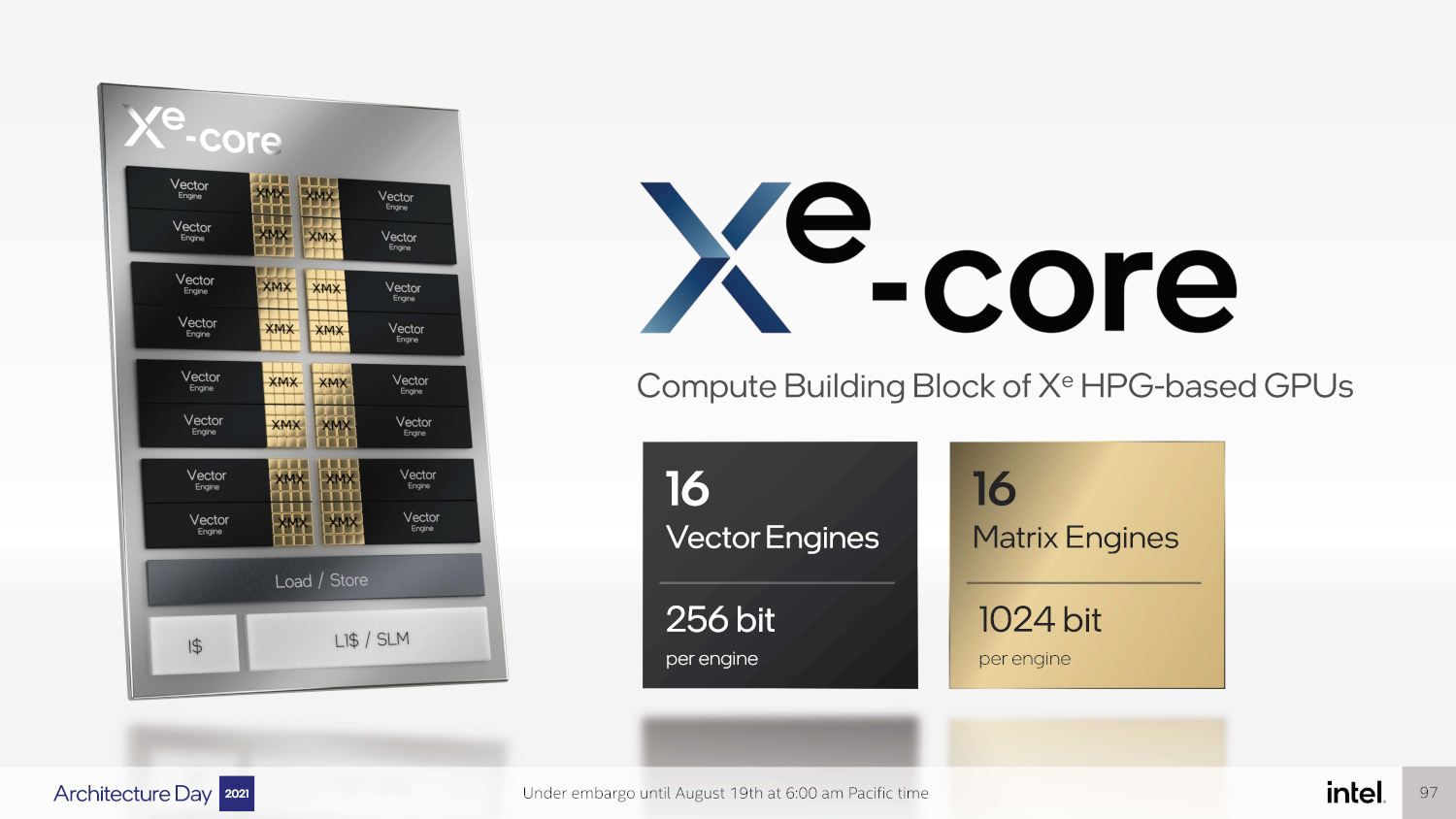

Each Xe-core comes with 16 vector engines and 16 matrix engines, plus some cache and other logic. Intel previously described its graphics solutions in terms of EUs, or Execution Units, but the company said the execution unit name was too constraining for its next-generation cores. New to the architecture are the matrix engines, which Intel calls Xe Matrix eXtensions, or XMX. The XMX engines are designed for the matrix math common in AI and machine learning workloads.
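To make "matrix math" concrete, the basic operation that matrix engines such as XMX accelerate is a multiply-accumulate over small tiles, D = A × B + C. The plain-Python sketch below computes that on toy 2×2 integer tiles. The function name and tile sizes are illustrative assumptions, and this is not Intel's XMX programming interface; the point of dedicated matrix hardware is to perform many of these multiply-adds in parallel rather than one at a time in a loop.

```python
# Generic matrix multiply-accumulate, D = A x B + C: the kind of operation
# dedicated matrix engines accelerate. Plain Python for illustration only;
# this is NOT Intel's XMX API (function name is hypothetical).

def matmul_accumulate(a, b, c):
    """Return D = A x B + C for list-of-lists matrices."""
    rows, inner, cols = len(a), len(b), len(b[0])
    if any(len(row) != inner for row in a):
        raise ValueError("columns of A must equal rows of B")
    d = [[0] * cols for _ in range(rows)]
    for i in range(rows):
        for j in range(cols):
            acc = c[i][j]  # start from the accumulator input C
            for k in range(inner):
                acc += a[i][k] * b[k][j]  # one multiply-add per step
            d[i][j] = acc
    return d

# Small integer tiles, as used in low-precision AI inference (sizes arbitrary).
A = [[1, 2], [3, 4]]
B = [[5, 6], [7, 8]]
C = [[1, 1], [1, 1]]
print(matmul_accumulate(A, B, C))  # [[20, 23], [44, 51]]
```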

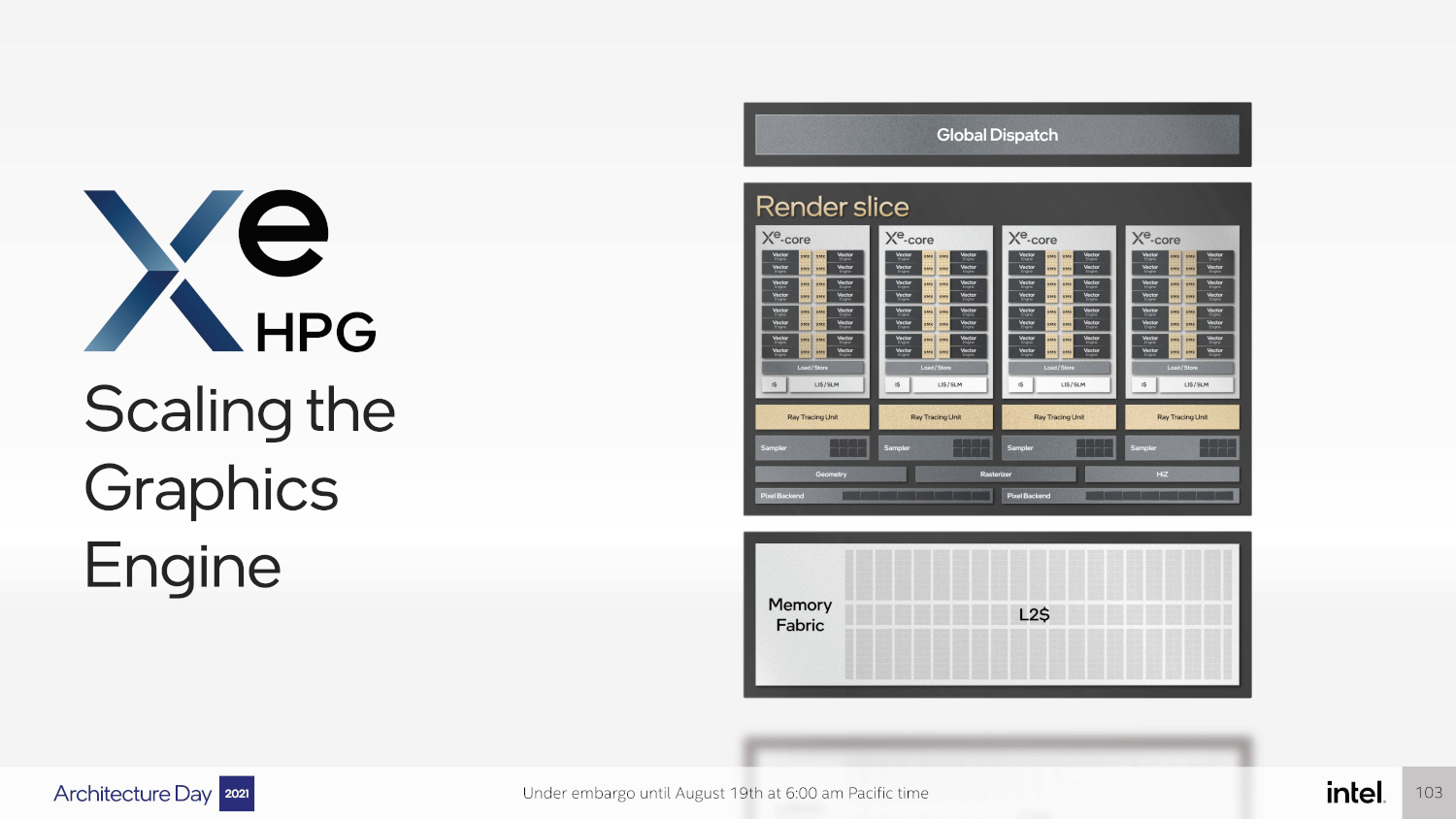

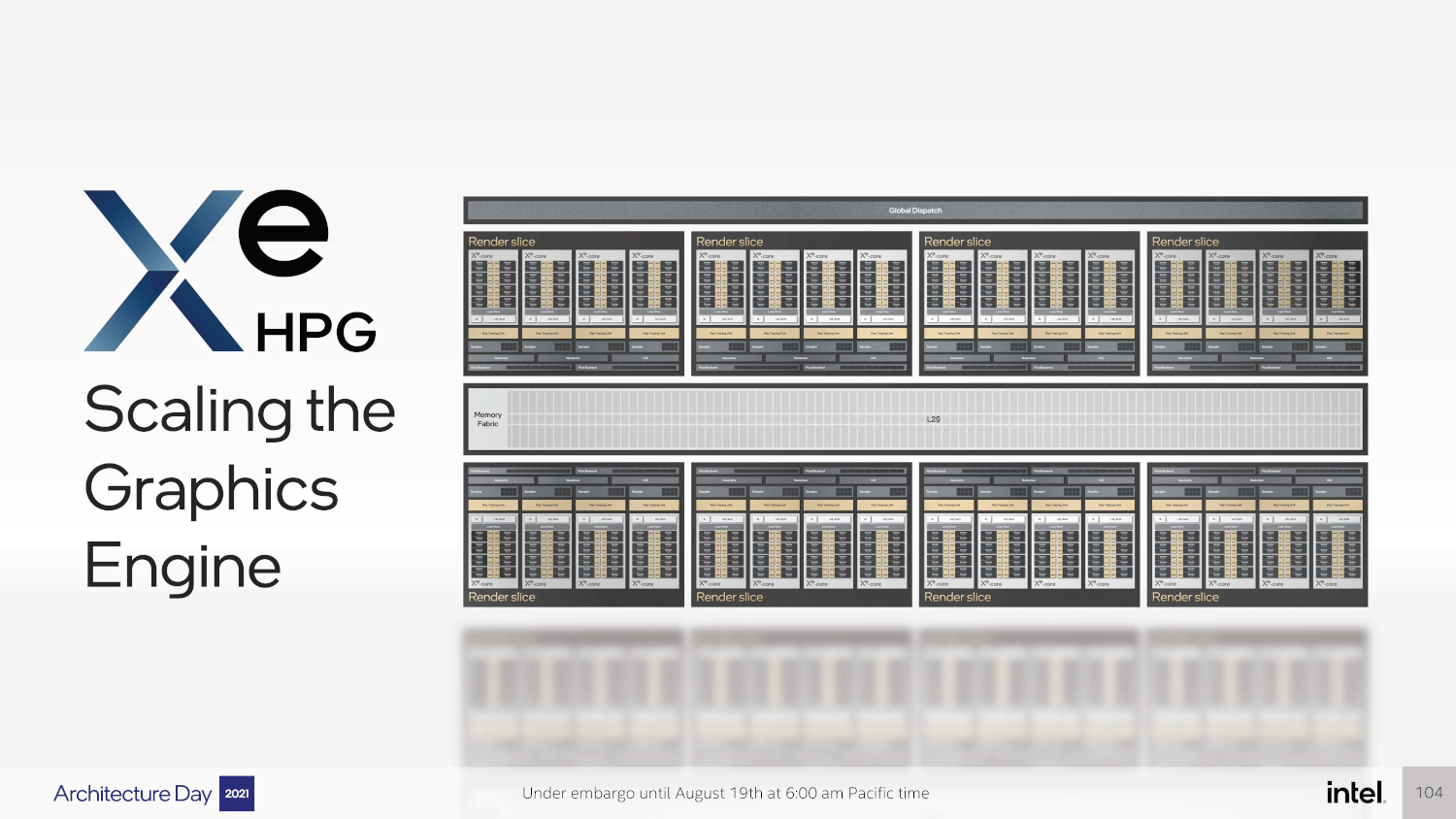

Intel can combine varying numbers of Xe-cores, along with some additional logic and cache, to create a complete GPU. Intel provided an example here featuring four Xe-cores, though it has not yet said what exact configurations the Xe HPG line will use.

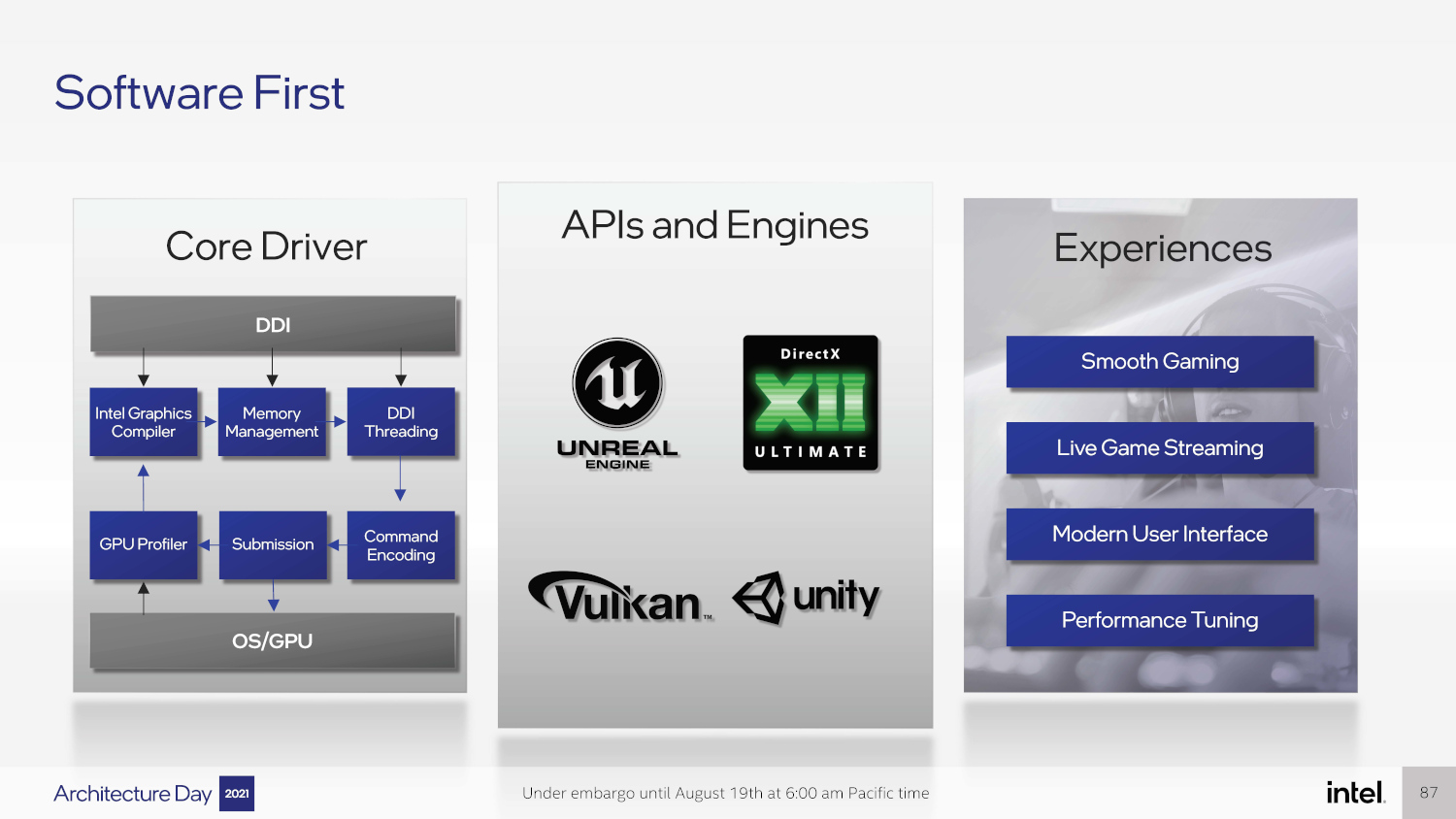

Of course, all the compute grunt in the world is for naught if you do not have software support to back it up. The vector engines on the Xe-core are fully DX12 compatible, and Intel is also pairing a hardware-accelerated Ray Tracing Unit with each Xe-core.

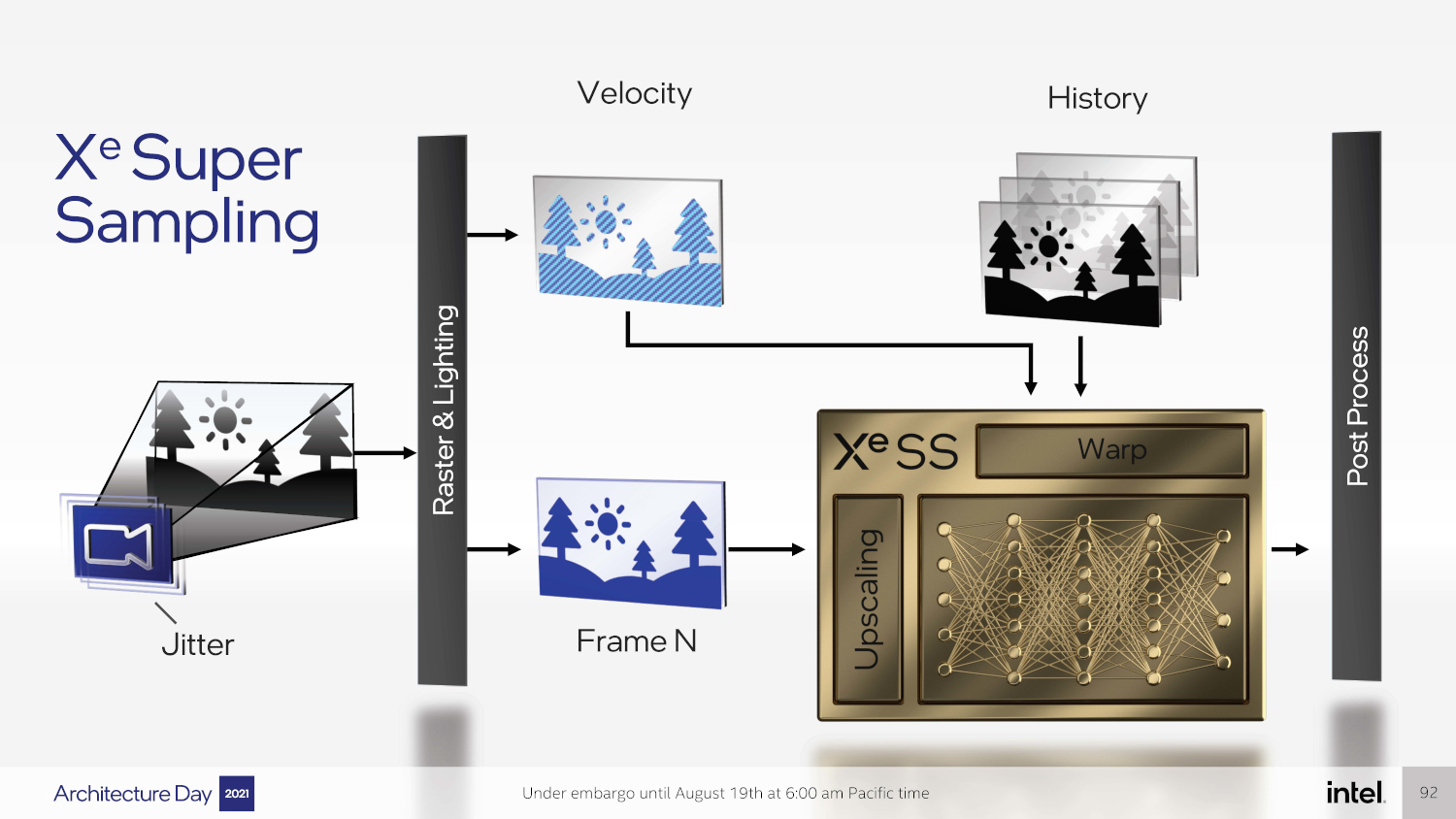

One area where Intel is making an interesting push is its Xe Super Sampling, or XeSS, technology. This is Intel’s upscaling technology, designed to compete with NVIDIA’s Deep Learning Super Sampling (DLSS) and AMD’s FidelityFX Super Resolution (FSR). The basic concept is that the game renders at a lower resolution, e.g. 1080p, and the frame is then upscaled to 4K; in the case of DLSS and XeSS, that upscale is performed by a trained inference model. Ideally, one gets the fast render times of the lower resolution with output quality close to that of a slower, native higher-resolution render.
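As a rough illustration of the arithmetic, a 4K (3840×2160) frame has exactly four times as many pixels as a 1080p (1920×1080) frame, so in this example the upscaler outputs four pixels for every one actually rendered. A small Python check, with hypothetical helper names:

```python
# Pixel-count arithmetic behind "render at 1080p, upscale to 4K".
# Helper names are hypothetical, for illustration only.
RESOLUTIONS = {
    "1080p": (1920, 1080),
    "4K": (3840, 2160),  # consumer 4K UHD
}

def pixels(name):
    width, height = RESOLUTIONS[name]
    return width * height

def upscale_factor(render, output):
    """Number of output pixels per rendered pixel."""
    return pixels(output) / pixels(render)

print(pixels("1080p"), pixels("4K"), upscale_factor("1080p", "4K"))
# 2073600 8294400 4.0
```

Rendering cost does not scale perfectly linearly with pixel count, so treat the factor of four as a rough guide to the work saved rather than a performance prediction.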

While Intel has been light on details so far, all appearances are that the technology is more similar to DLSS than to FSR in that it incorporates both machine learning and a temporal component in the upscale. Additionally, Intel is using the XMX engines on the Xe-core to accelerate this process, similar to how NVIDIA uses tensor cores for DLSS. One important similarity to AMD’s FSR is that XeSS will supposedly also be compatible with AMD and NVIDIA GPUs, though without XMX engines in those GPUs, cross-vendor performance is an even bigger question mark.

Intel is going to produce Alchemist at TSMC on N6 rather than try to produce it in-house on their 10nm (or Intel 7) process. TSMC’s N6 process is a more refined version of the 7nm process-node technology currently used by AMD’s RDNA2 GPUs.

Intel has also made a point of showing that Xe-core based GPUs will be scalable, with an example of a 32 Xe-core design. Broad scalability of Xe-core GPUs could let Intel cover multiple market segments relatively simply, but so far all we have to go on is Intel’s word and this picture in the slide deck.
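Reading the Xe-core as 16 vector engines plus 16 XMX matrix engines, the engine totals for the four-core example and the 32-core design on Intel's slides follow from simple multiplication. The sketch below, with hypothetical constant and function names, makes that explicit; it says nothing about clocks, cache sizes, or real-world performance.

```python
# Per-Xe-core engine counts for Xe HPG: 16 vector engines and 16 XMX
# matrix engines per core. Names are hypothetical; totals are plain
# multiplication and ignore clocks, cache, and actual performance.
VECTOR_ENGINES_PER_XE_CORE = 16
XMX_ENGINES_PER_XE_CORE = 16

def engine_totals(xe_cores):
    return {
        "xe_cores": xe_cores,
        "vector_engines": xe_cores * VECTOR_ENGINES_PER_XE_CORE,
        "xmx_engines": xe_cores * XMX_ENGINES_PER_XE_CORE,
    }

for cores in (4, 32):  # the two configurations shown on the slides
    print(engine_totals(cores))
```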

Lastly, Intel has chosen some very gamer-friendly names for its forthcoming GPU families. This first-generation product will be called Alchemist, with future generations named Battlemage, Celestial, and Druid. I almost feel like this slide should have come with its own RGB lighting, but perhaps that is just me.

And with all of that said, everything in this article is still a big question mark. Intel is still a ways away from the actual release of Alchemist, and while rumors suggest Intel is roughly targeting an NVIDIA RTX 3070 in terms of performance, we will not know for certain until someone actually gets their hands on one of these cards and puts it through its paces. Speaking personally, I truly hope Intel knocks this one out of the park, as additional competitive options in the GPU space will help the overall health of the GPU market.

Given that they are using the same supplier, TSMC, as AMD, I don’t foresee plentiful supply whenever it launches. AMD and NVIDIA can’t get as much supply as they can sell, and it is simply impossible for gamers to get a card. I had hoped that if Intel used its own fab for its GPU and flooded the market, it could capture a substantial share of the gaming market. Alas… no in-house GPU for now, it seems.

Ram,

Every foundry has fixed capacity, of course. My understanding (possibly incorrect) is that the different lines at TSMC operate mostly independently; in other words, N6 line capacity should not affect AMD’s continued use of their particular N7 node. More importantly, a huge chunk of the 7nm capacity is being eaten up by the PS5 and Xbox chips, and those chips are unlikely to jump to a newer process node without an actual revision of the consoles. Historically, that would be at least a couple of years away. As a result, there may be more ‘available’ space for new orders on a newer process node than on a popular, more established node. And of course, all these orders are placed a good deal in advance, so it really depends on what Intel or AMD managed to negotiate with TSMC. Nvidia will be a wildcard in the future too; if they stick with Samsung for their primary fab, it will spread the load a little bit.

Let’s hope this move doesn’t eat up AMD’s capacity at TSMC. I would even give Intel credit for entering the GPU market partly to limit AMD’s options and success with such a move.

I think I am unusual, but I can notice even a frame or two of display latency. I find it frustrating in single-player games (where they tell you not to put the TV in game mode), and I just can’t win at all in multiplayer games such as Titanfall and League of Legends.

In the case of LoL, I was just getting hit before I could do anything on my Alienware laptop (bought because I was training neural nets). I plugged in an external monitor, duplicated the display, ran a timer, and took photos showing that the time on the external monitor was about 30 ms ahead of the internal monitor. I switched to the external monitor and found I could make a real contribution to my team for the first time.
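The "frame or two" and the roughly 30 ms measured here line up once latency is converted into frames at the display's refresh rate. The laptop's refresh rate is not given, so the sketch below assumes 60 Hz and 144 Hz purely as examples: at 60 Hz a frame lasts about 16.7 ms, so 30 ms is about 1.8 frames. The function name is hypothetical.

```python
# Convert a measured display lag into frames at a given refresh rate.
# The refresh rates used below are assumptions; the 30 ms figure is the
# measurement from the photos of the timer.

def latency_in_frames(latency_ms, refresh_hz):
    frame_time_ms = 1000.0 / refresh_hz  # duration of one refresh
    return latency_ms / frame_time_ms

for hz in (60, 144):
    print(hz, round(latency_in_frames(30, hz), 2))
```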

So I think spatio-temporal upscaling will be great for movies but for games count me out.

@Paul Houle, TV lag in FPS games is a pain. What does it have to do with GPUs or AI upscaling? Sounds like your issue was TV lag.

Paul,

To my knowledge, which again could be incorrect, the temporal component of TAA as well as DLSS or XeSS type upscalers always uses *past* frame data to enhance the current frame. They do not delay the current frame. If you watch Digital Foundry videos, they demonstrate this somewhat often by showing that the very first frame after a camera cut often lacks the benefits of TAA, checkerboard rendering, or other upscaling tech, because there is no previous frame from which to infer data.
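The point about past frames can be illustrated with a toy temporal accumulator: each output blends the current frame with history built only from earlier outputs, so nothing waits on future frames, and a camera cut throws the history away. This is a minimal sketch under stated assumptions (a fixed blend weight, a static scene, and none of the motion-vector reprojection or history rejection that real TAA, DLSS, and XeSS implementations rely on); the function and parameter names are hypothetical.

```python
# Toy temporal accumulation: output = blend(current frame, history), where
# history comes only from PAST outputs, so the current frame is never delayed.
# A camera cut discards history, so the first frame after it gets no benefit.
# No reprojection or sample rejection; names and alpha are hypothetical.

def temporal_accumulate(frames, cuts=(), alpha=0.1):
    """frames: list of per-pixel value lists; cuts: indices starting a new shot;
    alpha: weight of the current frame. Returns (outputs, had_history)."""
    history = None
    outputs, had_history = [], []
    for index, frame in enumerate(frames):
        if index in cuts:
            history = None  # camera cut: past data no longer matches the scene
        if history is None:
            blended = list(frame)  # nothing to infer from; output the raw frame
            had_history.append(False)
        else:
            blended = [alpha * cur + (1 - alpha) * old
                       for cur, old in zip(frame, history)]
            had_history.append(True)
        outputs.append(blended)
        history = blended  # becomes the history for the next frame
    return outputs, had_history

# Three noisy frames of a static two-pixel scene, with a camera cut at frame 2.
frames = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, had_history = temporal_accumulate(frames, cuts={2}, alpha=0.5)
print(had_history)  # [False, True, False]
print(outputs)      # [[1.0, 0.0], [0.5, 0.5], [1.0, 1.0]]
```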