The Dell EMC PowerEdge XE8545 is the company’s big dual-socket node. Specifically, it is a 4U system that incorporates two AMD EPYC 7003 processors along with four SXM4 NVIDIA A100 GPUs, a combination also known as NVIDIA’s Redstone platform. Positioned above the Dell EMC PowerEdge R750xa in its stack, the XE8545 is the company’s high-end server.

Dell EMC PowerEdge XE8545 Hardware Overview

We are going to split our review into the external overview followed by the internal overview section to aid in navigation around the platform. We also have a video of the platform here:

As always, we suggest opening the video in a YouTube browser window or tab for the best viewing experience.

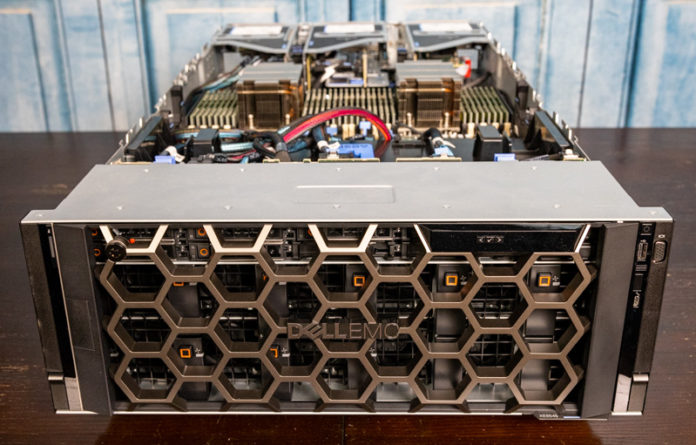

Dell EMC PowerEdge XE8545 External Overview

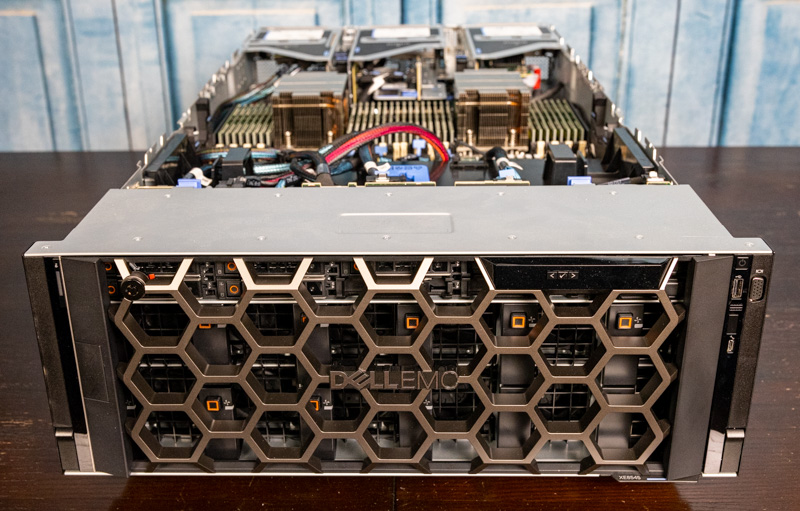

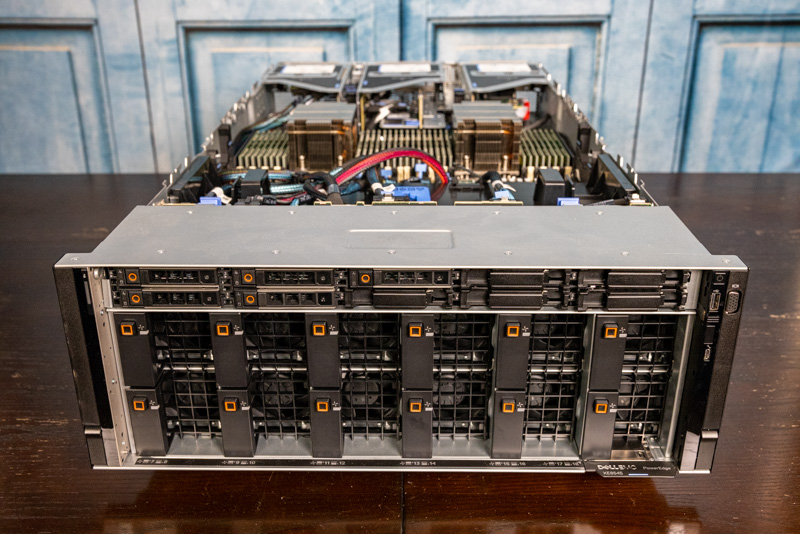

The Dell EMC PowerEdge XE8545 is a 4U server. One of the key innovations for Dell with this platform is that it managed to keep the entire platform in an 810mm chassis depth, allowing for deployment in most standard server racks. This is a successor to the PowerEdge C4140, which was well over 100mm longer and often did not fit in standard racks, while also giving up features such as hot-swap front storage. Dell sought to create not just a vastly more powerful platform, but also one that was more deployable.

Behind the fancy PowerEdge bezel, we have ten hot-swap 2.5″ bays in a 1U section at the top of the chassis and a 3U fan array below. The important aspect here is that the top 1U of drive bays serves as the inlet for the main airflow directed to not just the storage but also the AMD EPYC CPUs, memory, motherboard, and PCIe expansion. The bottom 3U fan wall is designed to cool the NVIDIA Redstone platform with 4x SXM4 NVIDIA A100 GPUs.

The hot-swap bays are designed for SSDs, with eight being PCIe Gen4 x4 bays for NVMe SSDs. The other two are legacy SATA/SAS drive bays. Still, in comparison with the C4140, this is a massive upgrade.

The entire bottom 3U of the front of the system houses twelve fan modules. As a result, all of the front I/O and provisioning/management functionality is found on the rack ears. These fan modules are hot-swappable for easy servicing without having to open the chassis.

Just to be clear, these twelve fan modules are pointed directly at the NVIDIA Redstone platform and the NVIDIA A100 GPUs.

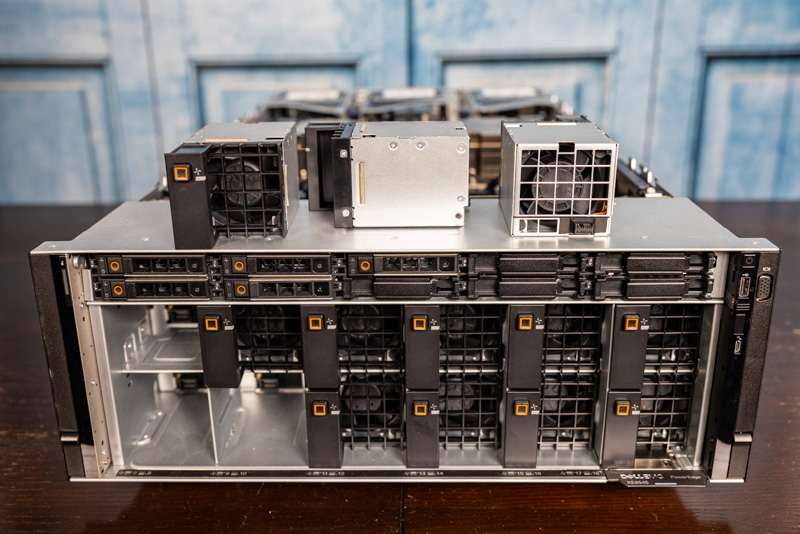

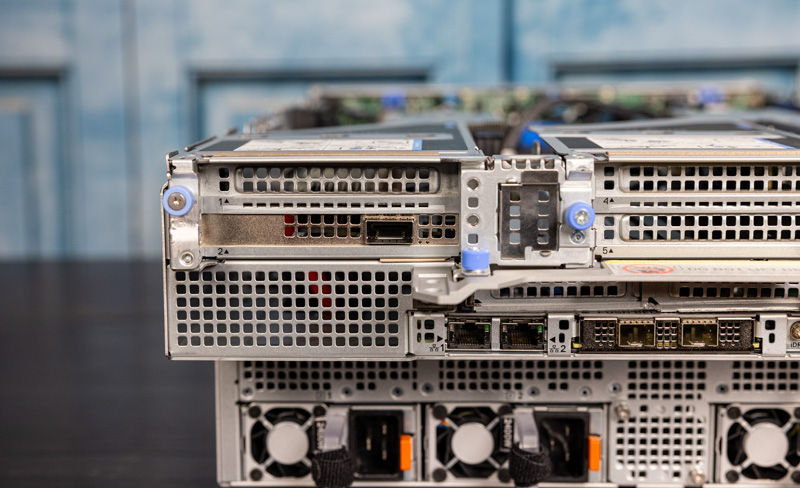

The rear of the unit has a different split. Here, there are two 2U sections. The top-front 1U section expands inside the chassis to exhaust in 2U. On the bottom, the front 3U section exhausts at the rear in a 2U section. We will see more on that as we get to our internal overview.

The top section houses PCIe risers. Not all of these risers are active, but one can get at least three PCIe slots for high-speed NICs, DPUs, or external storage controllers in the system.

The main motherboard I/O is similar to other Dell servers of this generation. All of the I/O is on separate swappable PCBs, making this area highly configurable. We have a NIC LOM on the left with an OCP NIC 3.0 in the middle. The right side has the iDRAC NIC, USB, and VGA. Since this is all very configurable, we will say this is our configuration, but you may have a different one.

Something else we wanted to point out is that the system has a total of 48x PCIe Gen4 lanes available for rear PCIe expansion. That can be used as three x16 slots or as two x16 and two x8 slots. This is in addition to the x16 link for the OCP NIC 3.0 slot. In total, Dell uses 64 PCIe Gen4 lanes for this rear I/O area.
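To make the lane arithmetic easy to check, here is a minimal sketch in Python. It uses only the figures quoted above (48 expansion lanes, 64 total rear I/O lanes, and the two slot layouts). The names `LAYOUTS`, `lanes_used`, and `lanes_left_for_ocp` are our own illustrative labels, not anything from Dell tooling.

```python
# Rear PCIe Gen4 lane budget, using only figures quoted in the review.
REAR_EXPANSION_LANES = 48  # lanes available to the rear riser slots
TOTAL_REAR_IO_LANES = 64   # total lanes Dell uses for the rear I/O area

# The two slot layouts described in the text, as lists of link widths.
# (The dictionary keys are illustrative labels only.)
LAYOUTS = {
    "three x16": [16, 16, 16],
    "two x16 + two x8": [16, 16, 8, 8],
}


def lanes_used(widths):
    """Sum the link widths of a slot layout."""
    return sum(widths)


def lanes_left_for_ocp(total=TOTAL_REAR_IO_LANES, expansion=REAR_EXPANSION_LANES):
    """Lanes remaining for the OCP NIC 3.0 slot after the riser slots."""
    return total - expansion


if __name__ == "__main__":
    for name, widths in LAYOUTS.items():
        used = lanes_used(widths)
        fits = "fits" if used <= REAR_EXPANSION_LANES else "exceeds"
        print(f"{name}: {used} lanes ({fits} the {REAR_EXPANSION_LANES}-lane budget)")
    print(f"Left for OCP NIC 3.0: {lanes_left_for_ocp()} lanes")
```

Both layouts consume the full 48-lane expansion budget, and the remainder of the 64-lane total is what is left for the OCP NIC 3.0 slot.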

Power is provided by four 2.4kW PSUs. Even in the 400W GPU configuration, a single 2.4kW PSU may not be enough for the system. Dell has the capacity to use two power supplies on an A side and two on a B side to maintain redundancy. Using an N+1 three-PSU configuration would mean uneven loading in the event power delivery to the rack failed on a circuit. Dell designed this system for that extra bit of reliability by using a four PSU design.
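The A/B feed reasoning can be sketched in a few lines of Python. This is an illustration under stated assumptions, not a power budgeting tool: the only figure taken from the review is the 2.4kW PSU rating, and the way PSUs are split across feeds (2+2 for four PSUs, 2+1 for three) is our assumption about how such configurations would typically be cabled. The function names are hypothetical.

```python
PSU_WATTS = 2400  # 2.4kW per PSU, as quoted in the review


def surviving_capacity(psus_on_a, psus_on_b, psu_watts=PSU_WATTS):
    """Capacity (watts) still available after losing feed A or feed B."""
    return {
        "feed_a_fails": psus_on_b * psu_watts,
        "feed_b_fails": psus_on_a * psu_watts,
    }


def worst_case(psus_on_a, psus_on_b, psu_watts=PSU_WATTS):
    """Smallest surviving capacity across the two single-feed failures."""
    return min(surviving_capacity(psus_on_a, psus_on_b, psu_watts).values())


if __name__ == "__main__":
    # Assumed cabling: four PSUs split 2+2, three PSUs split 2+1.
    print("4 PSUs (2+2):", surviving_capacity(2, 2), "worst case", worst_case(2, 2))
    print("3 PSUs (2+1):", surviving_capacity(2, 1), "worst case", worst_case(2, 1))
```

With the 2+2 split, losing either feed still leaves two PSUs online. With a 2+1 split, losing the feed that carries two PSUs leaves a single 2.4kW supply, which is the case the review says may not be enough for the system.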

Let us get to what was the defining feature for me in this server, the rear overhang. As cool as all of the hardware is, this view dominates my thoughts about the XE8545. This server feels quite a bit like Dell took two servers and put them together, and this view shows that. The top is effectively a Dell EMC PowerEdge R7525 while the bottom is an NVIDIA Redstone-based GPU shelf. In our recent Planning for Servers in 2022 and Beyond series, many of the key concepts we have been discussing around next-generation servers center on disaggregation. This chassis feels quite a bit like Dell took a disaggregated server and welded two chassis together. To be fair, the integration is much deeper than that, but if one view makes me feel like this is happening, it is the rear section. Having lived in California for 25+ years, all I can think about is that this is like two continents starting to drift apart (towards disaggregation) as the top and bottom tectonic plates start to shift.

This is actually quite a unique platform insofar as the 1U/3U to 2U/2U compartmental splits occur. When we recently looked at the larger 8x GPU NVIDIA HGX A100-based “Delta” systems, such as those from Inspur and Supermicro, we saw that those systems usually keep 3U for their 8x GPUs plus PCIe switches and NVSwitches, while the top 1U is for the CPUs, storage, and memory. In contrast, even though the NVIDIA Redstone platform in the Dell is a lower-end solution, Dell has a much more intricate chassis design, which is cool to see.

Next, we are going to take a look inside the system to see why this chassis design works the way it does.

With 3 NVMe U.2 SSDs, how can I put 2 drives into a RAID 1 configuration for the OS? That way I can just use 1 of my drives for scratch space for my software processing.

Do I need to confirm whether the NVMe backplane on my XE8545 will allow me to run a cable to the H755 PERC card in the fast x16 slot of riser 7? Would this limit those RAIDed SSDs to the x16 lanes available to the riser where the PERC card would be? At least my OS would be protected if an SSD died. The 1 non-RAIDed drive would be able to use the x8 lane closer to the GPUs, right? I was originally hoping to just do RAID 5 with all 3 drives so that I could partition a smaller OS and leave more space dedicated to the scratch partition.