Dell EMC PowerEdge XE8545 Internal Overview

Inside the system, we see something that looks a lot like a 2U server if we crop the airflow picture off just behind the 10x 2.5″ drive backplane.

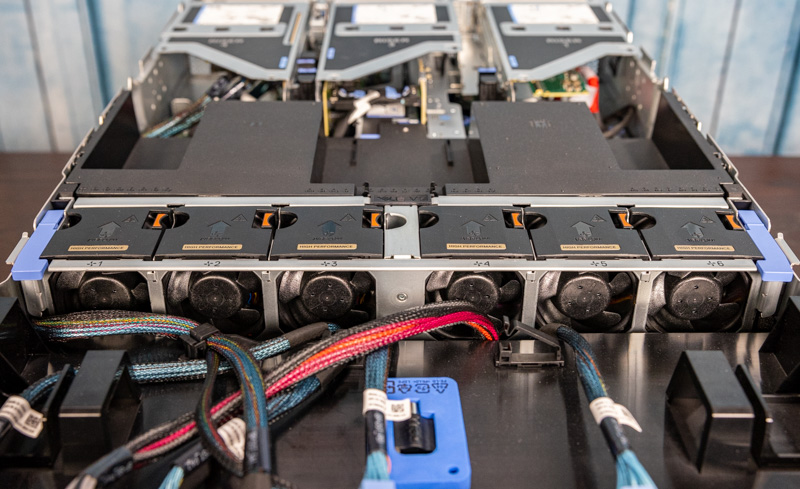

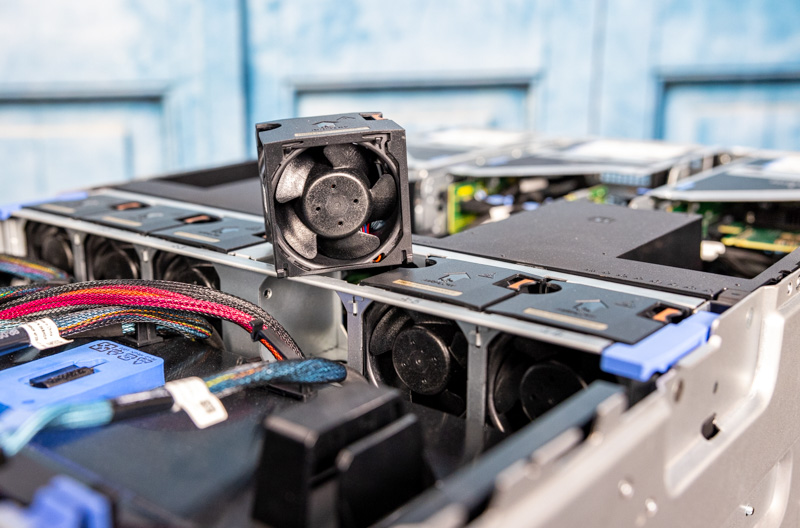

We covered the 3U fan wall for the GPUs in our external overview, but behind the 1U of 10x 2.5″ drive bays there is a 2U fan partition. This fan partition has six hot-swap fans, and the entire partition can be removed to allow access to the components underneath.

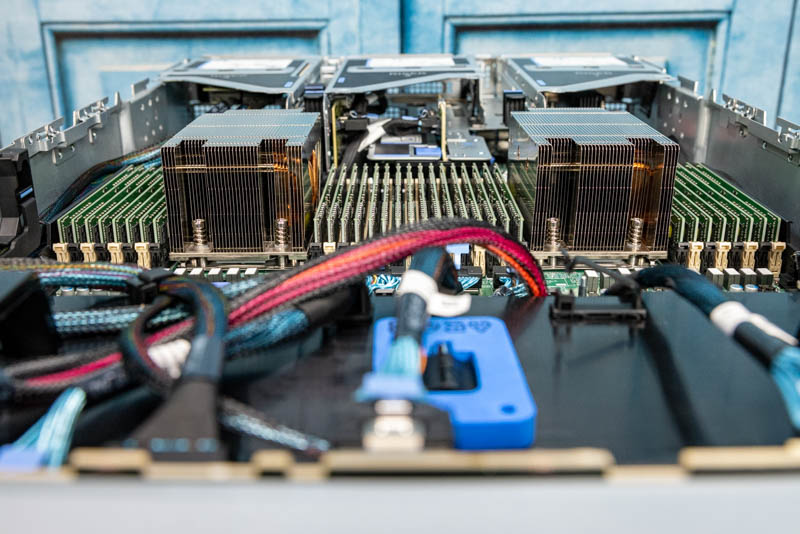

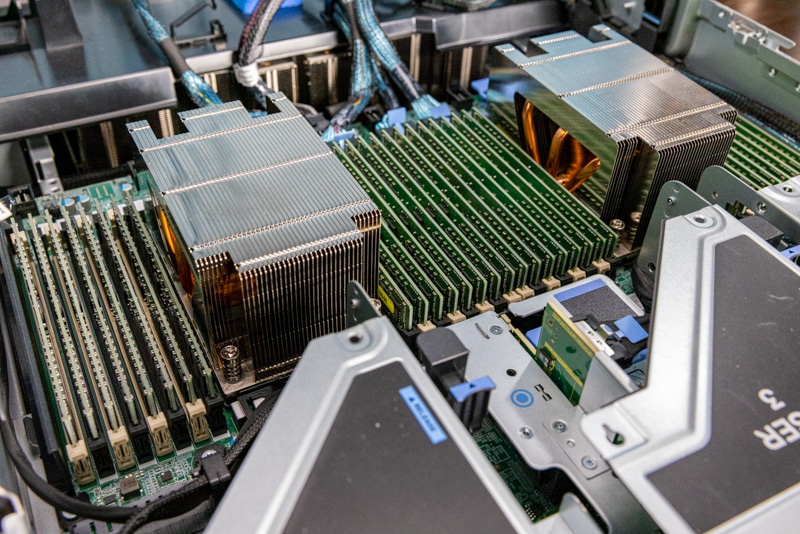

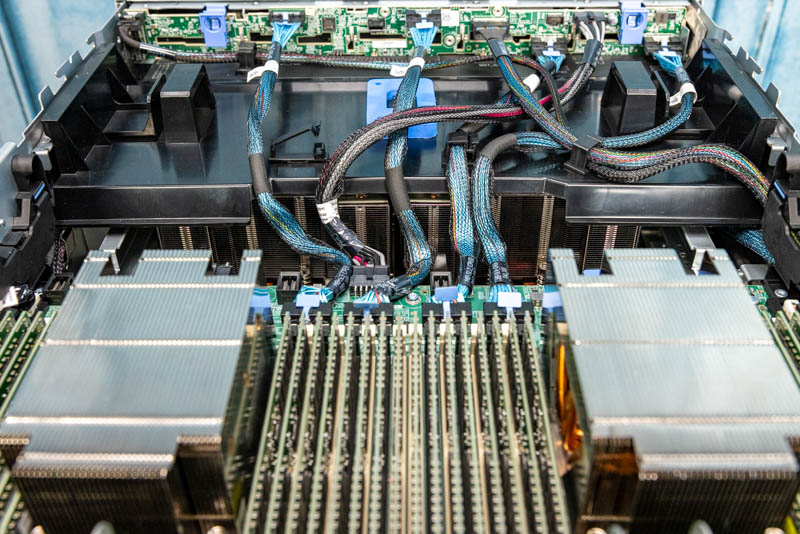

Removing the fan partition and the airflow guide, we can see the CPUs and memory and then the PCIe expansion risers that we covered in our external overview.

A key feature of this system is its ability to handle 280W AMD EPYC 7003 series CPUs such as the AMD EPYC 7763. Some GPU systems cannot handle higher-wattage CPUs. We will quickly note here that this is an area where there is a massive upgrade over the PowerEdge C4140 from the previous generation. Instead of being limited to 28 cores per socket or 56 total, this system has 64 cores per socket or 128 total. A combination of the TSMC 7nm manufacturing process, chiplet design, and the 280W power budget allows for a massive jump in CPU performance. Some tasks, such as those that require photo or video pre-processing, often use a lot of CPU cycles before passing work to the GPUs, so having more CPU performance helps.

Each CPU has 8-channel DDR4-3200 memory support (up from 6-channel DDR4-2666/DDR4-2933 support). With two DIMMs per channel, one can also fit 32 DIMMs in this system, up from 24 in the previous generation. The result is that we have more memory bandwidth and more capacity to go along with the additional CPU core count and performance.
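As a rough back-of-the-envelope check on those figures, here is a minimal sketch of the arithmetic. It assumes a 64-bit (8-byte) data path per DDR4 channel and computes theoretical peak numbers only, so real-world bandwidth will be lower. The function names are our own and purely illustrative.

```python
def peak_bandwidth_gbs(channels: int, mt_per_s: int, bytes_per_transfer: int = 8) -> float:
    """Theoretical peak memory bandwidth per socket in GB/s (decimal).

    Assumes each DDR4 channel moves 8 bytes per transfer (64-bit data path).
    """
    return channels * mt_per_s * bytes_per_transfer / 1000


def dimm_slots(sockets: int, channels: int, dimms_per_channel: int) -> int:
    """Total DIMM slots for a system with the given memory topology."""
    return sockets * channels * dimms_per_channel


# This generation: 8-channel DDR4-3200 per socket, two DIMMs per channel.
new_bw = peak_bandwidth_gbs(channels=8, mt_per_s=3200)
new_slots = dimm_slots(sockets=2, channels=8, dimms_per_channel=2)

# Previous generation at its best case: 6-channel DDR4-2933 per socket.
old_bw = peak_bandwidth_gbs(channels=6, mt_per_s=2933)
old_slots = dimm_slots(sockets=2, channels=6, dimms_per_channel=2)

print(f"Peak bandwidth per socket: {old_bw:.1f} GB/s -> {new_bw:.1f} GB/s")
print(f"DIMM slots: {old_slots} -> {new_slots}")
```

Under these assumptions, the per-socket theoretical peak rises from roughly 141 GB/s to about 205 GB/s, and the slot counts match the 24 and 32 DIMM figures above.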

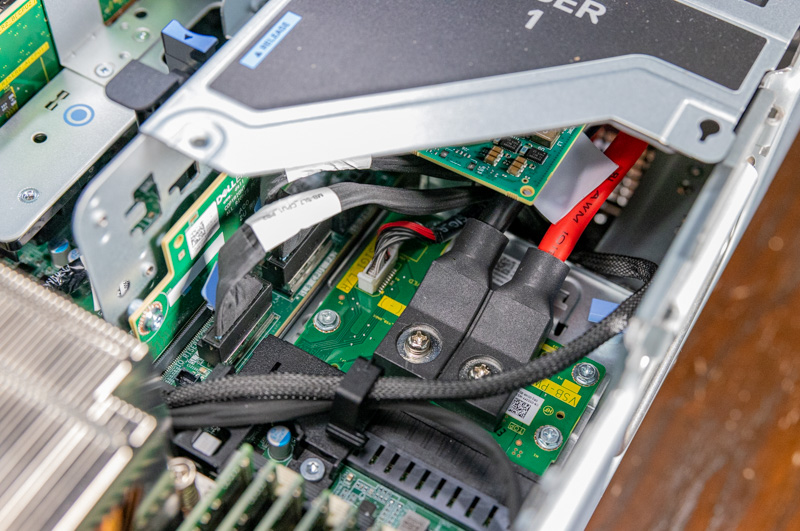

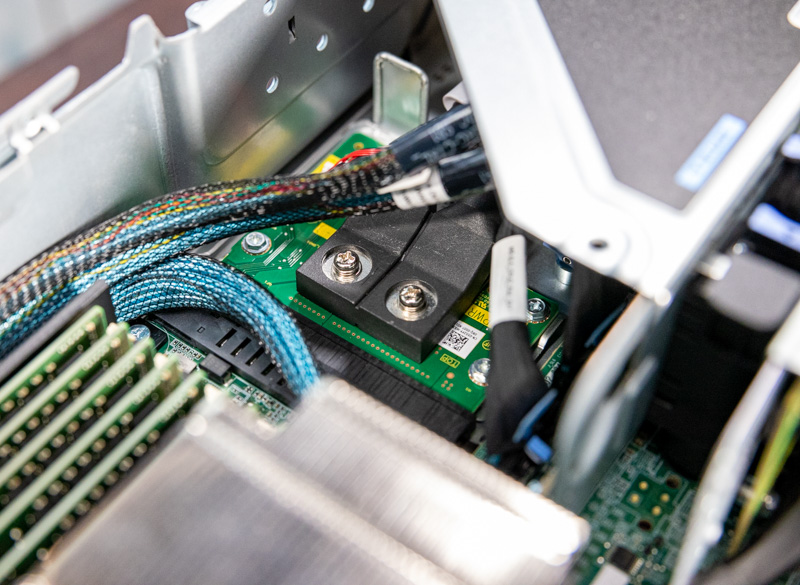

Although we discussed the PCIe risers previously, we wanted to quickly show a cool feature of this system. As many will have noticed, the motherboard Dell is using is basically the same as the one in the R7525. In the R7525, there are 1U power supplies that plug directly into the motherboard on either side of the chassis. With this system, we have the four 2.4kW power supplies, so Dell routes power up from the bottom section of the chassis and into power delivery PCBs designed to fit into the standard PSU slot.

What is more, even though the four PSUs are below, Dell has these power delivery PCBs on both sides of the XE8545 motherboard.

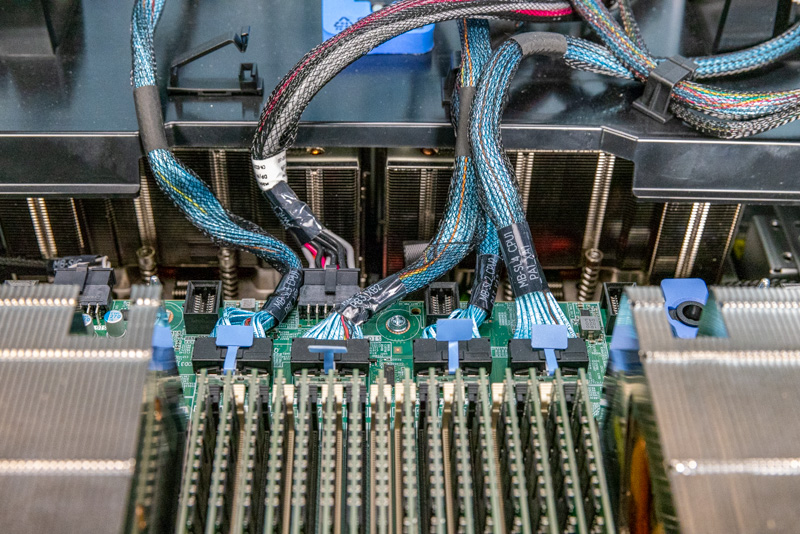

One will likely have seen many cables running from the front 10x hot-swap bays to the motherboard. Dell is doing something interesting here. PCIe Gen4 lanes are being supplied by the flexible motherboard links that can be configured for more socket-to-socket bandwidth or more PCIe lanes. In this configuration, Dell is using the AMD EPYC CPUs in a 160x PCIe Gen4 lane configuration.
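To make the trade-off concrete, here is a small sketch of the lane arithmetic. It assumes each EPYC socket exposes 128 high-speed lanes and that each socket-to-socket link consumes 16 lanes on each CPU, which matches AMD's public descriptions of the platform. The constant and function names are our own, not anything from Dell or AMD tooling.

```python
LANES_PER_SOCKET = 128  # assumed high-speed lanes per EPYC CPU
LANES_PER_LINK = 16     # assumed lanes each socket-to-socket link uses per CPU


def pcie_lanes_2p(inter_socket_links: int) -> int:
    """PCIe lanes left for devices in a dual-socket system.

    Every socket-to-socket link takes LANES_PER_LINK lanes from each CPU,
    so fewer links means more lanes available for PCIe devices.
    """
    used_per_socket = inter_socket_links * LANES_PER_LINK
    if inter_socket_links < 0 or used_per_socket > LANES_PER_SOCKET:
        raise ValueError("link count does not fit in the per-socket lane budget")
    return 2 * (LANES_PER_SOCKET - used_per_socket)


print(pcie_lanes_2p(4))  # four links between sockets: 128 lanes
print(pcie_lanes_2p(3))  # three links between sockets: 160 lanes
```

Dropping from four socket-to-socket links to three frees 16 lanes per CPU, which is how a dual-socket system reaches the 160-lane configuration described above at the cost of some socket-to-socket bandwidth.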

One of the major reasons Dell is using AMD in this higher-end platform, aside from core counts, is PCIe connectivity. Even the Intel Xeon Ice Lake generation processors are stuck at 128x PCIe Gen4 lanes in a dual-socket system, so Dell had to move to the AMD EPYC line to get more.

At STH, we did a deep dive into this configuration in Dell and AMD Showcase Future of Servers 160 PCIe Lane Design. Since that piece was done using the PowerEdge R7525, which has basically the same motherboard we have here, we are not going to do as much of a deep dive in this piece. We also did a video for that one.

Since our internal overview was getting very long, we are going to split off a more in-depth discussion of the 4x NVIDIA A100 “Redstone” GPU platform.

With 3 NVMe U.2 SSDs, how can I put 2 of the drives into a RAID 1 configuration for the OS? That way I can just use the remaining drive as scratch space for my software processing.

Do I need to confirm whether the NVMe backplane on my XE8545 will allow me to run a cable to the H755 PERC card in the fast x16 slot of riser 7? Would this limit those RAIDed SSDs to the x16 lanes available to the riser where the PERC card would be? At least my OS would be protected if an SSD died. The one non-RAIDed drive would be able to use the x8 lanes closer to the GPUs, right? I was originally hoping to just do RAID 5 with all 3 drives so that I could partition a smaller OS volume and leave more space dedicated to the scratch partition.