Dell EMC PowerEdge XE8545 Power Consumption

The PowerEdge XE8545 is not a low-power server. There are a total of four 2.4kW 80Plus Platinum power supplies. As a quick note, we wish that Dell had looked at Titanium power supplies in this class of system. The cost of higher-efficiency power supplies is often small in the context of a large system like this.

The four power supplies are designed so that two sit on each of the redundant power feeds into the rack. In the 4x A100 400W and dual AMD EPYC 7763 configuration, this setup can pull over 2.5kW under load, and there is room for higher power configurations than we have. In other words, this server needs more power than a single 2.4kW power supply can deliver. The 500W A100 version adds another 400W of power consumption from the GPUs, since NVIDIA GPUs use power according to their rating, as we have seen with other 400W and 500W A100 systems. That also requires higher fan speeds and thus more power for cooling. We have complete confidence that the truly high-end configurations will require >3kW per system.
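To make the arithmetic in this section easier to follow, here is a minimal sketch in Python. The wattages come from the review; the variable and function names are our own, and treating the "over 2.5kW" measurement as a flat 2,500W floor is a simplifying assumption. It does not model the extra fan power that the 500W GPUs require.

```python
# Figures taken from the review; names are illustrative only.
PSU_WATTS = 2400            # each supply is rated at 2.4kW
PSU_COUNT = 4
PSUS_PER_FEED = PSU_COUNT // 2   # two supplies on each redundant feed

MEASURED_LOAD_W = 2500      # assumption: "over 2.5kW" treated as a floor
GPU_COUNT = 4
GPU_TDP_400_W = 400
GPU_TDP_500_W = 500


def feed_capacity_w() -> int:
    """Power available if only one of the two feeds is live."""
    return PSU_WATTS * PSUS_PER_FEED


def gpu_uplift_w() -> int:
    """Extra GPU power when moving from 400W to 500W A100s."""
    return GPU_COUNT * (GPU_TDP_500_W - GPU_TDP_400_W)


def estimated_500w_load_w() -> int:
    """Lower bound for the 500W configuration, before added fan power."""
    return MEASURED_LOAD_W + gpu_uplift_w()


if __name__ == "__main__":
    print("Single PSU can carry measured load:", MEASURED_LOAD_W <= PSU_WATTS)
    print("One feed (two PSUs) capacity in W:", feed_capacity_w())
    print("GPU uplift for 500W A100s in W:", gpu_uplift_w())
    print("Estimated 500W-config floor in W:", estimated_500w_load_w())
```

The point of the sketch is simply that the measured load already exceeds one supply's rating, which is why two supplies per feed are needed for the system to ride through the loss of a feed.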

This is important. While the short depth of 810mm helps the system fit into many existing racks, power density becomes a challenge as well. The C4140 is a 1U server that can use 2kW/U, making it impractical for many data centers, which would need deep racks and would also need to power 80kW+ per rack. The XE8545 may be a larger 4U server, but even with ten in a rack, one will likely need to provide 25-30kW or more. Given that the standard North American rack circuit is 208V 30A, yielding ~5kW usable, rack U density is often not the primary challenge. Instead, it is rack depth, power delivery, and data center cooling.
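The rack-level numbers above can be checked with a short Python sketch. The 208V, 30A, 2.5kW, 3kW, and ten-server figures are from the review. The 80% continuous-load derating factor and the function names are our assumptions, chosen because 208V x 30A x 0.8 lands close to the ~5kW usable figure quoted here. The circuit is treated as single-phase for simplicity.

```python
import math


def usable_circuit_watts(volts: float, amps: float, derate: float = 0.8) -> float:
    """Usable power on one circuit; derate=0.8 is an assumed continuous-load factor."""
    return volts * amps * derate


def rack_load_kw(servers: int, watts_per_server: float) -> float:
    """Total rack load in kW for a given server count and per-server draw."""
    return servers * watts_per_server / 1000


def circuits_needed(servers: int, watts_per_server: float, circuit_w: float) -> int:
    """Minimum number of circuits to carry the load, ignoring A/B redundancy."""
    return math.ceil(servers * watts_per_server / circuit_w)


if __name__ == "__main__":
    circuit_w = usable_circuit_watts(208, 30)
    print(f"Usable per 208V 30A circuit: {circuit_w / 1000:.2f} kW")
    for per_server_w in (2500, 3000):
        load = rack_load_kw(10, per_server_w)
        needed = circuits_needed(10, per_server_w, circuit_w)
        print(f"10 servers at {per_server_w} W: {load:.1f} kW, "
              f"{needed} circuits before redundancy")
```

Doubling the circuit count for redundant A/B feeds, which this sketch deliberately leaves out, only widens the gap between a standard rack's power delivery and what ten of these systems need.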

That points to how integrated these servers are becoming. One needs to look at facility capabilities to simply ensure that two 4U servers can be powered in a rack. Dell did a good job recognizing this power and cooling constraint and changing the form factor in this generation from 1U to 4U.

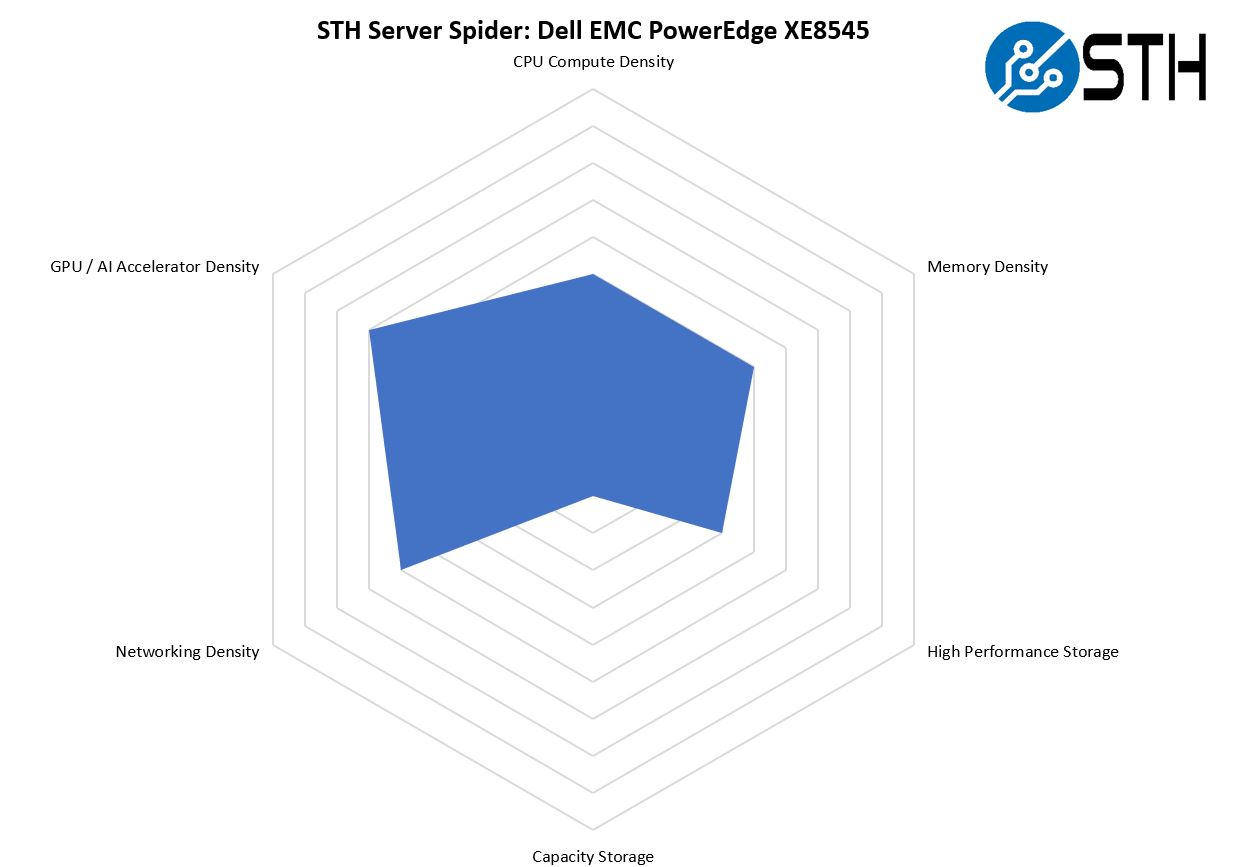

STH Server Spider: Dell EMC PowerEdge XE8545

In the second half of 2018, we introduced the STH Server Spider as a quick reference to where a server system’s aptitude lies. Our goal is to start giving a quick visual depiction of the types of parameters that a server is targeted at.

While the PowerEdge XE8545 is a dense system, there are higher density systems on the market. One thing this system is not is a good platform for capacity 3.5″ drive storage as evidenced by the fact that it does not have 3.5″ drive bays.

Still, what Dell EMC managed to produce is a well-rounded accelerated platform that is designed for easier integration into customer facilities.

Final Words

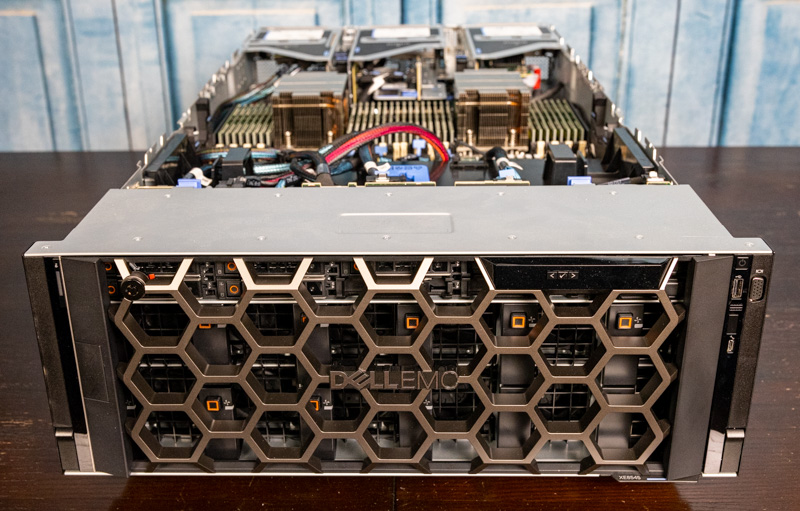

The Dell EMC PowerEdge XE8545 is a surprisingly well-rounded platform for one that has high-end NVIDIA A100 SXM4 accelerators and dual AMD EPYC 7003 processors. Compared to its predecessor, the C4140, this is going to be both a significantly higher performance system and much easier to integrate into existing facilities. It may be at, or near, the end of the line for achieving this balance with modern accelerators and air cooling.

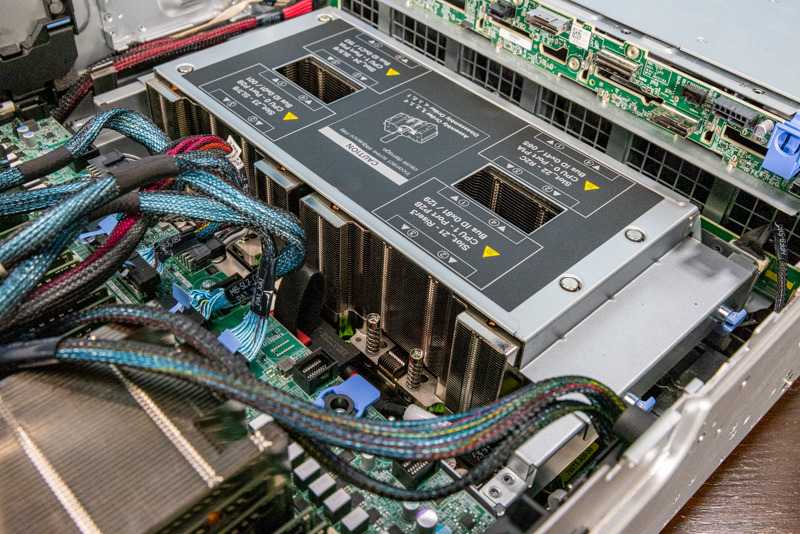

Dell’s ability to cool even the NVIDIA A100 500W 80GB GPUs in the NVIDIA Redstone assembly along with dual AMD EPYC 7763 280W CPUs in a single node using air cooling is rare in the industry. Many vendors are recommending liquid cooling for 500W A100s, but that comes with integration challenges that Dell sought to avoid with the XE8545. This focus on ease of deployment extends beyond the hardware to the fact that it can be managed using iDRAC and OpenManage.

Still, this server also shows a bit about the future. While this setup may work for 500W GPUs (assuming <=28C ambient temperatures), in the future 600W-1kW accelerators will make the case for liquid cooling more pressing. Dell’s solution of two zones, one going from 1U at the front to 2U at the rear and the other from 3U to 2U, is novel. It does bring us back to the feature that has stayed with me while reviewing this platform, the overhang. Every time I see this I wonder at what point this is disaggregated to a standard server and then a GPU shelf. Doing that design would also allow Dell to, for example, use a PowerEdge R7525 as a base, then have a 4 GPU Redstone shelf and/or an 8 GPU Delta shelf. Given how many servers STH reviews, this overhang is a constant reminder that disaggregation is coming.

Overall, the Dell EMC PowerEdge XE8545 was easy to work with. Getting to the Redstone assembly was one of the harder maintenance tasks we have seen on Dell servers, but Dell did a good job. The fact that we ran the system, took it apart for photos, put it back together, and it worked immediately is a testament to how well this is built down to the small details of clearly labeling and marking components in the chassis.

The PowerEdge XE8545 may not be the reason a large organization switches from HPE or Lenovo to Dell, but it helps a Dell customer add NVIDIA’s high-end GPUs and AMD’s high-end CPUs to deployments without having to leave the ecosystem. For many, this is going to be a near-perfect solution.

With 3 NVMe U.2 SSDs, how can I leverage 2 drives in a RAID 1 configuration for the OS? That way I can just use 1 of my drives for scratch space for my software processing.

Do I need to confirm whether the NVMe backplane on my XE8545 will allow me to run a cable to the H755 PERC card in the fast x16 slot of riser 7? Would this limit those RAIDed SSDs to the x16 lanes available to the riser where the PERC card would be? At least my OS would be protected if an SSD died. The 1 non-RAIDed drive would be able to use the x8 lane closer to the GPUs, right? I was originally hoping to just do RAID 5 with all 3 drives so that I could partition a smaller OS and leave more space dedicated to the scratch partition.