Supermicro showed off a few of its servers for the Intel Xeon 6 generation. There were surprises beyond the fact that there will be both Xeon -SP and Xeon -AP variants: the way Supermicro is constructing a few of these servers will turn heads.

As a quick note, I was attending Vision 2024 as an “Influencer” since this was primarily a customer, partner, and analyst event. Intel did not dictate what I could write about as an influencer. It was just an avenue to get me into the event, which is about 25 minutes from the STH studio.

Supermicro X14 Servers Shown at Intel Vision 2024 Including a Big Surprise

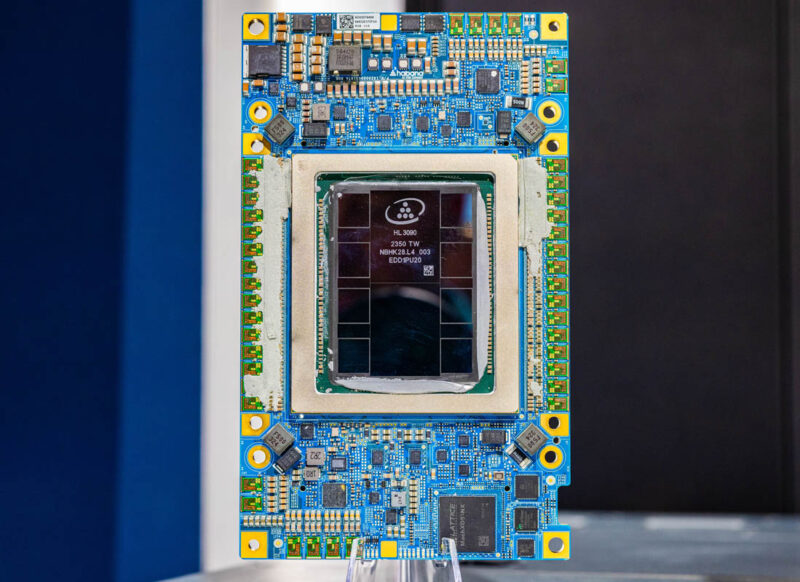

Of course, the star of the Intel Vision 2024 event was the Intel Gaudi 3 from its Habana Labs acquisition.

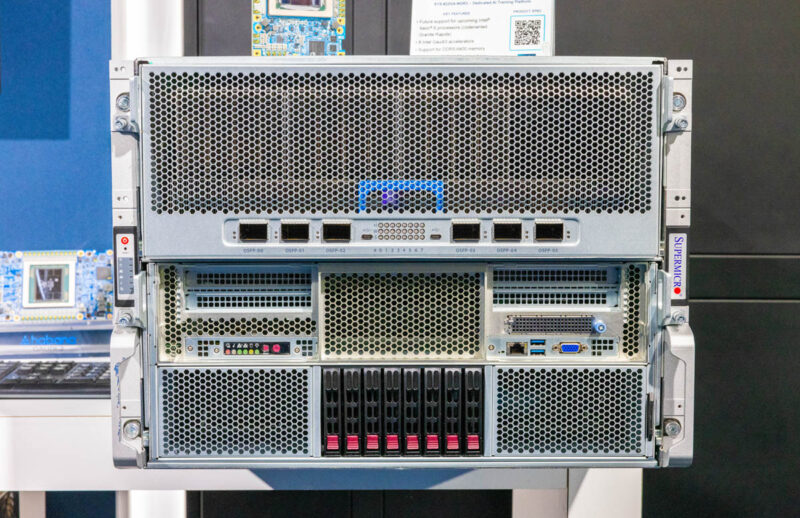

As one would expect, Supermicro is ahead of this trend and already had an Intel Gaudi 3 8x OAM/UBB system set up. The AI accelerators sit in the top portion of the system, with compute and I/O below. The six OSFP ports are 800GbE ports, each carrying 200GbE from four of the OAM AI accelerators. One of those accelerators is pictured above. This is actually a working sample system, but the heatsink was taken off for Vision 2024.
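The port math is easy to sanity-check. The short Python sketch below uses only the numbers from the show floor (six 800GbE OSFP ports, 200GbE links, eight OAM accelerators) and assumes, on our part, that the links are spread evenly across the accelerators. The `scale_out_budget` function and `ScaleOut` type are hypothetical names for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ScaleOut:
    links_per_port: int
    total_links: int
    links_per_accelerator: int
    aggregate_gbps: int


def scale_out_budget(ports: int, port_gbps: int, link_gbps: int, accelerators: int) -> ScaleOut:
    """Hypothetical helper: split OSFP ports into equal-speed links and divide them among accelerators."""
    # Each 800GbE OSFP port is treated as several 200GbE links.
    if port_gbps % link_gbps:
        raise ValueError("port speed must be a multiple of the link speed")
    links_per_port = port_gbps // link_gbps
    total_links = ports * links_per_port
    # Assumption: every accelerator gets the same number of scale-out links.
    if total_links % accelerators:
        raise ValueError("links do not divide evenly across accelerators")
    return ScaleOut(links_per_port, total_links, total_links // accelerators, ports * port_gbps)


budget = scale_out_budget(ports=6, port_gbps=800, link_gbps=200, accelerators=8)
print(budget)
```

Under that assumption, the six ports work out to 24 x 200GbE links, or three scale-out links per Gaudi 3 and 4.8Tbps in aggregate.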

Inside, Supermicro is using another Intel Xeon 6 variant: the higher-end Xeon 6 Granite Rapids-AP. We have known for some time that Intel Xeon 6 will come in different sockets, and this system uses the higher-end socket. While we have seen Granite Rapids motherboards before, this is the first Granite Rapids-AP system we have seen.

Supermicro was the only major vendor to have a Gaudi 3 OAM and Xeon 6 system on the show floor. Supermicro tends to be early with its designs.

Supermicro’s Shocking 1U CloudDC Server

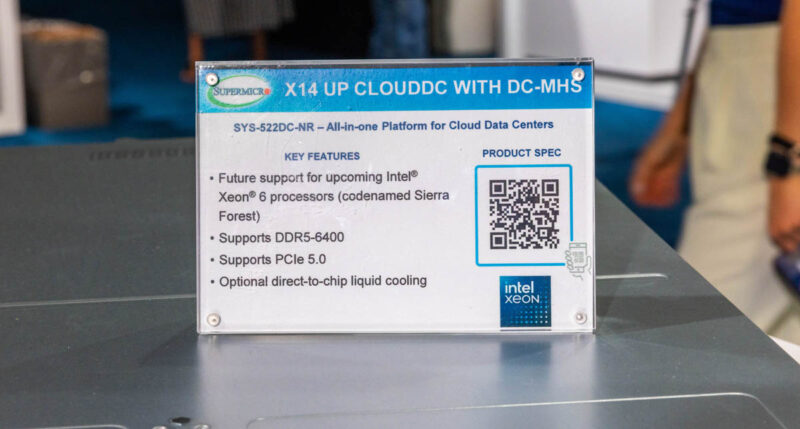

Next to the Gaudi 3 system, we saw a fairly standard-looking 1U server. It turned out to be absolutely shocking. The Supermicro SYS-522DC-NR is a 1U server that is part of the company’s CloudDC line. Supermicro has several tiers of servers, ranging from highly cost-optimized designs to ones for organizations that want more customization. CloudDC is a line we have reviewed before; it adds optimizations for large-scale deployments that adopt more hyper-scale principles. At first, a 1U 12-bay server may not seem too exciting, but then we kept looking around.

At the rear of the server, we see redundant power supplies on the right of the chassis and two full-height expansion slots. Then things start to get different. Alongside the AIOM/OCP NIC 3.0 slot is the server management I/O. Note the lack of a VGA port. Also note that the entire management block, with the OOB management NIC and USB ports, is on a module. Might Supermicro be using something like DC-SCM for this?

Then things took a big turn. While we could not open the server, we saw the little sign sitting on it: this is a UP (single-socket) server for Sierra Forest with liquid cooling options. The title has something shocking: “X14 UP CloudDC with DC-MHS”. Supermicro is now the first top-5 vendor to support DC-MHS. Dell told me it tried to adopt this standard with its previous-generation servers but was not able to adopt the design in time.

If you do not know, DC-MHS (Datacenter Modular Hardware System) is the OCP standard for server motherboards. It is a design Intel has been pushing to modularize servers into standard blocks. Culturally, it is a big deal that Supermicro has adopted DC-MHS on a server. Ten years ago, the company would likely not have used an Intel/OCP-style format, but the market has changed.

Supermicro SYS-222H-TN 2U Hyper Server

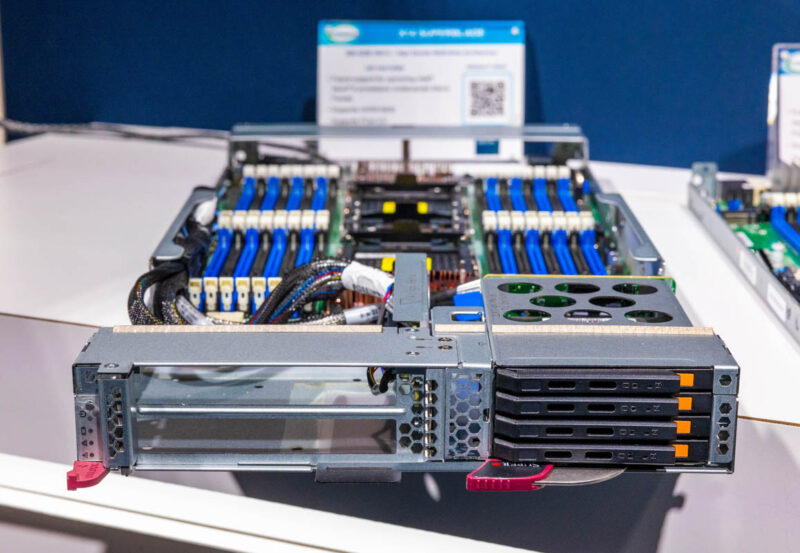

Next, we saw a Supermicro SYS-222H-TN, a 2U Hyper server with eight 2.5″ NVMe bays. Both sides of the front are available for additional uses, even if that is just providing maximum airflow for accelerators. Hyper is Supermicro’s line that generally sits above CloudDC in the stack.

Looking at the back, we see a more traditional Supermicro rear I/O block and power supply placement. There are spots for many risers in the rear, as well as a dual, stacked OCP NIC 3.0 setup. To do this, Supermicro will most likely need to cable connections from the motherboard to the AIOM/OCP NIC 3.0 slots.

Like the other servers we have shown, Supermicro is offering liquid cooling options. We covered Supermicro’s Custom Liquid Cooling previously. Here is the video for that one.

In that video’s cover image, one will notice an 8U chassis that is very similar to the 8U Gaudi 3 system above.

Supermicro SBI-422B-1NE14 and SBI-621B-1NE34

We also saw blade servers based on Intel Xeon 6. Here we have the Supermicro SBI-422B-1NE14 with two expansion slots and four E3.S bays in front.

This is a dual-socket, 8-channel blade, but there is a big change with this blade server.

Supermicro is using copper heatsinks to cool power components. We asked, and this is due to the volume of air that needs to move through the server for cooling; copper heatsinks will be needed in some of this generation’s designs.

The other blade we saw was the Supermicro SBI-621B-1NE34. This is a single socket Xeon 6 -SP blade with eight DDR5 channels and sixteen DIMMs.

One can see that this system can handle GPUs in the expansion slots.

In front, we have two 2.5″ bays.

Sierra Forest is going to change the density game for blade servers. We will end up seeing over 5000 E-cores in a SuperBlade chassis, which will allow companies to replace multiple racks of 5+ year old servers with a single blade chassis. For those looking to free up power and space for AI servers, that is going to be a viable option.
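The “over 5000 E-cores” figure can be checked with back-of-the-envelope math. The sketch below is not based on specs we saw at the show: it assumes a 20-blade SuperBlade enclosure and 144-core Sierra Forest parts, so treat both constants (and the hypothetical `ecores_per_chassis` helper) as placeholders.

```python
# Assumptions for illustration only, not specs from the show floor.
BLADES_PER_CHASSIS = 20   # assumed SuperBlade enclosure with 20 blade slots
CORES_PER_SOCKET = 144    # assumed top-bin Sierra Forest E-core part


def ecores_per_chassis(blades: int, sockets_per_blade: int, cores_per_socket: int) -> int:
    """Hypothetical helper: total E-cores with every blade slot populated."""
    return blades * sockets_per_blade * cores_per_socket


dual = ecores_per_chassis(BLADES_PER_CHASSIS, 2, CORES_PER_SOCKET)
single = ecores_per_chassis(BLADES_PER_CHASSIS, 1, CORES_PER_SOCKET)
print(f"2S blades: {dual} E-cores")    # 5760
print(f"1S blades: {single} E-cores")  # 2880
```

Under these assumptions, dual-socket blades clear 5000 E-cores while single-socket blades with the same part do not, so the headline number leans on 2S blades or on higher core-count parts.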

Final Words

Supermicro had Xeon 6 servers out at Vision 2024. We looked at other vendors, and they did not bring their Xeon 6 servers to the show, especially not production servers. It seems like Supermicro already has a portfolio spanning AI systems, traditional servers across multiple lines, and blade servers ready for the Xeon 6 launch later this quarter. We expect Gaudi 3 to start shipping in higher volume later this year, so some of these servers are ready for the Xeon 6 -AP variants many months before we expect those parts to launch. Seeing the DC-MHS CloudDC server was shocking; we have covered Supermicro servers for years and would not have expected it.

Lack of VGA is nothing new – it looks like it’s got a mini-DP just like lots of other servers.

dual Granite Rapids-AP in bottom level?

Granite Rapids-AP reportedly has only 96 PCIe 5.0 lanes. 8x Gaudi 3 would need 128 PCIe 5.0 lanes.

I read a report elsewhere from Hot Chips 2023 that Granite Rapids-AP actually has 136 PCIe 5.0 lanes… so, my bad on using the wccftech info, which said 96.

We are already talking about the X14 lineup, but the saddest part is that Supermicro’s X13 lineup still has issues with Emerald Rapids CPUs on these boards. For example, a Xeon 5515+ on an x13sem-tf with BIOS v2.1 (build 12/07/2023) and BMC firmware v01.02.06 (build 12/13/2023) still cannot boot, and the BMC reports the CPU as a Xeon 8468. Shame, Supermicro!

Weird that the Supermicro website says BIOS 2.1 is needed for Emerald Rapids :-)

Supermicro, if you read these comments, please update your BIOSes properly.

Intel’s slides mark the Xeon Gold 5515+ with a tick for Long Life Availability, my ass… but still, four months after launch, the BIOSes are not ready.

Maybe Patrick has some contacts among Supermicro support people to clarify my issues. :-)

Jay N.

With AMD Servers – single CPU = 128 PCIe lanes.

2 AMD CPUs = 128 PCIe Lanes.

Maximum of 2 sockets.

With Intel servers – Single CPU = 96 PCIe lanes.

2 Intel CPUs = 192 PCIe Lanes.

4 Intel CPUs = 384 lanes.

Maximum of 8 CPUs.

One is a server architecture: Intel.

The other is a desktop masquerading as a server.

LaughinGMan

X13 SPR is working great, as is Emerald Rapids. I own both.

I take back my words from my previous comments…

x13sem-tf + Xeon Gold 5515+: the culprit in this combo was the PSU (Supermicro PWS-865-PQ). It just would not boot the motherboard; the CPU coolers spun up for only half a second and then stopped.

I swapped to a Corsair HX1000i and the board booted properly.

I dunno why the SM single-rail 865W PSU, with plenty of amps, had issues booting a 165W TDP CPU!