Google has a new internally developed Arm CPU. The Google Axion is built on Arm Neoverse V2 cores, and Google will use it internally before opening it up to more cloud customers later in 2024.

Google Axion for Arm Cloud Compute

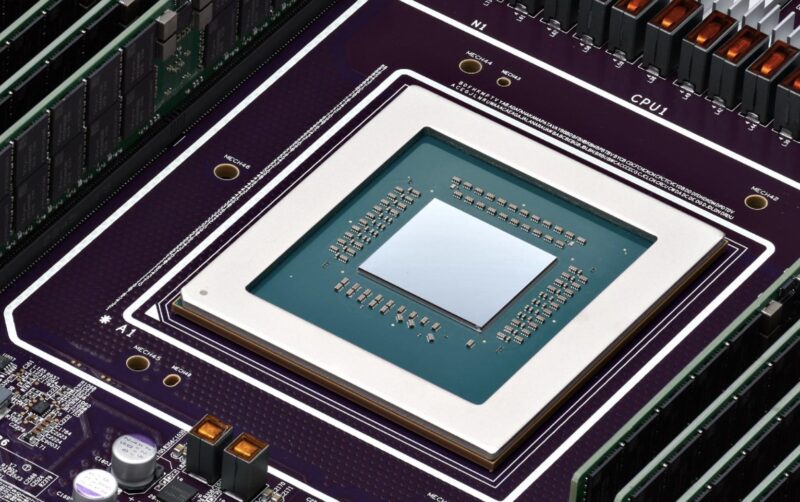

The release was sparse on details. Google said that the new Axion CPU uses Arm Neoverse V2 cores. We first heard about those cores in 2022, when Arm launched Neoverse V2 for NVIDIA Grace. Most designs with Neoverse V2 have had PCIe Gen5 connectivity. We can also see that the memory modules have DDR5 PMICs, so it looks like this is DDR5 RDIMM memory, which is standard in this generation. Although five DIMMs are visible on one side of the picture, we do not have an exact DDR5 memory channel count.

We can also see from the photo that there is not a huge amount of silicon on the package, so this is unlikely to be a 128+ core design like an AMD EPYC Bergamo or the upcoming 144-core Intel Xeon 6 Sierra Forest-SP. Memory controllers and I/O take silicon area, and Neoverse V2 cores are larger than the Neoverse N series cores that Google had been using. Intel Xeon 6 will have two variants. The first is the Xeon-SP, which will scale to 144 cores. The second is the Xeon AP platform, which arrives later in 2024. There, we will see the 288-core Sierra Forest-AP for high-density and processors like Granite Rapids-AP that we expect to be used in accelerated systems for AI.

An interesting note here is that in 2024 we have been debating whether a 500W Grace Superchip with 144 Arm Neoverse V2 cores and just under 1TB of usable memory could be considered a cloud-native part for our series. It seems like Google is building a lower core count part that likely uses significantly less power. Perhaps it has 80 cores, like the Ampere Altra processors Google has deployed?
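To put the power comparison in rough numbers, here is a back-of-the-envelope sketch in Python. It uses only the 500W and 144-core Grace Superchip figures quoted above, and it treats the 500W as if it were spread evenly across cores, which it is not in practice since a module-level figure covers more than the cores alone. The 80-core count is our speculation from above, not a Google specification, and the scaled result is purely illustrative rather than an Axion power estimate.

```python
# Figures quoted in this article for the NVIDIA Grace Superchip.
GRACE_SUPERCHIP_WATTS = 500
GRACE_SUPERCHIP_CORES = 144

# Hypothetical core count, taken from the speculation above.
# This is NOT a confirmed Axion specification.
HYPOTHETICAL_CORES = 80


def watts_per_core(total_watts: float, cores: int) -> float:
    """Average power budget per core, assuming an even split.

    This is a simplification: the total also covers non-core power.
    """
    if cores <= 0:
        raise ValueError("cores must be positive")
    return total_watts / cores


grace_w_per_core = watts_per_core(GRACE_SUPERCHIP_WATTS, GRACE_SUPERCHIP_CORES)
print(f"Grace Superchip: {grace_w_per_core:.2f} W per core (even split)")

# Naive linear scaling to the hypothetical core count.
scaled_watts = grace_w_per_core * HYPOTHETICAL_CORES
print(f"{HYPOTHETICAL_CORES} cores at that budget: {scaled_watts:.0f} W")
```

Linear scaling like this ignores fixed costs such as memory controllers and I/O, so treat it as a way to frame the question rather than an answer.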

Google said it also has its Titanium offloads. AWS has enjoyed a solid lead with its Nitro DPU features, which offload networking, virtual machine functions, and storage to a dedicated accelerator. We know Google has been using the Intel Mount Evans Arm-based ASIC, but having its own silicon means that Google can offload many similar functions.

Final Words

Overall, it is awesome to see the new part come out. It may not be the biggest Arm CPU out there, but it is a step in getting more compute out in the market. In an era where other hyper-scalers have been talking to STH about using higher core count CPUs in larger nodes to free up power for AI clusters, it seems that Google is going a different route.

Hopefully, we will get to take these for a spin one day, either in person or in the cloud. We know the Google silicon folks read STH when we cover things like Google YouTube VCU for Warehouse-scale Video Acceleration.

My opinion is that cloud native actually means the side channels that can be used to interfere with or monitor other tenants’ virtual machines have been reduced or eliminated.

Since fewer cores sharing the same memory bandwidth are less likely to interfere with each other, cloud native is not so much about cramming a huge number of cores into a socket as about making sure those cores don’t interfere with each other and meet consistent service levels.
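The bandwidth-sharing argument can be sketched with simple arithmetic. The snippet below assumes a fixed, normalized memory bandwidth of 1.0 that is split evenly among active cores, and it reuses the 80 and 144 core counts mentioned in the article purely as illustrative inputs. Real parts differ in total bandwidth and do not split it evenly, so this only shows the shape of the argument.

```python
def per_core_share(total_bandwidth: float, active_cores: int) -> float:
    """Even share of memory bandwidth per core (a simplifying assumption)."""
    if active_cores <= 0:
        raise ValueError("active_cores must be positive")
    return total_bandwidth / active_cores


# Normalized bandwidth: 1.0 means "all of the socket's memory bandwidth".
TOTAL_BANDWIDTH = 1.0

# Illustrative core counts taken from the article, not a like-for-like
# comparison of real platforms.
for cores in (80, 144):
    share = per_core_share(TOTAL_BANDWIDTH, cores)
    print(f"{cores} cores: {share:.4f} of total bandwidth per core")

# How much more bandwidth each core gets in the smaller configuration.
ratio = per_core_share(TOTAL_BANDWIDTH, 80) / per_core_share(TOTAL_BANDWIDTH, 144)
print(f"Per-core share is {ratio:.1f}x larger with 80 cores than with 144")
```

Under these assumptions, each core in the smaller configuration has more headroom before a noisy neighbor can affect it.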