In 2023, the big talk of the data center was certainly AI, as it should be. Still, there are a ton of legacy applications that would be foolish to run on today’s expensive AI servers. Many of these are still running on 2nd Gen Intel Xeon Scalable “Cascade Lake” or older servers, since AMD was just hitting its stride with its AMD EPYC 7002 “Rome” generation in the second half of 2019. A lot has changed since those 2017-2021 era servers were deployed, and AMD’s newest EPYC is designed to replace fleets of older generation servers. In this article, we are going to discuss the AMD EPYC “Bergamo” generation of cloud-native, energy-efficient CPUs, and just how crazy the consolidation benefits can get.

AMD EPYC Bergamo Has Massive Consolidation Benefits

A few months ago, we looked at how Intel’s latest generation of Xeons offers big consolidation benefits, even when upgrading from the newest Xeon generation available at the start of Q2 2021. In that piece, we used two Supermicro server generations, so we are going to use Supermicro servers again here, with a twist. The consolidation numbers are going to be much larger because the AMD EPYC Bergamo series was designed as a new cloud-native part that truly offers something different. Of course, since we are using Supermicro hardware and AMD CPUs, we are going to say that this is sponsored. If you want to learn more about Bergamo, we have our AMD EPYC Bergamo is a Fantastically Fresh Take on Cloud Native Compute piece and accompanying video.

We are using 2nd Gen Xeon “Cascade Lake” as the comparison point because it was still the top mainstream Intel Xeon at the start of Q2 2021, and the 3rd Gen Ice Lake Xeons took some time to ramp. Folks will ask about AMD EPYC. The EPYC 7001 series had very limited market penetration, and the 2nd Gen EPYC 7002 was only starting to get more traction. The vast majority (90%+) of folks on a 3-6 year refresh cycle are going to be upgrading from 1st Gen or 2nd Gen Xeon Scalable (or possibly E5).

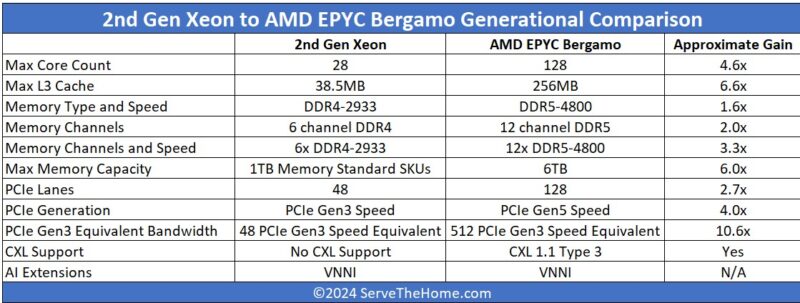

For this, we wanted to do our standard 2nd Gen Intel Xeon to AMD EPYC Bergamo top bin comparison.

One of the interesting things here is that if you are still on Q2 2021 and older hardware, Intel had just introduced VNNI for AI inference in its CPUs. Acceleration blocks like QuickAssist, AMX, and more were not in the CPUs either. Instead, you get a fairly straightforward core-to-core comparison, but with the Zen 4C core being a bit faster.

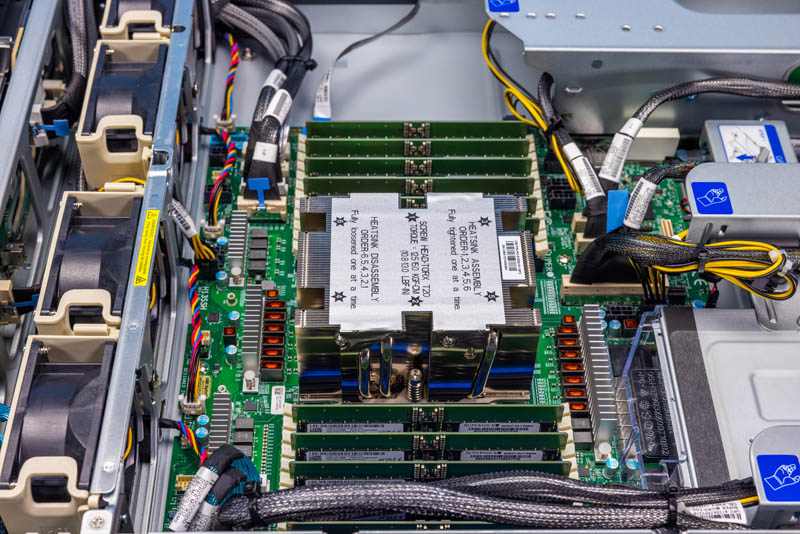

Again for some perspective, this is a single-socket Supermicro AS-2015HS-TNR that we reviewed. This single AMD EPYC 9754 CPU can replace around 4-4.5 top-bin CPUs up to Q2 2021 for the vast majority of applications. In other words, you can replace two top-bin 1U dual-socket servers and move to the operational efficiency and lower power density of a single-socket server.
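As a rough sanity check on that ratio, the consolidation math can be sketched from core counts alone. This is a minimal sketch, not our benchmark methodology. The 28-core figure assumes the top-bin mainstream 2nd Gen Xeon is the Platinum 8280, and `per_core_uplift` is a hypothetical knob for how much faster a Zen 4c core is than a Cascade Lake core.

```python
import math

EPYC_9754_CORES = 128   # AMD EPYC 9754 "Bergamo"
LEGACY_XEON_CORES = 28  # assumption: Xeon Platinum 8280 as the top-bin legacy part


def cpus_replaced(per_core_uplift: float = 1.0) -> float:
    """Legacy CPUs one Bergamo socket can stand in for, by core count.

    per_core_uplift is a hypothetical multiplier for per-core performance;
    1.0 treats a Zen 4c core as equal to a Cascade Lake core.
    """
    return EPYC_9754_CORES * per_core_uplift / LEGACY_XEON_CORES


def dual_socket_servers_replaced(per_core_uplift: float = 1.0) -> int:
    """Whole dual-socket legacy servers that fit inside one Bergamo socket."""
    return math.floor(cpus_replaced(per_core_uplift) / 2)


print(round(cpus_replaced(), 2))        # core-count ratio only
print(dual_socket_servers_replaced())   # whole 2P servers replaced
```

With the default uplift of 1.0, the core-count ratio alone lands a little above 4.5 and rounds down to two whole dual-socket servers. Real applications will vary with clock speeds, cache, and memory behavior.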

Even with that consolidation, the I/O is still significant on the single socket platform. While those dual-socket servers might combine for 192 PCIe lanes, they are only PCIe Gen3. A fairly common architecture in 2021 would have been to put a 100GbE NIC per CPU which would take a PCIe Gen3 x16 slot or 64 lanes total. Bergamo can have a single 400GbE NIC in a single PCIe Gen5 x16 slot and use one-quarter of the lanes, and one-quarter of the NICs, and still provide the same throughput.
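The lane and NIC arithmetic above can be checked with a short sketch. It assumes the commonly quoted per-lane signaling rates (8 GT/s for PCIe Gen3, 32 GT/s for Gen5, both with 128b/130b encoding) and ignores protocol overhead, so treat the bandwidth figures as approximate upper bounds. The function names are ours, for illustration only.

```python
# Per-lane raw signaling rate in GT/s; both generations use 128b/130b encoding.
GT_PER_S = {"gen3": 8.0, "gen5": 32.0}
ENCODING_EFFICIENCY = 128 / 130


def slot_gbps(gen: str, lanes: int = 16) -> float:
    """Approximate one-direction slot bandwidth in Gb/s, before protocol overhead."""
    return GT_PER_S[gen] * ENCODING_EFFICIENCY * lanes


def nic_layout(nic_gbps: int, nic_count: int, gen: str, lanes_per_nic: int = 16) -> dict:
    """Summarize lanes used and network throughput for a set of identical NICs."""
    return {
        "nics": nic_count,
        "lanes": nic_count * lanes_per_nic,
        "network_gbps": nic_gbps * nic_count,
        "slot_can_feed_nic": slot_gbps(gen, lanes_per_nic) >= nic_gbps,
    }


# Two dual-socket legacy servers: one 100GbE NIC per CPU, four CPUs total.
legacy = nic_layout(nic_gbps=100, nic_count=4, gen="gen3")
# One single-socket Bergamo server: one 400GbE NIC.
bergamo = nic_layout(nic_gbps=400, nic_count=1, gen="gen5")

print(legacy)
print(bergamo)
```

Both layouts deliver 400Gbps of network throughput, but the Gen5 layout does it with 16 lanes and one NIC instead of 64 lanes and four NICs.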

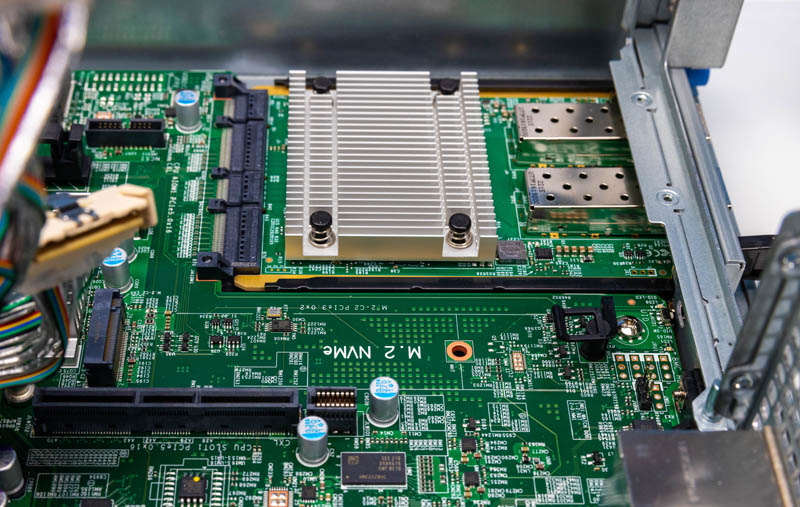

Of course, there are more changes to the I/O side than just PCIe Gen5. We now have support for newer expansion cards like OCP NIC 3.0 cards.

We also get support for PCIe Gen5 NVMe SSDs. These are coming out, slowly, but can offer 4x the throughput of previous generation drives. SSD capacities have also increased significantly, so on an upgrade cycle, it is possible to get much larger drives than in the previous generations.

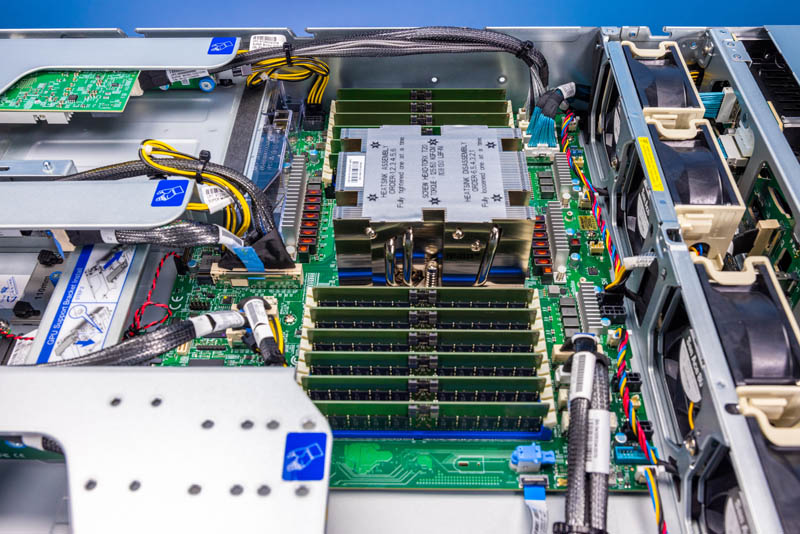

We have been showing single-socket options, but dual-socket servers with Bergamo can scale to almost 2x. There is one challenge with that, however, and it is why we are focusing on single-socket solutions here. An AMD EPYC Bergamo CPU has 12 DDR5 memory channels. Each channel can have two DIMMs, for 24 DDR5 DIMMs total per CPU. Putting that into perspective, a dual-socket 1st Gen or 2nd Gen Xeon Scalable server can have 12 DDR4 DIMMs per CPU, or 24 total. The challenge with having 24 DIMMs per CPU is just the width.

Physically you can fit a CPU socket plus 24 DIMM slots or two CPU sockets with twelve each for 24 total. You cannot, however, fit two CPUs and 48 DIMMs in a 19″ chassis width unless you create a large motherboard with offset CPUs that makes for a much deeper chassis. One could use CXL 1.1 Type-3 devices to act as memory expanders in these new servers, but that is still an emerging technology at this point.
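To make the slot counts concrete, here is a small sketch of the DIMM math. It assumes two DIMMs per channel on every platform, and the six-channels-per-socket figure for 1st and 2nd Gen Xeon Scalable is our assumption, consistent with the 12 DIMMs per CPU above. The `Platform` class is hypothetical and exists only for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Platform:
    name: str
    sockets: int
    channels_per_socket: int
    dimms_per_channel: int

    @property
    def dimm_slots(self) -> int:
        """Total DIMM slots when every channel is fully populated."""
        return self.sockets * self.channels_per_socket * self.dimms_per_channel


xeon_2p = Platform("2P 1st/2nd Gen Xeon Scalable", 2, 6, 2)  # assumption: 6 channels
bergamo_1p = Platform("1P EPYC Bergamo", 1, 12, 2)
bergamo_2p = Platform("2P EPYC Bergamo", 2, 12, 2)

for p in (xeon_2p, bergamo_1p, bergamo_2p):
    print(f"{p.name}: {p.dimm_slots} DIMM slots")
```

The single-socket Bergamo layout matches the 24 slots of the older dual-socket servers, while a fully populated dual-socket Bergamo layout doubles that to 48, which is where the chassis width problem comes from.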

So, with AMD EPYC Bergamo we get ~4.5x or more cores, faster PCIe Gen5 I/O, newer form factors like OCP NIC 3.0, and the ability to fully populate 1U or 2U of rack space with a single socket. All of this really hinges on the ability of the AMD EPYC Bergamo parts to deliver a huge step function in per-socket performance. Next, let us talk about performance.

The vast majority of companies who used Scalable Gen 1 or 2 moved on to Ice Lake and likely Sapphire Rapids by now – but the only way AMD can come out on top is to compare something they haven’t released vs something Intel released years ago.

AMD cannot feed 64 cores much less 128.

I used Gen 1, which led to Ice Lake, which led to Sapphire Rapids – which is the path many organizations took.

With AMD’s launch schedule (launch, with actual hardware months later – like how MI300 was never mentioned as an AI processor until it shipped 9 months later and is now magically AI focused), Bergamo is a year out at least.

If AMD was so superior then they would not be sitting in single digits in the DC.

@Truth+Teller: the fact that Intel’s DC business isn’t making money despite having 70% of the market is even more damning. It suggests that they are unable to demand a premium for their products. AMD’s DC operation has been profitable for the last couple of years, growing both revenue and margins. It does not need Sherlock Holmes to deduce what is going on.

I’ll mention first that this is the best article I’ve seen on the cloud native subject. I wish you’d done a video of it too.

My concern with Bergamo is that it isn’t much cheaper than Genoa or Genoa-X, but you know you’re getting less performance per core. You’re losing performance, but you’re getting what, 33% more cores in a socket? I don’t think that is enough of a gap. AMD needs to be offering twice the cores for cloud native to wow people into switching.

I think your earlier coverage of Sierra is also right that customers won’t jump on the first gen of a new CPU line like this. You’ve also nailed the most important metric for those who will switch: how many NVIDIA H100 systems it allows you to add.

I’m just commenting that I’m agreeing with Hans. I’d also say this is hands down the best Bergamo cloud native explanation I’ve ever seen and that 15 3 1 example is perfect for what we need. We’re probably going to order Supermicro H200 servers because STH seems to think they’ve got the best and they’re the only ones with a history of reviewing every gen.

AMD could buy STH for $M’s just to use this article and the ROI’d be silly. You’ve just done something AMD’s marketing’s been trying to push on OEM partners and turned it from “who tf cares?” to something we’re putting on our staff meeting tomorrow.

@Truth+Teller: in order of your assertions: you’re probably wrong; wrong, as this article has a picture of all 128 cores 100% loaded; can’t say; somewhat right, but that’s due to demand, not the products coming out late; and definitely wrong, as their DC market share is definitely double digits.

“Truth” Teller, another paid Intel shill boy... makes Baghdad Bob look like an amateur :-) And oh, Bergamo has been in ample supply for quarters now, and many customers have had it that long already or longer.

Great analysis.

Truth+Teller, your assessment is full of baloney. First, most companies outside of places like AWS didn’t go from Gen 1 > Gen 2 > Gen 3 Xeon, as that was a huge cost with minimal upside. The only benefit was getting onto Gen 3 or later, as you could add more RAM without needing the L series CPUs. However, if you already had those, it didn’t make much sense. Secondly, AMD Bergamo has been available for a long time now. I can go on Dell’s website right now and purchase a brand new system with Bergamo in it. Therefore it isn’t “a year out at least.” Also, they can feed that many cores. There is a reason they went to 12 channel DDR5 RAM. A single socket now has more bandwidth than a dual socket from the DDR4 era.

Are companies even looking for consolidation? I’d think most datacenters have more problems with power and cooling than available rack space. Of course having CPUs that deliver what general purpose applications need instead of focusing on AI boondoggles to woo shareholders is always appreciated. Most of the newer instruction sets are barely used.