Modern CPUs have a glaring problem and AMD is taking its first steps to remedy the issue. The AMD EPYC Bergamo is the company’s newest chip that offers up to 128 cores in a single chip. Unlike the modern trend of making bigger, faster CPU cores, AMD is making something that is deliberately slower (on a per-core basis) but also has new characteristics that we had not seen before. This is the start of x86 CPUs marching into the cloud-native compute realm formerly dominated by Arm CPUs. In this piece, we are going to get into it in-depth.

“What is the fastest server CPU?” It is Complicated

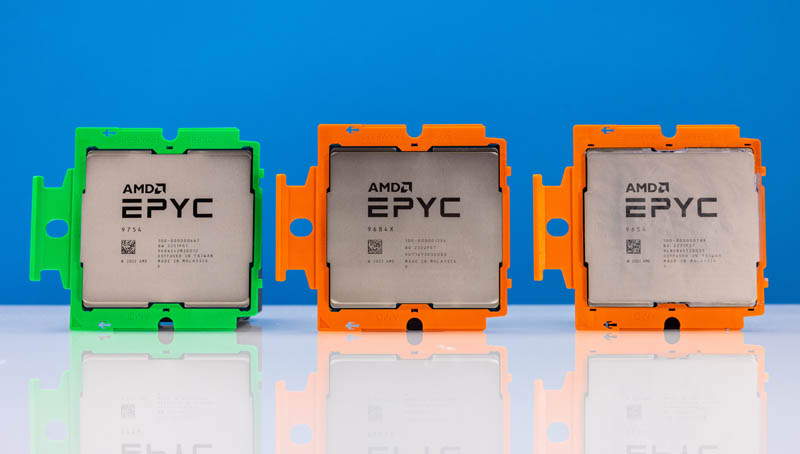

Five years ago, the answer to “what is the fastest public server CPU on the market” was still basically a hunt for the highest MSRP chip Intel sold. Times are changing. Instead, that question really has become a lot more workload specific. AMD now has three workload-specific EPYC processors just in the AMD Socket SP5 platform.

In this article, we are going to focus on Bergamo, the variant with a high core count but lower clock speeds and less cache. We already went in-depth on the mainstream Genoa part in AMD EPYC Genoa Gaps Intel Xeon in Stunning Fashion. We also have a video accompanying this article that covers the new chips and how they fit together into a portfolio for AMD’s server business.

We are also going to briefly cover “Genoa-X” since we covered it in the video accompanying this article. We will do a separate deep dive on that, and another on the Intel Xeon Max series with 64GB of HBM2e onboard, which we have tested and for which the video is mostly recorded.

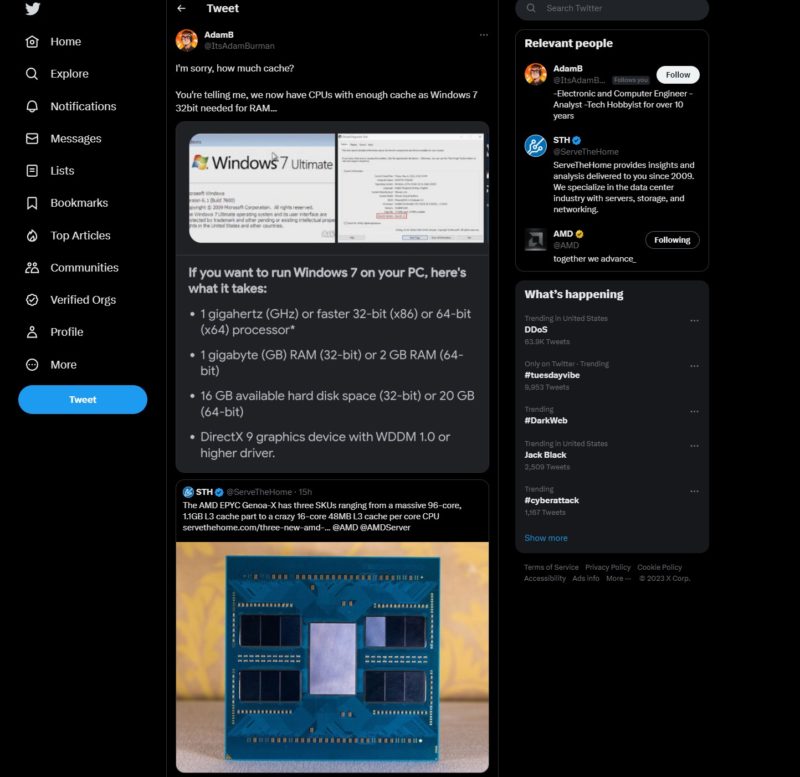

Genoa-X adds 3D V-Cache similar to Milan-X and the desktop parts, to get up to 1.1GB of L3 cache per CPU. For some perspective, we had a Twitter comment exclaim that 1.1GB is enough to meet the minimum Windows 7 32-bit system requirements.

Since we have already gone into more depth on that technology, we are going to focus this article on the new Zen 4c underpinnings, and how AMD EPYC Bergamo fits into the portfolio.

The AMD EPYC Bergamo Recap and Something New

We went over this previously in AMD EPYC Bergamo Launch SKUs, but for completeness, we wanted to do a quick recap and show our readers something that AMD did not say, but that we found on three different systems we tested with three different chips. First, the new series is designed with either 112 or 128 cores.

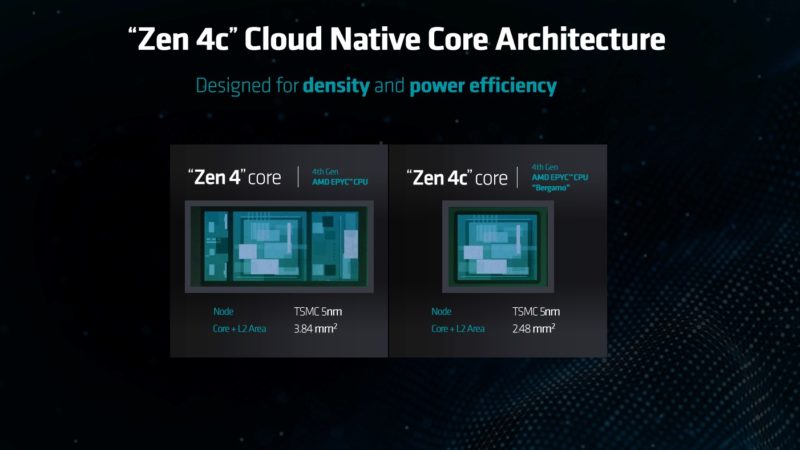

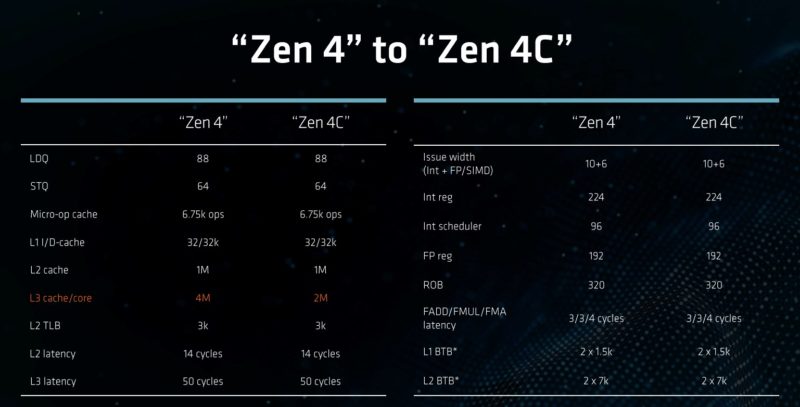

AMD was able to shrink each CCD by adopting a new Zen 4c core. Zen 4c has half the L3 cache per core (2MB versus 4MB on Zen 4), and that allowed the company to shrink the area in a straightforward manner. AMD was also able to optimize things like trace lengths around the more compact die. As a result, each core’s die area on Zen 4c is much smaller than that of Zen 4.

One of the big selling points for AMD is that this is an L3 cache reduction, not a feature reduction. Intel, by comparison, is planning a move from P-cores to E-cores for Sierra Forest. Zen 4c still runs AMD’s x86 Zen 4 instruction set, not some stripped-down set of instructions and features like many Arm offerings we have seen to date.

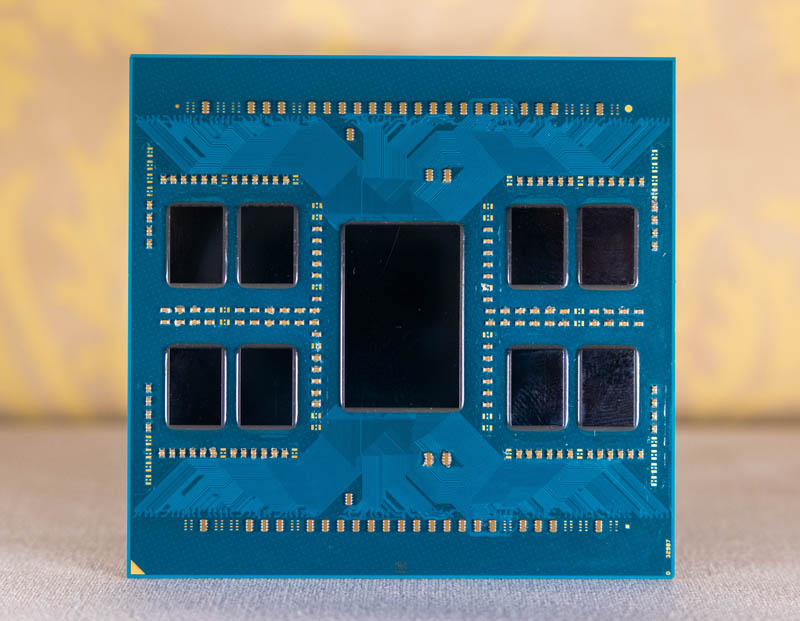

AMD is also showing its chiplet strategy here. We get the same I/O die with PCIe Gen5 and DDR5 that we have seen on Genoa (and Genoa-X). That means this is a known quantity and helps a lot with platform validation. The DDR5 and PCIe Gen5 controllers are not changing. In this case, the big change is transitioning from the 12x 8-core CCDs on 96-core Genoa to 8x 16-core CCDs on Bergamo.
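The arithmetic behind that CCD swap can be sketched in a few lines of Python. This is only an illustrative calculation: the `ChipLayout` class and its field names are hypothetical, and it assumes the per-core L3 figures quoted earlier (4MB for Zen 4, 2MB for Zen 4c) apply uniformly to every core on the package.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ChipLayout:
    """Hypothetical helper describing a CPU package as a set of identical CCDs."""
    name: str
    ccds: int             # number of compute dies on the package
    cores_per_ccd: int    # cores on each compute die
    l3_mb_per_core: int   # assumed uniform L3 share per core, in MB

    @property
    def cores(self) -> int:
        return self.ccds * self.cores_per_ccd

    @property
    def l3_mb(self) -> int:
        return self.cores * self.l3_mb_per_core


# Figures taken from the article text: 12x 8-core CCDs vs 8x 16-core CCDs.
GENOA = ChipLayout("Genoa (96-core)", ccds=12, cores_per_ccd=8, l3_mb_per_core=4)
BERGAMO = ChipLayout("Bergamo (128-core)", ccds=8, cores_per_ccd=16, l3_mb_per_core=2)

for chip in (GENOA, BERGAMO):
    print(f"{chip.name}: {chip.cores} cores, {chip.l3_mb} MB L3 total")
```

Under those assumptions, Bergamo ends up with a third more cores than 96-core Genoa while carrying less total L3, which is the trade-off the rest of this piece explores.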

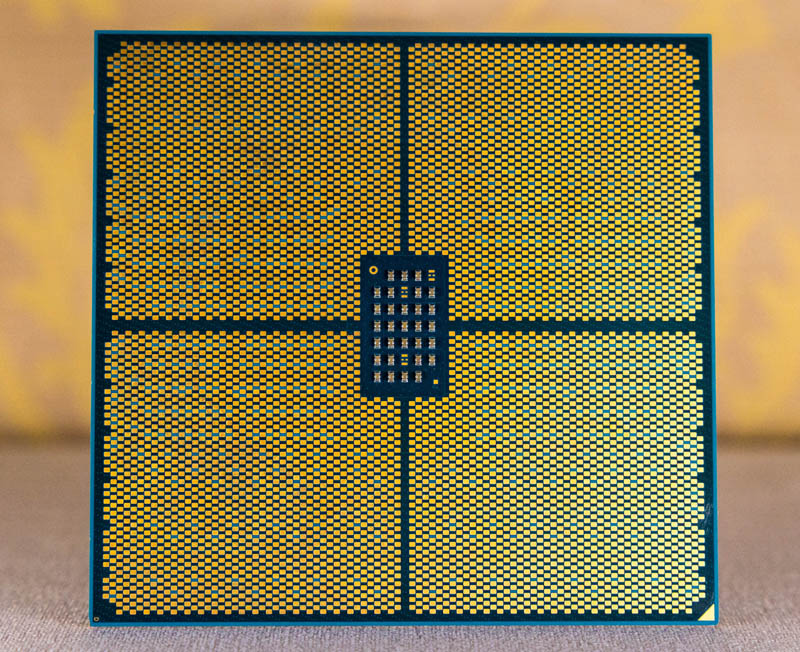

That also means that this is still an AMD Socket SP5 CPU, so we were able to use it in a number of servers and motherboards we had in the lab.

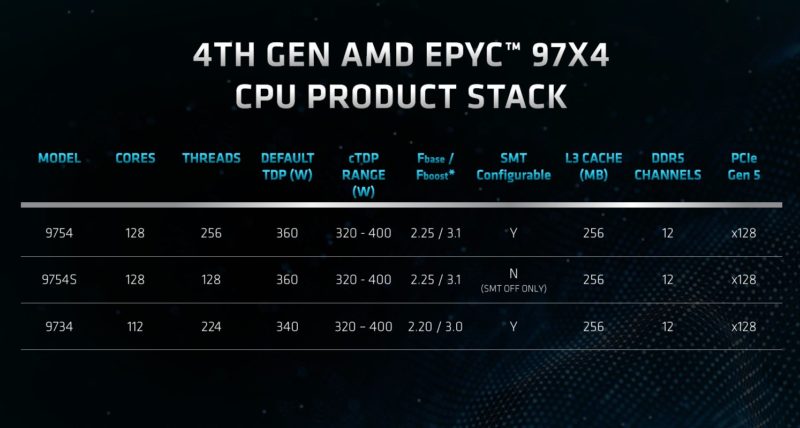

In terms of the SKU stack, the AMD EPYC 9754 is the flagship part with 128 cores and 256 threads at $11,900. That is likely the best CPU if you use increments of two vCPUs in your VMs. For those who do not want or do not need SMT, there is the 128-core and 128-thread AMD EPYC 9754S at $10,200. The AMD EPYC 9734 is the 112-core part at $9,600. AMD does not have SKUs below the 112-core figure because that starts to get into the 96-core Genoa line.
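To put those list prices in per-core and per-thread terms, here is a rough Python sketch. The prices and core counts come from the paragraph above. The 224-thread figure for the EPYC 9734 is our assumption that SMT is enabled on that part, and the `Sku` tuple and function names are purely illustrative.

```python
from typing import NamedTuple


class Sku(NamedTuple):
    """Hypothetical record for one launch SKU."""
    name: str
    cores: int
    threads: int
    list_price_usd: int


SKUS = [
    Sku("EPYC 9754", cores=128, threads=256, list_price_usd=11_900),
    Sku("EPYC 9754S", cores=128, threads=128, list_price_usd=10_200),
    # Thread count assumed (SMT enabled); the article only states 112 cores.
    Sku("EPYC 9734", cores=112, threads=224, list_price_usd=9_600),
]


def price_per_core(sku: Sku) -> float:
    return sku.list_price_usd / sku.cores


def price_per_thread(sku: Sku) -> float:
    return sku.list_price_usd / sku.threads


for sku in SKUS:
    print(
        f"{sku.name}: ${price_per_core(sku):.2f} per core, "
        f"${price_per_thread(sku):.2f} per thread"
    )
```

List price is only one input to a purchasing decision, but the per-thread view helps show why the SMT-enabled flagship suits VMs sized in increments of two vCPUs.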

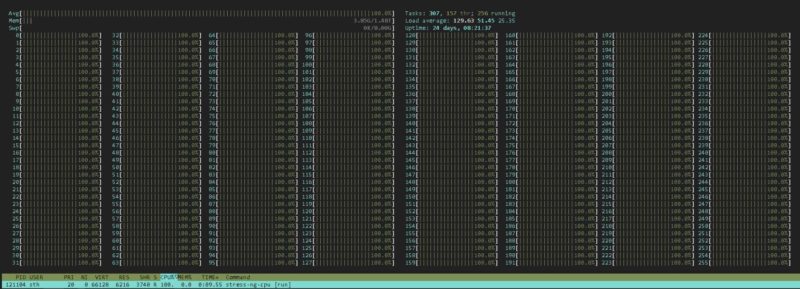

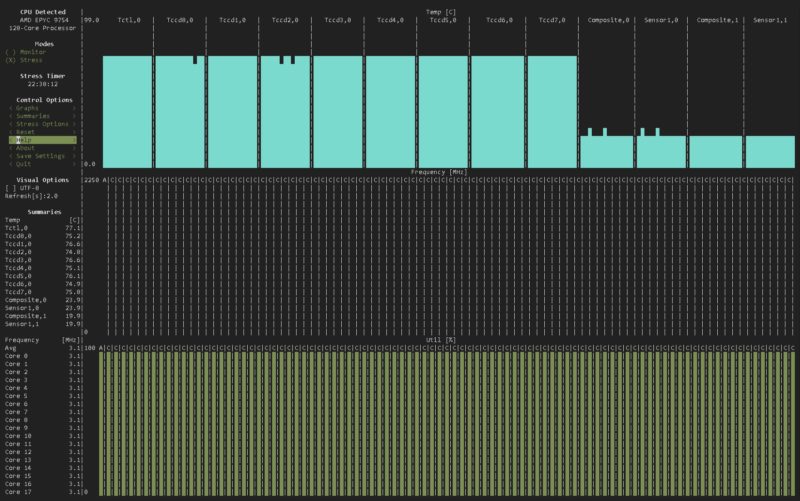

On the subject of key findings, this is a great screenshot. One of the criticisms of traditional x86 designs is that the cores vary widely in how they are able to turbo boost. Some cores will sit at low frequencies while subgroups burst higher.

To generate that 100% load across all 256 threads, we used stress-ng. Here is the shocking screenshot:

The specs say 3.1GHz. Unlike many other server CPUs, all 128 cores sat for hours running at 3.1GHz. This was even in a less-than-stellar cooling server where the temps were in the 75C range, yet all 256 threads were loaded and all 128 cores sat at 3.1GHz.
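For readers who want to try a similar check on their own Linux system, here is a minimal Python sketch. It is not the tooling we used for the screenshot. It assumes an x86 Linux host where `/proc/cpuinfo` exposes a `cpu MHz` line per logical CPU, it only captures a single snapshot, and the 50MHz tolerance is an arbitrary illustrative value.

```python
import re
from pathlib import Path
from typing import List, Tuple


def parse_core_mhz(cpuinfo_text: str) -> List[float]:
    """Extract every 'cpu MHz' value from /proc/cpuinfo-style text."""
    pattern = r"^cpu MHz\s*:\s*([\d.]+)"
    return [float(v) for v in re.findall(pattern, cpuinfo_text, flags=re.MULTILINE)]


def frequency_spread(mhz: List[float]) -> Tuple[float, float, float]:
    """Return (lowest, highest, highest - lowest) across logical CPUs."""
    if not mhz:
        raise ValueError("no 'cpu MHz' entries found")
    low, high = min(mhz), max(mhz)
    return low, high, high - low


def all_at_target(mhz: List[float], target_mhz: float, tolerance_mhz: float = 50.0) -> bool:
    """True if every logical CPU is within tolerance of the target clock."""
    return bool(mhz) and all(abs(f - target_mhz) <= tolerance_mhz for f in mhz)


if __name__ == "__main__":
    cpuinfo = Path("/proc/cpuinfo")
    readings = parse_core_mhz(cpuinfo.read_text()) if cpuinfo.exists() else []
    if readings:
        low, high, spread = frequency_spread(readings)
        print(f"{len(readings)} logical CPUs: min {low:.0f} MHz, max {high:.0f} MHz, spread {spread:.0f} MHz")
        print("All at 3.1GHz:", all_at_target(readings, target_mhz=3100.0))
    else:
        print("No per-CPU frequency data available on this system.")
```

Run it while a load generator such as stress-ng is active. A spread near zero across all logical CPUs is the behavior described above, whereas a wide spread indicates cores boosting unevenly.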

That commitment to a low maximum frequency actually helps ensure that there are not cores outpacing others, an important trait of cloud-native processors. What is more, this cloud-native processor is not a stripped-down core. It has AMD’s Zen 4-era ISA with support for AVX-512 and things like bfloat16 and VNNI for AI inference.
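One way to confirm that a given instance actually exposes those features is to look at the CPU flags Linux reports. The sketch below is a hedged example rather than a definitive test: it assumes an x86 Linux guest, uses the kernel’s flag names `avx512f`, `avx512_vnni`, and `avx512_bf16`, and the `WANTED` table and function names are our own. A hypervisor may also mask flags that the physical CPU supports.

```python
from pathlib import Path
from typing import Dict, Set

# Human-readable label -> flag name as it appears in /proc/cpuinfo on x86 Linux.
WANTED = {
    "AVX-512 Foundation": "avx512f",
    "AVX-512 VNNI": "avx512_vnni",
    "AVX-512 BF16": "avx512_bf16",
}


def parse_flags(cpuinfo_text: str) -> Set[str]:
    """Return the flag set from the first 'flags' line, or an empty set."""
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "flags":
            return set(value.split())
    return set()


def report(flags: Set[str]) -> Dict[str, bool]:
    """Map each feature label to whether its flag is present."""
    return {label: flag in flags for label, flag in WANTED.items()}


if __name__ == "__main__":
    cpuinfo = Path("/proc/cpuinfo")
    flags = parse_flags(cpuinfo.read_text()) if cpuinfo.exists() else set()
    for label, present in report(flags).items():
        print(f"{label}: {'yes' if present else 'no'}")
```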

Put together, the two big trade-offs one is making with Bergamo are:

- Half the L3 cache

- Lower maximum clock speed

Otherwise, this is a drop-in high-core count replacement in AMD’s portfolio.

Next, let us get to performance.

Nice review.

I noticed that you chose the CPU carrier colors in the performance graphs ;-)

Somehow the reduced cache reminds me of an Intel Celeron. Does anyone really want a slower processor aside from marketing types selling CPU instances in the cloud?

If so, then since the AmpereOne has 2MB L2 cache per core, a competitive x86 design should have 4MB L2 per dual SMT core rather than Bergamo’s 1MB.

Eric, having the full-size cache doesn’t count for anything in the cloud if it isn’t being made use of (i.e. the workload is spilling out of cache anyway, or it fits into the reduced L3).

And also, less cache on this one also means lower latency. It sure isn’t slower, it has Zen 4 IPC still and is better optimized for lower clocks.

It sure ain’t no Intel Celeron that’s for sure.

Also every CPU arch is different, it’s useless to say a competitive x86 needs a big L2 cache

Because of course the Bergamo walks all over the Altra Max anyway, while being far more efficient.

@Dave

Historical perspective on why the cache does and doesn’t matter.

Celeron 300A – 128KB cache @ 300MHz vs Pentium 3 with 512KB cache @ 450MHz

You could overclock that Celeron to 450MHz and 95% of your applications would function identically to the 3x cost Pentium III.

The 5% use case where cache does matter – was almost always a Database, and this shows clearly in the benchmarks here – MariaDB does much worse compared to the large-cache parts. And I saw this decades ago with the 300A as well, we were regularly deploying OC’d 300A’s to the IT department, but the guys doing a “data warehouse” coding a sync from an AS400 native accounting app to SQL servers that would be accessible for Excel users and a fancy front end …they in the test-labs were complaining. Sure enough cache mattered there. It ran, well enough really, but we bought a couple P3’s and it increased performance ~20% on the SQL’s in lab – end of the day the Xeon’s in the servers were plenty fine for production as they had the cache.

The AMD product sku’s are really really straightforward compared to Intel – I don’t think anyone is going to be confused on use-cases. Besides those big DB players are going to sell them pre-built or SaaS cloud solutions on these, they’ll do their homework on caches.

When there’s a new CPU release day, there’s 2 processes

a) Go to Phoronix look at charts, then come to STH for what the charts mean

b) Go to STH get the what and why, and if there’s something specific I’m going to Phoronix

I started 3 years ago on a) but now I’m on b)

“To me, the impact of the AMD EPYC Bergamo is hard to understate” Did you mean overstate?

I’m really wondering if those AI and AVX-512 instructions are worth the die area. I think for typical cloud workloads one really should optimize for workloads that are more typical, e.g. encryption is something that pretty much everyone does all the time while AI is a niche application and usually better served with accelerators.

Many, including me, do CPU inference in the cloud on cheap small instances. Having access to AVX-512/VNNI is a huge benefit as it decreases inference latency. The advantage of doing this on the CPU is that you don’t need to copy the data to and from an “accelerator”, which improves inference time for smaller models.

Nils, once the AVX-512 cat is out of the bag for cloud, it’ll get used. Even today gcc (C compiler) may optimize simple library operations (memcpy & co.) using AVX-512. And what about, for example, a JSON parser, which is kind of a cloud thing, right? See for yourself: https://lemire.me/blog/2022/05/25/parsing-json-faster-with-intel-avx-512/

@MDF

Thanks for the input. Indeed, having more cache is only useful if you can use it.

AMD has tailored the core specifically for the market and this could pay dividends especially on the consumer side which we might see soon (Phoenix 2 apparently?)

Although technically Phoenix APU cores are already Zen 4c without the optimization for the lower clock speeds (it’s already higher than 7713, mind!). But AMD could indeed take these cores, stick a smaller V-Cache on them, and use it as a second CCD, thus spawning a 24-core Zen heterogeneous monster.

I’m excited. More than can be said for intel’s bumbling efforts.

@MDF, the (Katmai) Pentium III’s 512kb L2 cache wasn’t full speed though. Started at half speed and as CPU freqs rose, the cache speed dropped to a third clock speed. Then we got Coppermine PIIIs around 700 MHz, which settled on 256kb on-die full speed cache.