At OCP Summit 2023, Intel showed some of the 2024-generation motherboards for its upcoming Granite Rapids and Sierra Forest platforms. Big changes are coming, some of which we have already previewed, such as in the PCH-Less Intel Granite Rapids motherboard from Computex 2023. Now, Intel is openly showing the new designs.

Intel Shows Granite Rapids Motherboards at OCP Summit 2023

Years ago, next-gen hardware littered the OCP Summit floor and keynotes. Now, vendors tend to be more reserved about showing off next-gen hardware, but Intel had its partners’ products on display at its booth to show off the OCP DC-MHS (Data Center Modular Hardware System) platform. For those wondering, the idea of DC-MHS is to standardize motherboards and connectivity to make it easier to drive volume and quality while still allowing flexibility.

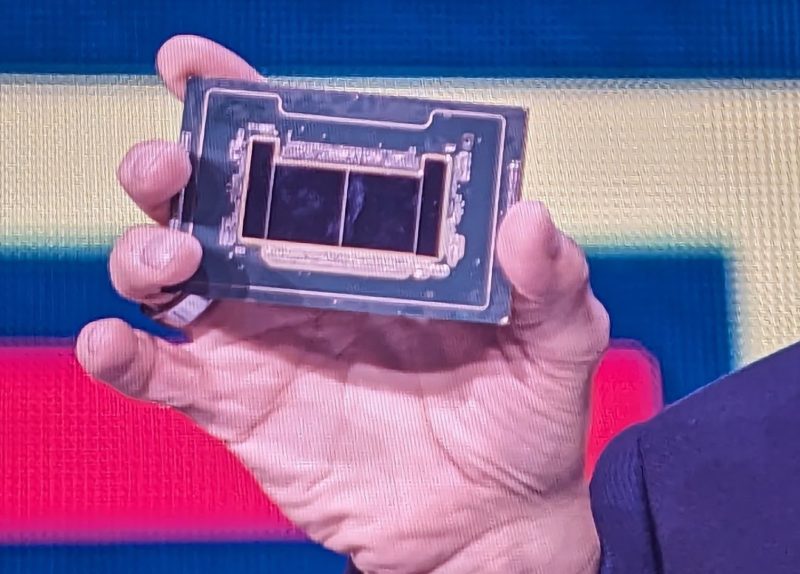

The fun part about that display is that it was focused on next-generation parts, such as the upcoming Intel Xeon Granite Rapids processors.

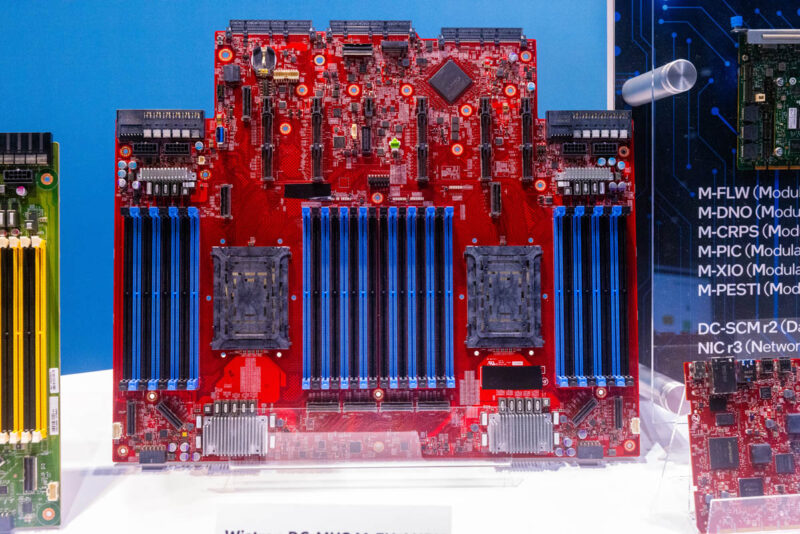

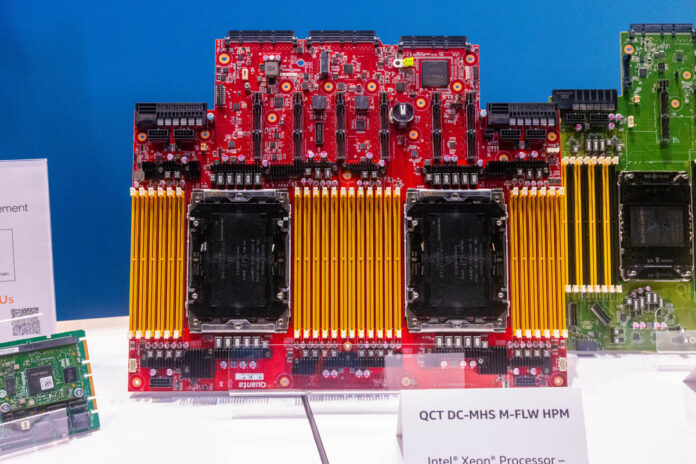

The first is a full-width motherboard from QCT. This is the 8-channel DDR5 platform for Granite Rapids. Something worth noting is that this board has 2 DIMMs per channel (2DPC), for sixteen DIMM slots per CPU. There are also MCIO connectors in front of and behind the CPUs for front connectivity (e.g., for NVMe SSDs) and rear connectivity (e.g., for expansion cards). There are also OCP connectors for NICs and DC-SCM modules.

There was also a Wistron version of a Granite Rapids 8-channel platform on the floor.

If this looks familiar to some of our readers, that is because we saw something very similar five months prior at Computex 2023.

One of the big changes here is that not only do the motherboards not have an onboard BMC because of the move to DC-SCM, but they also do not have a PCH. Instead, there is an Intel-Altera FPGA onboard. In 2024, we expect the PCH to (finally) leave Intel’s server platforms.

Intel Shows Sierra Forest Motherboards at OCP Summit 2023

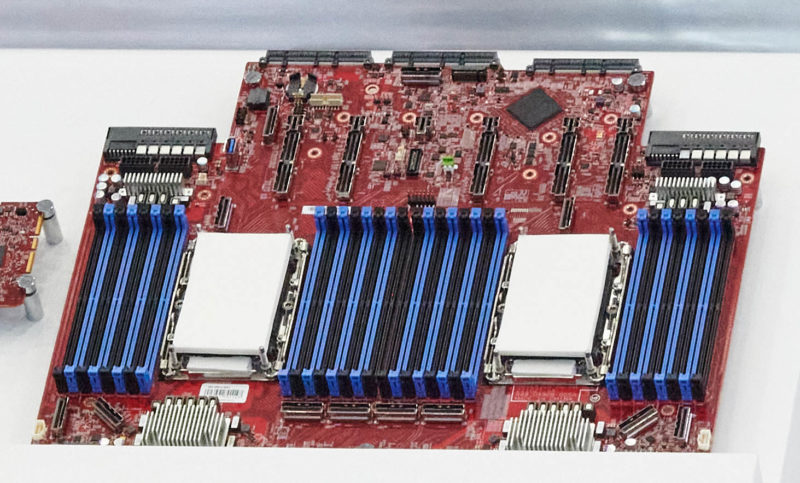

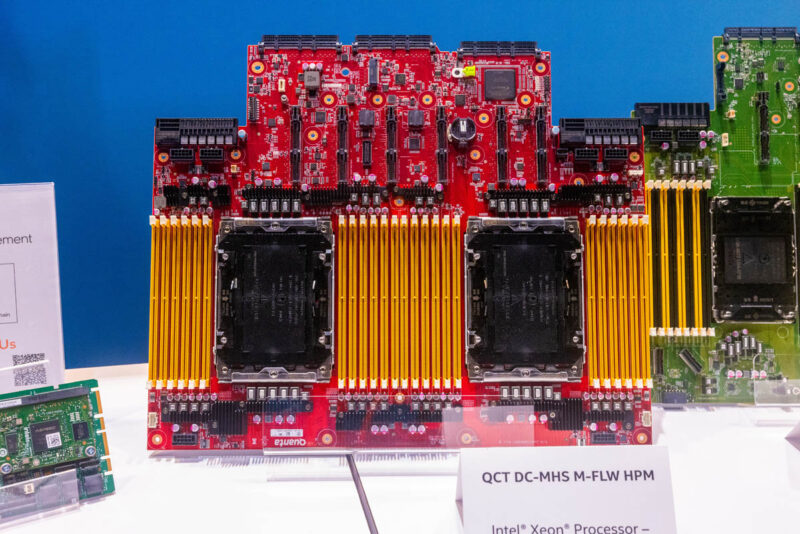

The other primary motherboard is the LGA7529 platform for Sierra Forest AP.

This is also a QCT DC-MHS platform. One can see the larger LGA7529 sockets and 12-channel DDR5 memory. If you saw our Why 2 DIMMs Per Channel Will Matter Less in Servers piece, this will immediately make sense. This Sierra Forest Xeon platform is designed for up to 288 cores per socket, or 576 per server, so it needs more memory bandwidth. As a result, with 12 channels of DDR5 and larger sockets, there is no room on a 19″ server motherboard for 2 DIMMs per channel. There are some AMD EPYC SP5 platforms with 2DPC, including the single-socket Supermicro AS-2015HS-TNR we reviewed and the offset Gigabyte 48 DIMM 2P AMD EPYC Genoa GPU servers, but 12-channel memory means 1DPC for dual-socket servers. If you want more memory, that is where CXL 2.0 Type-3 devices will come in for capacity and bandwidth expansion.
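To make the slot arithmetic concrete, here is a minimal sketch that multiplies channels by DIMMs per channel for the two QCT boards described in this piece. The `Platform` class and its field names are our own illustrative inventions, not anything from Intel or QCT; the channel and DPC figures are the ones quoted above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Platform:
    """Hypothetical helper type for counting DIMM slots on a server board."""
    name: str
    channels_per_socket: int
    dimms_per_channel: int
    sockets: int = 2  # both boards shown are dual-socket

    @property
    def dimm_slots_per_socket(self) -> int:
        return self.channels_per_socket * self.dimms_per_channel

    @property
    def dimm_slots_per_server(self) -> int:
        return self.dimm_slots_per_socket * self.sockets


# Figures taken from the article text
granite_rapids_8ch = Platform("Granite Rapids 8-channel, 2DPC", 8, 2)
sierra_forest_ap = Platform("Sierra Forest AP 12-channel, 1DPC", 12, 1)
# What a 12-channel, 2DPC dual-socket board would need to fit
twelve_channel_2dpc = Platform("12-channel, 2DPC", 12, 2)

for p in (granite_rapids_8ch, sierra_forest_ap, twelve_channel_2dpc):
    print(f"{p.name}: {p.dimm_slots_per_socket} slots per socket, "
          f"{p.dimm_slots_per_server} per server")
```

The last entry shows why 2DPC is hard at 12 channels: a dual-socket board would need 48 slots, which is the count on the offset Gigabyte design mentioned above.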

As with the Granite Rapids QCT platform, we get the same PSU, DC-SCM, and OCP NIC 3.0 slots on the back and cabled PCIe connections. Something slightly different is that we do not have front PCIe connectivity via MCIO connections on this motherboard.

Final Words

We also grabbed a few other images of the upcoming platforms from the show, as Intel had them stashed in a few places. Stay tuned for a bit more on this one as we go through some of the images we captured at OCP Summit 2023.

To us, removing the PCH is a big win as it modernizes the Intel Xeon platform. Folks who want SATA connectivity in 2024 are going to be using SAS4 expansion to JBODs for large disk arrays, so this makes sense. Also, seeing 12-channel memory platforms really highlights a big challenge. If you saw our Why DDR5 is Absolutely Necessary in Modern Servers or Updated AMD EPYC and Intel Xeon Core Counts Over Time pieces, you will have seen the core count versus memory bandwidth growth. We fully expect a 288-core Sierra Forest AP platform in 2024 to be competitive in raw CPU performance with 10+ servers being retired on a 5-year refresh cycle. That means many technologies like memory capacity, memory bandwidth, network bandwidth, and PCIe I/O will also need to find ways to massively scale.
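As a rough illustration of the core count versus memory bandwidth point, the sketch below estimates peak theoretical DDR5 bandwidth per core for a 12-channel, 288-core socket. The DDR5-6400 data rate is purely our assumption for the example, since no memory speed was disclosed with these boards, and the function names are hypothetical. The only fixed input is that a DDR5 channel carries 64 data bits (8 bytes) per transfer.

```python
def channel_bandwidth_gbs(mt_per_s: int, bus_bytes: int = 8) -> float:
    """Peak theoretical bandwidth of one DDR5 channel in GB/s.

    mt_per_s is the data rate in mega-transfers per second;
    bus_bytes is the data width per transfer (64 bits = 8 bytes).
    """
    return mt_per_s * bus_bytes / 1000


def bandwidth_per_core_gbs(channels: int, mt_per_s: int, cores: int) -> float:
    """Peak theoretical socket bandwidth divided evenly across cores."""
    return channels * channel_bandwidth_gbs(mt_per_s) / cores


ASSUMED_MT_PER_S = 6400  # assumption: DDR5-6400, not a disclosed platform spec

socket_bw = 12 * channel_bandwidth_gbs(ASSUMED_MT_PER_S)
per_core = bandwidth_per_core_gbs(channels=12, mt_per_s=ASSUMED_MT_PER_S, cores=288)

print(f"Per socket: {socket_bw:.1f} GB/s peak")
print(f"Per core:   {per_core:.2f} GB/s peak")
```

These are peak theoretical numbers, not measured throughput, but they show how quickly per-core bandwidth shrinks as core counts climb faster than channel counts.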

Would you say the next logical move for system memory is a large HBM stack then straight to CXL?

We can see that DIMMs are just taking up too much space now and even with 12 channels it’s still not crazy fast bandwidth when you compare it to HBM4.

I can see the hierarchy moving towards:

large L3 cache -> a separate stacked die of L4 cache (Adamantine adaptation) -> 256GB to 1TB of HBM4 system memory, all buffering a near unlimited amount of CXL 3.0 memory.