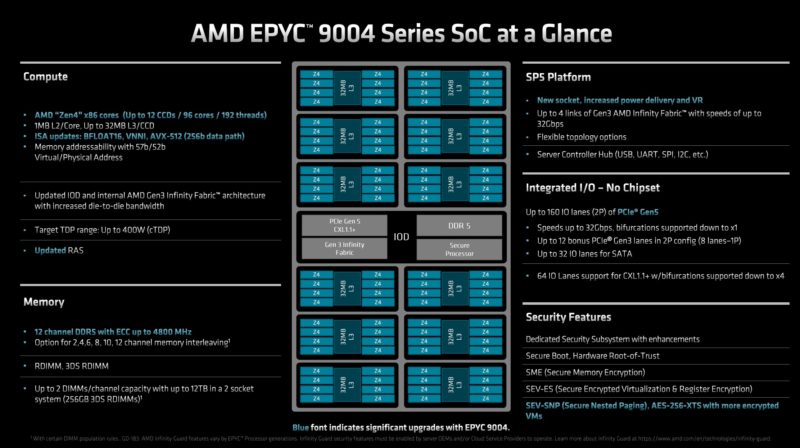

Something I have received many calls about from the financial folks is the two DIMMs per channel (2DPC) operation of AMD EPYC 9004 “Genoa” CPUs. Many have come to me with a perspective informed by logic we would have used in the 2012-2019 server era, but that applies less today and will apply less in the future. After doing dozens of calls, I wanted to just put this perspective out there.

What is 2DPC or 2 DIMMs Per Channel?

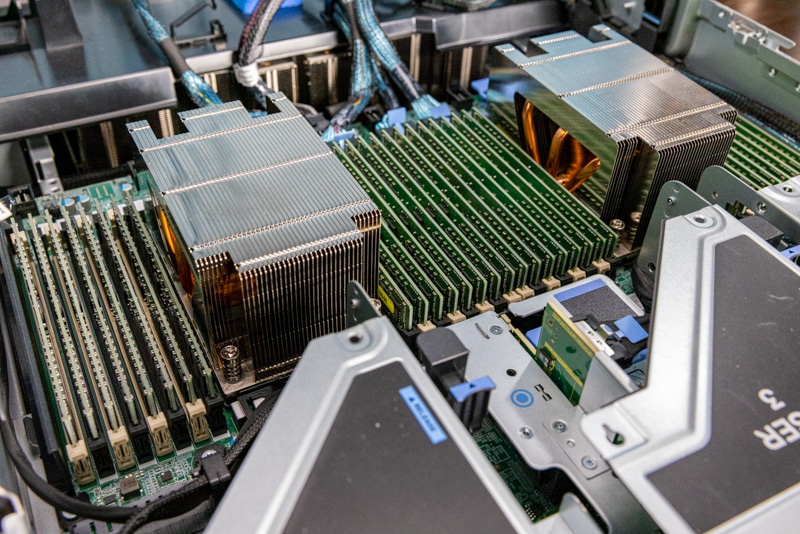

In modern CPUs, each DDR memory channel can host up to two DIMMs, but with a catch. The first DIMM in a channel activates that channel and provides its memory bandwidth, while the second DIMM in each channel is there to add capacity. In the image below from our Dell PowerEdge XE8545 review, we can see this easily.

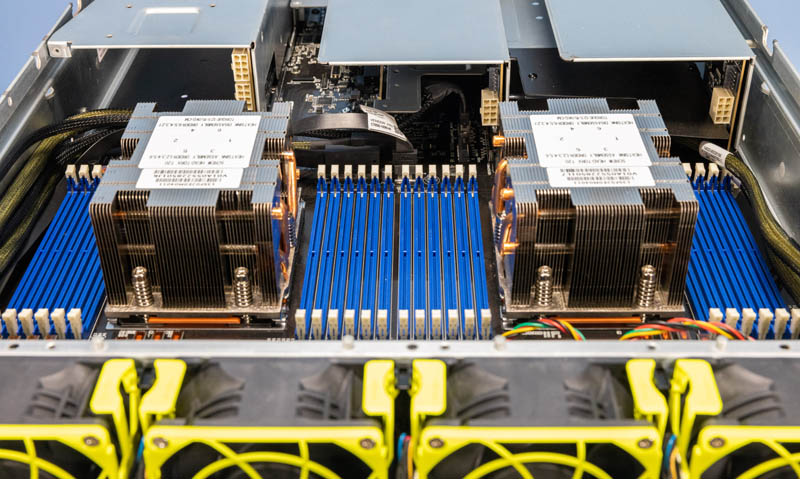

This Dell system is a fairly high-end system with four NVIDIA A100 SXM GPUs and two AMD EPYC 7763 CPUs (“Milan” generation). Here we can see a total of 32 DIMMs installed. Half are in white DDR4 slots, and half are in black DDR4 slots, alternating on each side of the CPU. This is an example of a 2DPC configuration.

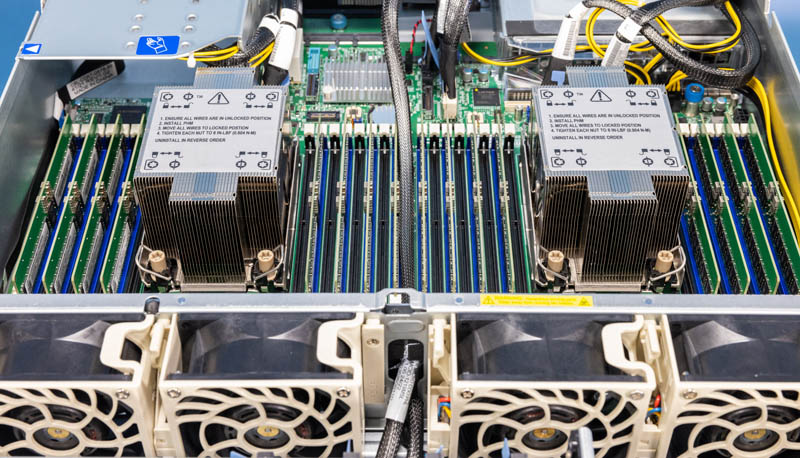

Here is an example of a newer Supermicro SYS-221H-TNR 4th Gen Intel Xeon Scalable “Sapphire Rapids” server. Here we can also see 32 DIMM slots, but only the blue slots have DDR5 memory modules installed. The black DDR5 slots are unpopulated. This is a one DIMM per channel, or 1DPC, configuration.

The simple math behind these two 32x DIMM configurations is:

- 32 DIMMs for 2 CPUs

- 2 CPUs each have 16 DIMM slots

- Each CPU has 8 memory channels

- So there are 2 DIMM slots per channel x 8 channels x 2 CPUs = 32 DIMMs
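The same slot math is a single multiplication. Here is a minimal Python sketch; `total_dimm_slots` is a hypothetical helper name used only for illustration, and it simply multiplies the three factors in the list above.

```python
def total_dimm_slots(dimms_per_channel: int, channels_per_cpu: int, cpus: int) -> int:
    """Total DIMM slots in a server: DIMMs per channel x channels per CPU x CPUs."""
    return dimms_per_channel * channels_per_cpu * cpus


# The two 32-slot servers above: 2DPC x 8 channels x 2 CPUs
print(total_dimm_slots(2, 8, 2))  # 32
# A dual-socket 12-channel platform at 2DPC would need 48 slots
print(total_dimm_slots(2, 12, 2))  # 48
```

The second call previews why 12-channel platforms are harder to build at 2DPC: the slot count grows by 50% in the same chassis width.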

At the same time, especially with the Supermicro photo above, something should be becoming obvious: 32 DIMM slots take up more width in the server than the two CPUs do, and a lot more.

The benefit of having this many DIMM slots is that one can add up to 8 DIMMs per CPU, or 16 total, to get the full memory bandwidth, then add more DIMMs to increase capacity. We will quickly note that a large portion of servers are sold with fewer DIMMs than memory channels.

The Dark Side of 2DPC: Memory Bandwidth

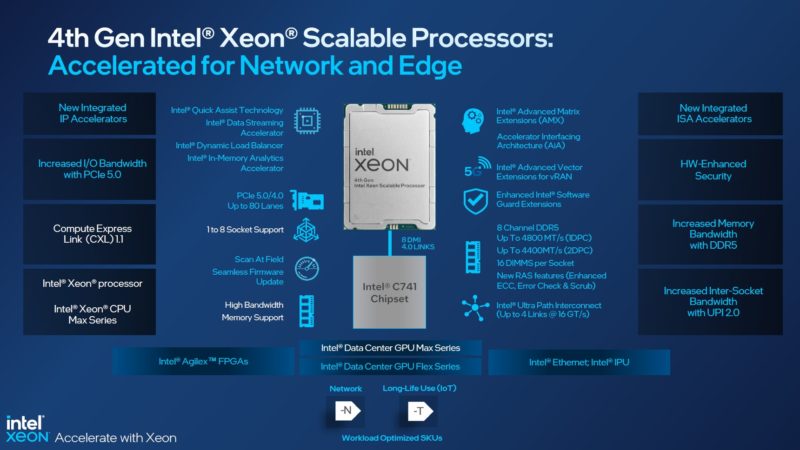

Adding that second DIMM is not “free” in most servers; there is a cost to get more capacity, and that cost is paid in terms of performance. Here is one of the first images from our in-depth 4th Gen Intel Xeon Scalable launch piece:

Focusing on memory support, the slide states:

- 8 channel DDR5

- Up to 4800MT/s (1DPC)

- Up to 4400MT/s (2DPC)

That means we lose around 8.3% of our memory performance, for all DIMMs, not just the incremental DIMMs past 8 per socket, by going from 1DPC to 2DPC. AMD does not call this out on its AMD EPYC 9004 “Genoa” slides, but it similarly downclocks DDR5 in 2DPC mode. For example, many vendors are only getting DDR5-4000 at 2DPC, a 16.7% decrease in performance.
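Both percentages come straight from the ratio of the 2DPC speed to the 1DPC speed. Here is a small sketch that reproduces them; `speed_loss_pct` is a hypothetical helper. Because every DIMM in the system drops to the 2DPC speed, the same percentage applies to total theoretical bandwidth, not just to the added DIMMs.

```python
def speed_loss_pct(speed_1dpc_mts: int, speed_2dpc_mts: int) -> float:
    """Percent of memory speed lost when a platform drops from 1DPC to 2DPC speeds."""
    return (1 - speed_2dpc_mts / speed_1dpc_mts) * 100


# Intel "Sapphire Rapids": DDR5-4800 at 1DPC, DDR5-4400 at 2DPC
print(f"{speed_loss_pct(4800, 4400):.1f}%")  # 8.3%
# A DDR5-4800 platform that only reaches DDR5-4000 at 2DPC
print(f"{speed_loss_pct(4800, 4000):.1f}%")  # 16.7%
```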

While we are using the latest Intel and AMD server processors here, this is very common and has happened for generations. Eventually, some vendors validate certain models to run at maximum speeds even in 2DPC mode, but this is an OEM extra that comes after validation, not a base feature.

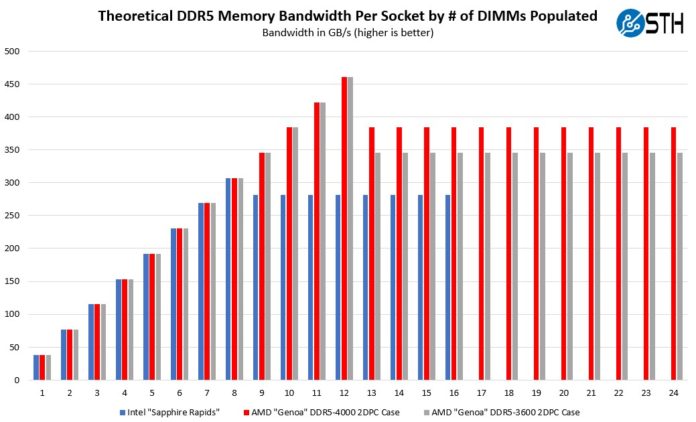

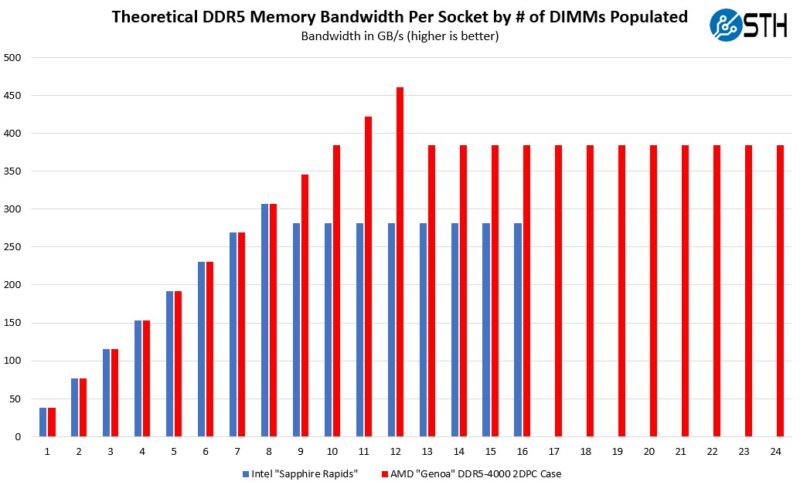

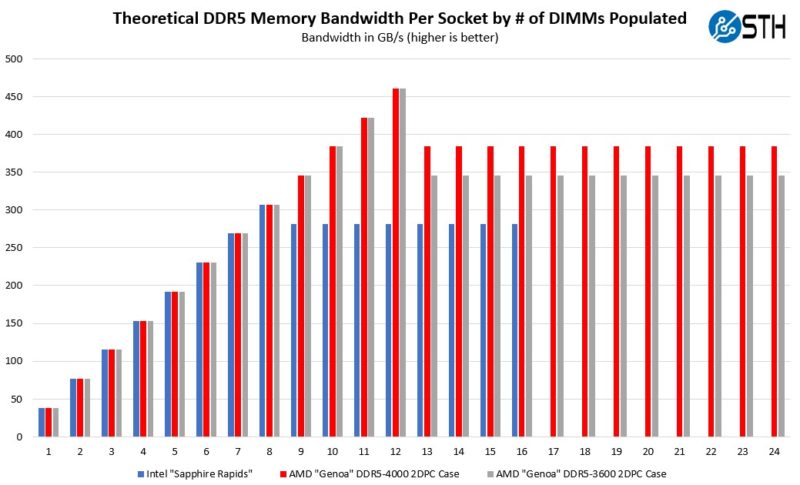

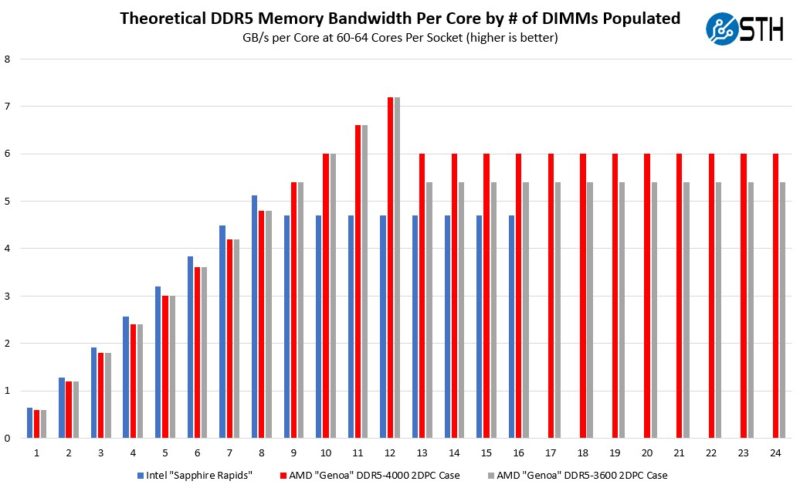

Taking this a step further, here is the impact of that DDR5 speed transition across memory population levels for both top-end Intel Xeon and AMD EPYC CPUs:

As you can see, increasing the DIMM count from 1 to 8 increases our available memory bandwidth on both CPUs. Going beyond 8x DDR5 DIMMs per Xeon CPU means we not only take an 8.3% hit, but also no longer get additional memory bandwidth by adding more DIMMs.

For AMD EPYC 9004 “Genoa” CPUs, bandwidth continues to increase up to 12 DIMMs, one for each of the 12 memory channels. Going to 13 DIMMs means we head off the 2DPC cliff and onto a 16.7% lower plateau. That lower plateau is still higher than where Intel lands because Genoa has 50% more memory channels.
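To make the shape of these curves concrete, here is a rough model of theoretical peak bandwidth per socket versus DIMM count. It is a sketch under stated assumptions, not how the charts were generated: it assumes DIMMs fill one per channel before any channel gets a second DIMM, that each DDR5 DIMM moves 8 bytes (64 data bits, ECC excluded) per transfer, and that adding any second DIMM drops every channel to the 2DPC speed. The `peak_bandwidth_gbs` helper and `PLATFORMS` table are hypothetical; the speeds are the ones cited in this article, including the DDR5-3600 2DPC speed some early systems are limited to.

```python
def peak_bandwidth_gbs(dimms: int, channels: int, speed_1dpc: int, speed_2dpc: int) -> float:
    """Theoretical peak memory bandwidth per socket in GB/s.

    Assumes one DIMM per channel is filled first, 8 bytes per transfer per
    channel, and that any 2DPC population drops every channel to speed_2dpc.
    """
    if not 1 <= dimms <= 2 * channels:
        raise ValueError("DIMM count must be between 1 and 2 x channels")
    active_channels = min(dimms, channels)
    speed_mts = speed_1dpc if dimms <= channels else speed_2dpc
    return active_channels * speed_mts * 8 / 1000  # MT/s x bytes -> GB/s


PLATFORMS = {
    # name: (memory channels, 1DPC MT/s, 2DPC MT/s)
    "Xeon Sapphire Rapids": (8, 4800, 4400),
    "EPYC Genoa (DDR5-4000 at 2DPC)": (12, 4800, 4000),
    "EPYC Genoa (DDR5-3600 at 2DPC)": (12, 4800, 3600),
}

for name, (channels, fast, slow) in PLATFORMS.items():
    curve = [peak_bandwidth_gbs(n, channels, fast, slow) for n in range(1, 2 * channels + 1)]
    print(f"{name}: 1DPC peak {max(curve[:channels]):.1f} GB/s, "
          f"2DPC plateau {curve[-1]:.1f} GB/s")
```

Under those assumptions, Xeon peaks at 307.2GB/s with 8 DIMMs and falls to 281.6GB/s at 2DPC, while Genoa peaks at 460.8GB/s with 12 DIMMs and falls to 384GB/s at DDR5-4000 or 345.6GB/s at DDR5-3600. Measured bandwidth in real systems will be lower than these theoretical figures.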

We have seen some early systems only support DDR5-3600 speeds. Here is what that looks like added to the chart in the AMD “Genoa” DDR5-3600 case.

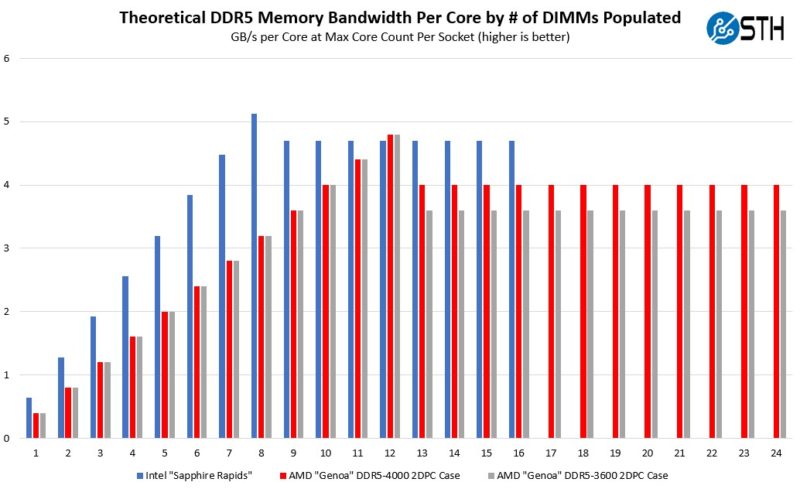

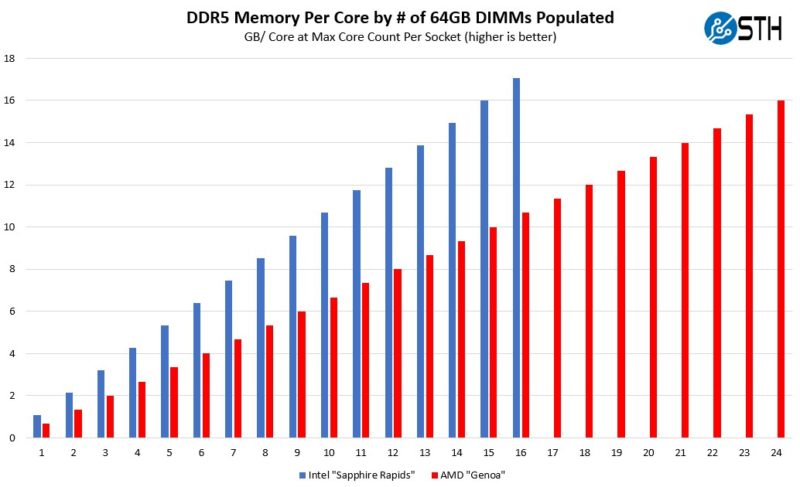

We can also look at this on a memory bandwidth per core basis by using the maximum core count per socket, 60 for Intel “Sapphire Rapids” and 96 for AMD EPYC “Genoa”:

One item to keep in mind with the above is that, for the same number of cores, we would need more than three Intel Xeon servers for every two AMD EPYC “Genoa” servers. If we normalize core counts more closely by comparing a 64-core EPYC “Genoa” with a 60-core Intel “Sapphire Rapids”, we would see a different memory bandwidth per core picture.

In that comparison, Intel’s per-core advantage with 1-8 DIMMs populated is driven by having four fewer cores.
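Here is a minimal sketch of the per-core math. It assumes theoretical peak bandwidth of populated channels x MT/s x 8 bytes, with every populated channel at DDR5-4800 1DPC; `bandwidth_per_core_gbs` is a hypothetical helper. The last lines illustrate the two points above: with the same 8 DIMMs, the 60-core Xeon edges out a 64-core Genoa only because it splits the bandwidth across four fewer cores, and matching the cores of two 96-core Genoa servers takes 3.2 Xeon servers.

```python
def bandwidth_per_core_gbs(populated_channels: int, speed_mts: int, cores: int) -> float:
    """Theoretical peak GB/s per core at 1DPC (8 bytes per transfer per channel)."""
    return populated_channels * speed_mts * 8 / 1000 / cores


# Fully populated 1DPC sockets at DDR5-4800
for name, channels, cores in [
    ("60-core Xeon Sapphire Rapids", 8, 60),
    ("96-core EPYC Genoa", 12, 96),
    ("64-core EPYC Genoa", 12, 64),
]:
    print(f"{name}: {bandwidth_per_core_gbs(channels, 4800, cores):.2f} GB/s per core")

# Same 8 DIMMs populated: Xeon wins per core only because of four fewer cores
print(bandwidth_per_core_gbs(8, 4800, 60) > bandwidth_per_core_gbs(8, 4800, 64))  # True

# Core-count normalization: Xeon servers needed per two 96-core Genoa servers
print(2 * 96 / 60)  # 3.2
```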

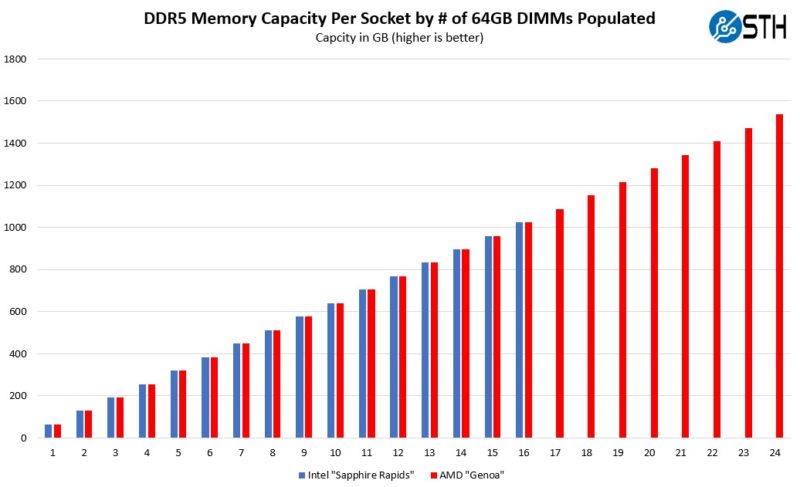

Looking at capacity, we are going to use 64GB DIMMs here, which are relatively inexpensive. One of the reasons to use 2DPC is to increase capacity, and to do so without moving to higher-cost 128GB or 256GB DIMMs.

Here we can see that up until 16 DIMMs, 1DPC and 2DPC are the same on both platforms. There are population rules for each server that change exactly how many DIMMs need to be populated, so this chart is really just the number of DIMMs per socket multiplied by capacity.

Often folks use 8GB/core or 4GB/vCPU, or on newer systems twice that. 8GB/core is what a 96-core EPYC “Genoa” with 12x 64GB DDR5 RDIMMs hits. One can get twice that by either using 2DPC with 24x 64GB DIMMs per socket or using 12x 128GB DIMMs.
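The capacity-per-core figures are simple division. This sketch uses a hypothetical `gb_per_core` helper and assumes a 96-core EPYC “Genoa” socket; for the vCPU line it assumes two SMT threads per core, which is how 8GB/core maps to 4GB/vCPU.

```python
def gb_per_core(dimms_per_socket: int, dimm_gb: int, cores_per_socket: int) -> float:
    """Memory capacity per core (or per vCPU) for one socket."""
    return dimms_per_socket * dimm_gb / cores_per_socket


CORES = 96  # 96-core EPYC "Genoa"
print(gb_per_core(12, 64, CORES))   # 1DPC, 12x 64GB -> 8.0
print(gb_per_core(24, 64, CORES))   # 2DPC, 24x 64GB -> 16.0
print(gb_per_core(12, 128, CORES))  # 1DPC, 12x 128GB -> 16.0
# Assumes 2 SMT threads per core, so vCPUs = 2 x cores
print(gb_per_core(12, 64, CORES * 2))  # 4.0
```

The two 16.0 results show the trade-off in the text: 2DPC with cheaper 64GB DIMMs and 1DPC with 128GB DIMMs reach the same capacity, but the 2DPC route does so at lower memory speed.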

Generally larger pools of cores and memory help lower the number of stranded resources in virtualized servers. That is one of the major reasons we are seeing a race to 100+ core CPUs.

Perhaps the most interesting points of the above are:

- 1-8 DIMMs: the theoretical bandwidth is similar between AMD and Intel

- 9-12 DIMMs: Intel increases capacity but lowers bandwidth, while AMD increases both capacity and bandwidth

- 13-16 DIMMs: both Intel and AMD increase capacity at lower 2DPC memory speeds

- 17-24 DIMMs: AMD continues to add capacity at the lower 2DPC memory speeds
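The four regimes above can be reproduced with a rough theoretical model. This sketch assumes 8 bytes per transfer per channel, one DIMM per channel filled first, any 2DPC population dropping every channel to the 2DPC speed (DDR5-4400 for Xeon, DDR5-4000 for Genoa), and 64GB DIMMs as in the capacity chart. `peak_gbs` and `regime` are hypothetical helpers.

```python
def peak_gbs(dimms: int, channels: int, speed_1dpc: int, speed_2dpc: int) -> float:
    """Theoretical peak GB/s per socket under the assumptions in the lead-in."""
    speed = speed_1dpc if dimms <= channels else speed_2dpc
    return min(dimms, channels) * speed * 8 / 1000


def regime(dimms: int, channels: int, speed_1dpc: int, speed_2dpc: int) -> str:
    """Classify a per-socket DIMM count relative to the platform's 1DPC peak."""
    if dimms > 2 * channels:
        return "not supported"
    if dimms <= channels:
        return "capacity and bandwidth rise"
    peak = peak_gbs(channels, channels, speed_1dpc, speed_2dpc)
    ratio = peak_gbs(dimms, channels, speed_1dpc, speed_2dpc) / peak
    return f"capacity rises, bandwidth at {ratio:.0%} of 1DPC peak"


for dimms in (8, 12, 16, 24):
    xeon = regime(dimms, 8, 4800, 4400)
    genoa = regime(dimms, 12, 4800, 4000)
    print(f"{dimms:>2} DIMMs ({dimms * 64}GB): Xeon {xeon}; Genoa {genoa}")
```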

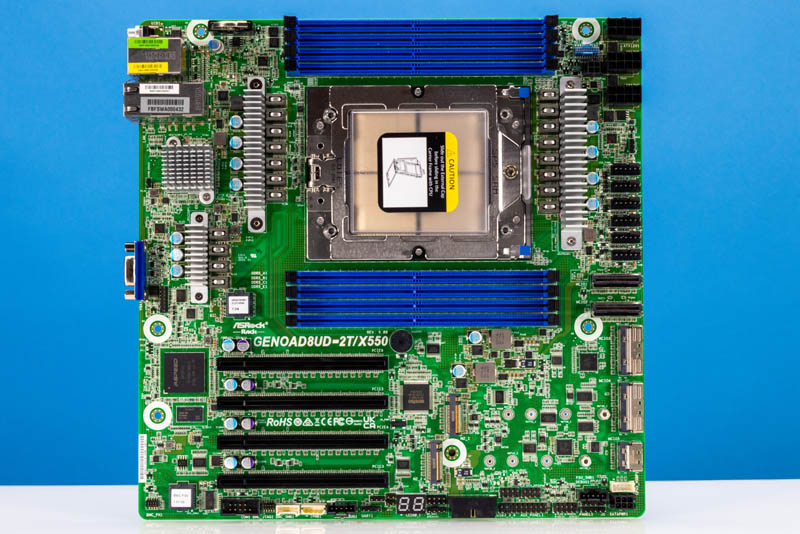

One may read the above and assume that AMD is clearly better, but there is a catch. Sometimes what one can theoretically add to a platform and what one can physically add are different. Not all motherboards support 16 or 24 DIMMs per CPU, and some support even fewer. An example is the ASRock Rack GENOAD8UD-2T/X550 we reviewed, which, due to form factor constraints, only has 8 of the 24 possible DIMM slots.

With newer, larger CPUs, physical form factors are a major obstacle.

12 Channels of DDR5 are Almost Too Big for Servers

Focusing on the AMD EPYC Genoa platform, and with the knowledge that in the future Intel Xeon will also adopt 12-channel memory, there is a big challenge. Physically fitting a socket with a larger CPU and 24 DIMMs per socket is very challenging. First, here is the size of an AMD EPYC 9654 96-core CPU versus a 4th Gen Intel Xeon Scalable that will have a maximum of 60 cores. The AMD EPYC is in its carrier to help the large CPU reliably install into sockets. Chips are simply getting bigger as more resources are brought on board.

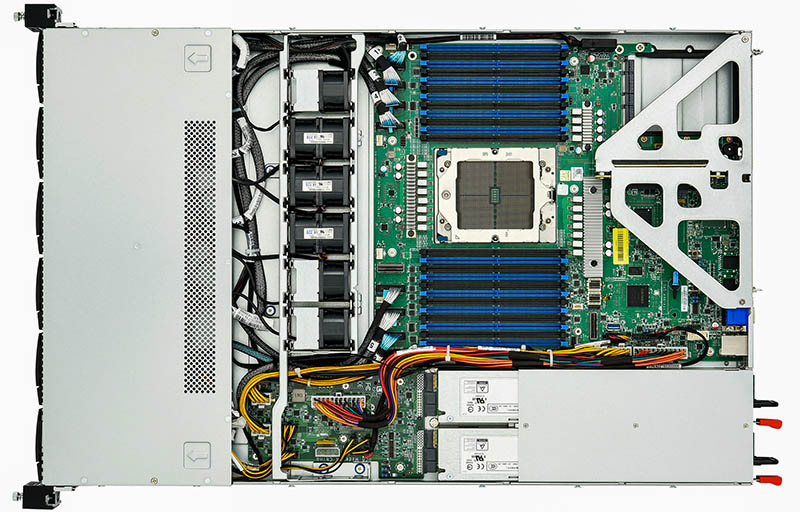

Translating that into a server, here is a 1DPC ASUS server, the ASUS RS720A-E12-RS24U we reviewed, where we can see the challenges server designers face. Side-by-side, there is only a little bit of room outside of the DDR5 DIMM slots and CPU sockets for cabling on the sides of the chassis (e.g. to get connectivity for front drive bays).

For a 1U view, here is an Ingrasys SV1120A we saw at SC22. Ingrasys is a Foxconn affiliate entity for the server industry. Again, we can see very little room in the chassis to go from 1DPC with 12 DIMMs per socket (24 total) to 2DPC with 24 DIMMs per socket (48 total). The blue DIMM slots above, or the white DIMM slots below, simply cannot double in quantity given the space required.

In higher-density 2U 4-node servers, the challenge is more acute as 12 DIMM slots barely fit alongside the AMD SP5 CPU socket.

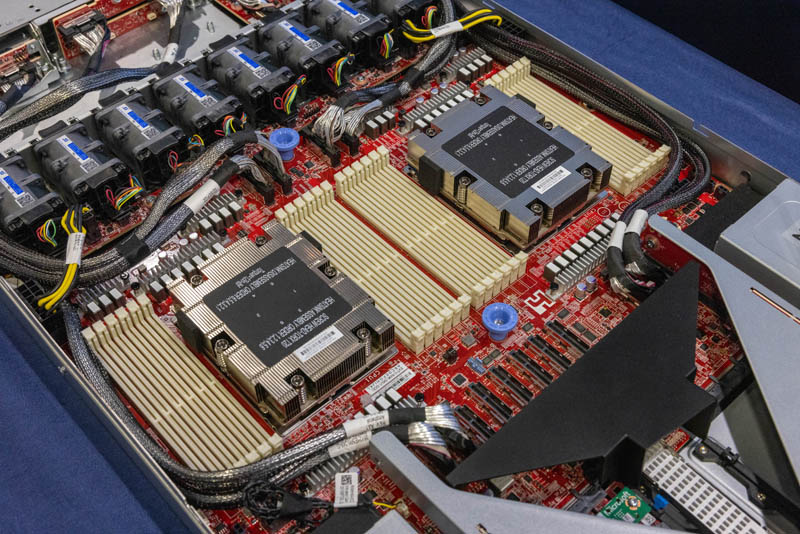

One way to solve the standard 19″ rack server width challenge of physically fitting 2DPC with a modern CPU is to use a single socket. Here is a Tyan Transport CX GC68AB8056 design with 24 DIMM slots and one AMD EPYC SP5 socket:

As one can clearly see, there is no way to fit a second socket with even 1DPC/ 12 DIMMs in the width of the chassis, let alone 2DPC/ 24 DIMMs on that second socket.

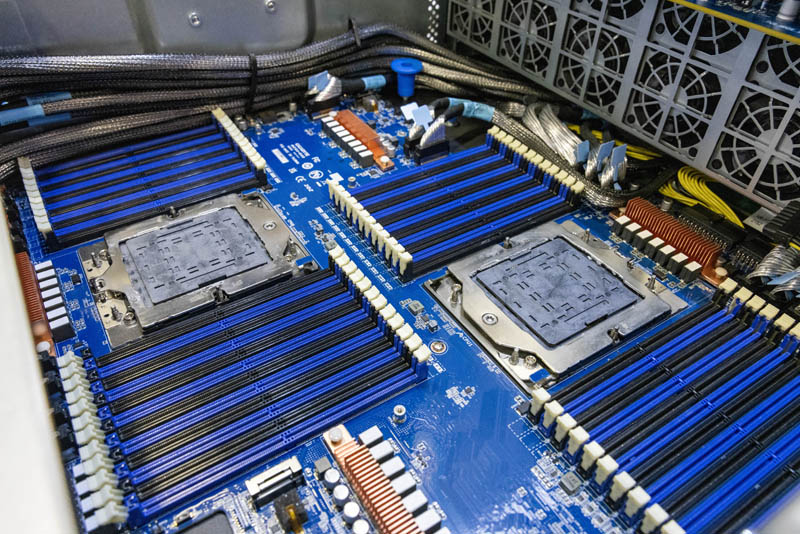

Looking at an example of a dual-socket, 2DPC implementation, we have seen designs like this Gigabyte G493-ZB0 system where the sockets are offset.

That seems like a logical design, but it adds its own challenges. This offset design adds at least 5.5-6″ in depth (140mm+) to the server, and probably a bit more due to clearances. The ASUS RS720A-E12-RS24U shown above, with NVMe SSDs, fans, and PCIe slots for GPUs, is a dual-socket 1DPC design, and it fits in just over 33″. So adding 5.5-6″ (or more) adds 16-18%+ to server depth. For many racks, a 39″ deep server is not an issue. For others, especially with zero-U PDUs installed behind servers, that can be a challenge.
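The depth math works out as follows. This sketch takes 33″ as the baseline (the text says just over 33″, so the true percentages are slightly lower) and uses the exact 25.4mm-per-inch conversion; `offset_depth_impact` is a hypothetical helper name.

```python
MM_PER_INCH = 25.4  # exact definition of the inch


def offset_depth_impact(base_depth_in: float, added_in: float) -> tuple[float, float, float]:
    """Return (new depth in inches, added depth in mm, percent depth increase)."""
    new_depth = base_depth_in + added_in
    added_mm = added_in * MM_PER_INCH
    pct_increase = added_in / base_depth_in * 100
    return new_depth, added_mm, pct_increase


BASE_DEPTH_IN = 33.0  # dual-socket 1DPC baseline, rounded down from "just over 33 inches"

for added in (5.5, 6.0):
    depth, mm, pct = offset_depth_impact(BASE_DEPTH_IN, added)
    print(f"+{added}in ({mm:.0f}mm): {depth:.1f}in deep, +{pct:.1f}%")
```

This gives roughly 140-152mm of added depth, a 38.5-39″ server, and a 16.7-18.2% increase, matching the figures above.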

Beyond that depth challenge, there is internal routing. PCIe lanes within a server terminate at CPUs. The offset design means that one CPU will be further from the rear PCIe slots in a server. Modern servers also often have cabled connections to front storage (NVMe) bays, and the rear CPU will have longer runs to those. With PCIe Gen5 signal reach challenges in PCB, this likely means more retimers and cabling need to be used instead of simple slots and risers.

Final Words

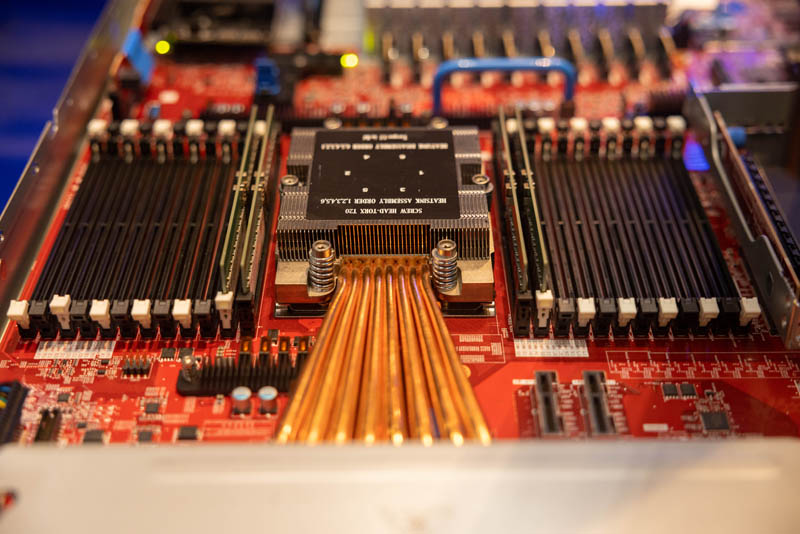

Over the past few weeks, I have had many questions come in about why we do not see many 2DPC AMD EPYC 9004 “Genoa” servers. To be frank, this is a capability I believe AMD should have launched with. At the same time, it is in the current AGESA code being sent to AMD partners. We also saw an example of 2DPC running live on the OCP Summit 2022 show floor back in October 2022. This single-socket photo from our Microsoft Shows AMD Genoa 1P Server Powered by Hydra at OCP Summit 2022 piece was actually running 2DPC, as one can see two memory channels populated, each with two DIMMs (white and black DDR5 slot tabs).

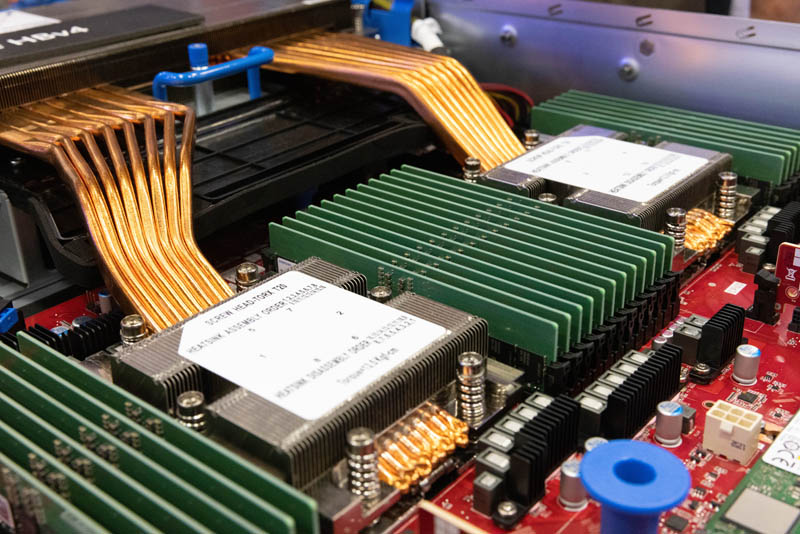

The above was notable because we were already testing Genoa and we knew it would not launch with 2DPC support. Needless to say, we saw that photo get shared around the industry. Even though Microsoft was running 2DPC live on a show floor in October 2022, its dual-socket designs still run out of chassis width due to the physical limitations of that many DIMM slots. Here is the Microsoft HBv4 platform, which is dual-socket 1DPC because of this constraint.

Intel and other vendors will run into this same challenge simply due to the real estate that DIMM slots take up. There is an answer to this in the industry: CXL memory will be in PCIe slots and EDSFF E3 slots. That allows lanes other than those feeding DDR5 DIMM slots to provide extra memory capacity, and since it runs on CXL/PCIe lanes instead of DDR5 memory controllers, it also increases the total available bandwidth to a system.

If you want to learn more about DDR5 memory bandwidth in current-generation servers, we also have a video on Why DDR5 is Absolutely Necessary for Modern Servers.

It has gotten interesting. How feasible is it to put the memory in a modular caddy/tray, similar to how IBM did it in their “IBM x3690 x5” servers?

I’d expect to see some designs with the motherboard floated a few cm off the case, with half the DIMM slots facing downwards. I’m sure there are many mechanical and cooling challenges as well as the expected trace routing complexity, but it should be doable.

Since they’re managing 24 slots + 2 CPU sockets single-sided, they should be able to do 48 + 2 double-sided. Possibly more, using the CPU area for a few more DIMMs on the back; but I imagine at least the first version of such a design would skip that, maybe using the CPU areas for cooling devices instead.

Marked as best answer. Great job Pat and team.

I always appreciate how reading STH is like seeing how this fits with that. They’re all interconnected. Almost everything else is like one spoonfed part after another. It’s a real benefit to have someone explain why 1DPC and not 2DPC, then bridge to CXL.

Hi Patrick

Do you have any idea what the availability of the 128GB and 256GB RDIMMs will look like?

Do you see any inclination for vendors to defer availability of such RDIMMs in order to empty their stock of 3DS DIMMs?

The way to go for memory seems to be vertically on top of the CPU, as AMD is already doing for 3D cache.

Potential solutions:

1. Use an ECC SO-DIMM form factor (so you can have 2DPC)

2. Have RDIMMs priced linearly (i.e. 256GB DIMMs at exactly twice the price of 128GB DIMMs)

3. Just move to LPDDR5 soldered onto Dell’s CAMM form factor. (This is the most forward looking, as it uses cell phone memory like the Nvidia Grace platform, but socketed.)

This is exactly relevant for an upcoming exercise, where performance is the first concern.

Would be nice to list, as a starting point, which AMD motherboards support 12 channels per CPU.

Why not just use taller memory, like how there are dual DIMMs for consumer platforms?

@Benny_rt2

Those cards have expander chips that add latency and reduce bandwidth (and also add cost) so they are only used where memory capacity outweighs all other concerns.

@TS

SODIMM and CAMM don’t solve the problem. The problem is that there are only so many memory chips you can put on a DIMM – usually 32 for RDIMMs. SODIMMs have a max of 16 memory chips, so even though they take up half the space, they also have half the capacity. CAMM also has limited area for memory chips because of its horizontal orientation.

3DS memory is the primary way to meet high capacity with 1DPC but it also costs exponentially more as you point out.

The 2DPC market is mainly there for people who want to save money by using more of the cheaper, lower density DIMMs and are willing to tradeoff bandwidth for the cost savings.

Why isn’t MCR mentioned in this article at all? It seems to solve more or less the same issues with fewer memory traces on the motherboard.

I see you doing a lot of DRAM capacity/core math, but 24 DIMMs per socket seems to be more for applications like large databases (e.g. SAP HANA) where DRAM capacity is often more important than direct DRAM bandwidth.

Having slower 2DPC bandwidth would still be faster than going through additional sockets (when you have to go from 1 to 2 or from 2 to 4 sockets just because of capacity). There is also a latency penalty with additional sockets.

@Yuno

MCR will not be much different from LRDIMMs when it comes to signal strength.

We need double-height DIMMs to start becoming standard in the server world.

IBM was also correct in that OMI (Open Memory Interface) was the solution.

The dumb part was that they wanted to move the Memory Controller onto the DIMM.

That’s a big “No No” for DIMM costs. That’s a bridge too far, or flying too close to the sun and having your wings melt off.

The OMI model that should be adopted is the one where the Memory Controller is soldered onto the Server MoBo, near the DIMM slots.

Small chiplet-based Memory Controllers with extra-short trace connections between the Memory Controller and the parallel connections on the DIMM would help increase and stabilize future higher-speed memory without going crazy and mounting the Memory Controller onto the DIMM.

The Serial Connection to the CPU would be standardized and use the OMI interface, ergo saving plenty of die area on the cIOD for AMD and easily opening up many of the pins on the CPU Socket for more PCIe lanes and not needing to save so many pins for DIMM slots.

Just wait for SP7 and its 16 channels, that will take up a lot of space.

Maybe angling the sockets to 30° and splitting them between both sides of the motherboard will be the solution. That’s probably better than rotating both the CPU and memory sockets 90° and implementing ‘cross flow’ cooling.