At SC22, Microsoft Azure was out with some high-end hardware. The company showed off its HBv4 instances, including AMD EPYC 9004 Genoa, as well as a large OAM chassis. We wanted to snap a few photos and show our readers since it shows the great lengths companies are going to in order to cool modern processors with air.

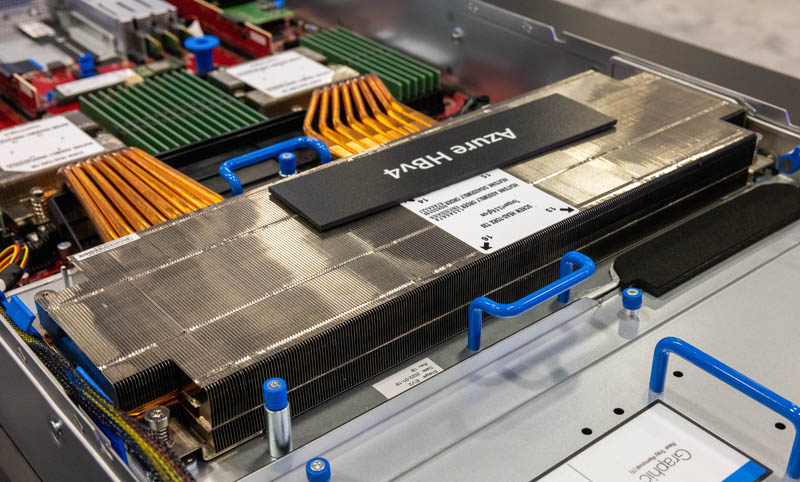

Microsoft Azure at SC22 and The Enormous AMD EPYC Genoa Heatsink on HBv4

In the Azure SC22 booth, Microsoft had on display the hardware powering its HBv4 instances. Microsoft was early in realizing that HPC could be done in the cloud and built an InfiniBand-backed infrastructure with high-end compute nodes to make cloud HPC a reality. HBv4 is the company’s AMD EPYC 9004 Genoa instance hardware.

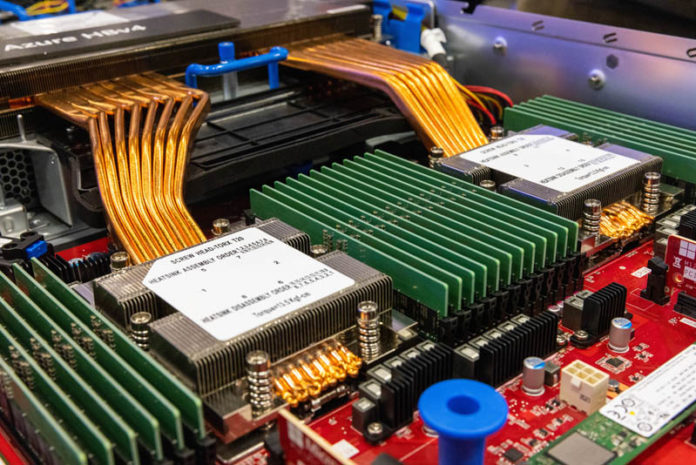

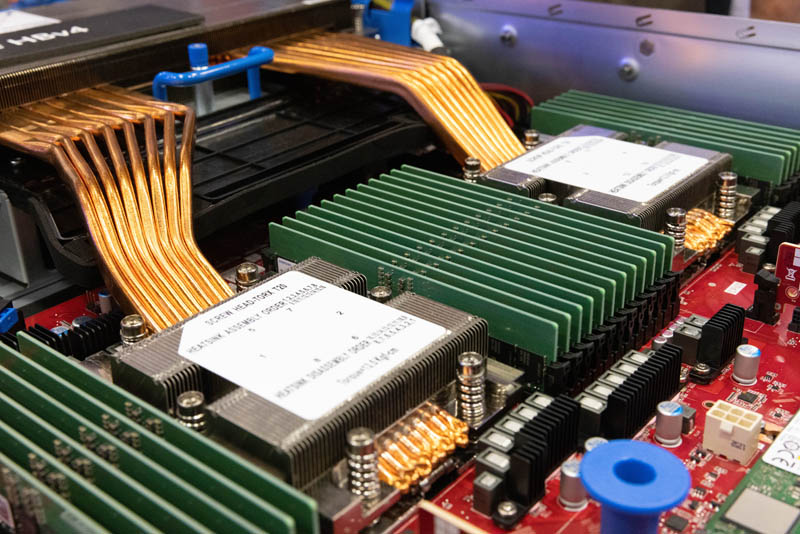

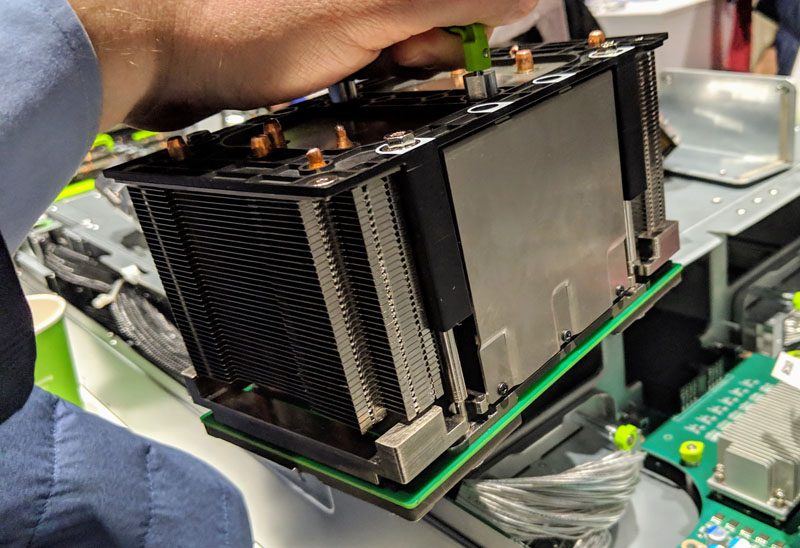

While having two large processors would normally be the most interesting feature, the cooling was the centerpiece. It is absolutely massive. The heatsink has heat pipes that route from both CPUs, and it stretches across almost the entire chassis width, except for some side area for cable routing.

This server also has its FPGA offload card. This card offloads the network data plane, control plane, and storage. While AWS is using its Nitro V5 DPU for many of the same functions, Microsoft is using an FPGA.

For an idea of how and why this works, you can see an example of when we set up something similar in This Changes Networking Intel IPU Hands-on with Big Spring Canyon.

In that piece, we used an Intel FPGA card to deliver remote storage, presenting it to the host server as NVMe devices and using an Intel IPU to manage the process. Microsoft’s solution also does networking, and the company has its secret sauce, but that article/video should help explain why Microsoft is using an FPGA.

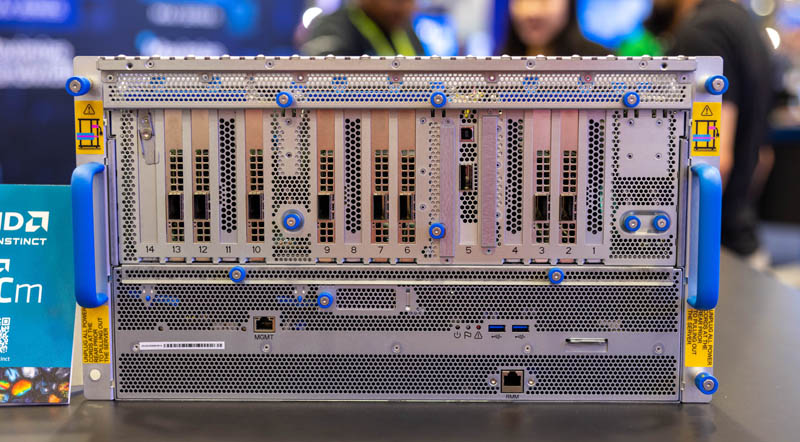

Microsoft also showed a large OAM box. One can see the AMD Instinct ROCm placard next to it and the massive number of I/O cards.

Inside, we were told these are AMD Instinct MI250X OAM modules.

It is just fun to see a huge array of heatsinks. If you saw our early 2019 Facebook Zion Accelerator Platform for OAM piece and remember this picture, the production version of OAMs is very similar, except the handles appear to have switched orientation.

It is fun seeing these technologies go from concept in February 2019 to production systems in November 2022.

Final Words

Microsoft has some exceptionally cool hardware. We recently featured these systems in our best of SC22 video.

There were a lot of AMD EPYC 9004 Genoa systems on the show floor, but the Microsoft heatsink was probably the standout.

Editor’s note: One day, it would be fun to get one of these big heatsinks and add it to the STH YouTube set background.