Amazon announced a new DPU, the AWS Nitro v5, at re:Invent 2022. Amazon does not call it a DPU, even though Nitro was the primary catalyst for the segment, but we need some common industry terminology. The new, faster chip was built by the company's Annapurna Labs team and shows impressive gains.

For background on AWS Nitro and why it is being used, see AWS Nitro the Big Cloud DPU Deployment Detailed.

AWS Nitro 5 Ups the Cloud DPU Game Again

AWS Nitro v5 is more than an incremental update. It doubles the transistor count and adds faster PCIe and DRAM. DPUs are going to keep growing over time to offer more services and handle higher speeds.

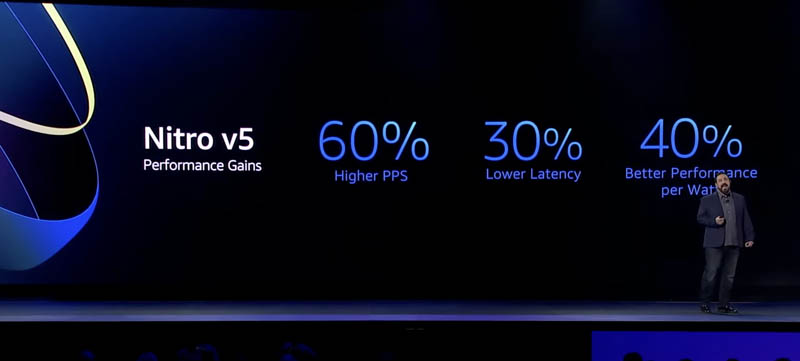

Doubling the transistor count and PCIe bandwidth, along with 50% higher memory speed, gives Nitro v5 60% higher performance with lower latency, and AWS says better performance per watt.

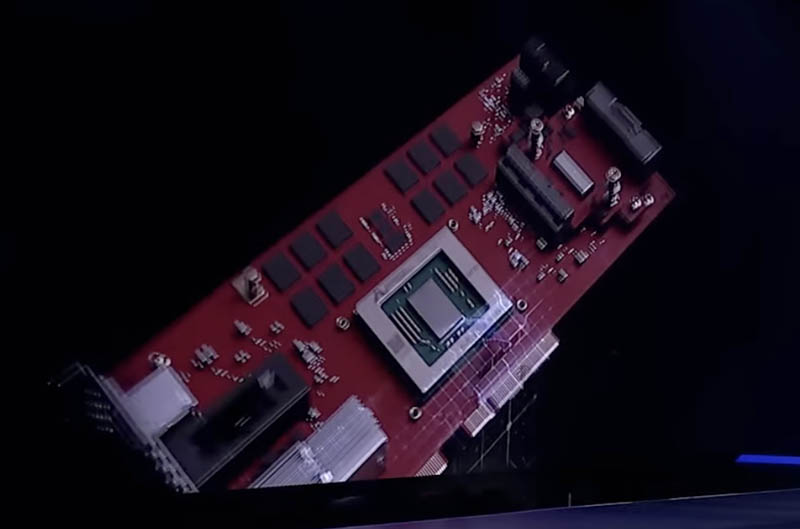

Here is a quick shot of the card, showing the chip, the DRAM, and an interesting edge connector configuration.

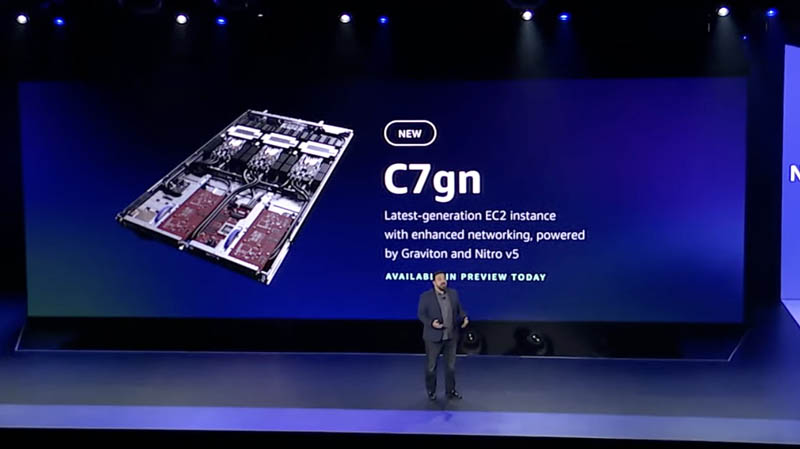

AWS is rolling Nitro v5 out with the C7gn instance, which pairs Graviton with Nitro v5.

AWS also discussed how Nitro lets it do things like stop using TCP and instead use SRD (Scalable Reliable Datagram), its own transport protocol running over Nitro, among other things.

Final Words

As AWS builds its integrated hardware and software into an increasingly proprietary stack, it is doing a lot of really interesting engineering. Nitro is now on its fifth public version and has lit a fire under the industry. Even so, while many vendors are discussing DPUs and we have started testing publicly available ones, we are not yet seeing them widely deployed outside of hyperscale clouds. AWS is that far ahead here, and it continues to innovate.

Instead of “AWS says lower performance per watt” maybe the article should read “AWS says greater performance per watt.”

What are the benefits of deploying them outside of something like a hyperscale cloud?

Is there a cost/benefit trade off at a certain size installation where they make sense?

Thank you, Eric Olson! I think I am almost over the effects of this chorizo burrito that has made me lose 11+ lbs in 4 days. The team is really holding it together this week while I have been ill.

I still haven’t seen a real point to DPUs outside of multi-tenant environments, or *maybe* cases where the main OS isn’t flexible enough for organizational reasons. They’re very similar to hardware RAID cards from the ’90s and ’00s. In theory those were better, but they were mostly only a win for Windows and other OSes with terrible built-in RAID support, and there were a lot of workloads where it was trivially easy to DoS the RAID CPU and get horrible performance. Even when they worked, it still wasn’t clear that they were a financial win vs. adding a few extra percent of host CPU.

For clouds (and similar), the ability to move giant security-critical network and storage stacks onto their own, isolated processor makes a lot of sense. It also makes bare-metal / whole-machine VMs a lot less complicated. But for enterprise (or home lab) use, I have a hard time seeing the advantage. There are lots of cool things that DPUs can do, but I haven’t seen one that couldn’t be done easier/cheaper/faster with a ConnectX-ish NIC and host OS software.