Today we are going to look at a great AMD EPYC server. The Supermicro AS-2015CS-TNR is a single-socket AMD EPYC 9004 “Genoa” server, and this was the first time we had tested this particular configuration: 384GB of memory with an 84-core CPU. While we are testing with the 84-core AMD EPYC “Genoa” part, we will note that this server also works with the AMD EPYC “Bergamo” line with up to 128 cores, but the review embargo for those chips has not lifted. Still, 84 cores and 384GB was a fun configuration to test. Let us take a look at the server.

Supermicro AS-2015CS-TNR External Hardware Overview

The server itself is a 2U chassis from the company’s CloudDC line. CloudDC is Supermicro’s scale-out line that adopts a lower-cost approach than some of its higher-end servers. This is one of those segments where companies like Dell, HPE, and Lenovo struggle, since they tend to compete with higher-priced, more proprietary offerings, which CloudDC is designed to undercut.

At 648mm or 25.5″ deep, this is designed to fit in shorter-depth low-cost hosting racks.

The 2U form factor has 12x 3.5″ bays. These are capable of NVMe, SATA, and SAS (with a controller). While some may prefer dense 2.5″ configurations, we are moving into the era of 30TB and 60TB NVMe SSDs, so having a smaller number of NVMe drives per server is going to become more commonplace. Disk will still be less expensive.

On the left side of the chassis, we can see the rack ear with the power button and status LEDs. We can also see disk 0 with an orange tab on the carrier to denote NVMe.

Here is a Kioxia SSD in one of the 3.5″ trays. One downside of using a 2.5″ drive in a 3.5″ bay is that it requires screws, so one cannot have a tool-less installation as with 3.5″ drives. That is the price of flexibility.

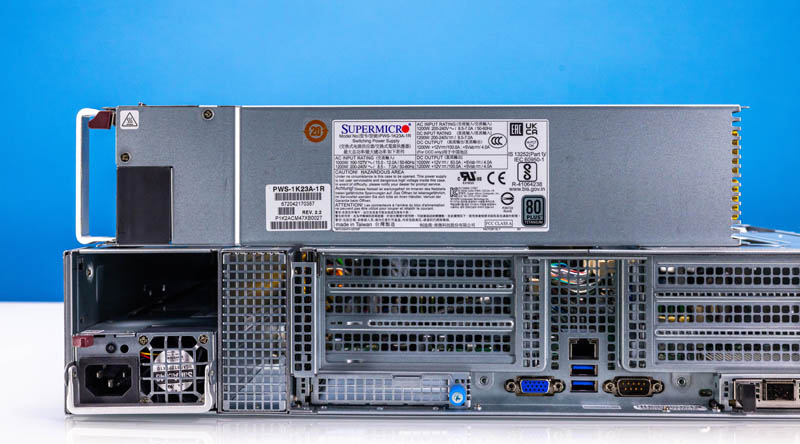

The rear of the server is quite different from many Supermicro designs in the past. It is laid out almost symmetrically, except for the power supplies.

As we would expect from this class of server, we have two redundant power supplies.

Each power supply is a 1.2kW 80Plus Titanium unit for high efficiency. As we will see in our power consumption section, these PSUs are plenty for the server, even with expansion cards added.

In the center of the chassis, we have the rear I/O with a management port, two USB ports, a VGA port, and a serial port.

On either side of that, we have a stack of PCIe Gen5 slots via risers and an OCP NIC 3.0 slot.

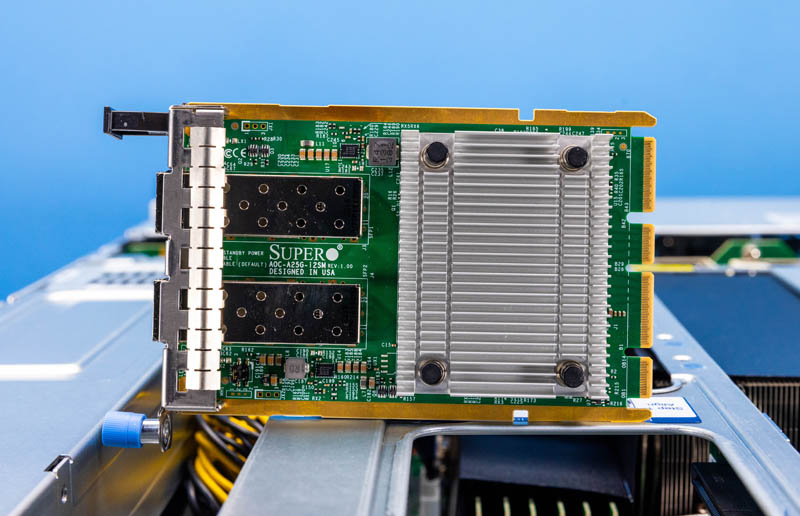

Supermicro calls its OCP NIC 3.0 cards AIOM modules, and there are two slots. The 25GbE AOC-A25G-i2SM NIC installed in one of these slots is an SFF with Pull Tab design. You can see our guide, “OCP NIC 3.0 Form Factors: The Quick Guide,” to learn more about the different types. This is the type that server vendors focused on low-cost, easy maintenance have adopted, so it is common in cloud data centers. In the industry, you will see companies focused on extracting large service revenues, such as Dell and HPE, adopt the internal lock OCP NIC 3.0 form factor. The internal lock requires a chassis to be pulled from a rack, opened up, and often risers removed to be able to service the NIC. Supermicro’s design, in contrast, can be serviced from the hot aisle in the data center without moving the server. That is why Supermicro and cloud providers prefer this mechanism.

We are going to discuss the riser setup as part of our internal overview. Next, let us take a look inside the system.

very nice review, but

nvme with pci-e v3 x2 in 2023, in a server with cpu supporting pci-e v5?

Why is price never mentioned in these articles?

Mentioning price would be useful, but frankly for this type of unit there really isn’t “a” price. You talk to your supplier and spec out the machine you want, and they give you a quote. It’s higher than you want so you ask a different supplier who gives you a different quote. After a couple rounds of back and forth you end up buying from one of them, for about half what the “asking” price would otherwise have been.

Or more, or less, depending on how large of a customer you are.

@erik why would you waste PCIe 5 lanes on boot drives? Because that is what that m.2 is useful for.

The boot drives are off the PCH lanes. They’re on slow lanes because it’s 2023 and nobody wants to waste Gen5 lanes on boot drives

@BadCo and @GregUT….i dont need pci-e v5 boot drive. that is indeed waste

but at least pci-e v3 x4 or pci-e v4 x4.

@erik You have to think of the EPYC CPU design to understand what you’re working with. There are (8) PCIe 5.0 x16 controllers for a total of 128 high speed PCIe lanes. Those all get routed to high speed stuff. Typically a few expansion slots and an OCP NIC or two, and then the rest go to cable connectors to route to drive bays. Whether or not you’re using all of that, the board designer has to route them that way to not waste any high speed I/O.

In addition to the 128 fast PCIe lanes, SP5 processors also have 8 slow “bonus” PCIe lanes which are limited to PCIe 3.0 speed. They can’t do PCIe 4.0. And you only get 8 of them. The platform needs a BMC, so you immediately have to use a PCIe lane on the BMC, so now you have 7 PCIe lanes left. You could do one PCIe 3.0 x4 M.2, but then people bitch that you only have one M.2. So instead of one PCIe 3.0 x4 M.2, the design guy puts down two 3.0 x2 M.2 ports instead and you can do some kind of redundancy on your boot drives.

You just run out of PCIe lanes. 136 total PCIe lanes seems like a lot until you sit down and try to lay out a board that you have to reuse in as many different servers as possible and you realize that boot drive performance doesn’t matter.
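
The lane arithmetic in the comment above can be sketched in a few lines of Python. This is only an illustration of the numbers quoted in the thread (eight x16 controllers, eight PCIe 3.0 “bonus” lanes, one lane for the BMC). The function names are hypothetical, and the per-lane throughput figure is an assumption taken from the PCIe 3.0 signaling rate (8 GT/s with 128b/130b encoding), not from the review itself.

```python
# Figures quoted in the comment thread for an SP5 platform.
FAST_CONTROLLERS = 8        # PCIe 5.0 x16 controllers
LANES_PER_CONTROLLER = 16
BONUS_LANES = 8             # slow lanes, limited to PCIe 3.0
BMC_LANES = 1               # one bonus lane goes to the BMC

# Assumption (not from the review): PCIe 3.0 runs at 8 GT/s per lane
# with 128b/130b encoding, before protocol overhead.
GEN3_GBYTES_PER_LANE = 8 * (128 / 130) / 8


def lane_budget(m2_slots: int, lanes_per_m2: int) -> dict:
    """Return the lane totals and the bonus lanes left after BMC and M.2."""
    fast = FAST_CONTROLLERS * LANES_PER_CONTROLLER
    available = BONUS_LANES - BMC_LANES
    used = m2_slots * lanes_per_m2
    if used > available:
        raise ValueError(
            f"{m2_slots} x{lanes_per_m2} M.2 needs {used} lanes, "
            f"only {available} bonus lanes remain after the BMC"
        )
    return {
        "fast_lanes": fast,
        "total_lanes": fast + BONUS_LANES,
        "bonus_lanes_left": available - used,
    }


def gen3_bandwidth(lanes: int) -> float:
    """Approximate raw PCIe 3.0 bandwidth in GB/s for a given link width."""
    return lanes * GEN3_GBYTES_PER_LANE


if __name__ == "__main__":
    print(lane_budget(m2_slots=2, lanes_per_m2=2))  # two x2 boot drives
    print(lane_budget(m2_slots=1, lanes_per_m2=4))  # one x4 boot drive
    print(f"x2 link: ~{gen3_bandwidth(2):.2f} GB/s")
```

Calling `lane_budget(2, 4)` raises a `ValueError`, which is the constraint the commenter describes: two x4 M.2 slots would need all eight bonus lanes, but only seven remain once the BMC has taken one.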