Supermicro AS-2015CS-TNR Internal Hardware Overview

We are going to work from front to back in the system, starting with the drive backplanes.

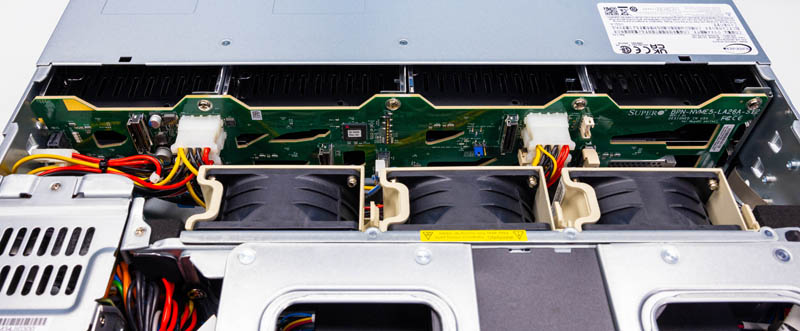

The backplanes in this server are designed to allow airflow while also handling both 3.5″ and 2.5″ drives. Notably, instead of the proprietary power connector we would find here on a Lenovo, Dell, or HPE server, we have standard 4-pin Molex connectors. That is a great example of how CloudDC is designed to be less proprietary and lower cost than other systems on the market.

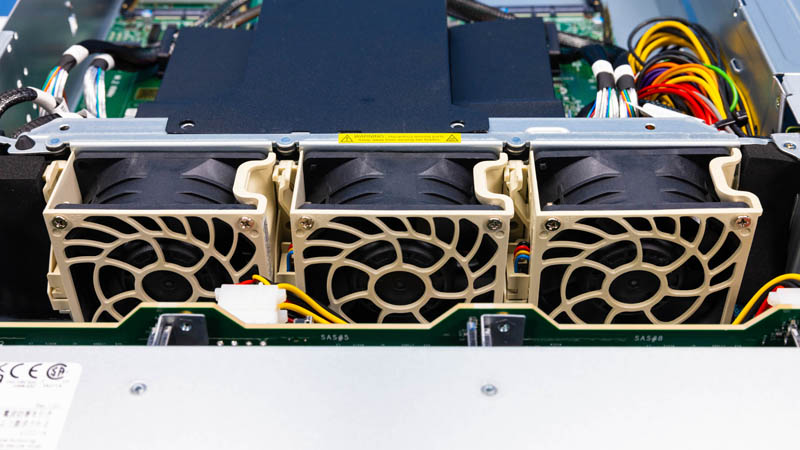

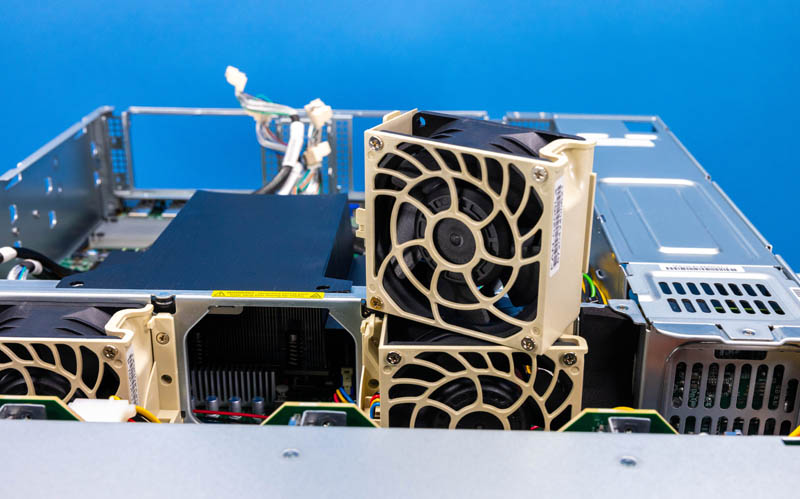

Behind the backplane, we have a small array of only three fans. Three fans are enough because this server, by modern standards, uses only a modest amount of power.

Even though there is a lot of cost optimization in this server, the fans are in hot-swap carriers that are Supermicro beige.

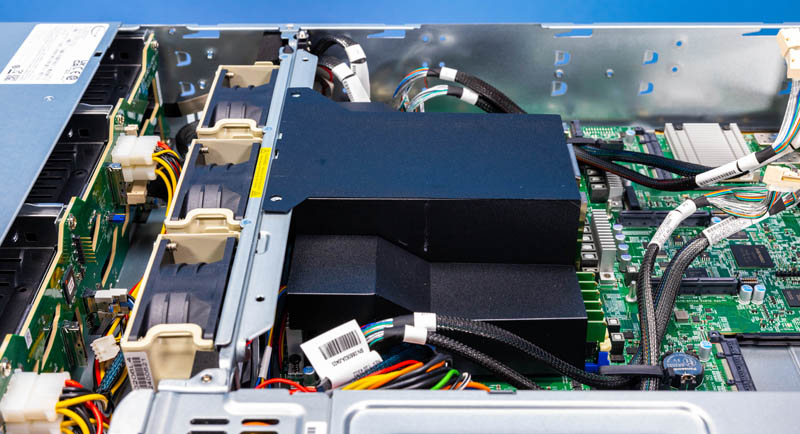

The airflow guide is a flexible unit. We have a strong preference for hard plastic airflow guides since they are usually easier to seat.

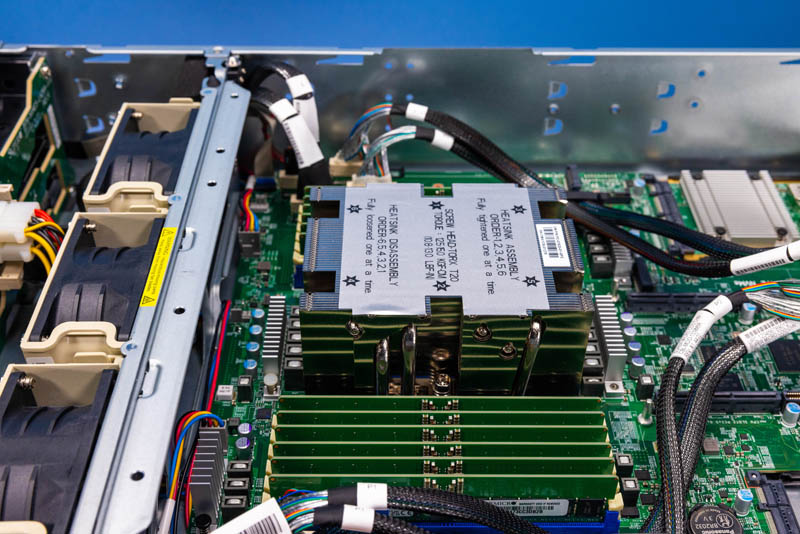

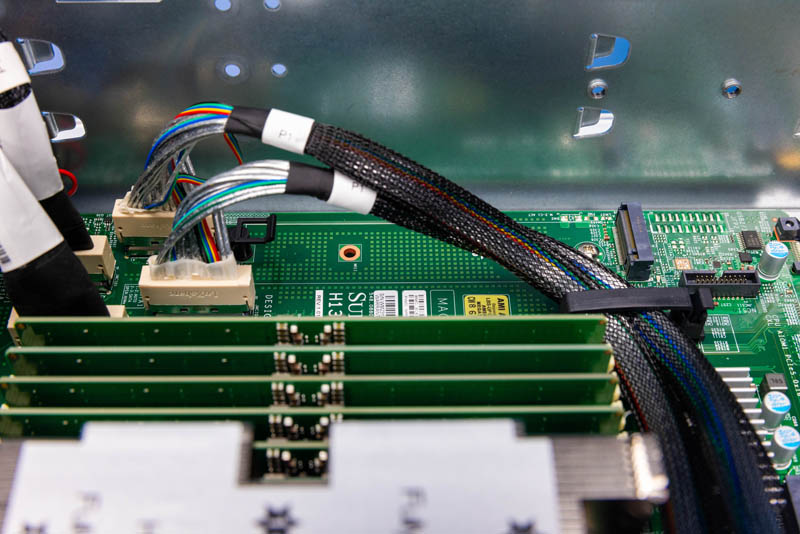

Underneath that airflow guide are the CPU socket and memory. This is a 12-slot DDR5 design, or one DIMM per channel (1DPC) across the 12 memory channels of AMD SP5. With the extra channels (12 on SP5 versus 8 on the previous-generation SP3), 1DPC is becoming more common. We recently did a piece on Why 2 DIMMs Per Channel Will Matter Less in Servers.

The CPU socket is AMD SP5. This houses parts like AMD EPYC “Genoa” mainstream parts, “Genoa-X” parts with up to 48MB of L3 cache per core or over 1.1GB total, and even the new AMD EPYC Bergamo parts with up to 128 cores per socket. While this server may seem dainty to some with a single socket, the fact is that one can have twice the number of threads in this server as an Ampere Altra Arm server (and more than 2x the performance) and more than twice the core count of Intel Xeon “Sapphire Rapids” single socket servers.
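The thread, core, and cache comparisons above reduce to simple arithmetic. The minimal Python sketch below checks them; the SKU names and figures (EPYC 9754, Altra Max M128-30, Xeon Platinum 8490H, EPYC 9184X, and EPYC 9684X) are assumed top-bin values from public spec sheets, not numbers given in this review.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Cpu:
    name: str
    cores: int
    threads_per_core: int  # 2 with SMT, 1 without

    @property
    def threads(self) -> int:
        return self.cores * self.threads_per_core


# Assumed top-bin figures from public spec sheets (not from this review).
BERGAMO = Cpu("AMD EPYC 9754 (Bergamo)", cores=128, threads_per_core=2)
ALTRA_MAX = Cpu("Ampere Altra Max M128-30", cores=128, threads_per_core=1)
SAPPHIRE_RAPIDS = Cpu("Intel Xeon Platinum 8490H", cores=60, threads_per_core=2)

# Assumed Genoa-X SKUs: name -> (cores, total L3 in MB)
GENOA_X = {"EPYC 9184X": (16, 768), "EPYC 9684X": (96, 1152)}


def l3_per_core_mb(sku: str) -> float:
    """L3 cache per core in MB for one of the assumed Genoa-X SKUs."""
    cores, l3_mb = GENOA_X[sku]
    return l3_mb / cores


if __name__ == "__main__":
    print(BERGAMO.threads, ALTRA_MAX.threads)      # 256 128
    print(BERGAMO.cores / SAPPHIRE_RAPIDS.cores)   # 2.1333333333333333
    print(l3_per_core_mb("EPYC 9184X"))            # 48.0
    print(GENOA_X["EPYC 9684X"][1])                # 1152 (MB), i.e. over 1.1GB
```

The Bergamo versus Sapphire Rapids comparison in the text is on core count; since both use 2-way SMT, the thread ratio comes out the same.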

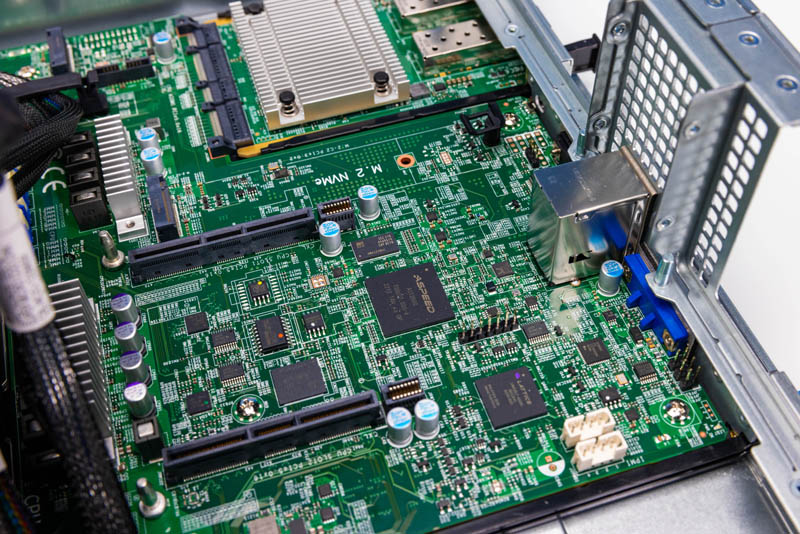

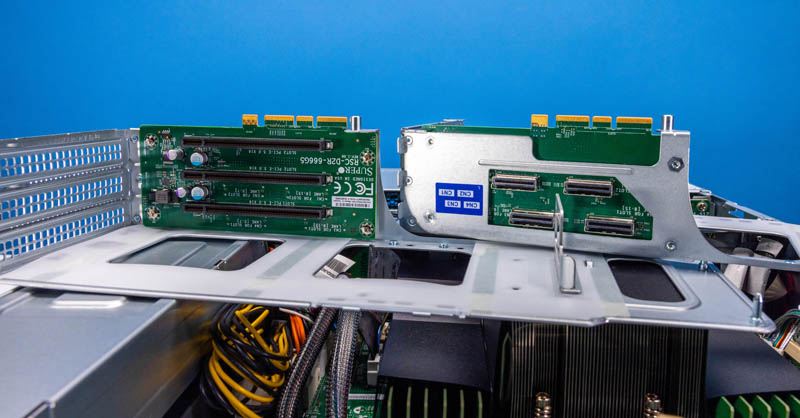

Behind the CPU socket, we have our PCIe riser slots as well as the ASPEED AST2600 BMC.

One of the two internal M.2 slots is also in this area.

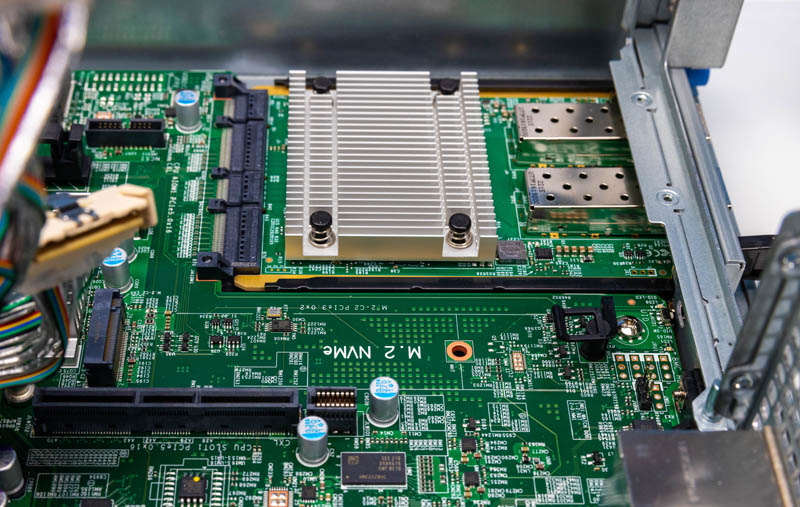

We saw in the spec sheet that there was a second internal M.2 slot for boot drives. We found it on the side of the chassis, also with a tool-less design.

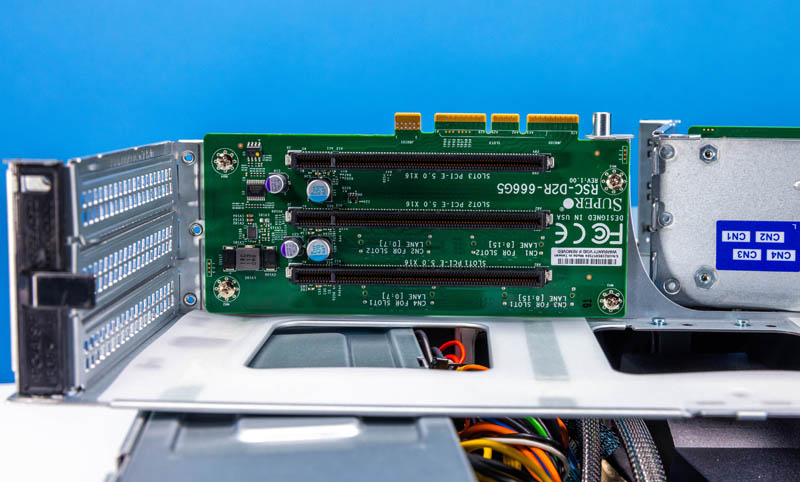

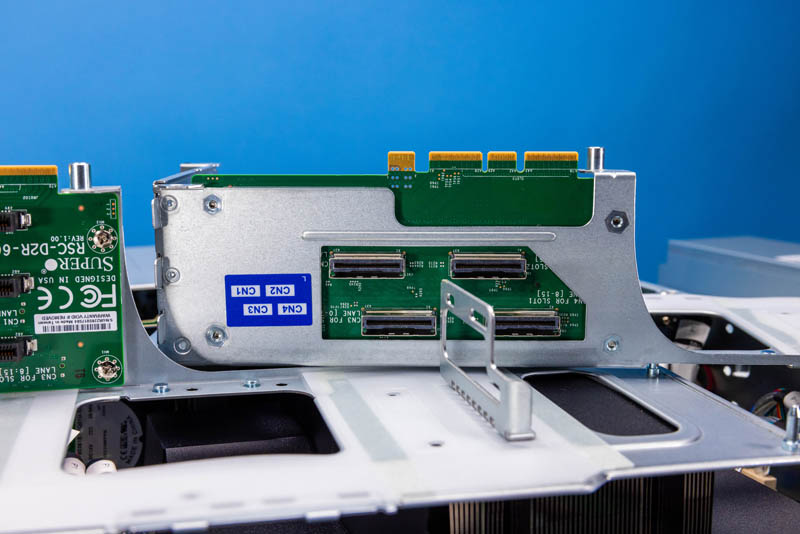

The two risers each have three slot positions. In this system, they are configured as two full-height full-length slots and two half-length slots, for a total of four PCIe Gen5 x16 slots. These are in addition to the two PCIe Gen5 x16 links feeding the OCP NIC 3.0 slots.

This generation also has Supermicro’s tool-less retention for the PCIe cards, making card swaps very fast.

The back of the riser has the MCIO connectors that feed it PCIe lanes. This is another industry-standard connector adopted by CloudDC. These cabled connections make the system flexible, so PCIe lanes can be used either in the rear for expansion cards or up front for more NVMe drives.
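As a rough illustration of that flexibility, the sketch below tallies the Gen5 lane budget for the four x16 slots and two x16 OCP NIC 3.0 links described above. It assumes the 128 Gen5 lanes of a single SP5 socket, a dedicated x16 link per slot, x4 per front NVMe drive, and nothing else drawing from the pool. The helper name is hypothetical, and this is not Supermicro's documented routing for this system.

```python
# Naive PCIe Gen5 lane budget for a single AMD SP5 socket.
# Assumptions (illustrative only): 128 Gen5 lanes, every rear slot and
# OCP NIC 3.0 slot is a dedicated x16 link, each front NVMe drive is x4.
SP5_GEN5_LANES = 128
LANES_PER_SLOT = 16
LANES_PER_NVME = 4


def leftover_nvme(rear_slots: int, ocp_slots: int) -> int:
    """Hypothetical helper: x4 NVMe drives that fit in the unused lanes."""
    used = (rear_slots + ocp_slots) * LANES_PER_SLOT
    if used > SP5_GEN5_LANES:
        raise ValueError(f"{used} lanes requested, only {SP5_GEN5_LANES} available")
    return (SP5_GEN5_LANES - used) // LANES_PER_NVME


if __name__ == "__main__":
    # Four x16 slots plus two x16 OCP links: 96 lanes used, 32 left.
    print(leftover_nvme(rear_slots=4, ocp_slots=2))  # 8
    # Re-cabling one rear slot's MCIO lanes to the front frees 16 more.
    print(leftover_nvme(rear_slots=3, ocp_slots=2))  # 12
```

Each x16 group moved from a rear slot to the front trades one expansion card for four x4 drives in this simplified model.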

Next, let us get to the topology of the system.

Very nice review, but

NVMe with PCIe v3 x2 in 2023, in a server with a CPU supporting PCIe v5?

Why is price never mentioned in these articles?

Mentioning price would be useful, but frankly for this type of unit there really isn’t “a” price. You talk to your supplier and spec out the machine you want, and they give you a quote. It’s higher than you want so you ask a different supplier who gives you a different quote. After a couple rounds of back and forth you end up buying from one of them, for about half what the “asking” price would otherwise have been.

Or more, or less, depending on how large of a customer you are.

@erik, why would you waste PCIe 5 lanes on boot drives? That’s all that M.2 is useful for.

The boot drives are off the PCH lanes. They’re on slow lanes because it’s 2023 and nobody wants to waste Gen5 lanes on boot drives.

@BadCo and @GregUT… I don’t need a PCIe v5 boot drive. That is indeed a waste.

But at least PCIe v3 x4 or PCIe v4 x4.
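For a sense of what is at stake in this exchange, link bandwidth follows from the per-lane transfer rate and the 128b/130b line coding that PCIe 3.0 through 5.0 use. This minimal sketch ignores packet and flow-control overhead, so real drives land somewhat below these figures; the function name is hypothetical.

```python
# Approximate one-direction bandwidth of a PCIe link after line coding.
# Ignores TLP/DLLP protocol overhead, so real throughput is a bit lower.
GT_PER_S = {3: 8.0, 4: 16.0, 5: 32.0}  # transfers per second per lane (GT/s)
ENCODING = 128 / 130                   # 128b/130b coding used by Gen3 to Gen5


def link_gbytes_per_s(gen: int, lanes: int) -> float:
    """Hypothetical helper: GT/s x lanes x coding efficiency, bits -> bytes."""
    return GT_PER_S[gen] * lanes * ENCODING / 8


if __name__ == "__main__":
    for gen, lanes in [(3, 2), (3, 4), (4, 4), (5, 4)]:
        print(f"PCIe {gen}.0 x{lanes}: {link_gbytes_per_s(gen, lanes):.2f} GB/s")
```

That works out to roughly 1.97 GB/s for a PCIe 3.0 x2 slot, versus about 3.94 GB/s for 3.0 x4, 7.88 GB/s for 4.0 x4, and 15.75 GB/s for 5.0 x4.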

@erik You have to think of the EPYC CPU design to understand what you’re working with. There are (8) PCIe 5.0 x16 controllers for a total of 128 high speed PCIe lanes. Those all get routed to high speed stuff. Typically a few expansion slots and an OCP NIC or two, and then the rest go to cable connectors to route to drive bays. Whether or not you’re using all of that, the board designer has to route them that way to not waste any high speed I/O.

In addition to the 128 fast PCIe lanes, SP5 processors also have 8 slow “bonus” PCIe lanes which are limited to PCIe 3.0 speed. They can’t do PCIe 4.0. And you only get 8 of them. The platform needs a BMC, so you immediately have to use a PCIe lane on the BMC, so now you have 7 PCIe lanes left. You could do one PCIe 3.0 x4 M.2, but then people bitch that you only have one M.2. So instead of one PCIe 3.0 x4 M.2, the design guy puts down two 3.0 x2 M.2 ports, and you can do some kind of redundancy on your boot drives.

You just run out of PCIe lanes. 136 total PCIe lanes seems like a lot until you sit down and try to lay out a board that you have to reuse in as many different servers as possible, and you realize that boot drive performance doesn’t matter.
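The lane arithmetic in that reply can be written out directly. The counts below come from the comment itself (128 fast lanes, 8 PCIe 3.0 bonus lanes, one lane for the BMC); the `fits` helper is a hypothetical illustration that only checks whether a set of M.2 slot widths fits in the remaining lanes.

```python
FAST_LANES = 128   # Gen5 lanes on SP5, per the comment
BONUS_LANES = 8    # PCIe 3.0-only "bonus" lanes, per the comment
BMC_LANES = 1      # one bonus lane goes to the BMC


def fits(slot_widths: list[int], available: int) -> bool:
    """True if M.2 slots of the given lane widths fit in `available` lanes."""
    return sum(slot_widths) <= available


if __name__ == "__main__":
    remaining = BONUS_LANES - BMC_LANES
    print(FAST_LANES + BONUS_LANES)   # 136 total lanes
    print(remaining)                  # 7 bonus lanes after the BMC
    print(fits([4], remaining))       # True: one Gen3 x4 M.2
    print(fits([4, 4], remaining))    # False: two Gen3 x4 M.2 need 8
    print(fits([2, 2], remaining))    # True: two Gen3 x2 M.2, 3 lanes unassigned
```

In this tally, two x4 boot slots are one lane short once the BMC is connected, which is why the board ends up with a pair of x2 slots.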