The Inspur NF5488A5 is far from an ordinary server. Instead, it is one of the highest-end dual-socket servers you can buy today for AI training. Based around dual AMD EPYC CPUs and the NVIDIA HGX A100 8 GPU (Delta) platform, this server has topped MLPerf benchmarks for AI/ML training. Now, we get to do our hands-on review of the system.

Inspur NF5488A5 Server Overview Video

As with many of our articles this year, we have a video overview of this review that you can find on the STH YouTube channel:

Feel free to listen along as you read or go through this review. As always, we suggest watching this video in a YouTube tab, window, or app instead of in the embedded viewer. There is even a discussion about PCIe vs. Redstone vs. Delta in the video.

Inspur NF5488A5 Hardware Overview

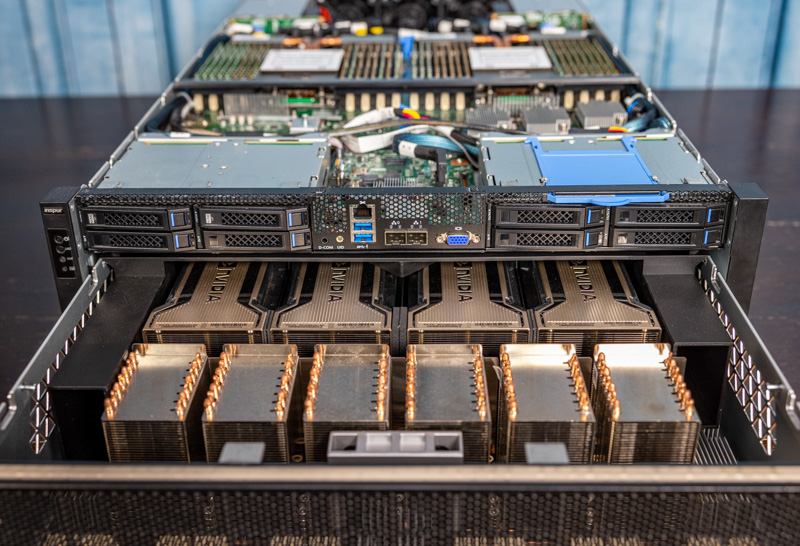

We have been splitting our reviews into external and internal sections for some time. In this article, we also wanted to provide ample views of the 8x NVIDIA A100 GPUs, so we are going to have a bit more on that than we would in a normal review. Our plan is to start from the front of the system, move through the top to the rear, then make our way back to the front of the chassis with the GPUs.

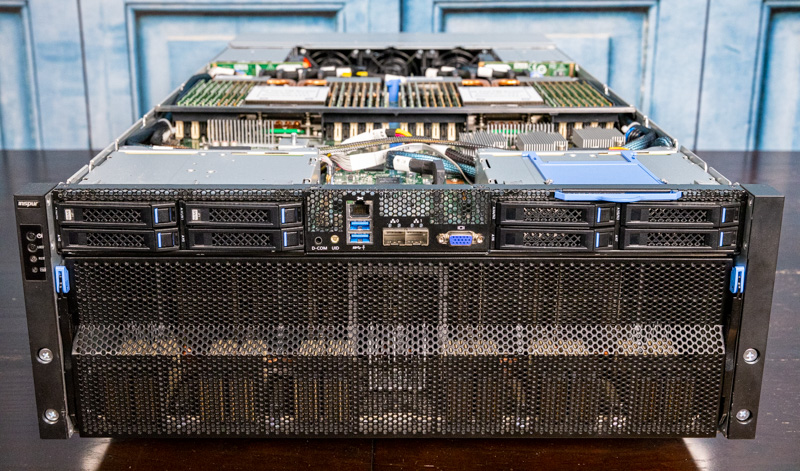

The front of the system is a little bit different than a standard 4U server. There are effectively three zones to the server. The top portion has storage, memory, and two processors. The bottom tray that you can see here has the GPUs and NVSwitches. Finally, the rear of the server has the PCIe switches, power, cooling, and expansion slots.

If the model number and the front of the chassis seem familiar, that is because we previously reviewed the Inspur NF5488M5, which looks very similar.

One can see front I/O with a management port, two USB 3.0 ports, two SFP+ cages for 10GbE networking, as well as a VGA connector.

Storage is provided by 8x 2.5″ hot-swap bays. All eight bays can utilize SATA III 6.0Gbps connectivity. One set of four bays can optionally take U.2 NVMe SSDs.

There are M.2 options, but they are a bit different than we saw in the NF5488M5 review since there is more memory. Our test system did not have this M.2 option.

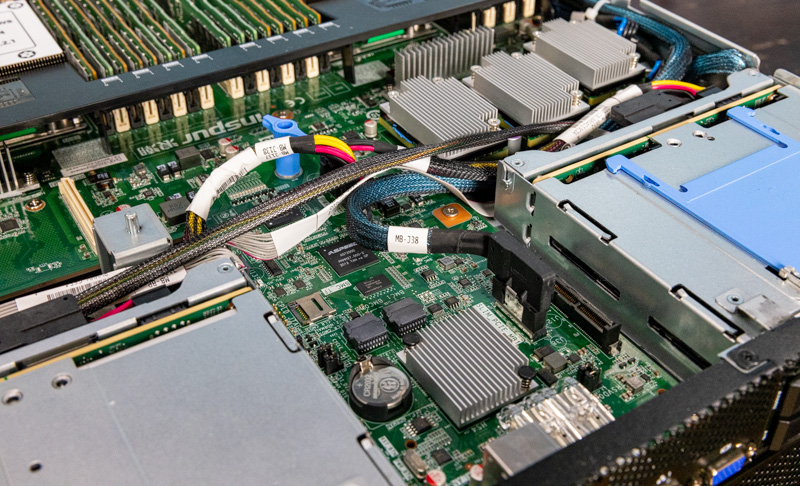

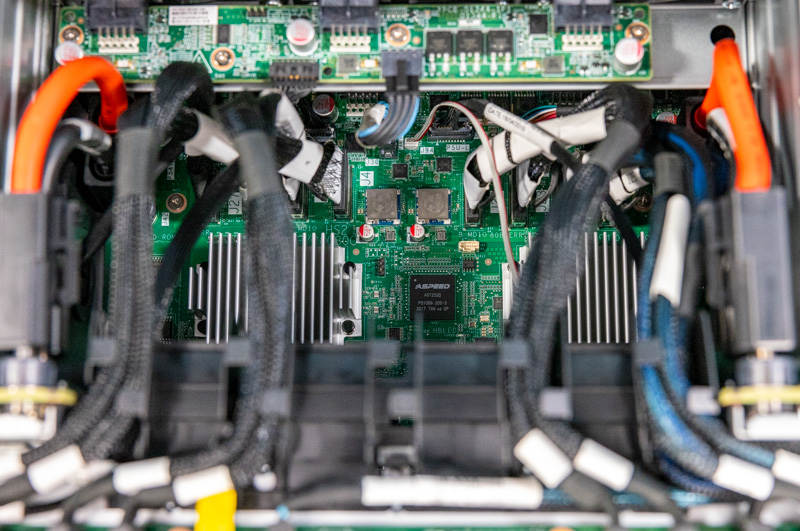

Just behind the 10GbE SFP+ ports on the front I/O and between the 2.5″ drive cages, we have the ASPEED BMC along with PCIe connectivity that is not used in our system.

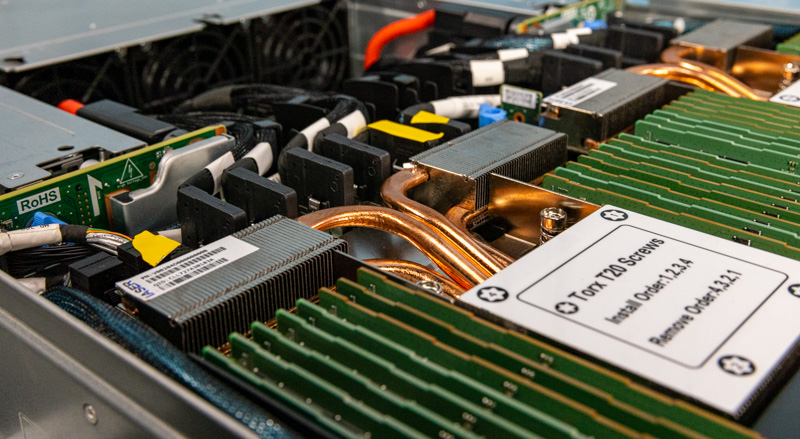

We again have the heatsink array cooling the power delivery components. This solution appears to have been updated for the newer A5 system compared to what we saw with the M5.

There is also an OCP mezzanine slot. Our sense is that this is for internal storage controllers although it is not populated in the test system.

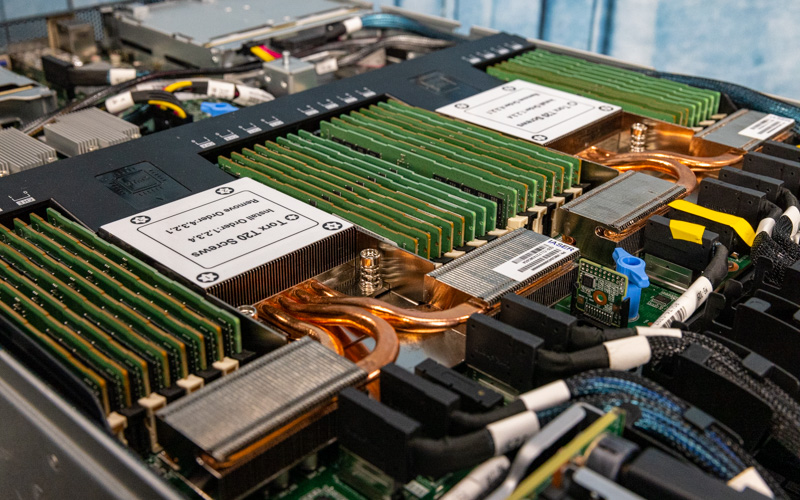

This is a dual AMD EPYC 7002 (Rome) / EPYC 7003 (Milan) system. That is a huge change since this is also the first AMD system that we are aware of from Inspur. Our system has Rome generation CPUs, but Inspur has also published benchmarks using the Milan generation, so the platform supports both. That gives up to 64 cores per socket, along with a fairly wide range of lower core count options. Still, in a system like this, even two 64-core CPUs are a relatively small part of the total cost.

Each of the two CPUs can take up to 16 DIMMs for 32 DIMMs total, an increase of 8 DIMMs over the NF5488M5.
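To put the DIMM count in context, here is a quick sketch of the arithmetic. The 16 DIMMs per socket figure comes from this review, and eight DDR4 channels per socket is the standard figure for EPYC 7002/7003. The 64GB DIMM size is purely an assumption for illustration, not the configuration of our test system.

```python
SOCKETS = 2
DIMMS_PER_SOCKET = 16        # from the review
CHANNELS_PER_SOCKET = 8      # EPYC 7002/7003 have eight DDR4 channels per socket

def memory_layout(dimm_gb: int) -> dict:
    """Summarize DIMM population for a hypothetical DIMM size (in GB)."""
    total_dimms = SOCKETS * DIMMS_PER_SOCKET
    return {
        "total_dimms": total_dimms,
        "dimms_per_channel": DIMMS_PER_SOCKET // CHANNELS_PER_SOCKET,
        "total_gb": total_dimms * dimm_gb,
    }

# 64GB DIMMs are an assumed example size, not what was installed.
print(memory_layout(64))
```

With all 32 slots filled, the system runs two DIMMs per channel, which is worth keeping in mind since two-DIMM-per-channel configurations often run at lower memory speeds than one-DIMM-per-channel setups.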

You may have noticed that the heatsinks are more substantial with this generation. The 1U sized heatsinks have heat pipes to secondary fin arrays that are behind the DIMM slots.

Here is the opposite angle. In a system like this, power and thermals are serious considerations. That is why we see more expansive cooling in this A5 server versus the previous-generation M5.

The PCIe connectivity is substantial. As a result, we see a large number of PCIe cables going from the motherboard to the PCIe switch and power distribution board below. This is how PCIe goes from the CPUs to the expansion slots and GPUs. Inspur has a cable management solution to ensure that these cables can be used without blocking too much airflow to the CPUs, memory, and other motherboard components.

This is a view down to the fan controller PCB, then to the PCIe switch board. One can see the second ASPEED BMC here. This server has so much going on it requires not one, but two BMCs.

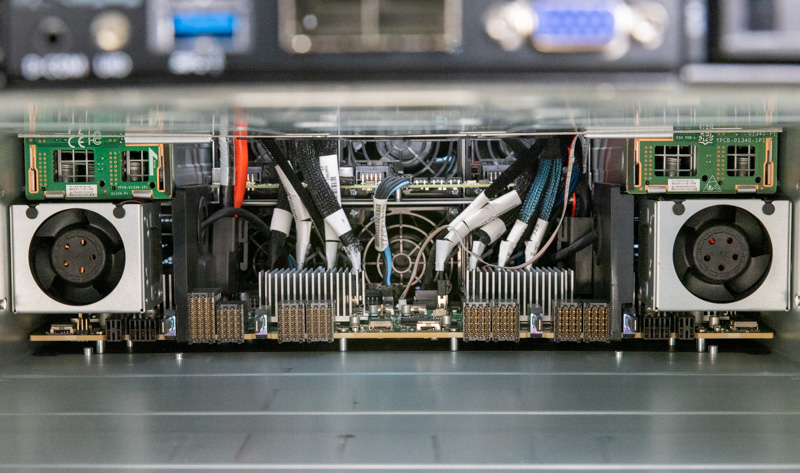

Looking at the rear of the chassis, one can see an array of hot-swap modules. There are three basic types. The center is dominated by fan modules.

To each side, one can see power supplies. There are a total of four PSUs and each is 3kW in our system.
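As a rough sketch of what four 3kW supplies mean for the power budget, the snippet below computes the usable capacity under a few redundancy schemes. The redundancy modes shown are assumptions for illustration only. We are not stating which mode this server uses.

```python
PSU_COUNT = 4     # from the review
PSU_KW = 3.0      # from the review

def usable_kw(redundant_psus: int) -> float:
    """Power available if `redundant_psus` supplies are held in reserve."""
    if not 0 <= redundant_psus < PSU_COUNT:
        raise ValueError("redundant_psus must be between 0 and PSU_COUNT - 1")
    return (PSU_COUNT - redundant_psus) * PSU_KW

# Hypothetical redundancy schemes, not confirmed for this system.
for label, spare in (("no redundancy", 0), ("3+1", 1), ("2+2", 2)):
    print(f"{label}: {usable_kw(spare):.0f} kW")
```

The point is simply that the 12kW headline number shrinks once any supplies are reserved for redundancy.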

The bottom has low-profile PCIe Gen4 x16 slots, two per side in I/O modules. One side shown below has an extra COM port while the other side you can see above has an IPMI management port.

One of the big changes with this generation is the AMD EPYC’s PCIe Gen4 support. Our system did not have NICs installed in these slots, but they can take up to HDR InfiniBand or 200GbE adapters running at full speed. In a system like this, that is a great benefit as the NICs serve as the gateway to more training servers and storage in a cluster.
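To see why PCIe Gen4 matters for 200Gbps networking, here is a back-of-the-envelope calculation. It only accounts for the 128b/130b line encoding used by Gen3 and Gen4, so real-world throughput will be somewhat lower once packet overhead is included. The function name is ours, for illustration.

```python
# Rough per-direction PCIe bandwidth, ignoring overhead beyond line encoding.
GT_PER_LANE = {3: 8.0, 4: 16.0}   # giga-transfers per second per lane
ENCODING = 128 / 130              # 128b/130b line encoding (Gen3 and Gen4)

def pcie_gbps(gen: int, lanes: int = 16) -> float:
    """Approximate line rate in Gbit/s for one direction."""
    return GT_PER_LANE[gen] * lanes * ENCODING

for gen in (3, 4):
    rate = pcie_gbps(gen)
    verdict = "enough" if rate >= 200 else "not enough"
    print(f"Gen{gen} x16: {rate:.0f} Gbps per direction, {verdict} for a 200 Gbps port")
```

A Gen3 x16 slot tops out around 126Gbps per direction, while a Gen4 x16 slot reaches roughly 252Gbps, which is why a single 200Gbps port needs Gen4 to run at full speed.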

One can see the fans associated with these removable I/O modules if we flip the view and look at the PCIe switch board. Modern high-speed networking cards require solid cooling as well, so having dedicated fans on each removable I/O expansion module is a welcome touch.

Those high-speed connectors provide PCIe connectivity and power to the NVIDIA HGX A100 8 GPU “Delta” baseboard. It is now time to take a look at that.

There is a cover that normally goes over the GPU tray, but we removed that for better photos. If you want to see the sheet metal panel, it is shown in the NF5488M5 review.

Next, we are going to look at the GPU baseboard assembly that this plugs into in more detail.

We upgraded from the DGX-2 (initially with Volta, then Volta Next) to a DGX A100 with 16 A100 GPUs back in January. Our system is NVIDIA branded, not Inspur. The next step is to replace the inferior AMDs with some American-made Xeon Ice Lake SP.

DGX-2 came after the Pascal-based DGX-1, so it has nothing to do with 8 or 16 GPUs.

Performance is not even remotely comparable: a FEA sim that took 63 min on a 16 GPU Volta Next DGX-2 is now done in 4 minutes, and with a 50% increase in fidelity/complexity it only takes a minute longer than that. 4-5 min is near real time, allowing our iterative engineering process to fully utilize the engineer’s intuition. That is a 5 min penalty vs. 63-64 min.

DGX-1 was available with V100s. DGX-2 is entirely to do with doubling the GPU count.

Nvidia ditched Intel for failing to deliver on multiple levels, and will likely go ARM in a year or two.

The HGX platform can come in 4, 8, or 16 GPU configs, as Patrick showed the connectors on the board for linking a second backplane above. Ice Lake A100 servers don’t make much sense. They simply don’t have enough PCIe lanes or cores to compete at the 16 GPU level.

There will be a couple of 8x A100 Ice Lake servers coming out but no 16.

It would be nice if in EVERY system review, you include the BIOS vendor, and any BIOS features of particular interest. My experience with server systems is that a good 33% to 50% of the “system stability” comes from the quality of the BIOS on the system, and the other 66% to 50% comes from the quality of the hardware design, manufacturing process, support, etc. It is like you are not reviewing about 1/2 of the product. Thanks.