The Inspur NF5488M5 is something truly unique. Although many vendors, including Inspur, can claim to have an 8x NVIDIA Tesla V100 system, the NF5488M5 may just be the highest-end 8x Tesla V100 system you can buy. Not only does it use 8x Tesla V100 SXM3 GPUs and support “Volta Next” GPUs with 350W+ TDPs, but it also has something special on the fabric side. The GPUs are connected together using NVSwitch technology, which means each GPU has 300GB/s of NVLink bandwidth and can use all of it to communicate with any of the other GPUs. Essentially, this is half of an NVIDIA DGX-2 or HGX-2 in a 4U chassis.
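As a rough sanity check on the 300GB/s figure, the arithmetic below multiplies out NVIDIA's published NVLink 2.0 numbers for the V100: six links per GPU at 25GB/s per link in each direction. Those per-link figures come from NVIDIA's V100 specifications, not from this review, so treat this as a back-of-the-envelope sketch.

```python
# Back-of-the-envelope NVLink 2.0 bandwidth for one Tesla V100.
# Assumption: six NVLink 2.0 links per GPU at 25 GB/s per direction
# (NVIDIA's published V100 figures, not numbers measured in this review).
NVLINK_LINKS_PER_GPU = 6
GB_PER_S_PER_LINK_PER_DIRECTION = 25


def aggregate_nvlink_bandwidth(links: int, per_direction: float) -> float:
    """Total bidirectional NVLink bandwidth for a single GPU, in GB/s."""
    return links * per_direction * 2  # x2 counts both directions


total = aggregate_nvlink_bandwidth(NVLINK_LINKS_PER_GPU, GB_PER_S_PER_LINK_PER_DIRECTION)
print(f"Aggregate NVLink bandwidth per GPU: {total:.0f} GB/s")
```

As we understand NVIDIA's HGX-2 design, each GPU's six links go into the NVSwitch fabric rather than directly to a few neighboring GPUs, which is what allows any GPU pair to use the full aggregate figure.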

In our review, we are going to spend a bit more time than normal talking about the hardware and what makes it completely different from other offerings on the market. By April 2019, Inspur was able to claim at least 51% of AI server market share in China, and this is one of the innovative designs helping Inspur continue to build market share. For a bit of context, we borrowed this server, but the power costs alone for this review were several thousand dollars, so it was a big investment for STH to make. As such, we want to ensure that we show why we wanted to bring this server to our readers.

Inspur NF5488M5 Hardware Overview

We are going to go deep into the hardware side of this server because it is an important facet of the solution. It is also something unique on the market.

We are going to start with the server overview, then take a deep dive into the GPU baseboard assembly on the next page. Still, the wrapper around the GPU baseboard is unique and important, so we wanted to cover it as well.

Inspur NF5488M5 Server Overview Video

As with many of our articles this year, we have a video overview of this review that you can find on the STH YouTube channel:

Feel free to listen along as you read or go through this review. It is certainly a longer review for us.

Inspur NF5488M5 Server Overview

The Inspur NF5488M5 is a 4U server that measures 448mm x 175.5mm x 850mm (W x H x D). We are going to start our overview at the front of the chassis, where we can see two main compartments. The bottom is the GPU tray, which is where we will end this overview. We are going to start with the top, approximately 1U section, which is the x86 compute portion of the server.
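For readers less familiar with rack units, a quick calculation shows why a 175.5mm-tall chassis counts as 4U. This sketch assumes the middle dimension is the height and uses the standard EIA-310 rack unit of 1.75 in (44.45mm).

```python
import math

# One rack unit (1U) is 1.75 in, i.e. 44.45 mm (EIA-310).
RACK_UNIT_MM = 44.45


def rack_units(height_mm: float) -> int:
    """Smallest whole number of rack units that a chassis of this height occupies."""
    return math.ceil(height_mm / RACK_UNIT_MM)


# Assumption: 175.5 mm is the chassis height (448 x 175.5 x 850 mm read as W x H x D).
height_mm = 175.5
units = rack_units(height_mm)
print(f"{height_mm} mm -> {units}U (a {units}U opening is {units * RACK_UNIT_MM:.1f} mm)")
```

The chassis comes in about 2.3mm under the 177.8mm of a full 4U opening, leaving a little clearance in the rack.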

One can see front I/O with a management port, two USB 3.0 ports, two SFP+ cages for 10GbE networking, as well as a VGA connector.

Storage is provided by 8x 2.5″ hot-swap bays. All eight bays can utilize SATA III 6.0Gbps connectivity. The top set of four bays can optionally utilize U.2 NVMe SSDs.

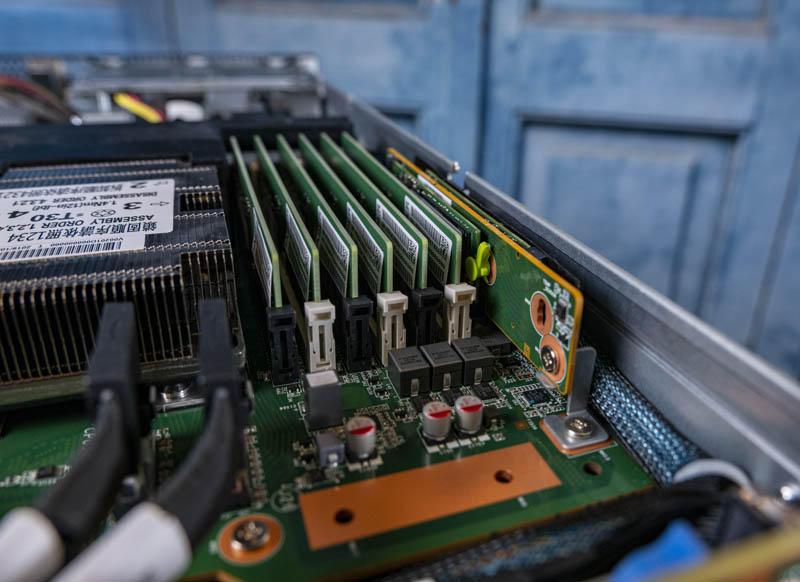

Inside the system, we have a bit more storage: a dual M.2 SATA boot SSD module on a riser card next to the memory slots. These boot modules allow one to keep the front hot-swap bays open for higher-value storage.

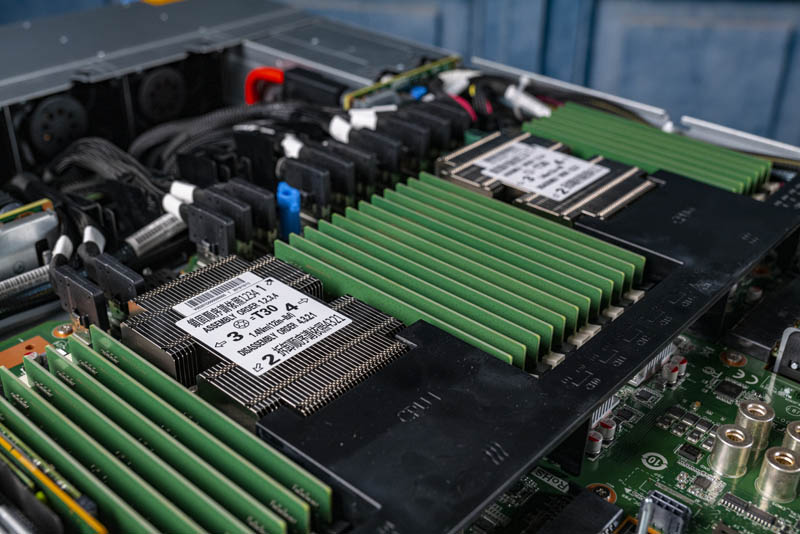

On the topic of memory, this is a dual Intel Xeon Scalable system with a full memory configuration. That means each of the two CPUs can take up to 12 DIMMs, for 24 DIMMs total. In this class of machine, we see the new high-end 2nd Gen Intel Xeon Scalable Refresh SKUs along with some of the legacy 2nd Gen Intel Xeon Scalable L high-memory SKUs. Much of the chassis design is dedicated to optimizing airflow, but this section of the server has a lower TDP per U than the GPU section, even with higher-end CPU and memory configurations.
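The 24-DIMM figure follows from the platform's memory topology. The sketch below assumes six memory channels per Xeon Scalable CPU and two DIMMs per channel, the standard layout for this generation, and uses a hypothetical 64GB DIMM size purely to illustrate capacity; actual supported DIMM sizes and maximums depend on the CPU SKU.

```python
# DIMM slot and capacity arithmetic for a dual-socket Xeon Scalable board.
# Assumptions: 6 memory channels per CPU, 2 DIMMs per channel (DPC).
SOCKETS = 2
CHANNELS_PER_SOCKET = 6
DIMMS_PER_CHANNEL = 2


def dimm_slots(sockets: int, channels: int, dpc: int) -> int:
    """Total DIMM slots across all sockets."""
    return sockets * channels * dpc


def capacity_gb(slots: int, dimm_gb: int) -> int:
    """Total memory capacity when every slot holds a DIMM of the same size."""
    return slots * dimm_gb


slots = dimm_slots(SOCKETS, CHANNELS_PER_SOCKET, DIMMS_PER_CHANNEL)
# 64 GB is a hypothetical DIMM size chosen only for illustration.
print(f"{slots} slots, {capacity_gb(slots, 64)} GB with 64 GB DIMMs")
```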

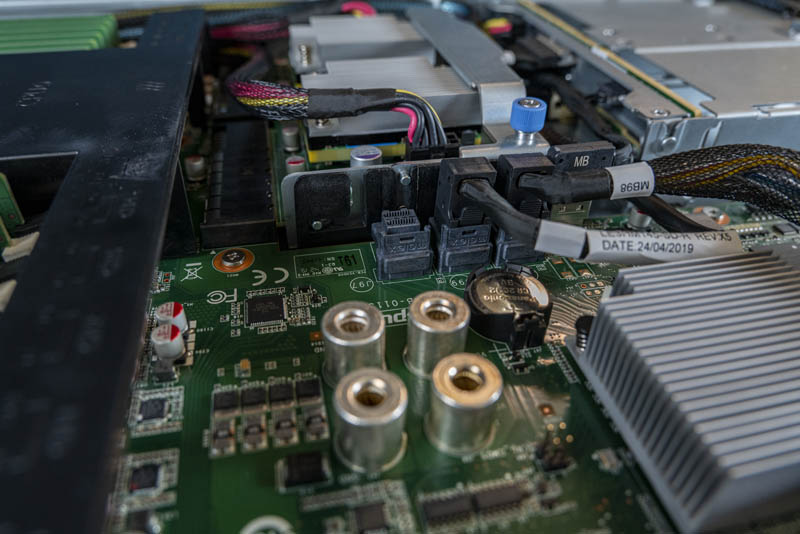

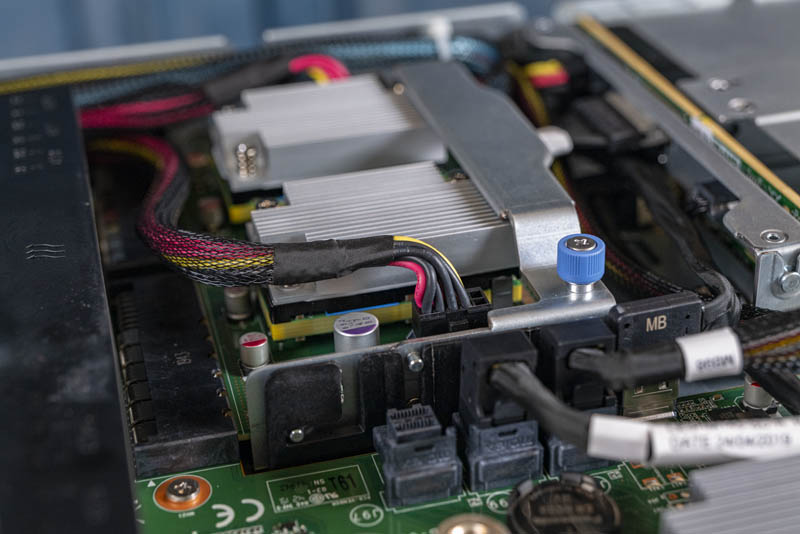

Rounding out a few more bits to the solution, we can see the onboard SFF-8643 connectors providing SATA connectivity. The heatsink to the right of this photo is for the Lewisburg PCH.

You may have noticed the module just behind those connectors. That module delivers power from the rear power supplies and power distribution boards to the CPU motherboard.

Looking at the rear of the chassis, one can see an array of hot-swap modules. There are three basic types. The center is dominated by fan modules. To each side, one can see power supplies on top and I/O modules on the bottom.

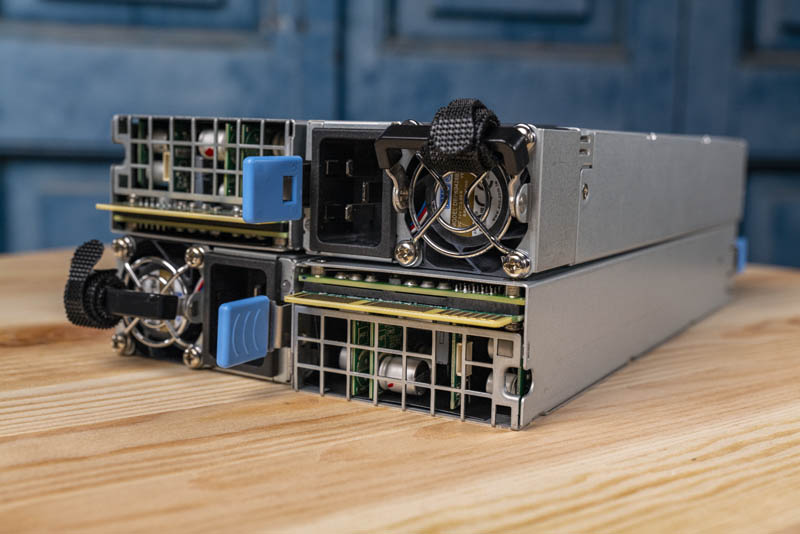

The NF5488M5 utilizes four 3kW power supplies, which can be used on A+B data center power to provide redundant operation.
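How the four supplies are split across the A and B feeds is not spelled out here. Assuming an even 2+2 split, the sketch below estimates how much capacity remains if one feed goes down; the even split is our assumption, not a documented configuration.

```python
# Redundancy estimate for four 3 kW power supplies on A+B feeds.
# Assumption: PSUs are split evenly across the feeds (2 on A, 2 on B).
PSU_WATTS = 3000
PSU_COUNT = 4


def capacity_after_feed_loss(psus: int, watts: int, feeds: int = 2) -> int:
    """Watts still available when one of `feeds` evenly loaded feeds fails."""
    if psus % feeds:
        raise ValueError("this sketch only handles an even split across feeds")
    surviving_psus = psus - psus // feeds
    return surviving_psus * watts


print(f"Total: {PSU_COUNT * PSU_WATTS} W")
print(f"After losing one feed: {capacity_after_feed_loss(PSU_COUNT, PSU_WATTS)} W")
```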

One of the most overlooked, yet very important, features in a system like this is the set of fan modules. Each module consists of two heavy-duty fans in a hot-swap carrier. Due to the power consumption of this 4U system, these fan modules are tasked with reliably moving a lot of air through the system to keep it running.

These six fan modules are hot-swappable and have status LEDs, which help identify units that may need to be swapped out.

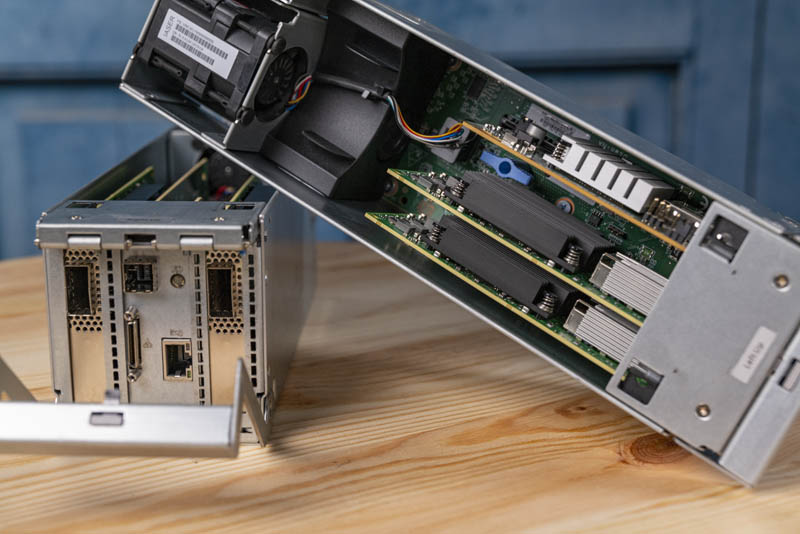

We also wanted to highlight the I/O modules. Here we have two modules, each with two Mellanox InfiniBand cards. That gives us a ratio of two GPUs for each InfiniBand card.
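The two-GPUs-per-card ratio can be expressed as a simple affinity map. The sketch below is hypothetical: it assumes consecutive GPU indices share a card, which may not match this server's actual PCIe topology. On a live system, `nvidia-smi topo -m` shows which GPUs and NICs sit behind the same switch.

```python
# Hypothetical GPU-to-InfiniBand affinity map: 8 GPUs, two I/O modules with
# two cards each. Assumption: consecutive GPU indices share a card.
GPU_COUNT = 8
IB_CARDS = 2 * 2  # two I/O modules x two InfiniBand cards


def gpus_per_card(gpus: int, cards: int) -> int:
    """How many GPUs share each InfiniBand card."""
    return gpus // cards


def card_for_gpu(gpu: int, gpus: int = GPU_COUNT, cards: int = IB_CARDS) -> int:
    """Index of the card a given GPU would use under this simple mapping."""
    return gpu // gpus_per_card(gpus, cards)


mapping = {gpu: card_for_gpu(gpu) for gpu in range(GPU_COUNT)}
print(mapping)
```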

Each module also has its own fans and an additional slot. On one side, that slot is used for rear networking such as 10/25GbE. On the other side, the slot is designed for legacy I/O: one can hook up a dongle and have USB and other ports for local management from the hot aisle.

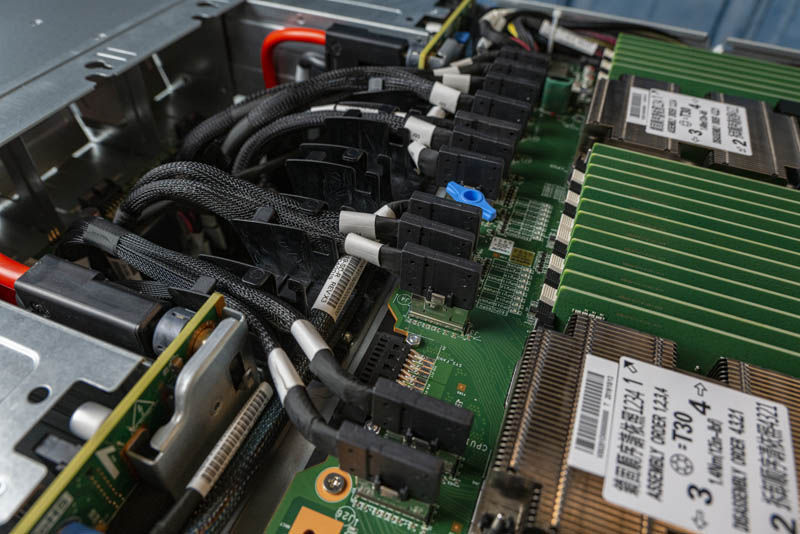

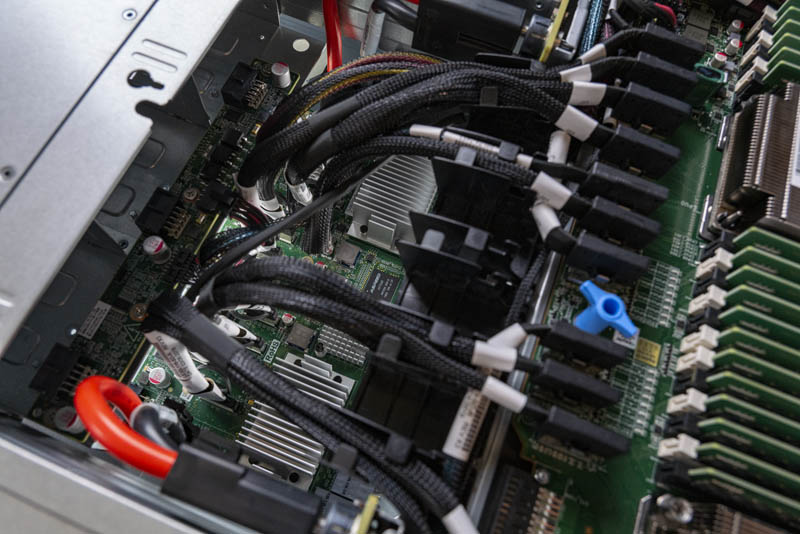

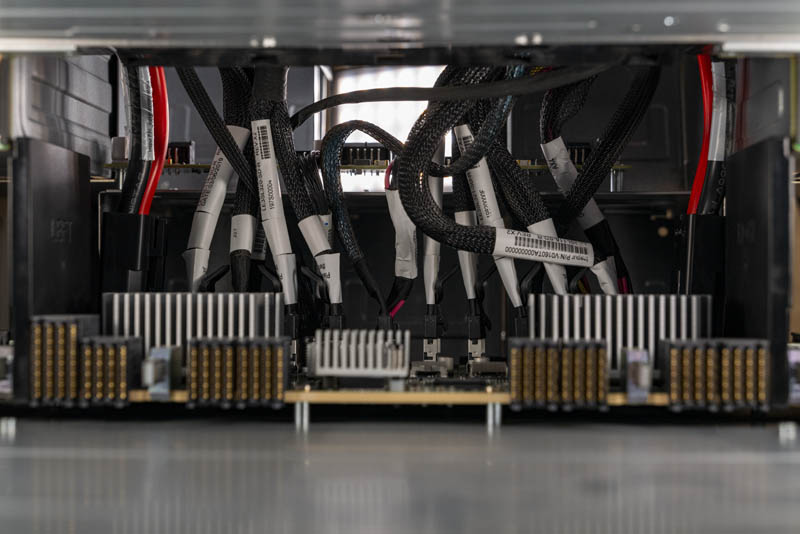

Behind the motherboard and that wall of fans, one can see an array of PCIe cables. These cables carry PCIe signaling from the main motherboard at the top of the chassis to the PCBs at the bottom of the chassis. Inspur has a cable management solution to ensure that these cables can be used without blocking too much airflow to the CPUs, memory, and other motherboard components.

Here is a view looking down through those cables to the PCIe switch PCB. There, one can see a second Aspeed AST2520 BMC. There is a BMC on the main motherboard as well.

On either side, there are large heatsinks. Those heatsinks cover the Broadcom (PLX) PEX9797 97 lane, 25 port, PCI Express Gen3 ExpressFabric switches. These are high-end Broadcom PCIe switches and are used to tie the various parts of the system together.
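To put the PCIe side in perspective next to the 300GB/s NVLink figure, the sketch below works out raw PCIe Gen3 bandwidth from the spec's 8 GT/s per lane and 128b/130b encoding. It ignores packet and protocol overhead, so real-world throughput will be somewhat lower.

```python
# Raw PCIe Gen3 bandwidth per direction, before protocol (TLP/DLLP) overhead.
GEN3_GT_PER_S = 8.0        # gigatransfers per second per lane
GEN3_ENCODING = 128 / 130  # 128b/130b line encoding efficiency


def lane_gb_per_s(gt_per_s: float = GEN3_GT_PER_S, efficiency: float = GEN3_ENCODING) -> float:
    """GB/s per lane per direction: one bit per transfer, 8 bits per byte."""
    return gt_per_s * efficiency / 8


def link_gb_per_s(lanes: int) -> float:
    """GB/s per direction for a link of the given width."""
    return lanes * lane_gb_per_s()


for width in (1, 16):
    print(f"x{width}: {link_gb_per_s(width):.2f} GB/s per direction")
```

A Gen3 x16 link therefore tops out around 15.75GB/s each way (about 31.5GB/s bidirectional), roughly a tenth of the 300GB/s aggregate NVLink bandwidth per V100.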

Those PCIe lanes are connected to the GPU baseboard via high-density PCIe connectors.

Before we get to the GPU baseboard, you may have noticed the large red and black wires in this section. Those are the power feeds inside the system.

Next, we are going to look in more detail at the GPU baseboard assembly that this plugs into.

That’s a nice (and I bet really expensive) server for AI workloads!

The idle consumption of the V100s, as shown in the nvidia-smi terminal, is a bit higher than I’d have expected. It seems weird that the cards stay in the P0 power state (max frequency). In my experience (which is not with V100s, to be fair), about half a minute after setting persistence mode on, the power state drops to P8 and consumption is much lower (~10W). It may very well be the default power management for these cards; I don’t know. I don’t think that’s a concern for any purchaser of this server, though, since I doubt they will leave it idling for even a second…
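For anyone who wants to check this idle behavior on their own system, one approach is to poll `nvidia-smi` for each GPU's performance state and power draw using its `--query-gpu` and `--format` options. The parsing helper and its sample input below are illustrative, not output captured from this server.

```python
from __future__ import annotations

import subprocess

# Ask nvidia-smi for index, performance state, and power draw as bare CSV.
QUERY = [
    "nvidia-smi",
    "--query-gpu=index,pstate,power.draw",
    "--format=csv,noheader,nounits",
]


def parse_gpu_states(csv_text: str) -> list[tuple[int, str, float | None]]:
    """Turn lines like '0, P0, 55.12' into (index, pstate, watts) tuples."""
    states = []
    for line in csv_text.strip().splitlines():
        index, pstate, watts = (field.strip() for field in line.split(","))
        try:
            power = float(watts)
        except ValueError:  # some GPUs report "[N/A]" for power.draw
            power = None
        states.append((int(index), pstate, power))
    return states


def query_gpu_states() -> list[tuple[int, str, float | None]]:
    """Run nvidia-smi (requires an NVIDIA driver) and parse its output."""
    result = subprocess.run(QUERY, capture_output=True, text=True, check=True)
    return parse_gpu_states(result.stdout)
```

On a machine with NVIDIA drivers, looping over `query_gpu_states()` prints one tuple per GPU; running it a minute or so after enabling persistence mode (`nvidia-smi -pm 1`) is one way to see whether idle cards drop from P0 to a lower state.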

Thank you for the great review Patrick! Is there any chance that you’d at some point be able to test some non-standard AI accelerators such as Groq’s tensor streaming processor, Habana’s Gaudi etc. in the same fashion?

What are the advantages (if any) of this Inspur server vs. the Supermicro 9029GP-TNVRT, which is expandable to 16 GPUs and even then costs under $250K fully configured? The price is under $150K with 8x V100 32GB SXM3 GPUs, RAM, NVMe, etc.

While 4U is usually much better than 10U, I don't think it's really important in this case.

Igor – these are different companies supporting the systems, so you would next look to the software and services portfolio beyond the box itself. You are right that this would be 8 GPUs in 4U, while you are discussing 8 GPUs in 10U for the half-configured Supermicro box. Inspur’s alternative to the Supermicro 9029GP-TNVRT is the 16x GPU AGX-5, which fits in 8U if you want 16x GPUs on an HGX-2 platform in a denser configuration.
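The density point in this exchange comes down to GPUs per rack unit. The sketch below uses only the configurations mentioned in these comments: 8 GPUs in 4U, the Supermicro system at 8 or 16 GPUs in 10U, and 16 GPUs in 8U for the AGX-5.

```python
# GPUs per rack unit for the configurations discussed in the comments.
CONFIGS = {
    "Inspur NF5488M5": (8, 4),
    "Supermicro 9029GP-TNVRT, half-configured": (8, 10),
    "Supermicro 9029GP-TNVRT, fully configured": (16, 10),
    "Inspur AGX-5": (16, 8),
}


def gpus_per_u(gpus: int, rack_units: int) -> float:
    """GPU density in GPUs per rack unit."""
    return gpus / rack_units


for name, (gpus, units) in CONFIGS.items():
    print(f"{name}: {gpus} GPUs in {units}U = {gpus_per_u(gpus, units):.1f} GPUs/U")
```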

L.P. – hopefully, that will start late this year.