Inspur NF5488M5 Other Chassis Impressions

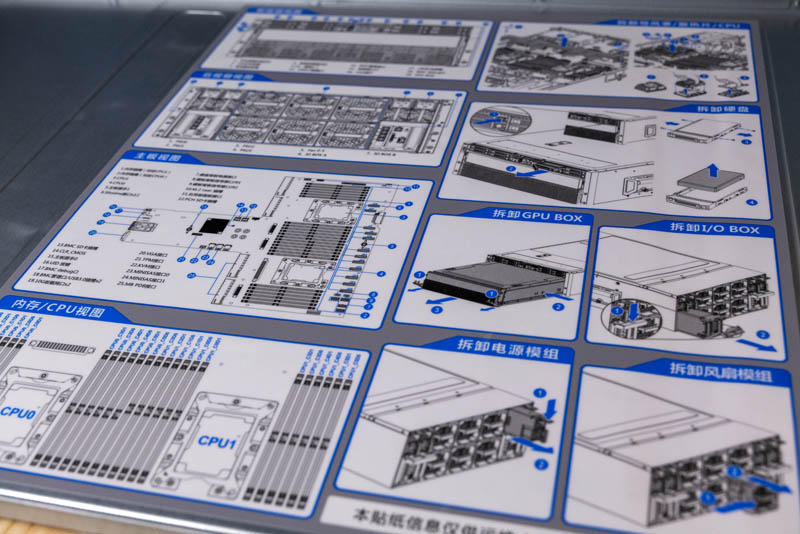

We wanted to cover a few more chassis-related items of the server. First, Inspur has a nice service guide underneath the chassis in English and Mandarin. This is a fairly complex system the first time you take it apart, so this is a great printed, in-data-center reference for the machine.

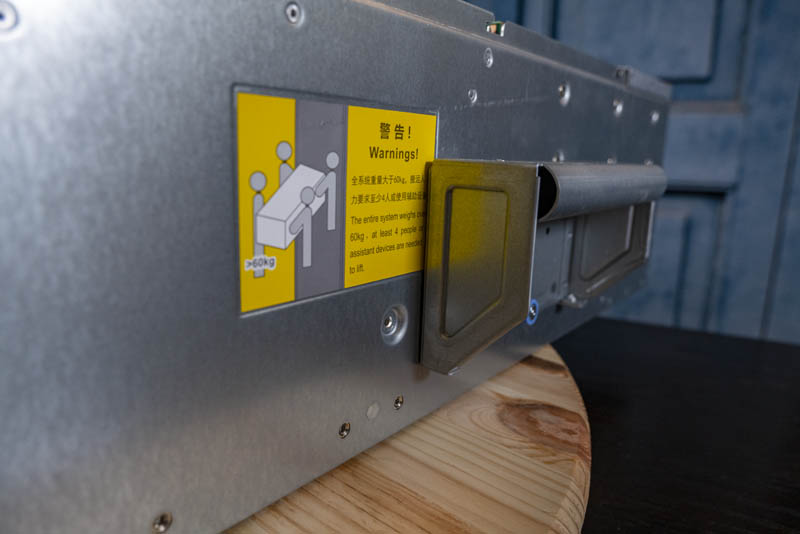

There is a nice warning label on the side that says that the server can weigh over 60kg. For reference, the GPU box alone weighs over 23kg.

To help move the unit, Inspur suggests having four people and includes handles. When we moved the unit out of the Inspur Silicon Valley office, we used four people to carry the system. Realistically, the unit may still need to be moved once it is in the data center, so we suggest using a server lift if you are installing these. Most data centers have one, and with such a heavy node, it makes a lot of sense here.

Inspur Systems NF5488M5 Topology

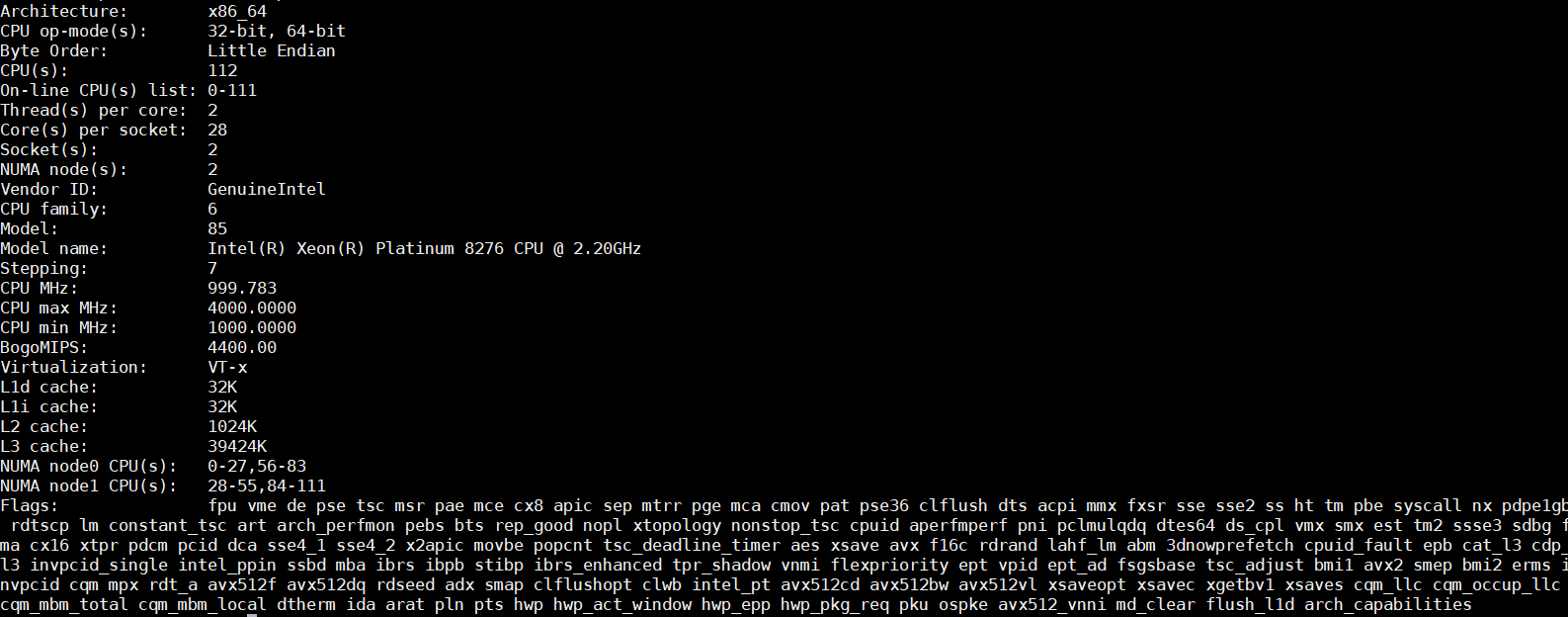

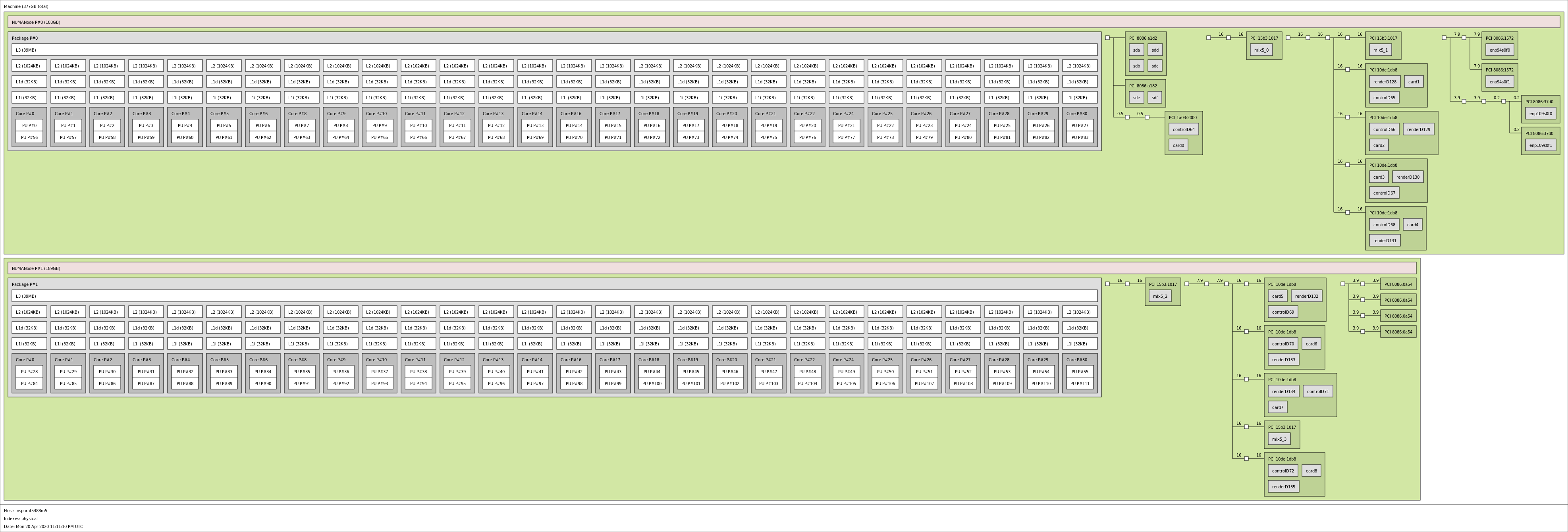

With training servers, topology is a big deal. We used Intel Xeon Platinum 8276 CPUs in our test system. The new 2nd Gen Intel Xeon Scalable Refresh SKUs are 2x UPI parts, while the legacy parts are 3x UPI, so that is something to consider.

Each CPU has a set of GPUs, storage, InfiniBand cards, and other I/O attached to it. With the sheer number of devices, you may need to click this one to get a better view.

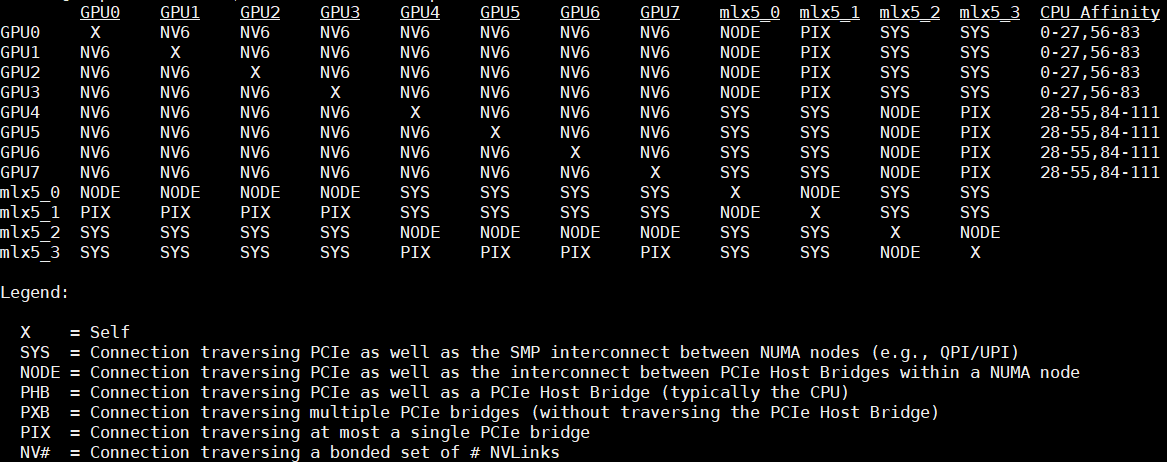

In terms of the NVIDIA topology, one can see the NVIDIA GPUs along with the Mellanox NICs. This topology shows the six bonded NVLinks per GPU on the switched architecture. There are also PCIe and UPI traversal routes. Overall, you can see the four Mellanox InfiniBand cards and how they connect to the system.

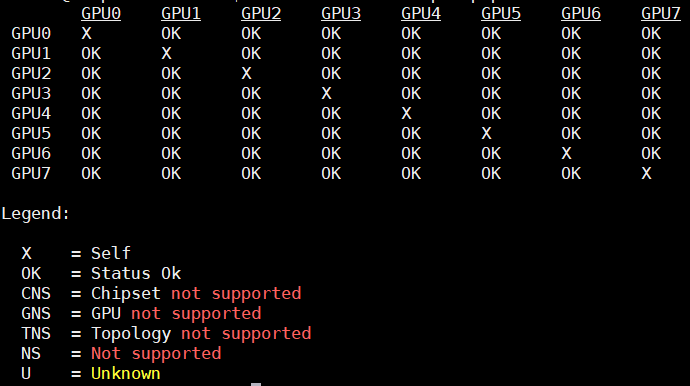

We can see that the peer-to-peer topology is set up.

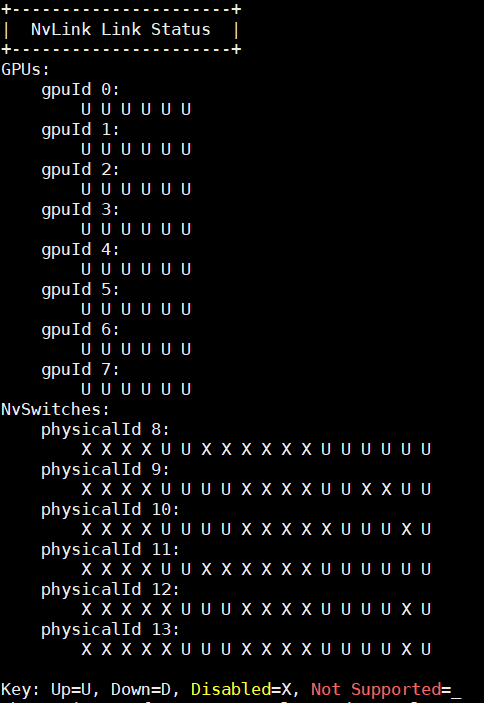

On the NVLink status, we can see the eight GPUs each with their six NVLinks that are up. We can also see the six NVSwitches each with eight links. Each GPU has a link to each NVSwitch. So if we are doing a GPU-to-GPU transfer, we are pushing 1/6th of that transfer over each of the switches on the HGX-2 baseboard.
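The link counts above can be checked with a little arithmetic. The following is a minimal sketch, not a query against real hardware: it assumes every GPU has exactly one NVLink to every NVSwitch and that a GPU-to-GPU transfer is striped evenly across the switches, as described above. The function names (`build_links`, `links_per_gpu`, `links_per_switch`, `per_switch_share`) are hypothetical and exist only for illustration.

```python
from collections import Counter
from fractions import Fraction

NUM_GPUS = 8      # GPUs on the single baseboard in this system
NUM_SWITCHES = 6  # NVSwitches on the same baseboard


def build_links(num_gpus=NUM_GPUS, num_switches=NUM_SWITCHES):
    """Model the fabric as (gpu, switch) pairs: one link per GPU per switch."""
    return {(g, s) for g in range(num_gpus) for s in range(num_switches)}


def links_per_gpu(links):
    """Count how many links each GPU has."""
    return Counter(g for g, _ in links)


def links_per_switch(links):
    """Count how many GPU-facing links each switch has."""
    return Counter(s for _, s in links)


def per_switch_share(links, src, dst):
    """Fraction of a src->dst transfer per switch, assuming even striping
    over every switch that both GPUs are attached to (an assumption)."""
    shared = sorted(s for g, s in links if g == src and (dst, s) in links)
    return {s: Fraction(1, len(shared)) for s in shared}


if __name__ == "__main__":
    links = build_links()
    print("links per GPU:", dict(links_per_gpu(links)))
    print("links per switch:", dict(links_per_switch(links)))
    print("share per switch, GPU0 -> GPU7:", per_switch_share(links, 0, 7))
```

In this model, 8 GPUs with 6 links each and 6 switches with 8 links each describe the same 48 links, which is why each switch ends up carrying one sixth of a GPU-to-GPU transfer.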

On a 16x GPU HGX-2 or DGX-2 system, you would see more of the switch ports utilized to uplink to the switches on the other GPU baseboard via the bridges.

The addition of those switches makes this a significantly more robust architecture than the direct-attach NVLink we find on DGX-1/HGX-1 class systems.

Next, we are going to look at the management followed by some of the background behind why we are seeing this type of solution.

That’s a nice (and I bet really expensive) server for AI workloads!

The idle consumption of the V100s, as shown in the nvidia-smi terminal, is a bit higher than what I’d have expected. It seems weird that the cards stay at the P0 power state (max freq.). In my experience (which is not with V100s, to be fair), just about half a minute after setting the persistence mode to on, the power state reaches P8 and the consumption is way lower (~10W). It may very well be the default power management for these cards, IDK. I don’t think that’s a concern for any purchaser of that server, though, since I don’t think they will keep it idling for just a second…

Thank you for the great review Patrick! Is there any chance that you’d at some point be able to test some non-standard AI accelerators such as Groq’s tensor streaming processor, Habana’s Gaudi etc. in the same fashion?

What are the advantages (if any) of this Inspur server vs the Supermicro 9029GP-TNVRT (which is expandable to 16 GPUs and even then costs under $250K fully configured, and is priced under $150K with 8 V100 32GB SXM3 GPUs, RAM, NVMe etc.)?

While usually 4U is much better than 10U I don't think it's really important in this case.

Igor – different companies support each system, so you would next look to the software and services portfolio beyond the box itself. You are right that this would be 8 GPUs in 4U, while you are discussing 8 GPUs in 10U for the half-configured Supermicro box. Inspur’s alternative to the Supermicro 9029GP-TNVRT is the 16x GPU AGX-5, which fits in 8U if you wanted 16x GPUs in an HGX-2 platform in a denser configuration.

L.P. – hopefully, that will start late this year.