Inspur NF5488M5 Power Consumption

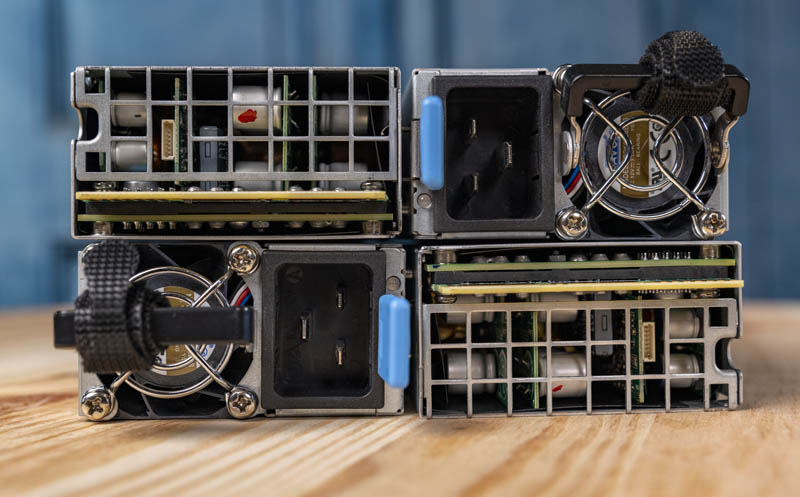

Our Inspur NF5488M5 test server used a quad 3kW power supply configuration. The PSUs are 80Plus Platinum level units.

- Ubuntu OS Idle Power Consumption: 1.1kW

- Average load during AI/ ML workloads: 4.6kW

- Maximum Observed: 5.1kW

Note these results were taken using two 208V Schneider Electric / APC PDUs at 17.7C and 72% RH. Our testing window shown here had a +/- 0.3C and +/- 2% RH variance.

We actually split the load across two PDUs just to ensure we did not go over on our testing. Realistically, we found that this can be powered by a 208V 30A circuit, even if it is only one per rack (or two using two PDUs and non-redundant power.)

Of course, most deployments will see 18-60kW racks with these servers. However, one of the major benefits of a system like this versus a 16x GPU HGX-2 system is the fact that it uses less power so it can fit into more deployment scenarios.

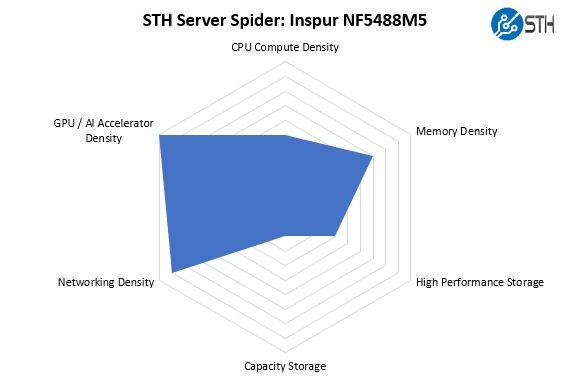

STH Server Spider Inspur NF5488M5

In the second half of 2018, we introduced the STH Server Spider as a quick reference to where a server system’s aptitude lies. Our goal is to start giving a quick visual depiction of the types of parameters that a server is targeted at.

The Inspur Systems NF5488M5 is a solution designed around one purpose: keeping eight SXM3 Tesla V100’s fed with data. To that end, it supports up to 2.8-3.2kW of GPUs plus the in-chassis and external fabrics to keep data in GPU pipelines.

Final Words

There are a few key takeaways from our Inspur NF5488M5 review. This is a well-built server from a top AI/ deep learning server vendor. As such, the fit and finish are great and it is designed to be a high-end solution.

We see this server as a tool that AI and deep learning researchers can use to get the benefits of current Tesla V100 SXM3 and “Volta Next” GPUs and NVSwitch fabric without having to move up to a larger and more power-hungry sixteen GPU HGX-2 platform. With the SXM3 modules, we get the benefits of higher TDPs and the NVSwitch fabric to provide better performance than we would from traditional PCIe servers. The more an application can use the NVSwitch and NVLink fabric, the better the performance gains will be.

In terms of pricing, our suggestion here is to reach out to a sales rep. Our sense is that it will rightfully fall between that of a HGX-1 platform and a HGX-2 platform given the capabilities we are seeing. Although NVIDIA sells the DGX-2’s for $399K, we see HGX-2 platforms on the market for well under $300K which puts a ceiling in terms of pricing for the NF5488M5.

Beyond the hardware, there is much more that goes into a solution like this. That includes the storage, Infiniband and other networking, along with all of the clustering solutions, data science frameworks and tools. While we focused on the physical server here, we do want our readers to understand this is part of a much larger solution framework.

Still, for those who want to know more about the AI hardware that is available, this is a top-tier, if not the top-end 8x GPU solution on the market for the NVIDIA Tesla V100 generation.

That’s a nice (and I bet really expensive) server for AI workloads!

The idle consumption, as shown in the nvidia-smi terminal, of the V100s is a bit higher than what I’d have expected. It seems weird that the cards stay at the p0 power state (max freq.). In my experience (which is not with v100s, to be fair), just about half a minute after setting the persistence mode to on, the power state reaches p8 and the consumption is way lower (~10W). It may very well be the default power management for these cards, IDK. I don’t think that’s a concern for any purchaser of that server, though, since I don’t think they will keep it idling for just a second…

Thank you for the great review Patrick! Is there any chance that you’d at some point be able to test some non-standard AI accelerators such as Groq’s tensor streaming processor, Habana’s Gaudi etc. in the same fashion?

What’re the advantages (if any) of this Inspur server vs Supermicro 9029GP-TNVRT (which is expandable to 16GPU and even then cost under 250K$ fully configured – and price is <150K$ with 8 V100 32GB SXM3 GPUs, RAM, NVMe etc)?

While usually 4U is much better than 10U I don't think it's really important in this case.

Igor – different companies supporting so you would next look to the software and services portfolio beyond the box itself. You are right that this would be 8 GPU in 4U while you are discussing 8 GPU in 10U for the Supermicro half-configured box. Inspur’s alternative to the Supermicro 9029GP-TNVRT is the 16x GPU AGX-5 which fits in 8U if you wanted 16x GPUs in a HGX-2 platform in a denser configuration.

L.P. – hopefully, that will start late this year.