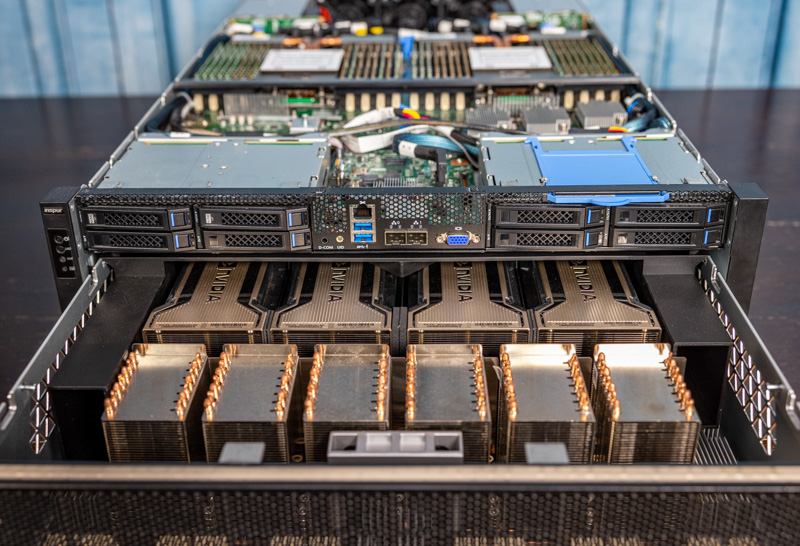

Inspur NF5488A5 GPU Baseboard Assembly

The GPU baseboard assembly slides out from the main 4U server chassis. In theory, one could upgrade GPUs without having to take apart the entire server. That is uncommon, but there have been upgrade programs during memory size transitions (e.g. 40GB to 80GB). Also, this is an immensely complex assembly, so if something fails, having easy access is important.

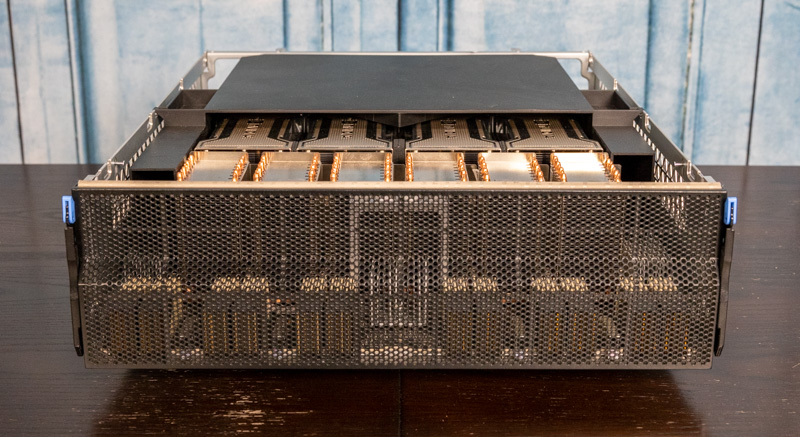

Something slightly interesting here is that the black airflow guide differs from the previous generation's: it appears shorter. That is due to a fundamental change in the GPU cooling design.

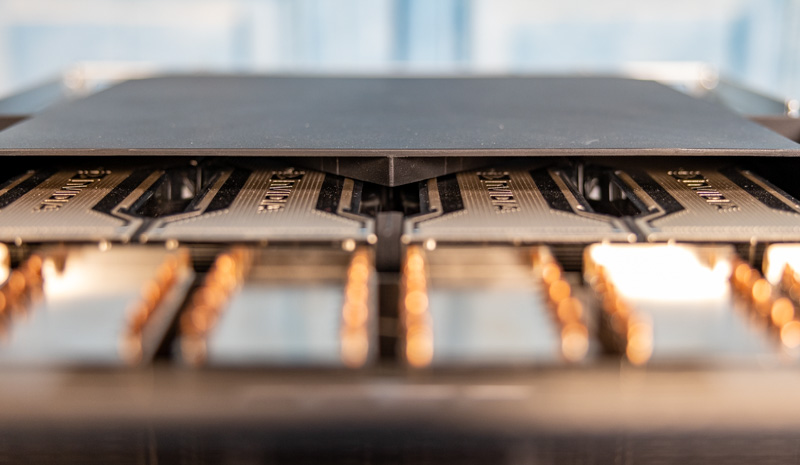

This is a small detail, but the cover actually has a channel directing airflow away from the middle. Although NVIDIA makes the GPU assembly, Inspur has to design the cooling, chassis, and data/power delivery even for this portion. NVIDIA made a small change with the A100, which meant that Inspur had to re-design this part.

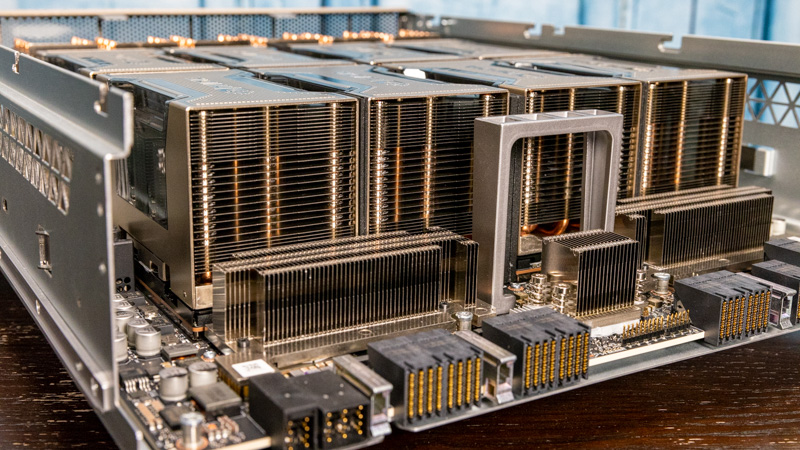

Here is a look at the change NVIDIA made. One can see that the heatsinks now have a clear plastic airflow guide. There is also material between the GPUs keeping air out of the area between them. These are small details, but they are worth highlighting as differences. Although the V100 and A100 may look similar, there was certainly a generational jump in the fit and finish here.

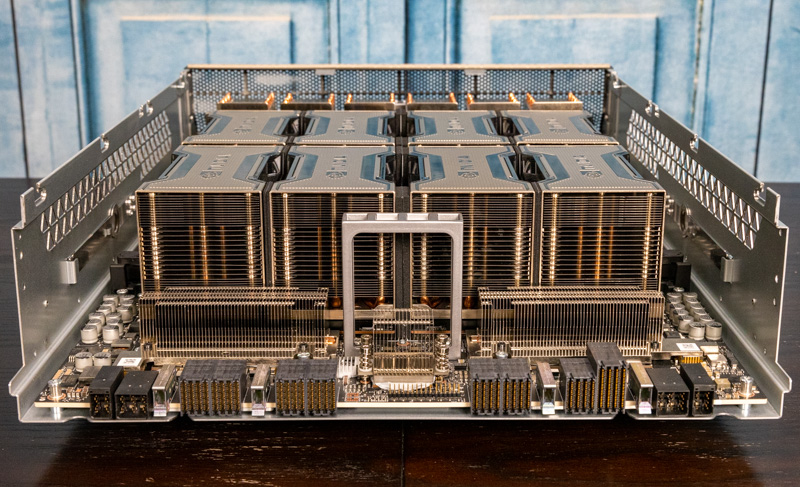

If one recalls the PCIe switch baseboard, one can see at one end the high-density PCIe and power connectors feeding the HGX A100 8 GPU Delta assembly.

Here is a view with the airflow guide removed.

Behind these connectors, we see heatsinks that have been upgraded on all of the components, not just the GPUs.

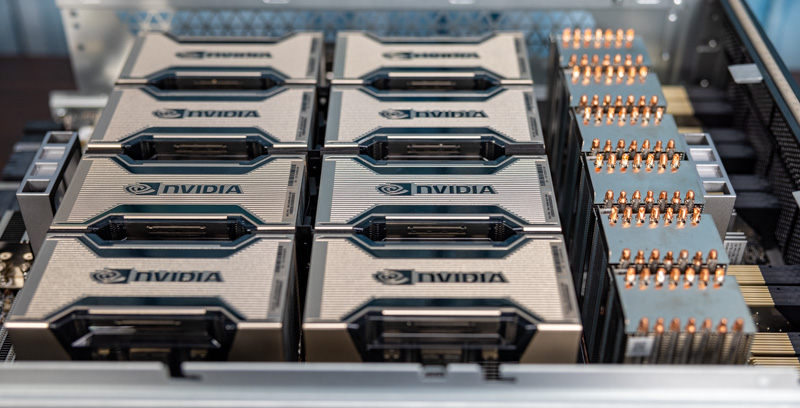

Next, we have the set of 8x NVIDIA A100 SXM4 GPUs. Inspur supports 40GB and 80GB models of the A100.

Generally, 400W A100s can be cooled like this, but the cooling has been upgraded significantly since the 350W V100s we saw in the M5 version. Inspur has 500W A100s in the A5 platform as well, but that requires another level of power and cooling.
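To put those per-GPU figures in context, here is a rough back-of-the-envelope sketch of the GPU power budget for an 8-GPU baseboard. It uses only the 350W, 400W, and 500W figures quoted above; the function and dictionary names are ours for illustration, and the totals ignore the NVSwitches, fans, CPUs, and power conversion losses.

```python
# Per-GPU power figures quoted in the article (watts).
GPU_TDP_WATTS = {
    "V100 (M5 generation)": 350,
    "A100 400W": 400,
    "A100 500W": 500,
}

GPUS_PER_BASEBOARD = 8  # the 8x SXM4 baseboard discussed here


def baseboard_gpu_power(tdp_watts: int, gpu_count: int = GPUS_PER_BASEBOARD) -> int:
    """Total GPU power for one baseboard.

    Hypothetical helper: counts GPUs only, not NVSwitches, fans, or CPUs.
    """
    return tdp_watts * gpu_count


baseline = baseboard_gpu_power(GPU_TDP_WATTS["V100 (M5 generation)"])
for name, tdp in GPU_TDP_WATTS.items():
    total = baseboard_gpu_power(tdp)
    # Show the total and the difference versus the V100 baseboard.
    print(f"{name}: {total} W ({total - baseline:+d} W vs V100)")
```

By this simple arithmetic, the GPUs alone go from 2800W to 3200W with 400W A100s, and to 4000W with the 500W parts, which helps explain why the latter is treated as a separate class of cooling.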

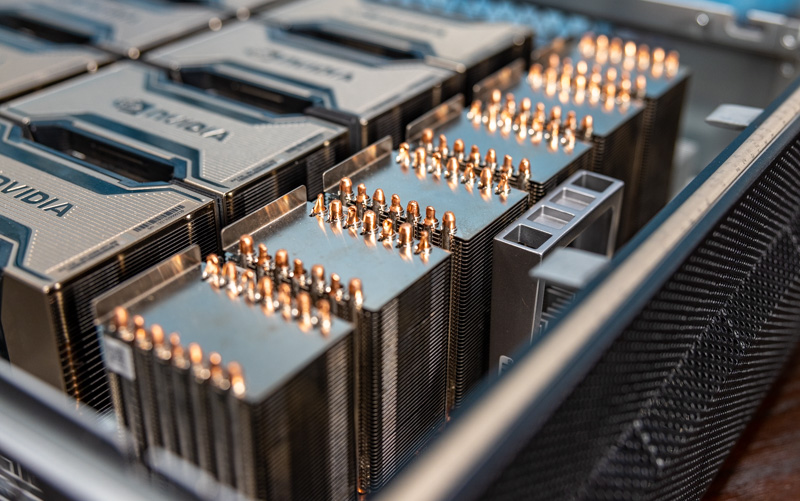

On the other side of the GPUs we have the NVSwitch array. One can see the heatsinks are significantly updated here. In the M5 review, we saw that the V100 generation heatsinks were not full towers like these and only had three heat pipes each. Now, the heatsinks are taller with more fins and fourteen copper heat pipe ends protruding from the top.

NVSwitch is important because it creates a switched fabric allowing for more bandwidth to each GPU. The A100 GPUs have more NVLinks than the V100 generation, and those are used to add more fabric bandwidth.

In the “Delta” platform, each NVIDIA A100 GPU has its NVLinks connected to the switching fabric. This is a big differentiator over PCIe A100s and “Redstone” platform A100s. PCIe-based GPUs must use bridges to get some GPU-to-GPU connectivity over NVLink. In the 4x GPU Redstone platform, each GPU is connected to the three other GPUs directly. Scaling up to NVSwitch provides more bandwidth, but it does add cost and power consumption. That is why we see it on these higher-end platforms, but not necessarily lower-end A100 platforms.
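As a rough illustration of the direct-connect versus switched difference, here is a minimal sketch of peak GPU-to-GPU bandwidth in each topology. The link count (12 NVLinks per A100) and per-link rate (50GB/s bidirectional) are NVIDIA's published A100 figures rather than numbers from this review, and the even split of links across peers and the function names are our assumptions for illustration.

```python
# Assumptions (NVIDIA's published A100 figures, not from this review):
NVLINKS_PER_A100 = 12  # NVLink 3 links per A100 SXM4 GPU
GB_PER_LINK = 50       # GB/s bidirectional per link


def direct_mesh_pair_bandwidth(gpu_count: int,
                               links_per_gpu: int = NVLINKS_PER_A100,
                               gb_per_link: int = GB_PER_LINK) -> int:
    """Peak GB/s between any two GPUs when every GPU is wired directly
    to every other GPU, assuming links are split evenly across peers."""
    peers = gpu_count - 1
    if peers < 1 or links_per_gpu % peers != 0:
        raise ValueError("links cannot be split evenly across peers")
    return (links_per_gpu // peers) * gb_per_link


def switched_pair_bandwidth(links_per_gpu: int = NVLINKS_PER_A100,
                            gb_per_link: int = GB_PER_LINK) -> int:
    """Peak GB/s between any two GPUs when all links go to the switch
    fabric, so one pair can use every link of both GPUs."""
    return links_per_gpu * gb_per_link


print("Redstone-style 4-GPU mesh:", direct_mesh_pair_bandwidth(4), "GB/s per pair")
print("Delta-style switched fabric:", switched_pair_bandwidth(), "GB/s per pair")
```

Under those assumptions, a 4-GPU direct mesh works out to 200GB/s between any pair, while the switched fabric lets a single pair reach 600GB/s. Calling `direct_mesh_pair_bandwidth(8)` raises a `ValueError` because 12 links cannot be divided evenly among seven peers, which hints at why an 8-GPU platform leans on NVSwitch. These are peak figures; a GPU talking to several peers at once shares its links in either design.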

Overall, the key theme is that cooling has been upgraded significantly all around the system to support the new NVIDIA A100 features.

Next, we wanted to get into a few additional features along with performance.

We upgraded from the DGX-2 (initially with Volta, then Volta Next) to a DGX A100 with 16 A100 GPUs back in January. Our system is Nvidia branded, not Inspur. Next step is to replace the inferior AMDs with some American made Xeon Ice Lake SP.

DGX-2 came after the Pascal based DGX-1. So has nothing to do with 8 or 16 GPUs.

Performance is not even remotely comparable. A FEA sim that took 63 min on a 16 GPU Volta Next DGX-2 is now done in 4 minutes, and with a 50% increase in fidelity / complexity it only takes a minute longer than that. 4-5 min is near real time, allowing our iterative engineering process to fully utilize the engineer’s intuition: a 5 min penalty vs 63-64 min.

DGX-1 was available with V100s. DGX-2 is entirely to do with doubling the GPU count.

Nvidia ditched Intel for failing to deliver on multiple levels, and will likely go ARM in a year or two.

The HGX platform can come in 4-8-16 GPU configs, as Patrick showed the connectors on the board for linking a 2nd backplane above. Ice Lake A100 servers don’t make much sense; they simply don’t have enough PCIe lanes or cores to compete at the 16 GPU level.

There will be a couple of 8x A100 Ice Lake servers coming out but no 16.