Our Inspur Systems NF5468M5 review focuses on how this 4U server delivers a massive amount of computing power. AI and deep learning are key growth vectors for Inspur, as we saw in our interview with Liu Jun, AVP and GM of AI and HPC for Inspur. During that interview, we were already testing the Inspur Systems NF5468M5, which puts eight NVIDIA Tesla V100 32GB PCIe cards in a single system. While NVIDIA has been pushing SXM2 Tesla GPUs (Inspur sells systems with these as well), cloud providers are still deploying PCIe GPUs in large numbers. Since we have previously reviewed several other 8-10x GPU systems, we wanted to see how the Inspur solution performs.

Inspur Systems NF5468M5 Hardware Overview

Looking at the Inspur Systems NF5468M5, one can see that something is different. The 4U chassis is heavy, albeit not especially deep. If your team racks these units, we suggest using the built-in handles and a server lift so that the heavy chassis can be positioned safely.

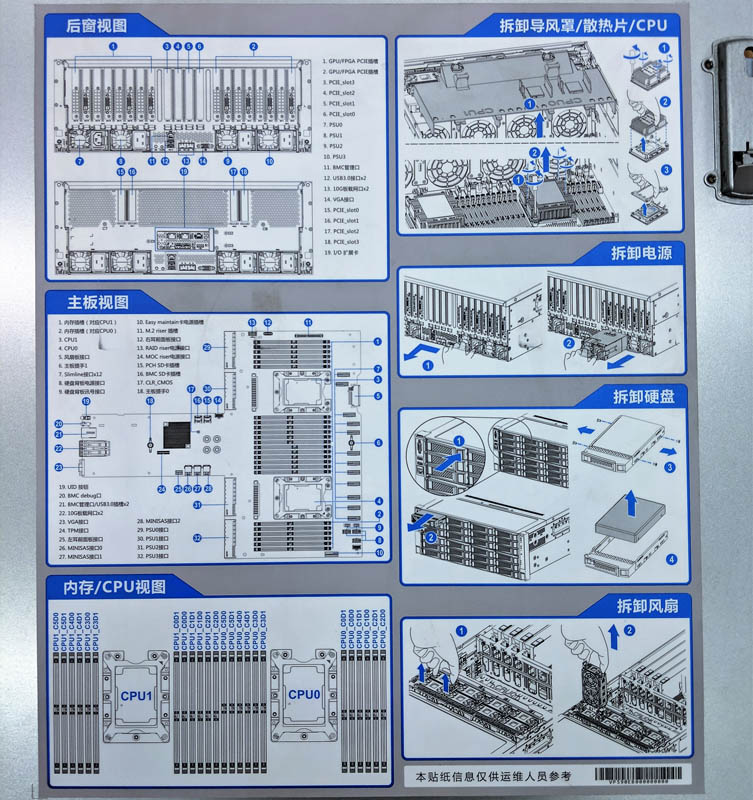

Opening the chassis, one sees a service label similar to those other top-tier server vendors use. Our review unit was sent from a data center in China, so it has Chinese and English labeling. Still, the diagrams are clear and describe most of the major subsystems.

The top cover is secured by a latching mechanism, which we prefer to the screw-secured covers we have seen from other vendors. Furthermore, the top cover can be removed while the Inspur Systems NF5468M5 is still installed in its rack. Some competing systems cannot have their top covers removed for servicing while the server is on its rails. These are small quality touches that the NF5468M5 has and some of its competitors lack.

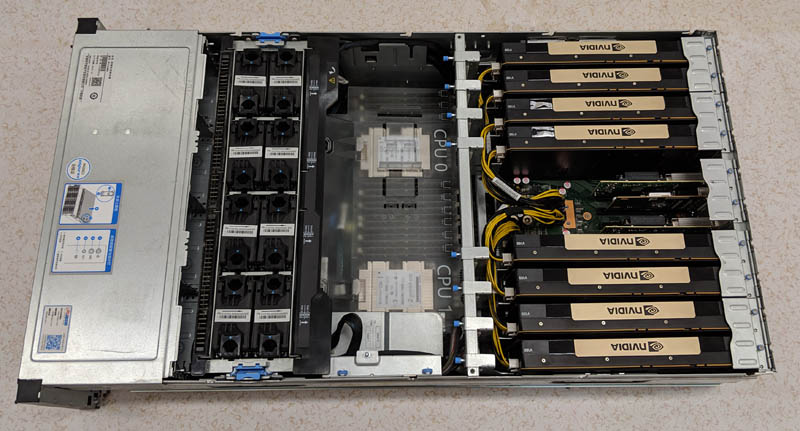

Looking at the system, we are going to start with its highest-value section, the GPU PCB, before moving on to the sections toward the front.

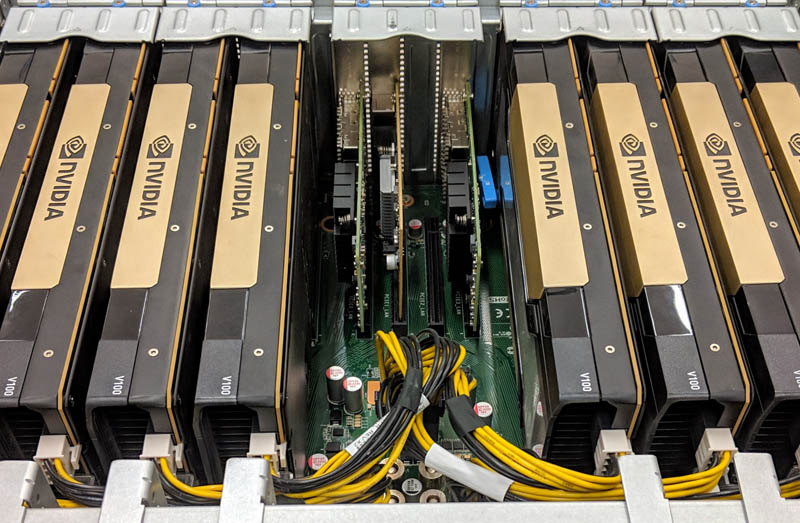

Here is the shot many will want to see: eight NVIDIA Tesla V100 32GB GPUs installed, with four PCIe slots available for networking. System topology gets its own section later in this review, so here we focus on the hardware.
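As a quick back-of-the-envelope check, the sketch below totals the GPU memory in this configuration. It is a minimal illustration that assumes eight identical 32GB cards, and the names in it are ours. Note that this memory remains spread across eight separate devices rather than forming one shared pool, so a workload still has to fit on, or be partitioned across, individual GPUs.

```python
# Back-of-the-envelope GPU memory total for the review configuration.
GPU_COUNT = 8        # Tesla V100 cards installed in the review unit
MEM_PER_GPU_GB = 32  # Tesla V100 32GB PCIe variant


def total_gpu_memory_gb(count: int, per_gpu_gb: int) -> int:
    """Aggregate memory of `count` identical GPUs in GB (per-device, not one shared pool)."""
    return count * per_gpu_gb


print(f"{total_gpu_memory_gb(GPU_COUNT, MEM_PER_GPU_GB)} GB across {GPU_COUNT} GPUs")
```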

One item we wanted to highlight is GPU power distribution. The Inspur Systems NF5468M5 brings power from the bottom level of the system to the PCIe motherboard and then to the GPUs. In our DeepLearning12 overview, we showed an example of power cables routed directly from the bottom CPU motherboard to the GPUs. We like Inspur’s design direction here.

With this design, the Inspur Systems NF5468M5 uses a neat array of custom-length cables that keep the eight NVIDIA Tesla V100 32GB cards fed with power while minimizing the cabling that interferes with airflow.

The GPUs themselves are mounted on small brackets, which keep them secure during installation and transport while also making the system very easy to service.

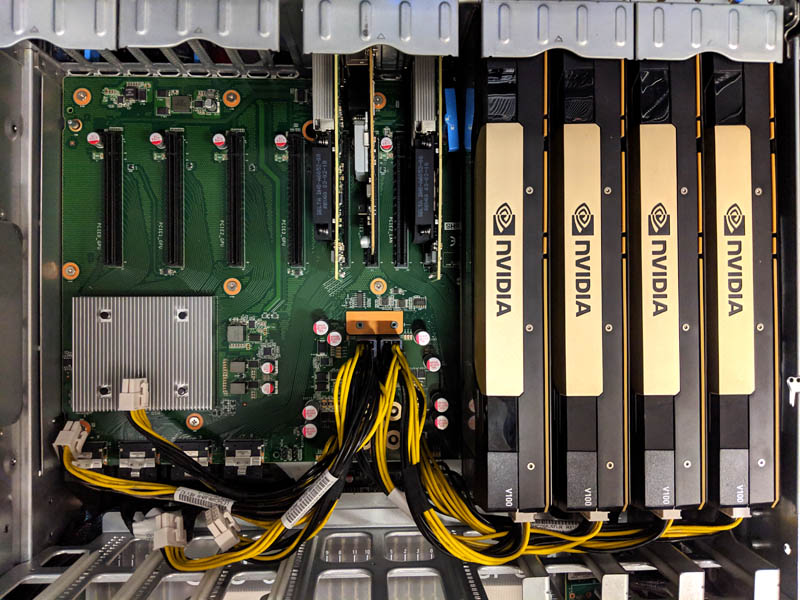

Between the GPUs are four PCIe x16 slots used for NICs. In this picture, three of the four slots are filled: two Mellanox ConnectX-4 Lx dual-port 25GbE NICs with an Intel i350 NIC between them.

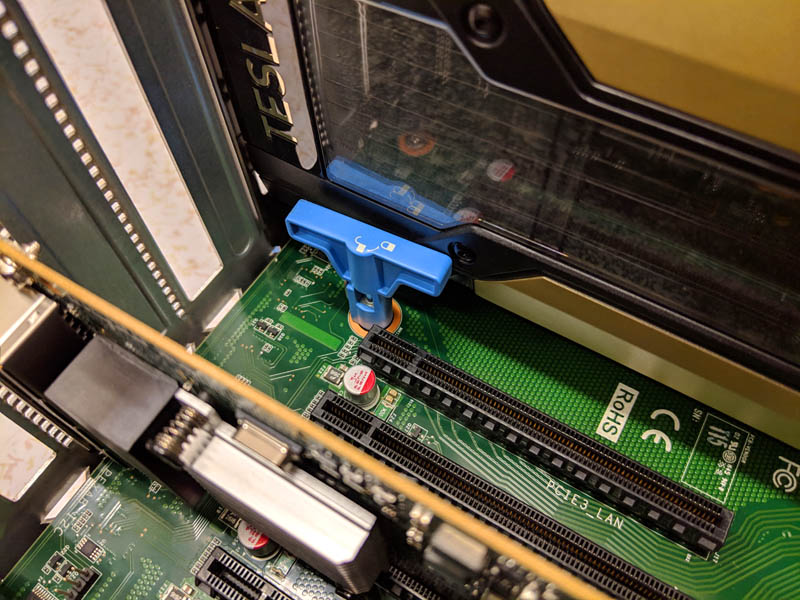

When we swapped the NICs for Mellanox ConnectX-5 VPI cards (100GbE / 100Gbps EDR InfiniBand), we also took a shot showing the PCB under the GPUs. Each PCIe x16 slot has double-width spacing. We can also see the PLX switch below.
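A rough calculation shows why x16 slots suit these 100Gbps cards. This sketch assumes the slots run at PCIe 3.0 (8 GT/s per lane with 128b/130b encoding) and ignores packet and protocol overhead, so real-world throughput will be somewhat lower. Under those assumptions, a x16 slot comfortably covers one 100Gbps port but cannot feed two ports at full line rate at the same time. The function names are illustrative only.

```python
# Rough PCIe 3.0 slot bandwidth vs. NIC line rate (assumes Gen3; ignores TLP/protocol overhead).
GEN3_GT_PER_S = 8.0      # PCIe 3.0 signaling rate per lane, in GT/s
GEN3_ENCODING = 128 / 130  # 128b/130b line encoding efficiency


def pcie_gen3_gbytes_per_s(lanes: int) -> float:
    """One-direction PCIe 3.0 link bandwidth in GB/s after line encoding."""
    return GEN3_GT_PER_S * GEN3_ENCODING * lanes / 8  # divide by 8: bits -> bytes


def nic_gbytes_per_s(gbits: float) -> float:
    """Convert a NIC port's line rate from Gbps to GB/s."""
    return gbits / 8


slot = pcie_gen3_gbytes_per_s(16)  # x16 slot
port = nic_gbytes_per_s(100)       # one 100GbE / EDR port
print(f"x16 Gen3 slot: {slot:.2f} GB/s, one 100Gbps port: {port:.2f} GB/s, "
      f"headroom: {slot - port:.2f} GB/s")
```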

We also wanted to draw attention to a feature of the Inspur Systems NF5468M5 that might otherwise be overlooked: the PCIe switch motherboard is designed to be easily serviceable. A large locking handle keeps the board in place, whereas some competing systems use screws. Inspur certainly shows a more mature level of serviceability than some of its competition.

That attention extends even to how the PCIe cards are secured. Inspur uses a flange mechanism that locks sets of four PCIe slots in place with only two screws while controlling the pressure applied. In competing systems such as DeepLearning11, one needs to individually install up to 20 screws that are prone to falling between cards. Inspur’s solution removes that variable during maintenance, which shows how mature the product is.

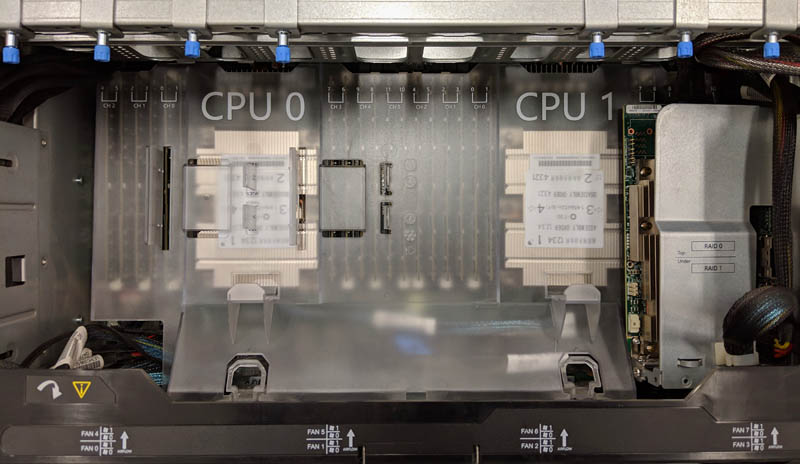

The CPU area is very straightforward, with two CPUs and support for up to 24 DIMMs (12 per CPU). Our pictured test configuration runs 24x DDR4-2666 32GB RDIMMs. The CPUs sit under an air guide that directs airflow over the CPUs and memory.
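The memory arithmetic for this configuration is simple, but it also shows how the DIMMs map onto memory channels. The channel count is an assumption on our part: first- and second-generation Intel Xeon Scalable CPUs, which pair with the Lewisburg PCH, have six memory channels per socket, so 12 DIMMs per CPU works out to two DIMMs per channel with every channel populated evenly. The helper below is a hypothetical sketch, not an Inspur tool.

```python
# Memory capacity and DIMMs-per-channel math for the tested configuration.
SOCKETS = 2
DIMMS_PER_CPU = 12
DIMM_SIZE_GB = 32
CHANNELS_PER_CPU = 6  # assumption: Intel Xeon Scalable memory controller, 6 channels per socket


def memory_config(sockets: int, dimms_per_cpu: int, dimm_gb: int, channels_per_cpu: int) -> dict:
    """Return total DIMM count, total capacity in GB, and DIMMs per channel."""
    if dimms_per_cpu % channels_per_cpu:
        raise ValueError("DIMMs are not spread evenly across memory channels")
    total_dimms = sockets * dimms_per_cpu
    return {
        "total_dimms": total_dimms,
        "total_gb": total_dimms * dimm_gb,
        "dimms_per_channel": dimms_per_cpu // channels_per_cpu,
    }


print(memory_config(SOCKETS, DIMMS_PER_CPU, DIMM_SIZE_GB, CHANNELS_PER_CPU))
```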

We wanted to pivot to the storage, which is intriguing. Just above the CPU and RAM area is a cage for two low-profile PCIe SAS adapters. Here, one can install up to two RAID cards or HBAs inside the chassis to connect the front bays. This is a great design. Many competing solutions require SAS cards to be installed near the GPUs, which increases cable lengths and uses valuable rear I/O slots to service front storage. Inspur’s approach also gives customers a choice of HBAs and RAID controllers. The CPU air shroud includes mounting points for BBUs.

A quick note here: the labels read “Top/ Under” and “RAID 0” and “RAID 1.” Top/Bottom and RAID Card 0 and RAID Card 1 would make the labels clearer.

Inspur offers a number of front storage options, most of which revolve around sets of 3.5″ bays. The Inspur Systems NF5468M5 can have up to 24x 3.5″ front hot-swap bays. On the rack ears, Inspur has standard I/O for management, along with buttons and status lights.

Our particular configuration came from a system used in the field. It had twelve hot-swap 3.5″ bays: the top row is for NVMe drives, and the bottom two rows are for SAS/SATA.

The Inspur Systems NF5468M5 drive trays accept 3.5″ drives, or 2.5″ drives using the bottom mounting points. Here are examples of NVMe and SATA drives installed in the 3.5″ drive trays.

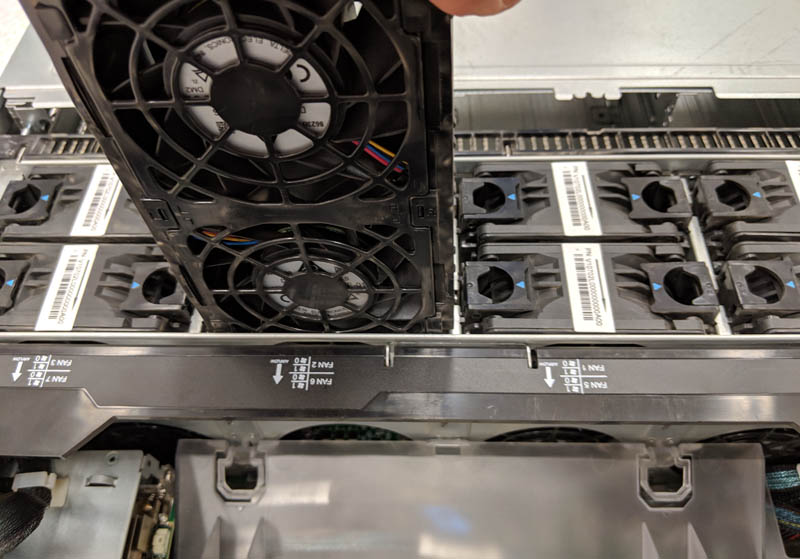

Airflow is a primary design consideration in GPU systems. The Inspur Systems NF5468M5 uses stacked sets of redundant fans. Each fan carrier holds two fans, and the carriers are arranged in two rows of four. This yields a total of sixteen large chassis fans moving a lot of air through the chassis.
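The fan count follows directly from the carrier layout described above; this short sketch simply multiplies it out. The constant and function names are illustrative only.

```python
# Fan count from the carrier layout: 2 fans per carrier, carriers in 2 rows x 4 columns.
FANS_PER_CARRIER = 2
CARRIER_ROWS = 2
CARRIER_COLUMNS = 4


def fan_layout(per_carrier: int, rows: int, columns: int) -> tuple:
    """Return (number of fan carriers, total number of fans)."""
    carriers = rows * columns
    return carriers, carriers * per_carrier


carriers, fans = fan_layout(FANS_PER_CARRIER, CARRIER_ROWS, CARRIER_COLUMNS)
print(f"{carriers} carriers, {fans} fans")
```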

Power is provided by four 2.2kW Delta 80 PLUS Platinum power supplies. Based on the power consumption we measured, the system can operate on only two of them.
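The redundancy math behind running on two supplies can be sketched as follows. This is a minimal illustration, not a sizing tool: it assumes each supply delivers its full 2.2kW nameplate rating, which may depend on input voltage (check the PSU datasheet), and the 3,000W load in the example is a hypothetical figure chosen for illustration, not one of our measurements.

```python
import math

PSU_COUNT = 4        # power supplies in the review unit
PSU_RATING_W = 2200  # nameplate rating per supply; assumed available at the input voltage used


def psus_needed(load_w: float, rating_w: float = PSU_RATING_W) -> int:
    """Minimum number of supplies whose combined rating covers load_w."""
    return math.ceil(load_w / rating_w)


def redundancy(load_w: float, count: int = PSU_COUNT, rating_w: float = PSU_RATING_W) -> str:
    """Describe the N+M redundancy available for a given load."""
    needed = psus_needed(load_w, rating_w)
    if needed > count:
        raise ValueError(f"{load_w} W exceeds {count} x {rating_w} W")
    return f"{needed}+{count - needed}"


# Hypothetical load for illustration only, not a measured figure.
print(redundancy(3000))
```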

For context, here is the rear of the unit. The PCIe motherboard with GPUs and NICs occupies the top 3U of rack space. The bottom 1U holds the four power supplies and the standard rear I/O: a management Ethernet port, a VGA port, and two USB 3.0 ports.

One feature we really liked was the SFP+ 10GbE networking via the Lewisburg PCH’s Intel X722 NIC. Our test system had a 1GbE add-in card for lower-speed provisioning networks, but the built-in 10GbE was a nice touch. Because the NVIDIA GPUs give these systems high average selling prices, including 10GbE adds relatively little to the overall cost.

Next, we are going to take a look at the Inspur Systems NF5468M5 test configuration and system topology, before continuing with our review.
