Recently I did our Mellanox ConnectX-5 VPI 100GbE and EDR InfiniBand Review which focused on the company’s 100Gbps generation. There is another card that we use in the lab often that I wanted to do a mini-review of, the Mellanox ConnectX-4 Lx. The ConnectX-4 Lx was one of the first 25GbE adapters and for most of our readers who are looking at networking cards for virtualization or storage, the ConnectX-4 Lx is one we would suggest looking into. In this review, we are going to show you why.

Mellanox ConnectX-4 Lx Hardware Overview

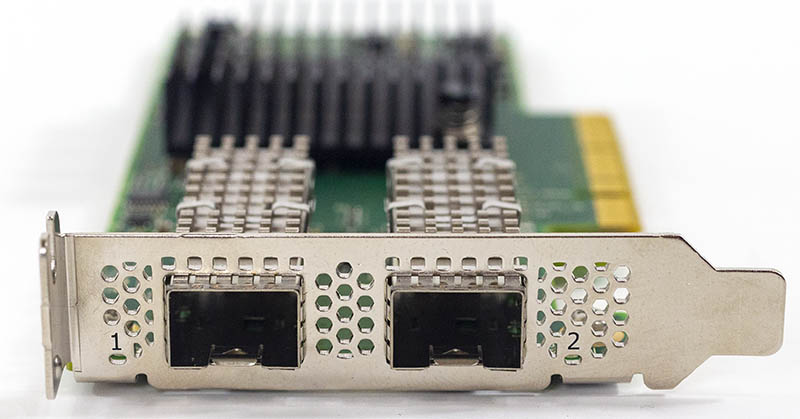

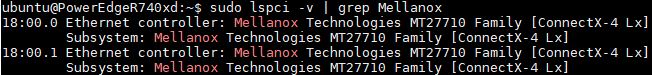

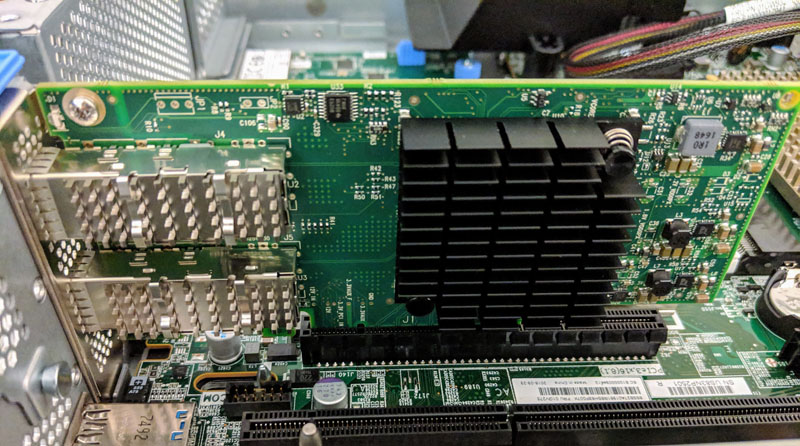

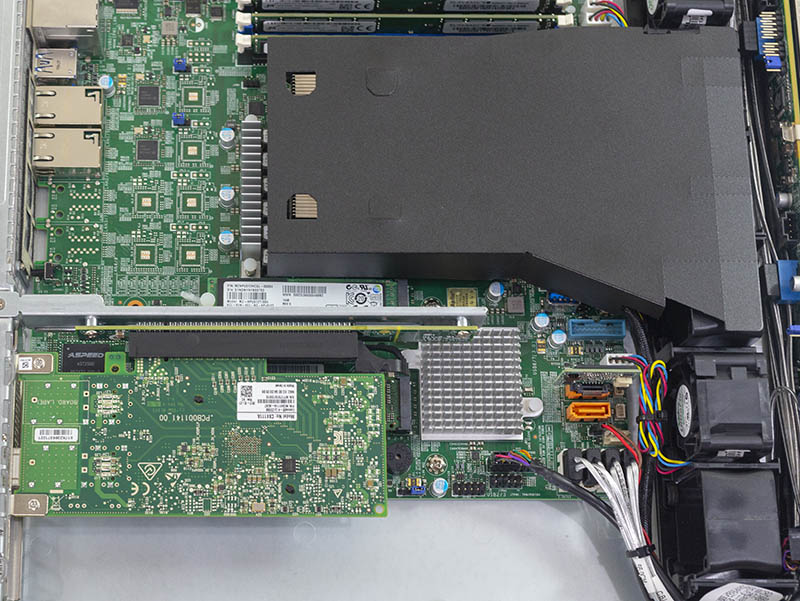

In our review, we are using the Mellanox ConnectX-4 Lx dual-port 25GbE card. Specifically, we have a model called the Mellanox MCX4121A-ACAT or CX4121A for short. The first 4 in the model number denotes ConnectX-4, and the 2 in the model number shows dual port. The card slots into a PCIe 3.0 x8 slot, which provides enough bandwidth to run the card at full speed.

One of the key differences for 25GbE is that the new signaling standard also means new optics and cabling versus 10GbE in the form of SFP28. In the early days of 25GbE, there were a few backward compatibility headaches. Today, you can generally run an SFP+ 10GbE network into an SFP28 port.

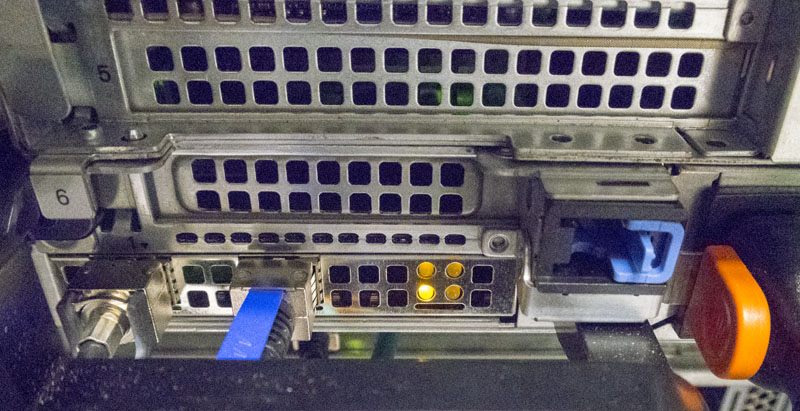

That was not necessarily the case when we did our Dell EMC PowerEdge R740xd Review using a Mellanox ConnectX-4 Lx card.

Since that review, you have likely seen these cards in a number of other STH reviews from different vendors including our Lenovo ThinkSystem SR650 2U Server Review.

We also used one in our Supermicro SYS-5019C-MR 1U Xeon E-2100 server review, which sits on the lower-end side of the server world and borders on lower-end appliances.

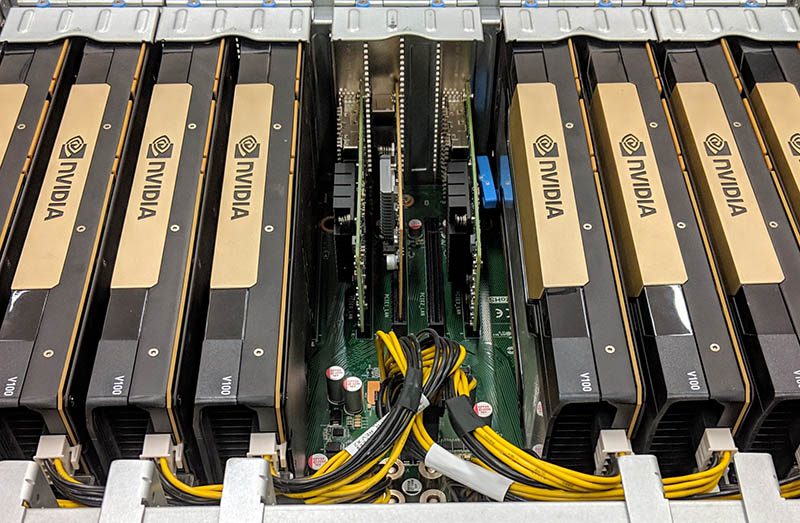

Two cards are found in our Inspur NF5468M5 server that we also tested the Mellanox ConnectX-5 cards in.

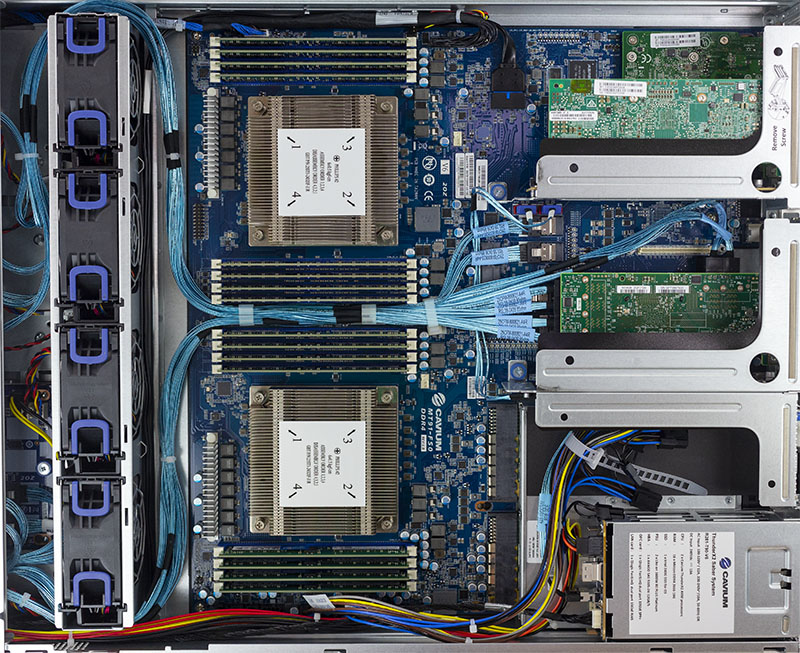

We have used the Mellanox ConnectX-4 Lx 25GbE cards in a number of servers from a number of different vendors, even with different server architectures such as the test platform we used in our Cavium ThunderX2 Review and Benchmarks a Real Arm Server Option piece.

In the server world, the Mellanox ConnectX-4 Lx has become the de facto 25GbE standard. Even NAS vendors such as Synology have adopted the Mellanox ConnectX-4 Lx as their preferred 25GbE NIC.

Mellanox ConnectX-4 Lx Versus ConnectX-4 and ConnectX-5

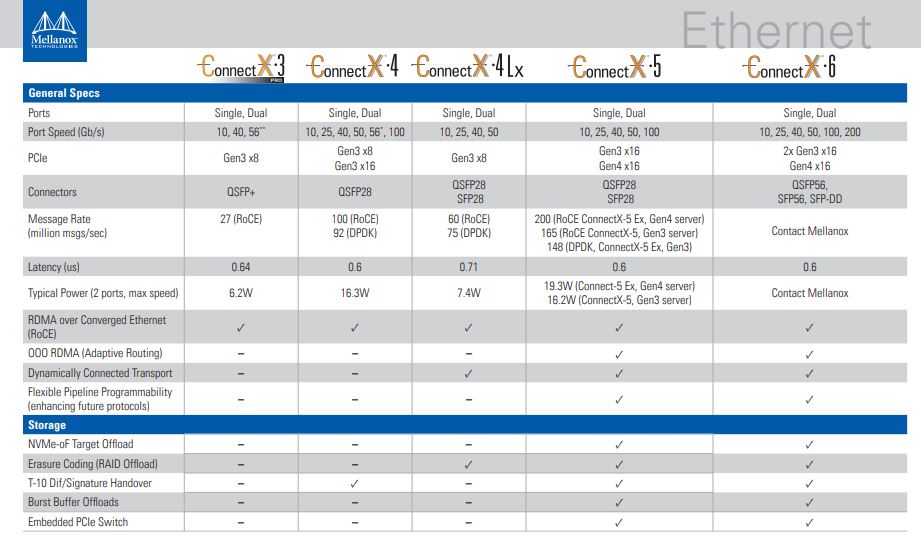

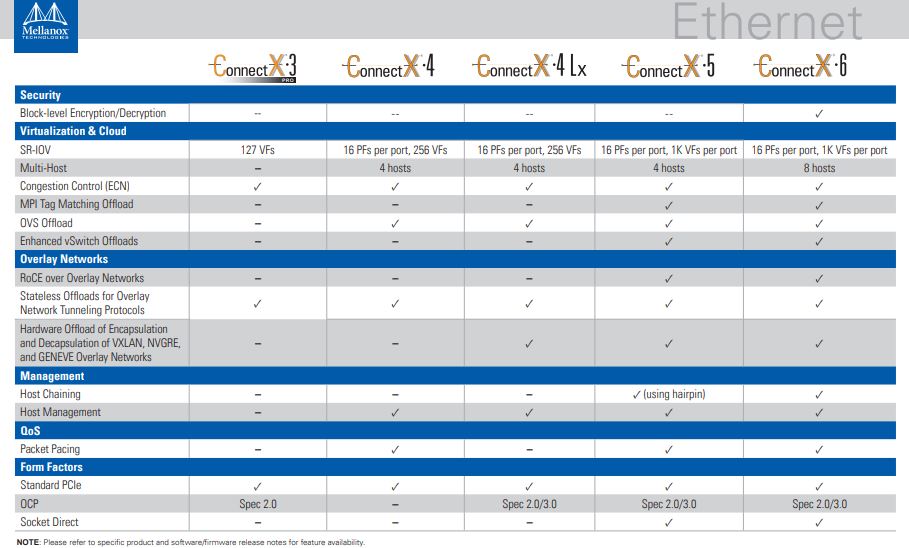

From a feature perspective, Mellanox is a major supporter of RDMA functions for InfiniBand and Ethernet as well as RoCE on the Ethernet side. SR-IOV is present.

With the Mellanox ConnectX-4 Lx generation, the company set out to make an Ethernet focused product that caters to a number of server applications. An example of this is that the ConnectX-4 Lx added erasure coding offload. This feature was not present in the ConnectX-4 generation but has been added to ConnectX-5 and ConnectX-6 lines. Likewise, the hardware encapsulation for overlay networks is another feature that has been offloaded in this generation. For most 25GbE applications, the ConnectX-4 Lx is a better choice than the ConnectX-4 and that is a deliberate design choice.

Mellanox has a handy chart on the Ethernet side that shows the generational comparison that we also had in our ConnectX-5 review:

Here is the second part:

As always, we suggest looking up specific hardware offload features for the specific part you are buying.

Mellanox ConnectX-4 Lx Performance

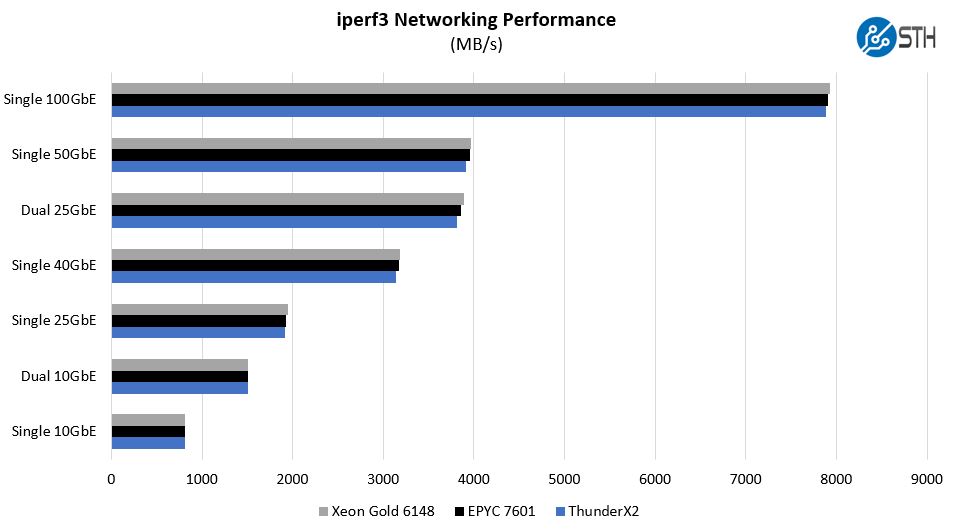

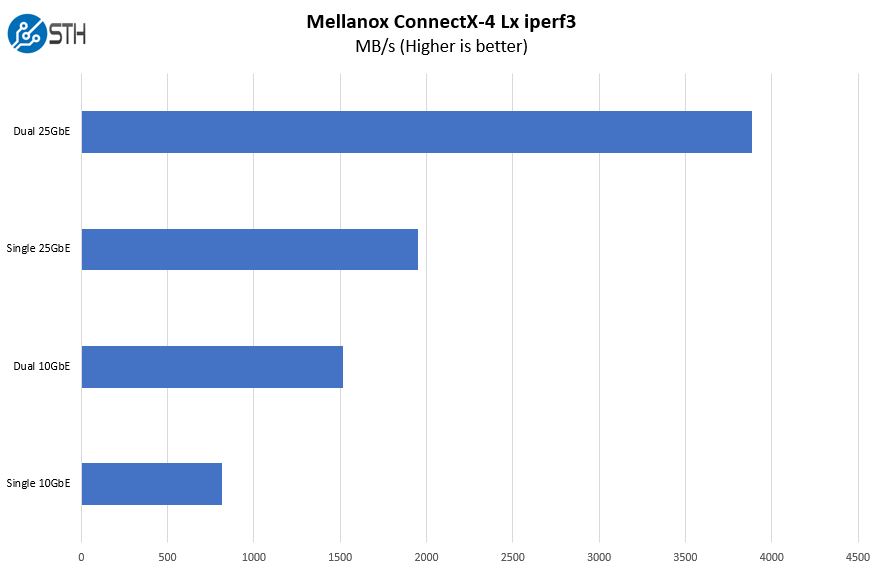

We are a heavy Ethernet shop. We actually used the Mellanox ConnectX-4 Lx cards in some of our reviews and even compared them across Intel Xeon “Skylake-SP”, AMD EPYC “Naples”, and Cavium ThunderX2 platforms.

We saw slightly better performance from the Intel Xeon Gold CPUs than from the Naples generation AMD EPYC or the Marvell/Cavium ThunderX2 chips.

Compared with 10GbE, one can see that the throughput advantage of 25GbE is enormous.

To achieve this, 25GbE is using higher signaling rates which also means that latency is vastly improved.

Another important aspect of 25GbE is that a dual-port card better saturates a PCIe 3.0 x8 slot than a single-port 40GbE card. If you have limited PCIe slots in your system or have only a single OCP networking slot, you can effectively get 2.5x the bandwidth of 10GbE with the same port count, along with lower latency, from a single slot, which is a big improvement. Slots cost money in servers, so maximizing their utilization is an important TCO factor in some applications.
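
The slot arithmetic here can be checked with a quick back-of-the-envelope calculation. The sketch below assumes the PCIe 3.0 rate of 8 GT/s per lane with 128b/130b encoding, ignores packet-level protocol overhead, and reads the 2.5x figure as a comparison against 10GbE with the same port count. The function names are ours, purely for illustration.

```python
# Rough PCIe 3.0 slot math. Assumption: only line encoding is counted,
# so real-world usable bandwidth is somewhat lower (TLP/DLLP overhead).
PCIE3_GT_PER_LANE = 8.0      # PCIe 3.0 signaling rate, GT/s per lane
PCIE3_ENCODING = 128 / 130   # 128b/130b line encoding efficiency


def pcie3_gbps(lanes: int) -> float:
    """Per-direction PCIe 3.0 bandwidth in Gbps after line encoding."""
    return PCIE3_GT_PER_LANE * lanes * PCIE3_ENCODING


def slot_utilization(ports: int, port_gbps: float, lanes: int = 8) -> float:
    """Fraction of the slot's bandwidth the NIC ports can fill at line rate."""
    return ports * port_gbps / pcie3_gbps(lanes)


if __name__ == "__main__":
    print(f"PCIe 3.0 x8: {pcie3_gbps(8):.1f} Gbps per direction")
    # (label, port count, per-port speed in Gbps)
    cards = [
        ("dual-port 10GbE", 2, 10.0),
        ("single-port 40GbE", 1, 40.0),
        ("dual-port 25GbE", 2, 25.0),
    ]
    for label, ports, speed in cards:
        total = ports * speed
        util = slot_utilization(ports, speed)
        print(f"{label}: {total:.0f} Gbps total, {util:.0%} of the slot")
```

Under these assumptions a PCIe 3.0 x8 slot offers roughly 63 Gbps per direction, so a dual-port 25GbE card can fill about 79% of it while a single-port 40GbE card fills about 63%.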

Final Words

In our 100GbE networking, we are running Mellanox ConnectX-4 and ConnectX-5 exclusively as of this article’s publication date. Our 25GbE network has a handful of OCP mezzanine 25GbE cards as well as a few PCIe cards based on Broadcom NICs. We have a few Intel cards. The cards we are buying for the lab are these Mellanox ConnectX-4 Lx dual 25GbE cards as they are supported by just about every vendor from SMB NAS vendors to the large server vendors. If you are in the market to upgrade to 25GbE infrastructure, we think you should at least evaluate Mellanox ConnectX-4 Lx as they have become extremely popular and well supported in the industry.

Yeah we use these everywhere. We’ve got several thousand deployed and they just work for us.

We went with these during our upgrade and have been very pleased with the performance and they just work. For VMware 6.7 we didn’t have to install any extra drivers as they were already in ESXi.

Yes! But are there any switches suitable for the homelab to plug these guys into? Might be a lot cheaper to build a 4-8 port switch with pfsense using 2-4 of these cards.

“… are there any switches suitable for the homelab to plug these guys into …” It’s a bit of an issue. :)

They also work in direct attach mode if you want to get really homelab

Do these come with heatsinks or did you source them separately?

I know this is a very old article as of today, but I have a couple of these cards installed in my home made workstation and a home server. They take a long time to connect the first time the machines are booted. Basically the network connection flaps for 1 to 2 minutes before stabilizing. Lots of errors about the flapping in the event logs. After the connection stabilizes, they work perfectly “until” the next time the machines either are shut down, or go to sleep for a long time. It is annoying.

I have been troubleshooting this a lot, tried different firmware, drivers, etc. Nothing helps. I have also switched to Dell branded cables that are “certified to work” with this card for connecting to my 10Gb switch from Qnap. Maybe the Qnap is the problem, IDK :-(