The STH DemoEval lab is in the process of transitioning from 10/40GbE to 25/50/100GbE. In this process, all new servers outside of embedded products have been equipped with new adapters. Since we have many GPU systems and use clustered storage, transitioning to the higher signaling rate of 25/50/100GbE tends to be a better upgrade than moving a machine from 10GbE to 40GbE. In our lab, all of our machines running 100GbE are using Mellanox ConnectX-4 or ConnectX-5. Patrick asked me to do a mini-review of the Mellanox ConnectX-5 VPI 100GbE and EDR (100Gbps) InfiniBand adapters.

Mellanox ConnectX-5 Hardware Overview

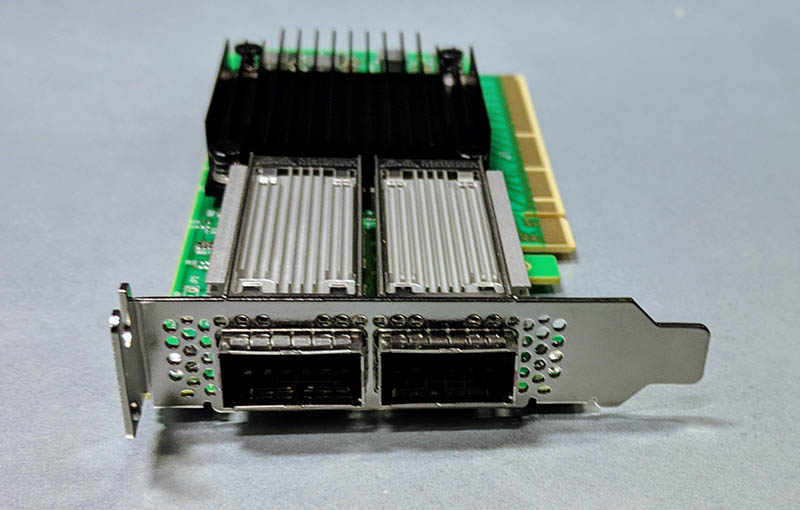

In our review, we are using the Mellanox ConnectX-5 VPI dual-port InfiniBand or Ethernet card. Specifically, we have a model called the Mellanox MCX556A-EDAT or CX556A for short. The first 5 in the model number denotes ConnectX-5, the 6 in the model number shows dual port, and the D denotes PCIe 4.0.

This is a PCIe 4.0 x16 device, and the Gen4 link is what lets it sustain 100Gbps on both ports. PCIe 3.0 x16 tops out at roughly 126Gbps of host bandwidth, which is enough for one port at 100Gbps but not for both ports simultaneously. Many servers only have PCIe 3.0 x8 slots, especially older generations. With Skylake-SP, AMD EPYC, and Cavium ThunderX2 servers, x16 slots are more common. Some non-x86 platforms, such as the Arm-based Huawei Kunpeng 920 and IBM POWER9, already support PCIe Gen4. In the x86 segment, we will first see AMD EPYC “Rome” generation launch with PCIe Gen4 support in mid-2019. In today’s x86 servers you can use a PCIe 4.0 device in a PCIe 3.0 slot at around half the host bandwidth, or you can get the MCX556A-ECAT, which is a Gen3 part. Our advice is to get the -EDAT version if possible as the price difference is generally not too large.
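For a rough sense of the slot math, here is a minimal Python sketch. It assumes the standard per-lane rates of 8 GT/s for PCIe 3.0 and 16 GT/s for PCIe 4.0 with 128b/130b encoding, and it ignores packet-level protocol overhead, so real throughput will be somewhat lower. The function name `pcie_gbps` is ours, purely for illustration.

```python
# Rough PCIe host-bandwidth estimate for one direction of a link.
# Ignores TLP/DLLP protocol overhead, so achievable throughput is lower.
GT_PER_LANE = {"3.0": 8.0, "4.0": 16.0}  # giga-transfers per second per lane
ENCODING = 128 / 130  # 128b/130b line encoding used by PCIe 3.0 and 4.0


def pcie_gbps(gen: str, lanes: int) -> float:
    """Return the post-encoding line rate in Gbps for a PCIe link."""
    return GT_PER_LANE[gen] * ENCODING * lanes


if __name__ == "__main__":
    need = 2 * 100  # both ports running at 100Gbps
    for gen, lanes in [("3.0", 8), ("3.0", 16), ("4.0", 16)]:
        bw = pcie_gbps(gen, lanes)
        verdict = "enough" if bw >= need else "not enough"
        print(f"PCIe {gen} x{lanes}: {bw:.1f} Gbps, {verdict} for 2x100Gbps")
```

Under these assumptions, only the PCIe 4.0 x16 link clears the 200Gbps needed to feed both ports at once.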

The dual 100Gbps ports are QSFP28 ports and one can run them at FDR/EDR InfiniBand speeds or 25/50/100GbE speeds. As a note, you will need upgraded optics or direct attach cabling compared with the similar-looking QSFP+ 40GbE / 56Gbps FDR InfiniBand generation. The QSFP28 direct attach cables are significantly thicker with better shielding. That impacts bend radius and bundling at the time of installation, but it is still very manageable. As we move to faster speeds, especially around 400Gbps, DACs will face a new challenge, but that is some time in the future.
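The reason older cabling does not carry over is the per-lane signaling rate. As a small sketch, assuming the nominal per-lane data rates for each generation and four lanes per QSFP-style port (the names below are ours, for illustration only):

```python
# Nominal per-lane data rates in Gbps. QSFP+ and QSFP28 ports both carry
# four lanes, so the jump in port speed comes from faster lanes.
LANES = 4
PER_LANE_GBPS = {
    "40GbE (QSFP+)": 10,
    "FDR InfiniBand (QSFP+)": 14,
    "100GbE (QSFP28)": 25,
    "EDR InfiniBand (QSFP28)": 25,
}


def port_gbps(link: str) -> int:
    """Return the nominal port speed in Gbps for a four-lane link type."""
    return LANES * PER_LANE_GBPS[link]


if __name__ == "__main__":
    for link in PER_LANE_GBPS:
        print(f"{link}: {LANES} x {PER_LANE_GBPS[link]} = {port_gbps(link)} Gbps")
```

Cables and optics built for 10 to 14Gbps lanes are not rated for 25Gbps lanes, which is why the connector looks the same but the parts are not interchangeable.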

The Mellanox ConnectX-5 hardware is generally similar from SKU to SKU with the biggest differences coming from firmware and port counts. Mellanox has maintained a similar form factor and port placement. For example, we needed a full-height bracket for one of our ConnectX-5 cards and we were able to use one from a ConnectX-4 card.

What really matters, and frankly the killer feature of the cards, is their flexibility. If you want InfiniBand and Ethernet, especially if you need a card to have one port of each, Mellanox is the only provider out there. There are very few instances where we can say that there are applications with only one solution, and this is one of those instances. You buy these VPI cards because you need, or may need, both, and they are your only option.

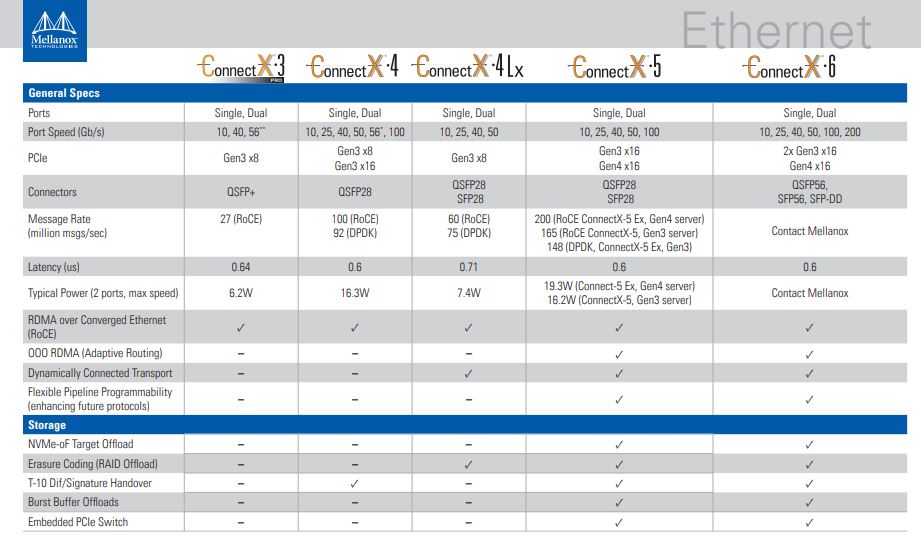

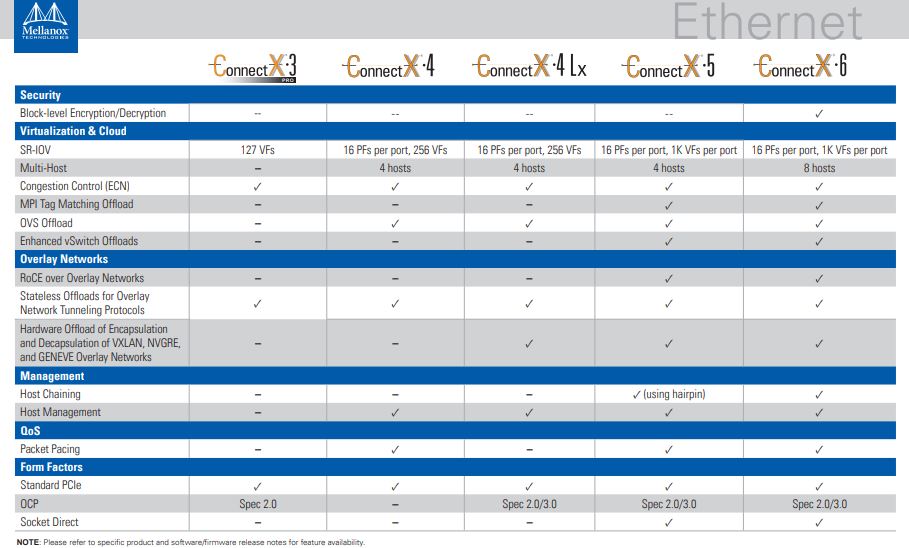

Mellanox ConnectX-5 Versus ConnectX-4 and ConnectX-6

From a feature perspective, Mellanox is a major supporter of RDMA functions for InfiniBand and Ethernet, including RoCE on the Ethernet side. SR-IOV is present. One way to think about the generational performance of the ConnectX-4 and ConnectX-5 is that the ConnectX-4 was the first 100Gbps product generation. Mellanox ConnectX-5 is the second generation with more performance. It adds features such as PCIe Gen4; MPI, SHMEM/PGAS, and Rendezvous Tag Matching offloads; hardware support for out-of-order RDMA Write and Read operations; and additional Network Atomic and PCIe Atomic operations support, among other benefits.

If you were to compare ConnectX-5 to ConnectX-4, this is a “Tock” in the old Tick Tock colloquial release cadence where a Tock is an architectural refinement generation. ConnectX-6 then is the next Tick with 200Gbps speeds.

Mellanox has a handy chart on the Ethernet side that shows the generational comparison:

Here is the second part:

You can find the source document here. The feature list on these is quite large. For example, CAPI support is present on ConnectX-5 which is another improvement. We highly suggest that if you want a feature, check the adapter specs you are buying for the specific model.

Mellanox’s Killer VPI Feature

Although we primarily use cards for 100GbE, there are times when we set up demos for clients using InfiniBand. Since we have 100GbE and Omni-Path (OPA100) switches in the lab, yet no InfiniBand EDR or HDR switches, we are quite limited in our topologies. Luckily, these tend to be two or three node solutions so we can use direct attach for setup. Aside from cabling, that requires that we change ports from Ethernet to InfiniBand on our cards.

VPI, short for Virtual Protocol Interconnect, is Mellanox’s killer feature. If you buy a NIC from other vendors, you are likely locked into Ethernet only. If you get Intel Omni-Path, you are locked into OPA. With Mellanox VPI, a hardware port can run either Ethernet or InfiniBand.

This practically means that you can run either protocol on a single NIC. Perhaps you have a GPU cluster that has both a 100GbE network and an InfiniBand network that the nodes need to access. With Mellanox VPI adapters one can service both needs using the same cards. Perhaps you start with 100GbE, then get an InfiniBand switch and want to use GPUDirect RDMA over IB. With Mellanox ConnectX-5 VPI cards, that change does not require opening each node. One can set the protocol type on each port independently.

Changing Mellanox VPI Ports from Ethernet to InfiniBand

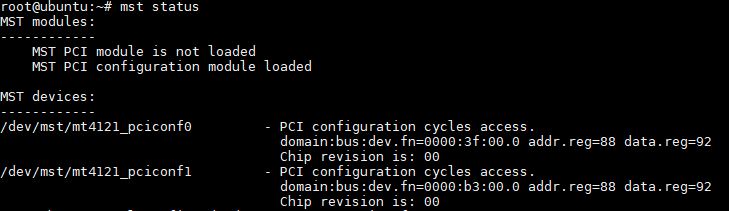

Swapping from InfiniBand to Ethernet or back on a Mellanox ConnectX-5 VPI card is really simple. There are tools to help you do this, but we have a simple three-step process in the lab. First, we see what devices are installed.

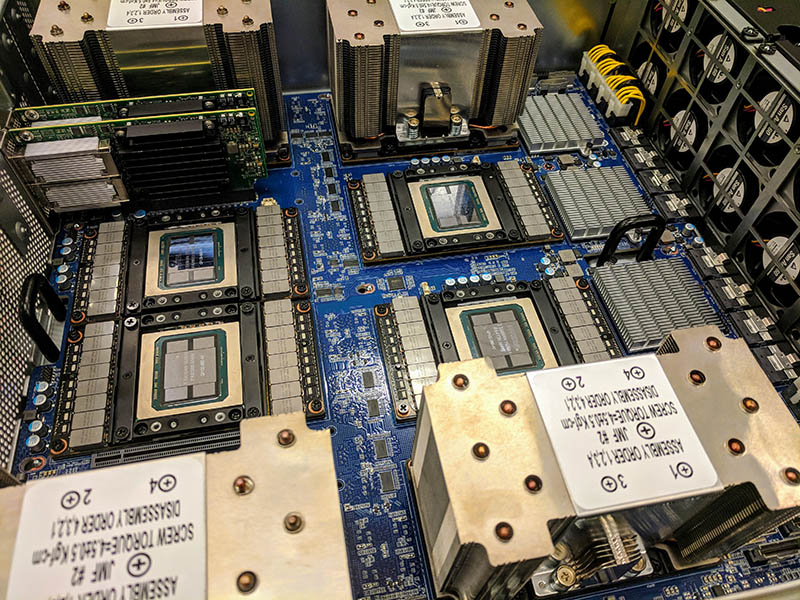

In this case, we have two Mellanox ConnectX-5 VPI cards (CX556A’s) installed in the GPU server.

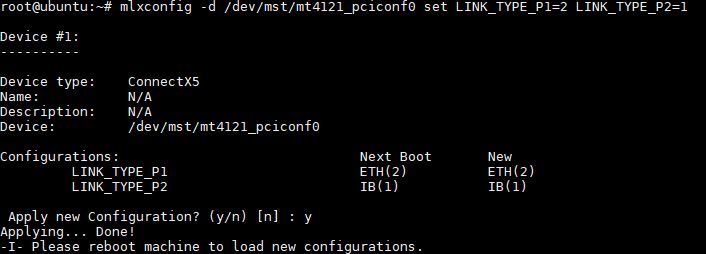

We can then select the port configuration. Here, 1 is for InfiniBand and 2 is for Ethernet.

The third step is to reboot. That is it. Here is a video with this in action:

We may pull this section out to be its own piece for others to reference later.
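For readers without the screenshots, the three steps above can be sketched in Python as a thin wrapper around the Mellanox Firmware Tools. This assumes `mst` and `mlxconfig` are installed and that the `mst` service has already been started. The device path is hypothetical and should be copied from your own `mst status` output, and the helper names are ours. The script only prints the commands unless `dry_run` is set to `False`.

```python
import subprocess

# Values as described in the text: 1 selects InfiniBand, 2 selects Ethernet.
LINK_TYPE = {"ib": 1, "eth": 2}


def build_set_link_type(device: str, port1: str, port2: str) -> list[str]:
    """Build an mlxconfig command that sets the protocol of both ports."""
    return [
        "mlxconfig", "-d", device, "set",
        f"LINK_TYPE_P1={LINK_TYPE[port1]}",
        f"LINK_TYPE_P2={LINK_TYPE[port2]}",
    ]


def apply(cmd: list[str], dry_run: bool = True) -> None:
    """Print the command, and run it only when dry_run is False."""
    print(" ".join(cmd))
    if not dry_run:
        subprocess.run(cmd, check=True)


if __name__ == "__main__":
    # Step 1: list the installed devices.
    apply(["mst", "status"])
    # Step 2: set port 1 to InfiniBand and port 2 to Ethernet.
    # Hypothetical device path; use the one reported by `mst status`.
    dev = "/dev/mst/mt4119_pciconf0"
    apply(build_set_link_type(dev, port1="ib", port2="eth"))
    # Step 3: reboot the server so the new port types take effect.
```

Because each port has its own `LINK_TYPE_P1` or `LINK_TYPE_P2` setting, one card can run one port of each protocol, which is the mixed setup described earlier. When run for real, `mlxconfig` may ask for confirmation before writing the change.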

GPUDirect RDMA

For deep learning and AI clusters, Mellanox has a sizable advantage. Mellanox PeerDirect, in conjunction with NVIDIA GPUDirect RDMA, gives adapters direct peer-to-peer access to GPU memory without involving the CPU.

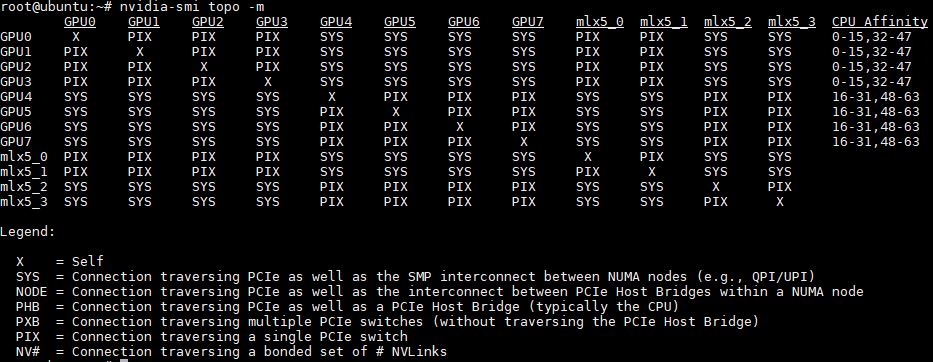

This particular server has two Mellanox ConnectX-5 VPI cards installed. It also has Intel 10GbE and 1GbE cards installed. As you can see, nvidia-smi topology only shows the ConnectX-5 cards.

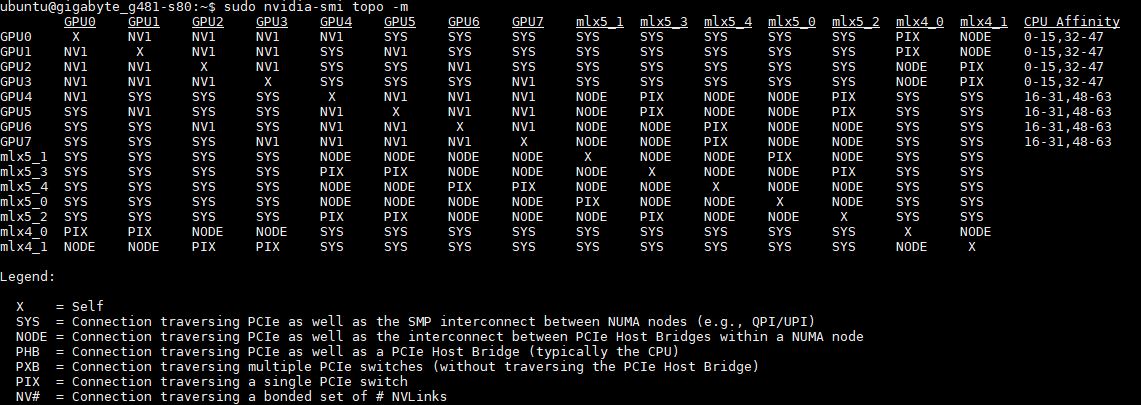

This is a feature Mellanox has had for generations. Here is an example using Mellanox ConnectX-4/5 and Broadcom 25GbE OCP adapters.

The important feature here is that the non-Mellanox adapters are not even showing up in the topology map while Mellanox ConnectX cards are.
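If you want to check this on your own system, here is a small Python sketch that runs `nvidia-smi topo -m` and lists the Mellanox devices that appear in the output. It assumes the ConnectX cards show up under their `mlx5_N` driver names, which is how ConnectX-4 and ConnectX-5 devices are typically named on Linux. The function name is ours.

```python
import re
import shutil
import subprocess


def rdma_devices_in_topology(topo_text: str) -> list[str]:
    """Return the mlx5_N device names found in `nvidia-smi topo -m` output."""
    return sorted(set(re.findall(r"\bmlx5_\d+\b", topo_text)))


if __name__ == "__main__":
    if shutil.which("nvidia-smi") is None:
        print("nvidia-smi not found on this system")
    else:
        out = subprocess.run(
            ["nvidia-smi", "topo", "-m"],
            capture_output=True, text=True, check=True,
        ).stdout
        print(rdma_devices_in_topology(out))
```

An empty list on a server that has other NICs installed is consistent with what the screenshots show: only the Mellanox adapters are listed alongside the GPUs.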

Mellanox ConnectX-5 Performance

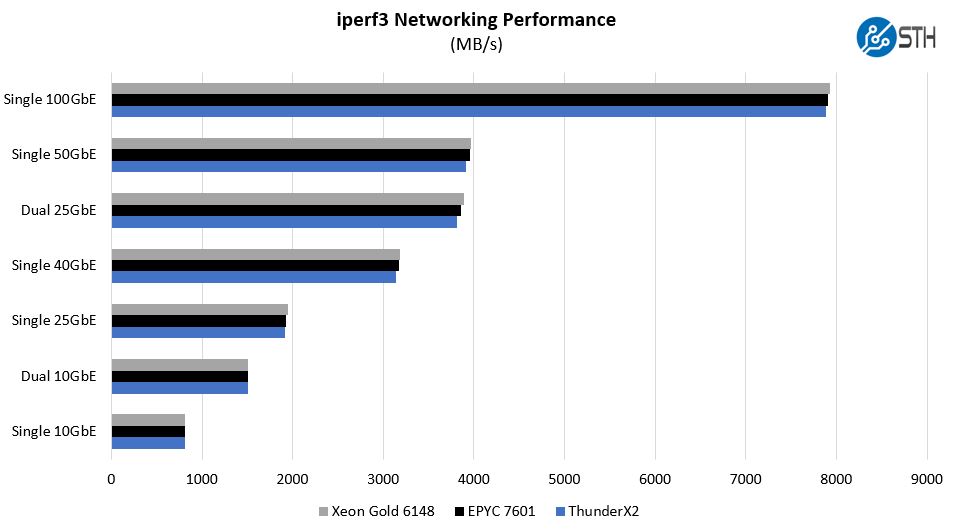

We are a heavy Ethernet shop. We actually used the Mellanox ConnectX-5 cards in some of our reviews and even compared them across Intel Xeon “Skylake-SP”, AMD EPYC “Naples”, and Cavium ThunderX2 platforms.

We saw slightly better performance from the Intel Xeon Gold CPUs than the Naples generation AMD EPYC or the Marvell/ Cavium ThunderX2 chips. Overall, if you want 100GbE it is still an enormous performance upgrade over previous generation networking.

Final Words

This is one of those products where the decision to buy hinges on a meta feature rather than a vendor-to-vendor comparison. Our example of using one port for EDR InfiniBand and one for 100GbE is the reason to buy a Mellanox ConnectX-5 over any other adapter. If you want InfiniBand, Mellanox is essentially the only player in town. Intel Omni-Path does not currently have this Ethernet option. Ethernet NIC providers such as Broadcom do not have InfiniBand. If you do not need InfiniBand, and instead want to run in Ethernet mode, the ConnectX-5 is a high-end 100GbE NIC that can support PCIe Gen4, and that many large scale infrastructure providers use. In the deep learning and AI segments, Mellanox has become the de-facto standard. That has resulted in every one of our 100GbE capable NICs in the lab being either ConnectX-4 or ConnectX-5. With the 40GbE generation, we used NICs from other vendors but Mellanox has an aggressive focus on performance with the Mellanox ConnectX-5 generation.

As a product that we use every day and with the unique VPI feature supporting both InfiniBand and Ethernet, we are giving the Mellanox MCX556A-EDAT our seldom given STH Editor’s Choice award. On the server side, there are a lot of nuances to which system can be better, but Mellanox has a product in a category by itself in the CX556A.

There’s probably a high overlap of Mellanox users and STH readers.

Mellanox did a great job with the CX5’s. We’ve seen them use more power but they’ve got great offload capabilities that Intel and Broadcom aren’t really matching now. You could have gone into it more in the review, but you’re probably right. They’ve now got so much going on that you need to check the spec sheet to see what’s offloaded.

Excellent

You know that you can actually get a used 36-port Mellanox managed 4x EDR IB 100 Gbps switch for ~$3500 now on eBay.

I literally just picked mine up yesterday.

It’s awesome!

Also, to run IPoIB, do you just use ifconfig to give the IB port an IP address?

Thanks.

I only recently acquired my ConnectX-4 dual-port cards within the past month and a half or so ago, so I am still in the process of getting it all set up and working properly.

I have four compute nodes and as mentioned in the article, when you have two or three nodes, you can create two link pairs to a central node (but the boundary nodes won’t be able to communicate with each other directly), so using a direct attached cable works for that.

But it became obvious and apparent that I need a switch so that any one of the four nodes can talk to any other node (6 possible communication combinations when given four nodes, choose two) – and that’s a bit of a bummer because that means that I would hardly be using the switch’s switching capacity. Oh well.

You had me at “PCIe 4.0 x16”

Are you aware of any other 100Gbps Ethernet cards? Even without the need for IB, I haven’t been able to find any 100GE cards other than the Mellanox. They also have a way of combining pcie 3.0 x16 slots to make up for the pcie 3.0 bandwidth bottleneck while waiting on pcie 4.0 motherboards. This requires special versions of their cards though.

Joe they’ve gotta be out there right? I found this on the first page of search results https://www.servethehome.com/new-cavium-fastlinq-45000-25gbe-100gbe-adapters-rdma-dpdk-support/

Thanks for that link, Brian. Interesting read with the reference to HP, Mirantis and OpenStack. Further research points to Marvell now. Their 2x40Gbps is interesting as it is an x16 vs Intel’s xl710da2, which is 2x40 but using an x8. The x8 slot becomes a bottleneck at approx. 63Gbps for the 2x40 NIC, whereas the x16 slot can handle a throughput of approx. 126Gbps. That is one of the reasons why PCIe 4.0 is important for the 2x100G NICs. Mellanox even has 2x200G NICs with their ConnectX-6 cards.

Unfortunately, I can’t seem to find any of these cards!

@joe paolicell

Chelsio has their T6 line of 25/100G NICs. They are a bit cheaper than Mellanox and have more/better offload features that are targeted at the general enterprise rather than the HPC side of networking.

Cavium(Used to own Qlogic, and Net Extreme) has their FastLinq 45000 series, but I have not heard of anyone using them.