Inspur Systems NF5468M5 Test Configuration

Our Inspur Systems NF5468M5 test configuration was robust and similar to what we expect to see at many hyperscalers and cloud service providers using this type of system:

- System: Inspur Systems NF5468M5

- CPU: 2x Intel Xeon Gold 6130

- GPU: 8x NVIDIA Tesla V100 32GB PCIe

- RAM: 768GB (24x 32GB) DDR4-2666 at 2666MHz

- OS SSD: Intel DC S3520 240GB

- NVMe SSD: 4x Intel DC P4600 3.2TB

- RAID SATA SSD: 8x Intel DC S4500 960GB

- RAID Controller: Broadcom (LSI) 9460-8i

- 1GbE NIC: Intel i350-AM4

- 10GbE NIC: Intel X722 Onboard Dual SFP+ 10GbE

- 25GbE NIC: Mellanox ConnectX-4 Lx dual-port 25GbE

- 100GbE NIC: Mellanox ConnectX-5 VPI dual-port 100Gbps EDR InfiniBand and 100GbE

We swapped 25GbE and 100GbE NICs as well as some of the CPUs in the system to customize the solution a bit for our lab’s needs. In a system like this, the GPU costs tend to dominate the overall configuration.

Overall, with fast networking, plenty of memory, and plenty of local NVMe storage, these are high-end systems. They are also a step beyond previous-generation Intel Xeon E5 V4-based systems because they can dedicate additional PCIe lanes to NVMe storage. This is an area where the newer Intel Xeon Scalable platform, with 48 PCIe lanes per CPU instead of 40, scales better than its predecessor.

Inspur NF5468M5 Topology

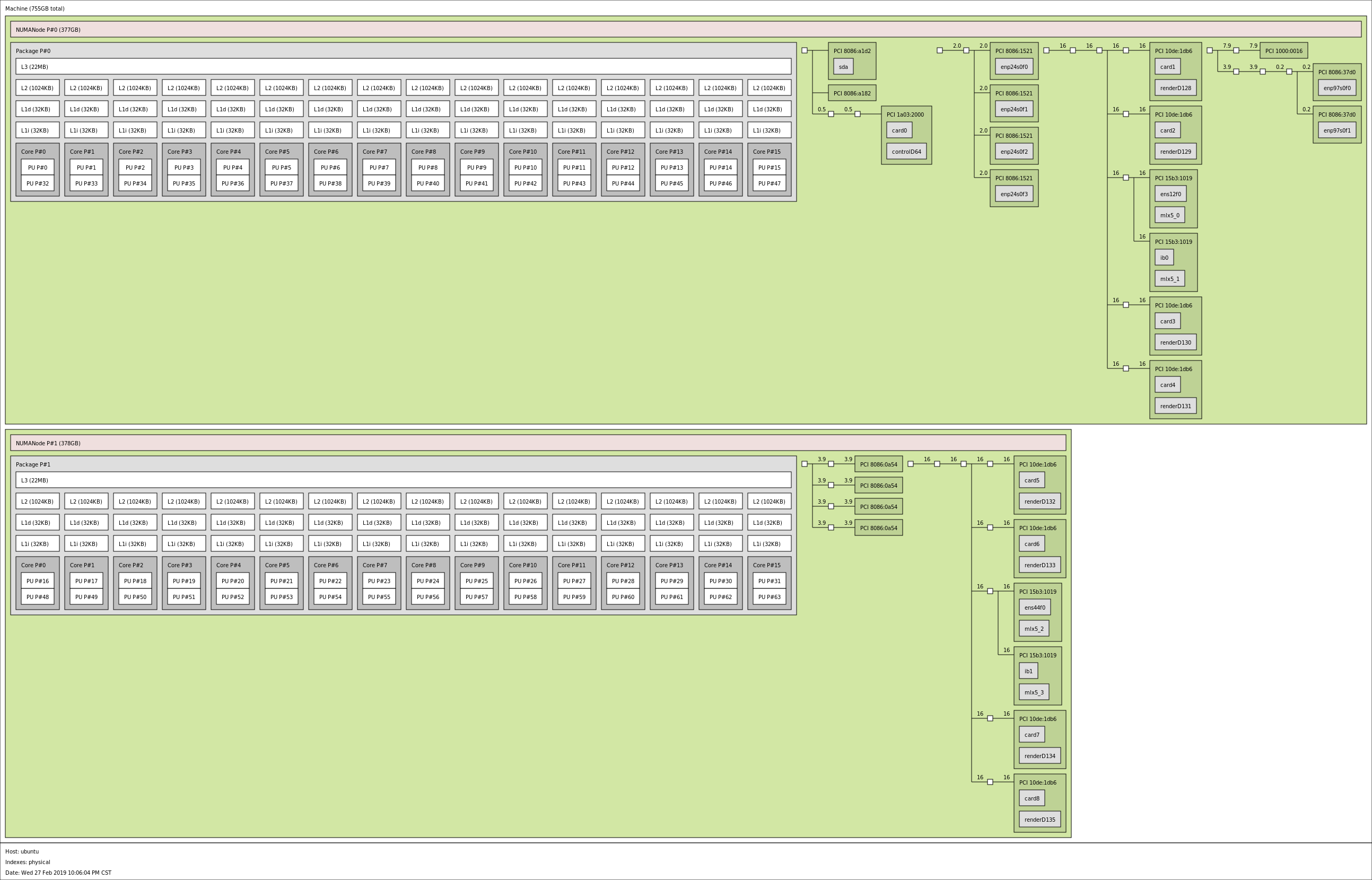

Topology in modern servers is a big deal. It dictates performance, especially when I/O is taxed by heavily NUMA-sensitive workloads. Here is the overall system topology of the Inspur NF5468M5:

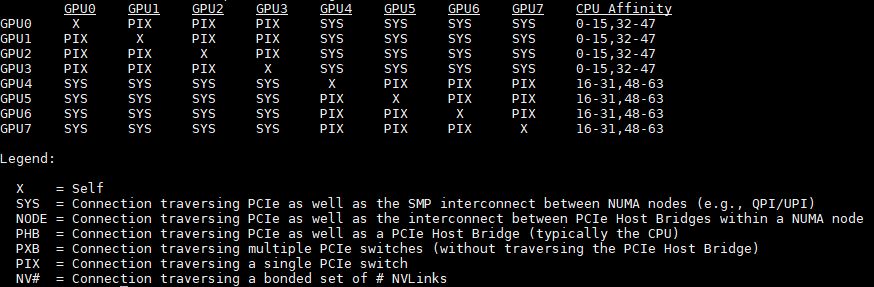

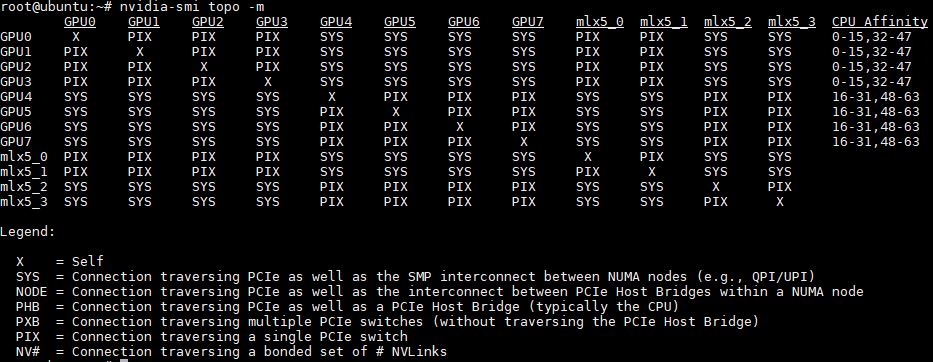

Adding in the GPUs, and excluding any Mellanox NICs capable of GPUDirect RDMA, here is the system topology from nvidia-smi:

This is a dual root system. You can see that GPU0 – GPU3 are on one PCIe switch off of the first CPU. GPU4 – GPU7 connect to a different PCIe switch on the second CPU.
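For readers who want to check this on their own hardware, here is a minimal Python sketch that parses the text matrix printed by `nvidia-smi topo -m` and groups GPUs by which side of the CPU-to-CPU link they sit on. The `SAMPLE` matrix is a made-up four-GPU illustration, not the output captured for this review, and the helper names (`parse_topo`, `groups_by_root`, `read_live_topo`) are our own. It assumes the GPU columns come first in each row and treats any link other than `SYS` as "same root", which is a simplification.

```python
import subprocess

# Made-up 4-GPU example in the style of `nvidia-smi topo -m`.
# This is NOT the matrix from the reviewed system.
SAMPLE = """\
        GPU0    GPU1    GPU2    GPU3    CPU Affinity
GPU0     X      PIX     SYS     SYS     0-15
GPU1    PIX      X      SYS     SYS     0-15
GPU2    SYS     SYS      X      PIX     16-31
GPU3    SYS     SYS     PIX      X      16-31
"""


def read_live_topo():
    """Return the live matrix text; needs an NVIDIA driver installed."""
    return subprocess.run(
        ["nvidia-smi", "topo", "-m"],
        capture_output=True, text=True, check=True,
    ).stdout


def parse_topo(text):
    """Return (gpu_names, links) where links[(row, col)] is e.g. 'PIX'."""
    lines = [ln for ln in text.splitlines() if ln.strip()]
    # Keep only the GPU columns from the header row.
    header = [tok for tok in lines[0].split() if tok.startswith("GPU")]
    links = {}
    for ln in lines[1:]:
        toks = ln.split()
        if toks[0] not in header:
            continue  # skip NIC rows, the legend, and anything else
        for col, link in zip(header, toks[1:]):
            links[(toks[0], col)] = link
    return header, links


def groups_by_root(header, links):
    """Group GPUs that reach each other without a SYS (cross-socket) hop."""
    groups = []
    for gpu in header:
        for group in groups:
            if links[(gpu, group[0])] != "SYS":
                group.append(gpu)
                break
        else:
            groups.append([gpu])
    return groups


if __name__ == "__main__":
    names, link_map = parse_topo(SAMPLE)
    for root, members in enumerate(groups_by_root(names, link_map)):
        print(f"root {root}: {', '.join(members)}")
```

On a dual root system like the one described here, the grouping should come back as two sets of GPUs, one per CPU. Swap `SAMPLE` for `read_live_topo()` to run it against real hardware.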

These systems are designed to be used not just as single servers, but also in clusters. Mellanox has invested heavily in RDMA for both InfiniBand and Ethernet, which helps immensely with GPU-to-GPU communication. Here, we can see a common topology for this class of system.

One PCIe x16 slot for networking is attached to each PCIe switch. That allows GPUs to communicate over the network directly, without having to go through the CPU and the UPI link. As you scale out with PCIe-based GPU deep learning systems, this is a popular system topology.
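To make the point concrete, here is a small Python sketch of the placement logic a scheduler or launch script might apply: pair each GPU with the NIC on its own PCIe switch so that network traffic never has to cross UPI. The switch and NIC labels (`switch0`, `nic0`, and so on) are hypothetical names for illustration; only the layout itself, GPU0 to GPU3 on one switch, GPU4 to GPU7 on the other, and one networking slot per switch, comes from the topology described above.

```python
# Hypothetical labels; the layout mirrors the dual root topology above.
GPU_SWITCH = {f"GPU{i}": ("switch0" if i < 4 else "switch1") for i in range(8)}
NIC_SWITCH = {"nic0": "switch0", "nic1": "switch1"}


def local_nic(gpu):
    """Return the NIC that shares a PCIe switch with the given GPU."""
    switch = GPU_SWITCH[gpu]
    for nic, nic_switch in NIC_SWITCH.items():
        if nic_switch == switch:
            return nic
    raise LookupError(f"no NIC found on {switch}")


def crosses_upi(gpu, nic):
    """True if traffic between this GPU and NIC must hop between CPUs."""
    return GPU_SWITCH[gpu] != NIC_SWITCH[nic]


if __name__ == "__main__":
    for gpu in sorted(GPU_SWITCH):
        nic = local_nic(gpu)
        print(f"{gpu} -> {nic} (crosses UPI: {crosses_upi(gpu, nic)})")
```

In practice, the real device names and their placement would come from the system itself, for example from the `nvidia-smi topo -m` matrix, rather than from a hard-coded table.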

Next, we are going to take a look at the Inspur Systems NF5468M5 management interface before moving on to our performance section.

Y’all are doing some amazing reviews. Let us know when the server is translated on par with Dell.

How wonderful this product review is! So practical and justice!

Amazing. For us to consider Inspur in Europe, the English translation needs to be perfect, since we have people with 11 different first languages in IT. Our corporate standard, since we are international, is English. Since English isn’t my first language, I know why so early of that looks a little off. They need to hire you or someone to do that final read and editing, and then we would be able to consider them.

The system looks great. Do more of these reviews

Thanks for the review, would love to see a comparison with MI60 in a similar setup.

Great review! This looks like better hardware than the Supermicro GPU servers we use.

Can we see a review of the Asus ESC8000 as well? I have not found any other GPU compute designer that offers the choice in BIOS between single and dual root the way Asus does.

Hi Matthias – we have two ASUS platforms in the lab that are being reviewed, but not the ASUS ESC8000. I will ask.

How is the performance affected by CVE‑2019‑5665 through CVE‑2019‑5671 and CVE‑2018‑6260?

The P2P bandwidth test result is incorrect. The result above should be from an NVLink P100 GPU server, not a PCIe V100 one.