Recently I had the opportunity to do a brief Q&A interview with Robert Hormuth, Vice President and CTO, Dell Server and Infrastructure Systems. Dell EMC is the largest server vendor in the world in terms of both revenue and units shipped. The technical directions it takes in its products will shape the industry, which gives Mr. Hormuth’s predictions unusual weight. Personally, I think Dell Technologies has a fairly good idea of the technology we will use in 2019 and 2020, which makes these predictions well-informed.

Interview with Robert Hormuth, Vice President and CTO, Dell Server and Infrastructure Systems

I wanted to focus the Q&A in three main areas:

- Market trends – what are we going to see emerge in the near future that differs from the relatively homogeneous ecosystem of the past few years?

- Persistent memory – often referred to as storage class memory. We are only a short time away from the official launch, and at STH we have already torn down Intel’s Apache Pass green and blue Optane DC Persistent Memory modules. This is a fundamental technology change we will see starting in 2019.

- Next-generation interconnects – as some background here, I believe this is one of the most exciting areas coming to the server and infrastructure space. I also know that Robert is a Gen-Z promoter, so I wanted to get his take.

Grab a cup of coffee, and see what the CTO of the world’s largest server provider thinks is coming next in our industry.

Market Trends

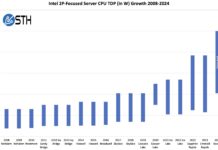

Patrick Kennedy: In your 2018 trends article, you highlighted that 2018 would be the “Rise of the Single Socket Server.” How is that observation playing out into 2019?

Robert Hormuth: In our discussions with customers and analysts, many agree that today’s single-socket servers can support a wide variety of common workloads and are a very efficient way to manage some software licensing models. Single-socket servers also spread power density more evenly across the data center, which can reduce hot spots. Additionally, when the industry gets to a single NUMA domain within a socket, the I/O NUMA performance degradation seen in two-socket (2S) systems can be eliminated. With AMD EPYC, Dell EMC was able to make some significant innovations in terms of direct NVMe drive support. Two of our three AMD EPYC-based PowerEdge servers are one-socket optimized for a reason.

PK: How will the edge market evolve in the next 2-3 years as AI inferencing becomes an integral part of employee-facing and customer-facing applications?

RH: AI plays a key role in edge computing, and we will see this market evolve as software on the edge uses AI algorithms. Inferencing will occur close to the edge, while model training will happen further up the compute hierarchy, in an edge cloud, a centralized data center or a public cloud.

A new class of AI inferencing silicon is emerging that will deliver inferencing at much lower power and much higher performance than today’s GPUs and FPGAs. There is a lot of industry effort underway on this by established silicon players and new startups. This will result in inferencing in end-point devices, edge gateways and edge servers. We will also see a new class of ruggedized server hardware emerge for edge deployments with embedded AI silicon.

This distributed AI architecture with inferencing and training at multiple locations will lead to the evolution of frameworks to orchestrate data collection, data synthesis, training of AI models and use of models for inferencing. Organizations will also better understand that the decision-making using AI inferencing is only as good as the trained model. This offers potential for data marketplaces where people with valuable data can offer this data to others for model training and be able to monetize it.

PK: There has been a lot of movement in the industry toward 2U 4-node, like the PowerEdge C6420, and other modular chassis. Where is that trend headed over the next two to three years?

RH: A four-node chassis in 2U of space continues to offer a good balance between density and storage. This trend is not going away. Looking into the future, as some installations require higher-end, higher-power CPU stacks, liquid cooling could become more common. Looking longer term, if CPU socket sizes and the number of DDRx channels continue to grow, they could make form factors like 4N2U impossible with a reasonable feature set. For example, Dell EMC created a very dense solution with the FC430 (eight 2-socket servers in 2U) for our FX product family, but eventually the laws of physics caught up: the CPU sockets became wider than the board, and we could no longer continue that product family.

PK: As you look to next-generation servers and components, are there specific design challenges that Dell EMC is trying to tackle? The PowerEdge MX is clearly built for a longer lifecycle and needs beyond what we have in today’s server architectures. (Signal integrity, power, and heat as examples.)

RH: The physics of server design are pushing many challenges, such as CPU socket size, CPU power and the number of DDR channels. Add NVMe drives and GPUs, and you have real power and cooling challenges. Signaling rates jumping from 8 to 16 to 32 GT/s on PCIe create even more challenges from sheer physics. Dell EMC PowerEdge MX has taken all of these factors into consideration to ensure a future-proof design. PowerEdge MX was designed and architected with the future in mind: the first and only “no mid-plane” design, together with better airflow, enables the MX to adopt the latest fabrics, I/O and CPUs as they arrive. These architectural choices enable the MX to become a truly kinetic solution in the future, breaking the normal bounds of modular infrastructures.
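To put the 8, 16 and 32 GT/s figures in perspective, here is a minimal Python sketch that converts raw PCIe transfer rates into approximate usable bandwidth. It assumes 128b/130b line encoding (used by PCIe 3.0, 4.0 and 5.0) and deliberately ignores packet and protocol overhead, so real-world throughput will be somewhat lower. The function names are illustrative, not part of any library.

```python
# Approximate PCIe bandwidth per direction, before TLP/DLLP protocol overhead.
# Assumption: 128b/130b encoding, which PCIe 3.0 through 5.0 all use.
GENERATIONS = {
    "PCIe 3.0": 8.0,   # GT/s per lane
    "PCIe 4.0": 16.0,
    "PCIe 5.0": 32.0,
}
ENCODING_EFFICIENCY = 128 / 130


def lane_bandwidth_gb_s(transfer_rate_gt_s: float) -> float:
    """Usable GB/s (1 GB = 1e9 bytes) for one lane in one direction."""
    # Each transfer moves one bit per lane; divide by 8 to get bytes.
    return transfer_rate_gt_s * ENCODING_EFFICIENCY / 8


def link_bandwidth_gb_s(transfer_rate_gt_s: float, lanes: int = 16) -> float:
    """Usable GB/s for a link of the given width in one direction."""
    return lane_bandwidth_gb_s(transfer_rate_gt_s) * lanes


if __name__ == "__main__":
    for name, rate in GENERATIONS.items():
        print(f"{name}: {lane_bandwidth_gb_s(rate):.3f} GB/s per lane, "
              f"{link_bandwidth_gb_s(rate):.2f} GB/s for x16")
```

Each generation doubles bandwidth by doubling the signaling rate on the same copper, which is exactly why the signal-integrity margin shrinks with every step.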

PK: What is the Dell EMC server and infrastructure team doing differently today in terms of hardware design as a result of the challenge and opportunity that the public clouds present your customers?

RH: We continue to stay focused on our customers. Cloud is a style of compute; the industry is seeing that “cloud” is not a location. There’s a pendulum shift away from the notion of moving everything to public clouds, with core workloads returning to or staying on premises or at the edge for better latency, more control, lower long-term costs, and competitive advantages. More and more, we’re seeing applications and data reside wherever it makes the most sense in a multi-cloud world that combines on-premises private, managed and public clouds in a way that works best for any given organization. Our focus is to continue to deliver the industry’s best infrastructure for the mainstream market while providing optimized infrastructure for new and emerging segments and workloads such as machine learning and the edge.

PK: If you were a customer embarking on a project to build a private cloud in a new colocation facility from scratch later this year, what would be the key considerations that you would have in 2019 that were not necessarily the same in 2014?

RH: I would keep a multi-cloud strategy in mind. Private clouds play the most critical role as the hub of a multi-cloud approach. Customers should look for software stacks, such as VMware Cloud Foundation, that enable a hybrid cloud model in which they can seamlessly manage private and public clouds.

Persistent Memory

PK: As we get closer to the Intel Optane DC Persistent Memory launch, are there applications beyond in-memory database and analytics that you see leading interest with this technology? As an end user, where do you see higher-capacity persistent memory technologies impacting the services we experience daily?

RH: Storage class memory (SCM) is an exciting technology, and we are bullish on its long-term adoption. There are two primary use cases: as a DRAM replacement and as persistent memory.

For DRAM replacement, it is very easy: it must achieve an economic value proposition in terms of performance vs. cost, or capacity vs. cost with enough performance. This should create an interesting dynamic that will give end users more choice.

For persistent mode, however, users must understand that persistent data does not equal trusted or safe data. Today, the control point for trust is between the application and storage. When storage acknowledges the data, the application can assume it is safe; that is the RAS (reliability, availability and serviceability) guarantee. As the control point shifts toward the memory tier with SCM, the data services that protect that data must be invented for this tier. SCM, by itself, does not provide data trust.

For example, if a server with SCM that houses credit card transactions goes down, that data is no longer available. SCM as persistent memory for metadata, caching, re-generable data sets, etc., will have high value at the beginning. But we have to address the RAS side for more use cases. Do you replicate the data outside the box? If so, did you just negate the intrinsic value of SCM? If you replicate (and wait for an acknowledgment) over Ethernet, you just added a large amount of latency to a sub-microsecond device. If you replicate the data to an external Gen-Z SCM array, for example, you can achieve RAS and stay in the load/store domain. These are the problems that must be addressed long-term to drive widespread adoption.
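The replication trade-off above is essentially latency arithmetic, and a tiny Python model makes it concrete. The numbers below are placeholders chosen only to show orders of magnitude (a sub-microsecond local SCM write, a network round trip in the tens of microseconds, a memory-fabric round trip near a microsecond); they are assumptions, not measured Optane, Ethernet or Gen-Z figures. The model also assumes the local and remote writes are issued concurrently, which is the optimistic case; issuing them one after the other would only make replication look worse.

```python
from dataclasses import dataclass


@dataclass
class WritePath:
    """One way of making a write durable. All latencies are hypothetical, in microseconds."""
    name: str
    local_write_us: float
    replica_round_trip_us: float = 0.0  # 0.0 means no synchronous replica

    def ack_latency_us(self) -> float:
        # Assumption: local and replica writes are issued concurrently,
        # so the application waits for whichever finishes last.
        return max(self.local_write_us, self.replica_round_trip_us)

    def slowdown_vs_local(self) -> float:
        """How many times slower the acknowledged write is than a local-only write."""
        return self.ack_latency_us() / self.local_write_us


# Placeholder values for illustration only, not measurements.
LOCAL_SCM_WRITE_US = 0.5
PATHS = [
    WritePath("local SCM only", LOCAL_SCM_WRITE_US),
    WritePath("replicate over Ethernet", LOCAL_SCM_WRITE_US, replica_round_trip_us=10.0),
    WritePath("replicate over a load/store memory fabric", LOCAL_SCM_WRITE_US,
              replica_round_trip_us=1.0),
]

if __name__ == "__main__":
    for path in PATHS:
        print(f"{path.name}: ack after {path.ack_latency_us():.1f} us "
              f"({path.slowdown_vs_local():.0f}x local)")
```

Whatever the exact figures, once the replica round trip is an order of magnitude larger than the local write, it sets the acknowledgment time, which is the sense in which replicating over Ethernet can "negate the intrinsic value of SCM."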

I am optimistic that the industry will invent new ways to take advantage of SCM with new techniques, new caching, replication, new algorithms and new fabrics like Gen-Z, all in an effort to bring data services to the memory tier.

SCM has a bright future and Dell EMC is investing to ensure we solve customer problems and keep their data safe.

Next-Generation Interconnects

PK: Looking at technologies like CCIX and Gen-Z, is this where we need to go as an industry? What are the current limitations driving these technologies?

RH: Adopting and expanding memory-centric interconnects is definitely a technology direction the industry needs to take. Faster and more capable technologies like Storage Class Memory (SCM) and accelerators like GPUs and FPGAs are driving the need to transfer data between these components and the CPU at much lower latencies – lower than one microsecond.

As an example, look at SCM. SCM promises to have much greater speed than NAND flash. Some SCM technologies can have access latencies that are orders of magnitude lower than NAND flash. At these speeds, if we have to access these technologies through a software stack, the overhead of the software would represent more latency than accessing the physical media. This would eliminate much of the benefit the technology brings to the table.

SCM needs to be accessed directly by the CPU as a memory device through load/store operations. Being a true memory fabric, Gen-Z also brings opportunities to extend sub-microsecond-latency technologies like SCM and accelerators outside a single chassis, opening up a number of interesting architectural possibilities.
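Python cannot issue raw CPU loads and stores, but the standard-library `mmap` module gives a rough feel for the load/store programming model: once a region is mapped, data is written and read with ordinary slicing rather than `read()`/`write()` calls through the I/O stack. This is a sketch under loud assumptions: an ordinary temporary file stands in for an SCM region on a DAX-capable filesystem, and `RECORD_SIZE` and `store_and_load` are hypothetical names for illustration.

```python
import mmap
import os
import tempfile

RECORD_SIZE = 64  # hypothetical fixed-size record, one 64-byte cache line


def store_and_load(path: str, num_records: int = 4) -> bytes:
    """Write record 2 and read it back through a memory mapping, not read()/write()."""
    size = RECORD_SIZE * num_records
    # Create a zero-filled backing file of the required size.
    with open(path, "wb") as f:
        f.truncate(size)
    with open(path, "r+b") as f, mmap.mmap(f.fileno(), size) as region:
        offset = 2 * RECORD_SIZE
        payload = b"txn-0042".ljust(RECORD_SIZE, b"\x00")
        # "Store": a plain slice assignment into mapped memory.
        region[offset:offset + RECORD_SIZE] = payload
        # Make the store durable. For an ordinary file this writes dirty pages back;
        # on real persistent memory, libraries such as PMDK use CPU cache-line
        # flush instructions for this step instead.
        region.flush()
        # "Load": a plain slice read from mapped memory.
        return bytes(region[offset:offset + 8])


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        print(store_and_load(os.path.join(tmp, "scm-stand-in.bin")))
```

The design point is that, after the one-time mapping, each access is a memory operation rather than a trip through the storage software stack, which is the overhead described above as potentially exceeding the media latency itself.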

PK: When does the shift to technologies like Gen-Z go from a CTO pet project to something that everyone in the industry will have to incorporate?

RH: There will be stepping stones the industry will take over the next decade to achieve the end goal of a true kinetic (fully composable and disaggregated) architecture, and Dell plans to create a smooth transition for customers by building products that take these milestones into account. With this in mind, the Dell EMC server team is focused on two main innovations with Gen-Z. The first is with rack servers and our uniquely designed PowerEdge MX, based on a kinetic architecture that’s designed to enable a fully composable and disaggregated system. Second, we’re looking at inside-the-server innovation, using Gen-Z to enhance the memory tier without adding CPU sockets.

Final Words

I wanted to first say thank you to Robert and the team at Dell that set this up. Everyone has busy schedules, and I appreciate that getting one of the industry’s most influential CTOs to answer questions is an enormous effort.

For our readers, Gen-Z is not officially supported in the PowerEdge MX (yet). However, if you check out our exclusive and in-depth first Dell EMC PowerEdge MX hands-on review, you will see that the product team at Dell EMC specifically designed the solution with this in mind for the not-too-distant future.

By the second half of 2019, we will be in a period of innovation that the server world has not seen in many years. Leaders in the industry like Robert Hormuth will be the ones who help set the course among an explosion of server architectures. Ultimately Dell EMC’s customers will benefit from this thought leadership as they navigate into a more diverse server ecosystem.
