A topic we will discuss in more detail over the next year is how servers are changing. Recently, we showed our Updated AMD EPYC and Intel Xeon Core Counts Over Time chart. In this article, we are going to look at the PCIe connectivity in platforms over the past decade.

PCIe Lanes and Bandwidth Increase Over a Decade for Intel Xeon and AMD EPYC

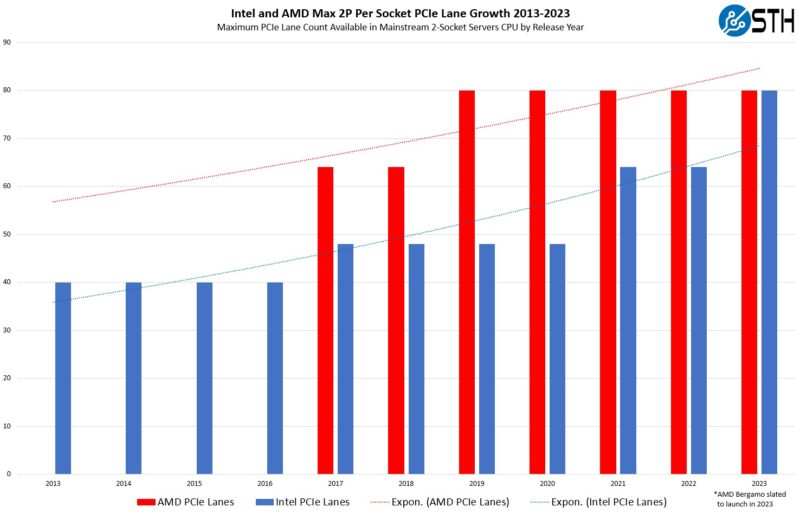

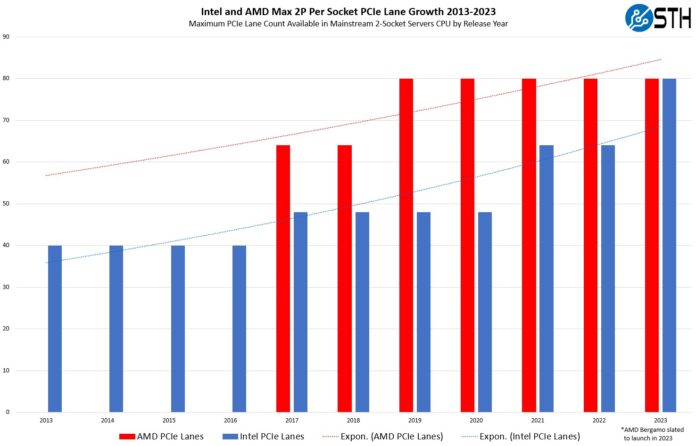

Here is a quick look at the 2013-2023 era of servers now that both the 4th Gen Intel Xeon Scalable Sapphire Rapids and the new AMD EPYC 9004 Genoa are out. (We expect AMD’s Bergamo and Genoa-X to use the same socket.) We are using only mainstream dual-socket parts here, since single-socket platforms (especially on the AMD side) and 4-socket platforms (on the Intel side) would show different numbers over this period.

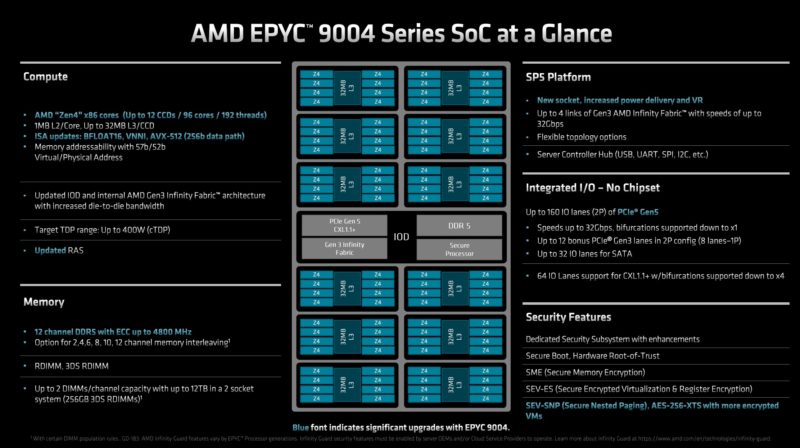

This look at per-socket PCIe lane counts shows that they have roughly doubled over the past 10 years. Before this period came the 2012-era Sandy Bridge launch, so 40x PCIe lanes per socket were the norm from 2012 to 2017. Then came a four-year period with Skylake/Cascade Lake, where 48 PCIe Gen3 lanes per socket were standard. On the AMD side, the company moved to the EPYC 7001 “Naples” in 2017, but that design had its PCIe roots on separate dies connected by Infinity Fabric. In 2019, we got the now-familiar I/O die design. One item folks often misquote (e.g., on our recent NVIDIA Grace piece) is the number of PCIe lanes available on the EPYC 7002/7003 (Rome/Milan) and EPYC 9004 platforms.
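As a rough sanity check, and assuming smooth exponential growth (which the real, step-wise lane counts only approximate), a doubling over 10 years corresponds to a growth rate of about 7% per year:

$$
\text{PCIeLanes}(t) = A\,e^{rt},\qquad \text{PCIeLanes}(10) = 2A \;\Rightarrow\; r = \frac{\ln 2}{10} \approx 0.069\ \text{yr}^{-1},\qquad e^{r} - 1 \approx 7.2\%\ \text{per year}
$$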

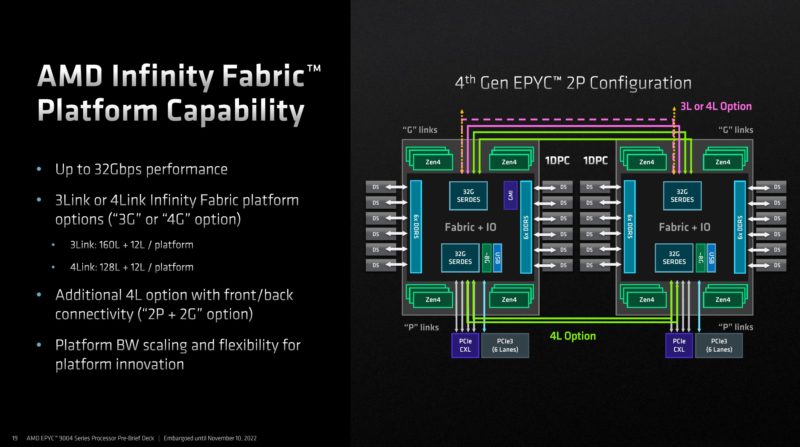

The newer EPYC platforms support 128x PCIe Gen4/Gen5 lanes in single-socket configurations. In dual-socket configurations, there are two options: 160x PCIe Gen4/Gen5 lanes with three xGMI links, or 128 lanes with four xGMI links. Most major server vendors at least offer these 160-lane configurations, since many customers have found that socket-to-socket bandwidth is sufficient with only three xGMI links.

There have also been demos of the 192-lane (96 per socket) platforms with only two xGMI links, but those have not been an AMD-supported configuration.
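The lane math behind these dual-socket options is simple if one assumes, as a simplification, that each EPYC socket has 128 high-speed lanes and that each xGMI socket-to-socket link consumes 16 of them. A minimal Python sketch under that assumption (the function and constant names are hypothetical):

```python
# Minimal sketch, assuming each socket exposes 128 high-speed lanes and that
# every xGMI socket-to-socket link consumes x16 of those lanes.
LANES_PER_SOCKET = 128
LANES_PER_XGMI_LINK = 16


def pcie_lanes_2p(xgmi_links: int) -> tuple[int, int]:
    """Return (PCIe lanes per socket, total PCIe lanes) in a 2P system."""
    # Reject link counts that would need more lanes than a socket has.
    if not 0 <= xgmi_links <= LANES_PER_SOCKET // LANES_PER_XGMI_LINK:
        raise ValueError("xgmi_links out of range")
    per_socket = LANES_PER_SOCKET - xgmi_links * LANES_PER_XGMI_LINK
    return per_socket, 2 * per_socket


for links in (4, 3, 2):
    per_socket, total = pcie_lanes_2p(links)
    print(f"{links} xGMI links: {per_socket} lanes/socket, {total} lanes total")
```

Under this model, four links leave 64 lanes per socket (128 total), three links leave 80 (160 total), and two links leave 96 (192 total), matching the configurations described above.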

We are also excluding extra lanes that do not run at top speed. For example, AMD “Genoa” has WAFL as well as up to 12x extra PCIe Gen3 lanes in dual-socket configurations for lower-speed devices such as BMCs and lower-speed NICs.

Intel also has chipset lanes that serve a similar function in many servers. We do not have an Intel C741 Emmitsburg diagram we are allowed to share, but those lower-speed lanes are available as well.

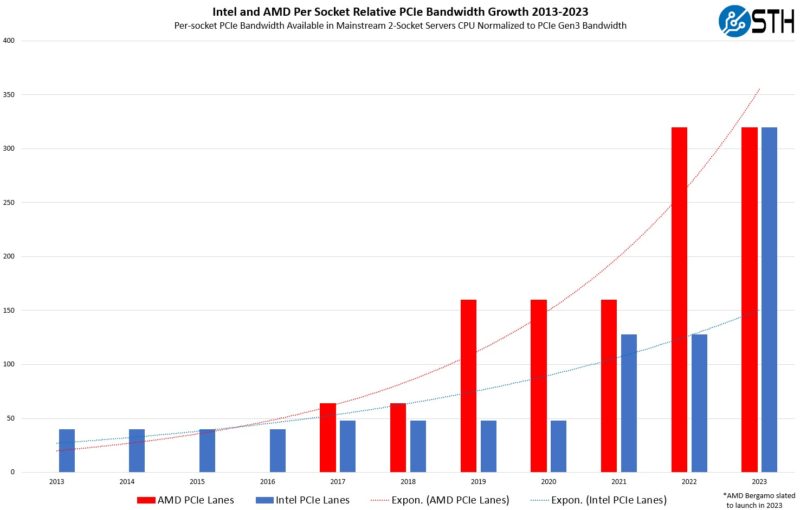

Lanes are important because lane count dictates how many devices can be connected to a system via PCIe. That is only part of the story, though. Over the last decade, we have transitioned from the PCIe Gen3 era to the PCIe Gen5 era.

Purists will note that this is only a rough order-of-magnitude chart, since each step from PCIe Gen3 to Gen4 to Gen5 may not exactly double usable bandwidth. Still, we wanted to show what has happened to maximum PCIe bandwidth, with each generation roughly doubling performance while newer chips also add lanes. As one can see, we are moving well above the historical pattern set before 2019/2020.
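For a rough sense of scale, here is a minimal sketch that multiplies lane count by an approximate per-lane rate. It assumes the standard PCIe signaling rates (8, 16, and 32 GT/s for Gen3, Gen4, and Gen5) and models only the 128b/130b line encoding, ignoring packet and protocol overhead, which is one reason the chart is only an order-of-magnitude view. The lane/generation pairs are the configurations discussed above; the helper names are hypothetical.

```python
# Rough per-direction PCIe bandwidth from signaling rate and line encoding.
# Assumption: only 128b/130b encoding overhead is modeled; TLP headers, DLLPs,
# and flow control are ignored, so real-world throughput will be lower.
GT_PER_S = {3: 8.0, 4: 16.0, 5: 32.0}  # per-lane transfer rate by PCIe generation
ENCODING = 128 / 130                    # Gen3, Gen4, and Gen5 all use 128b/130b


def lane_gbytes_per_s(gen: int) -> float:
    """Approximate GB/s per lane, per direction (one bit per transfer per lane)."""
    return GT_PER_S[gen] * ENCODING / 8  # 8 bits per byte


def socket_gbytes_per_s(lanes: int, gen: int) -> float:
    """Approximate GB/s per direction for a socket with `lanes` lanes of `gen`."""
    return lanes * lane_gbytes_per_s(gen)


for lanes, gen in [(40, 3), (48, 3), (80, 4), (80, 5)]:
    print(f"{lanes}x Gen{gen}: ~{socket_gbytes_per_s(lanes, gen):.0f} GB/s per direction")
```

Under these assumptions, 80 Gen5 lanes work out to roughly 8x the per-socket bandwidth of 40 Gen3 lanes: 2x from the lane count and 4x from two generational doublings.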

Final Words

In the future, we expect CPUs in the PCIe Gen6 era to have significantly more PCIe lanes as well as another rough doubling of bandwidth. We hope these charts help our readers understand, and explain, why PCIe bandwidth is so much greater in 2023 servers than in those of the prior decade. We also wanted to point out the trajectory we are on, as PCIe bandwidth will increase significantly while new technologies we discuss on STH, like CXL, become more foundational.

Other than the fact that the AMD EPYC PCIe lane counts are incorrect, nice article. EPYC had 128 PCIe lanes in both 1-socket and 2-socket servers two generations ago. The 2-socket count increased to 160 PCIe lanes with GA last generation. You need to redo both your charts.

You’re wrong, Tom.

Tom – your comment is incorrect. 160x in 2P was supported with the Rome generation (EPYC 7002). There is an article linked and a video embedded in this article showing that 160 lane 2P Dell configuration which is 80 lanes per socket in 2P. What was not supported in the Rome 7002 generation, but there were demos of at the launch event, was the 192 lane 2P (96/ socket) configuration.

That is part of why we are doing this series. Many folks have an incorrect understanding of the evolution of servers.

Tom didn’t read the article. Eric showed the EPYC 7H12 doing 160 lanes, and the charts say 2 sockets, not 1. Eric had explained this in the article. It’s sad.

The title on the first graph says “…Max 2P Per Socket PCIe Lane…”, which is what it is, but the subtitle of the graph says “Maximum PCIe Lane Count In Mainstream 2-Socket Servers CPU”, which I found confusing. It sounds like the total PCIe lanes in a 2-socket server, but it is not. In my opinion, “Server’s CPU” would help clarify the meaning, or possibly “2-Socket-Servers CPU.” The wording “Per Socket PCIe Bandwidth” in the second graph was much clearer.

It also took me some time to guess that “expon” was an abbreviation for exponential and probably represents a least squares fit of

PCIeLanes=A*exp(r*t)

No mention of the exponential fit nor the values of r and A could be found in the text. These curves confused me when I looked at the graphs.
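For readers wondering how such a trend line can be computed: one common approach, not necessarily the one used for these charts, is ordinary least squares on ln(PCIeLanes), which yields ln A and r directly. Note that fitting in log space weights relative errors, so it can differ from a nonlinear least squares fit done on the raw lane counts. The function name is hypothetical, and the demo uses synthetic data, not the chart's values.

```python
import math


def fit_exponential(t: list[float], y: list[float]) -> tuple[float, float]:
    """Fit y = A * exp(r * t) by ordinary least squares on ln(y).

    Returns (A, r). Requires all y > 0 and at least two distinct t values.
    """
    n = len(t)
    if n != len(y) or n < 2:
        raise ValueError("need at least two (t, y) pairs")
    ln_y = [math.log(v) for v in y]
    t_mean = sum(t) / n
    ln_mean = sum(ln_y) / n
    # Slope of the straight-line fit ln(y) = ln(A) + r * t.
    sxx = sum((ti - t_mean) ** 2 for ti in t)
    sxy = sum((ti - t_mean) * (li - ln_mean) for ti, li in zip(t, ln_y))
    r = sxy / sxx
    A = math.exp(ln_mean - r * t_mean)
    return A, r


# Synthetic example (not the chart's data): 40 lanes in year 0, doubling by year 10.
years = list(range(11))
lanes = [40 * 2 ** (yr / 10) for yr in years]
A, r = fit_exponential(years, lanes)
print(f"A = {A:.1f}, r = {r:.4f} per year, doubling time = {math.log(2) / r:.1f} years")
```

Because the synthetic data are exactly exponential, the fit recovers A = 40 and r = ln 2 / 10; real step-wise lane counts would leave residuals around the curve.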

Finally, the graphs mention AMD Genoa in a footnote, but that footnote does not clarify whether the upcoming Genoa PCIe lane count was included in or omitted from the graph. It was apparently omitted. If omitting Genoa, it would have been better to also omit 2023 from the fit for the exponential curve representing AMD. It is this last detail I found most confusing.

Charts are right. Eric, I think you might be right, but I get what they’re doing, since there’s a lot of variability in chips outside of mainstream dualies. The footnote is on Bergamo, not Genoa. Genoa was launched in 2022, so the 2022 and 2023 values are correct. I think you meant “Bergamo”, not “Genoa”, in your post since Genoa is out. Bergamo uses the same SP5 socket, so it’ll be the same as the current Genoa unless AMD cuts lanes.

Yep, it’s right.

You are right. I meant Bergamo not Genoa. I see what you mean how using the same socket puts an upper bound on the PCIe lanes.

I’m finding these CPU names difficult to keep straight! Why can’t they just call them Zen4 and Zen4c?

I would rather have more CPU-CPU interconnects or interconnect bandwidth, or more memory channels. Perhaps one day CPUs will allow users to arbitrarily configure what is connected to the CPU. Manufacturers could then make an 8-socket EPYC platform with fewer PCIe lanes and more shared-memory compute.

Chris: the CPU-CPU interconnects on Rome/Milan, even in the 3-xGMI configuration, had more total bandwidth than Naples in its 4-link configuration. This is due to the move to PCIe 4, which doubled the bandwidth. Now, with the move to PCIe 5, the link bandwidth has doubled again. If a company made a 2-xGMI configuration, it would have the same bandwidth as a 4-xGMI configuration on PCIe 4. Basically, every new PCIe version increases the bandwidth of the CPU-CPU interconnect. Genoa also has 12 RAM channels, a 50% increase over both Milan and SPR. In the long run, CXL memory will alleviate the need to keep increasing RAM channels.
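The comparison in this comment can be checked with normalized arithmetic. This sketch assumes, as the comment does, that per-link xGMI bandwidth doubles with each PCIe generation; the unit (1 = one link at Gen3-era rates) is a hypothetical normalization, not a real xGMI link speed, and the two-link Genoa-class board is the comment's hypothetical.

```python
# Normalized socket-to-socket bandwidth under the comment's model.
# Assumption: 1 unit = one xGMI link at PCIe Gen3-era rates, and each later
# PCIe generation doubles per-link bandwidth. Real xGMI link speeds differ.
def xgmi_relative_bandwidth(links: int, pcie_gen: int) -> int:
    """Total relative bandwidth of `links` links on a `pcie_gen` platform."""
    return links * 2 ** (pcie_gen - 3)


naples_4x = xgmi_relative_bandwidth(4, 3)  # EPYC 7001, four links, PCIe Gen3 era
rome_3x = xgmi_relative_bandwidth(3, 4)    # EPYC 7002/7003, three links, PCIe Gen4 era
rome_4x = xgmi_relative_bandwidth(4, 4)    # EPYC 7002/7003, four links
genoa_2x = xgmi_relative_bandwidth(2, 5)   # hypothetical two-link board, PCIe Gen5 era
print(naples_4x, rome_3x, rome_4x, genoa_2x)
```

Under this model, three Gen4-era links (6 units) beat four Gen3-era links (4 units), and two Gen5-era links equal four Gen4-era links (8 units each).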

The reason we need more PCIe lanes is hyperconverged infrastructure. More and more companies are ditching physical SANs and moving to hyperconverged infrastructure so they can run storage and compute on the same nodes. These systems have 24 or more NVMe drives, each on a PCIe x4 link, for storage. With only 128 PCIe lanes, that leaves only 32 lanes for network connectivity. For PCIe 4, that is enough for two dual-port 100GbE cards and that’s it. Going to 160 lanes, while still having more CPU-CPU bandwidth than previous generations, means you can have four dual-port 100GbE cards on PCIe 4 or four dual-port 200GbE cards on PCIe 5. That increases your redundancy and the number of ports for better storage performance.
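The lane budget in this comment can be written out as a few lines of arithmetic. The sketch assumes, hypothetically, 24 NVMe drives at x4 each, one x16 slot per dual-port NIC, and no other devices on the high-speed lanes; the names are illustrative.

```python
# Lane budget for a hyperconverged node (hypothetical round numbers).
# Assumptions: 24 NVMe drives at x4 each, one x16 slot per dual-port NIC,
# and nothing else consuming the top-speed PCIe lanes.
NVME_DRIVES = 24
LANES_PER_DRIVE = 4
LANES_PER_NIC = 16


def nic_slots_left(total_lanes: int, drives: int = NVME_DRIVES) -> int:
    """Number of x16 NIC slots that fit after storage takes its lanes."""
    storage = drives * LANES_PER_DRIVE
    if storage > total_lanes:
        raise ValueError("not enough lanes for the storage devices")
    return (total_lanes - storage) // LANES_PER_NIC


for total in (128, 160):
    print(f"{total} lanes: {nic_slots_left(total)} x16 NIC slots after {NVME_DRIVES} x4 drives")
```

An x16 slot per NIC is assumed because a dual-port 100GbE card needs about 200 Gb/s, more than a PCIe Gen4 x8 link provides, and a dual-port 200GbE card likewise needs more than a Gen5 x8 link.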