The new NVIDIA Grace Superchip is one of our most highly anticipated new products of 2023. At SC22, I told an unnamed NVIDIA executive that I was excited about it but wanted to see it, and this individual let me know they had chips working. That was November 2022, so now in 2023, we are starting to hear more about Grace, NVIDIA’s push into completing its CPU-GPU-Interconnect trifecta.

NVIDIA Grace Superchip Features 144 Cores, 960GB of RAM, and 128 PCIe Gen5 Lanes

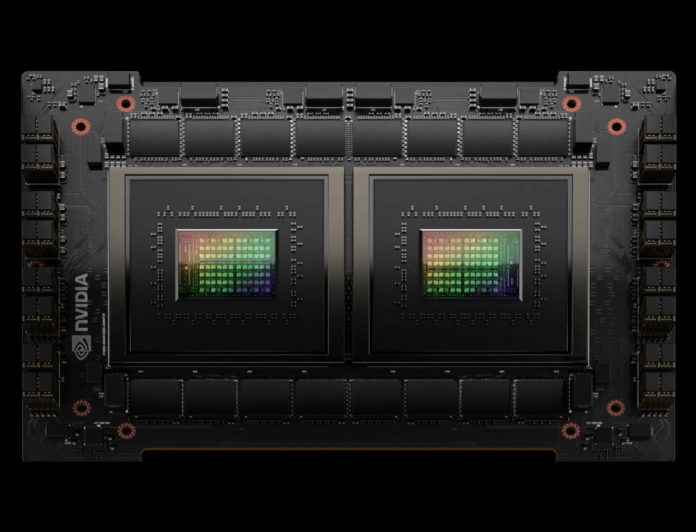

The NVIDIA Grace line will be able to put both an NVIDIA CPU and GPU on the same package, but NVIDIA is aiming beyond that. Armed with Arm Neoverse-V2 cores, the NVIDIA Grace Superchip has two 72-core Grace CPUs, integrated memory, and an NVLink-C2C interconnect between them. Here is the diagram:

The cache hierarchy for the Arm Neoverse-V2 cores is:

- 64 KB I-cache + 64 KB D-cache per core

- L2: 1 MB per core

- L3: 234 MB per superchip

Since NVIDIA is saying “234MB per superchip” as its L3 spec, we assume that figure covers the full two-CPU Grace Superchip, putting L3 cache at 1.625 MB per core.
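As a quick sanity check on that per-core figure, here is a minimal sketch of the arithmetic. It assumes, as we do above, that the 234 MB is shared across both 72-core Grace CPUs; the variable and function names are ours, not NVIDIA's.

```python
# Figures quoted in the article. Reading the L3 spec as "per two-CPU
# superchip" is our assumption, not something NVIDIA has confirmed.
L3_PER_SUPERCHIP_MB = 234
CORES_PER_GRACE_CPU = 72
CPUS_PER_SUPERCHIP = 2


def l3_per_core_mb(l3_total_mb: float, cores: int) -> float:
    """Return the average L3 capacity available per core, in MB."""
    return l3_total_mb / cores


total_cores = CORES_PER_GRACE_CPU * CPUS_PER_SUPERCHIP
per_core = l3_per_core_mb(L3_PER_SUPERCHIP_MB, total_cores)

print(f"{total_cores} cores, {per_core:.3f} MB of L3 per core")
```

If the 234 MB instead applied to a single Grace CPU, the same function called with 72 cores would give double the per-core figure, which is why the assumption matters.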

Onboard, each Grace CPU gets 500GB/s of LPDDR5X memory bandwidth. Since the spec is now up to 960GB of LPDDR5X per Superchip, that is 480GB per Grace CPU. NVIDIA will have the ability to vary this capacity.

NVIDIA is also saying that it has 8x PCIe Gen5 x16 roots in its chip, or 128 lanes, putting it around the same as a single AMD EPYC 9004 processor.
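The lane math behind that comparison is simple enough to sketch. The Grace numbers come from NVIDIA's spec as quoted here; the 128-lane figure for a single-socket EPYC 9004 is our own reference point, and the names below are just for illustration.

```python
# Grace Superchip figures quoted in the article.
PCIE_ROOTS = 8
LANES_PER_ROOT = 16

# Assumption for comparison: PCIe Gen5 lanes on a single-socket EPYC 9004.
EPYC_9004_1P_GEN5_LANES = 128

grace_lanes = PCIE_ROOTS * LANES_PER_ROOT
lane_gap = grace_lanes - EPYC_9004_1P_GEN5_LANES

print(f"Grace Superchip: {grace_lanes} PCIe Gen5 lanes")
print(f"Difference vs. single EPYC 9004: {lane_gap} lanes")
```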

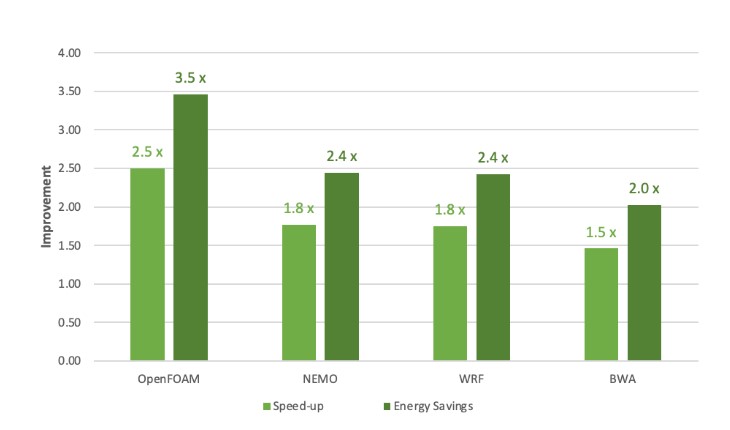

The company has started to share benchmarks of the new processor beyond the 7.1 TFLOP FP64 peak performance:

This was noted as being relative to a dual-socket AMD EPYC 7763 processor, so NVIDIA is comparing its parts to previous-gen AMD. If you want to learn more about the new AMD EPYC Genoa, see that article or our video:

If you want to learn more about last week’s Intel Xeon Sapphire Rapids, here is the video for that.

NVIDIA’s Grace Superchip is a 500W part, but that includes the LPDDR5X memory. We have been seeing roughly 5W per DDR5 RDIMM. So for AMD EPYC 9004 Genoa with 12 channels of memory, we would add ~60W to the CPU’s TDP. That leaves NVIDIA’s part drawing slightly more power, but with what should be a bit over twice the memory bandwidth of a single Genoa CPU.
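To make that comparison concrete, here is a rough back-of-the-envelope sketch. The 500W, 500GB/s per CPU, 5W per RDIMM, and 12-channel figures are from the text above. The 360W Genoa TDP and DDR5-4800 memory speed are our assumptions for a top-bin EPYC 9004 with one DIMM per channel, so treat the output as an estimate rather than a measurement.

```python
# From the article.
GRACE_SUPERCHIP_POWER_W = 500        # includes the LPDDR5X memory
GRACE_BW_PER_CPU_GBPS = 500
GRACE_CPUS_PER_SUPERCHIP = 2
WATTS_PER_RDIMM = 5                  # rough figure we have been seeing
GENOA_MEMORY_CHANNELS = 12

# Assumptions, not from the article: top-bin Genoa TDP and DDR5-4800
# with one RDIMM per channel on a 64-bit (8-byte) data path.
GENOA_TDP_W = 360
DDR5_MT_PER_S = 4800
BYTES_PER_TRANSFER = 8

# CPU plus memory power for a single Genoa socket.
genoa_dimm_power_w = GENOA_MEMORY_CHANNELS * WATTS_PER_RDIMM
genoa_total_power_w = GENOA_TDP_W + genoa_dimm_power_w

# Theoretical peak memory bandwidth in GB/s.
genoa_bw_gbps = DDR5_MT_PER_S * BYTES_PER_TRANSFER * GENOA_MEMORY_CHANNELS / 1000
grace_bw_gbps = GRACE_BW_PER_CPU_GBPS * GRACE_CPUS_PER_SUPERCHIP

power_ratio = GRACE_SUPERCHIP_POWER_W / genoa_total_power_w
bw_ratio = grace_bw_gbps / genoa_bw_gbps

print(f"Genoa CPU + DIMMs: ~{genoa_total_power_w} W")
print(f"Grace vs. Genoa power: {power_ratio:.2f}x")
print(f"Grace vs. Genoa memory bandwidth: {bw_ratio:.2f}x")
```

Swapping in a lower-TDP Genoa SKU or higher-capacity DIMMs that draw more than 5W each will shift the power ratio, so the constants at the top are the part to adjust.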

That single CPU comparison will become important. The AMD EPYC Genoa more than doubled memory bandwidth over the previous generation, and it has much higher compute performance due to adding more cores and a faster microarchitecture. Our sense is that in HPC workloads, Grace will compete with dual-socket Genoa. On the integer side, AMD should be ahead based on what we have seen with existing Arm architectures.

Final Words

Chalk this one up to excitement for a new part. NVIDIA GTC 2023 is coming up in March, and this is perhaps the part I am most excited to see. NVIDIA has not set a Grace or Grace Hopper release date, but this feels like a big enough product bringing NVIDIA into a new market that it should launch on stage at GTC, especially if we are starting to see benchmark results already.

The server world is starting to get more exciting! If you want to learn more about some of the notable features of new servers, I recently did a video outside of Intel’s HQ with Supermicro on why this new generation of servers is a big deal.

In the meantime, expect another Ampere Arm CPU plus NVIDIA GPU piece soon as well as a BlueField demo coming on STH.

In HPC and ML space wouldn’t the competitor be the AMD MI300 APU instead of Genoa?

I was wondering 900GB/s between sockets.

What is the cross socket bandwidth for Zen 4 Epyc and Intel 4th gen Xeon?

Are you sure that 480GB figure is a capacity rather than a per-second speed? It doesn’t seem a plausible capacity to get from sixteen modules, even if they’re quite tall stacks of 32Gbit devices, whilst it’s comparable bandwidth to a 4090.

SPR interconnect is UPI 2.0 which seems to be 24 bits wide and 16GT/s, so 72GByte/sec.

“Memory Size: Up to 960GB”

Switch Pro is real after all

You have mentioned the 5W per DIMM before but I have to say that there is a huge difference depending on DIMM rank. A typical 16GB ECC DDR5 DIMM will consume much less than a 64GB DIMM.

this is an example of how arm is going to grind not only intel but also amd into dust; if they can scale out and ramp fast enough is another question – probably not for another few gens

“Since the spec is now up to 960GB of LPDDR5X per Superchip, that is 480GB per Grace CPU. NVIDIA will have the ability to vary this capacity.”

How is that possible with no company manufacturing 60 GB LPDDR5X chips? One would need to stack 20x 24Gbit dies. There must be some kind of version with more chips but that should decrease bandwidth.

@opensourceservers

This is not going to grind anything. Grace is a niche CPU that is only going to be used in very few HPC applications or as companion for GPUs. None of the shown benchmarks is going to have an impact on SPR or Genoa as those are already much faster than the previous generation for these benchmarks like OpenFOAM or WRF (and BWA will benefit a lot from AVX512 but it is not a typical benchmark so no comps).

If anything it’s going to be a competitor to SPR Max but that is a very small market for applications where bandwidth is everything.

A single 9004 has 128 lanes. a dual socket 9004 has 128 lanes.

I know it’s hard to report honestly – especially when AMD is losing.

Hi DA – That 128 lane is one configuration with 4 xGMI links. There is also a 160 lane configuration with 3x xGMI links that is becoming more common given the increase in xGMI bandwidth in this generation.

We have covered this before and fairly extensively: https://www.servethehome.com/dell-and-amd-showcase-future-of-servers-160-pcie-lane-design/

Here is the video for that if it helps: https://www.youtube.com/watch?v=Oe_hwJ7hl7A

Also, the EPYC 9004 has more lanes than the 7002/7003 series with the addition of extra PCIe Gen3 lanes for features like lower-speed 1/10GbE NICs.

Today I’ve seen an internet troll get rolled by an expert. 128 max 2p epyc. lolz. We’ve deployed 30 cabinets of 160 lane epyc.

I love all this tech, but then I look at my daily use-cases, will it improve my Netflix? will it improve my Cyberpunk 2077?

There comes a time when you realise that the tech is now truly hyperscale territory.

I just wished that AMD and Intel pull their fingers outta their batty-oles, and gave desktop processors at the very least 40 pcie lanes, this whole 20/24 pcie lane fiasco is just annoying.

And that whole HEDT or whatever they called it segment is a way to rip larger batty-oles into the wallets of mortals.

Intel, AMD, think about climate change! Give us more lanes so we don’t need to spin up servers at home just to handle extra high-speed storage! If you don’t, you will be the leading causes of a whole new subjectively lethal pandemic! You know the science is right!