One of the biggest challenges of modern servers is memory bandwidth. To that end, server designers are looking at MCR DIMMs, or Multiplexer Combined Ranks DIMMs, for the 2024-2025 era of servers (and beyond) to get around current memory bandwidth constraints. We wanted to get ahead of the trend and discuss the technology a bit more for our readers.

What is an MCR DIMM or Multiplexer Combined Ranks DIMM?

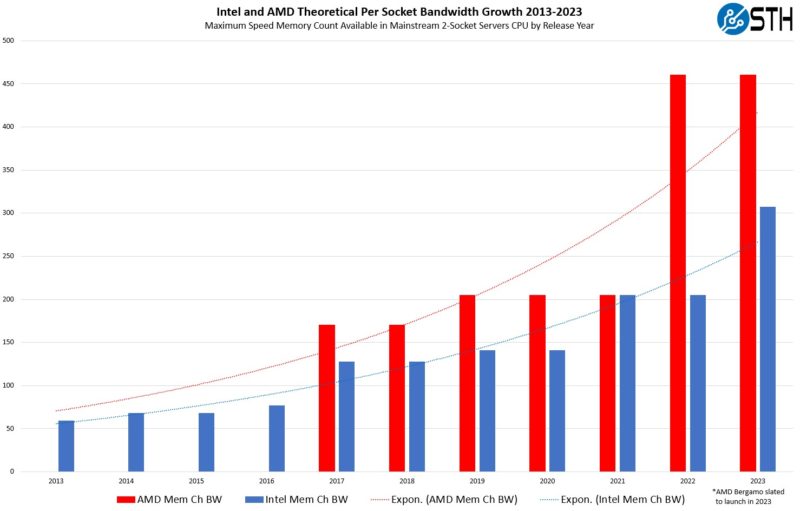

If you saw our recent Memory Bandwidth Per Core and Per Socket for Intel Xeon and AMD EPYC piece, you may have seen the memory bandwidth challenge in servers.

CPUs continue to increase core density, and it is a challenge for memory bandwidth to keep up. One innovation in the current generation of servers is DDR5, which we covered in Why DDR5 is Absolutely Necessary in Modern Servers.

There are four main ways server designers are solving for memory bandwidth challenges:

- Increase DDR speeds

- Increase DDR channels

- Increase sources of memory bandwidth (e.g. adding CXL devices)

- Use more specialized memory like HBM

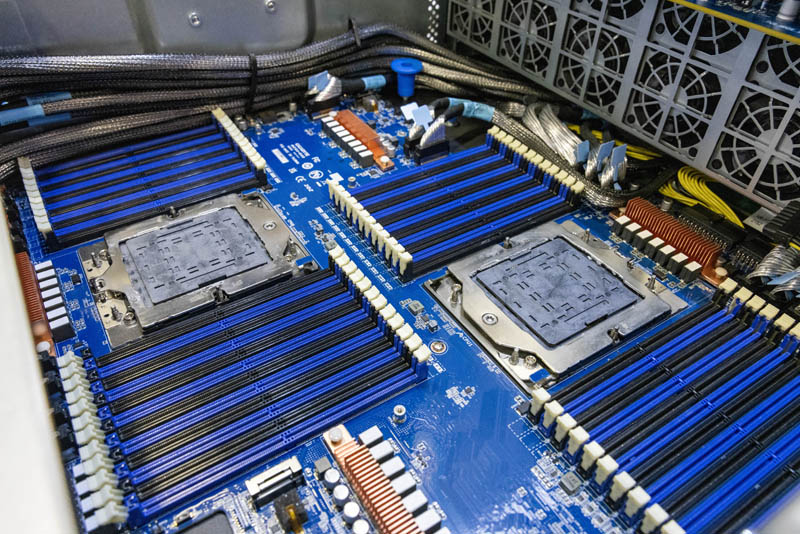

That pursuit has led us to motherboards like this one from Gigabyte:

Here we have an AMD EPYC Genoa 2P platform with a full set of 48 DIMMs. As one can see, the sockets need to be offset by roughly 8-9 DIMM slots because there is simply not enough room in a standard 19″ server to place all of the DIMM slots side by side. This type of configuration adds over 5 inches to a server, making it a bit less practical. As a result, many AMD EPYC 9004 “Genoa” servers are 12-DIMM-per-socket designs, since one DIMM in each of the 12 memory channels is already the maximum memory bandwidth configuration.

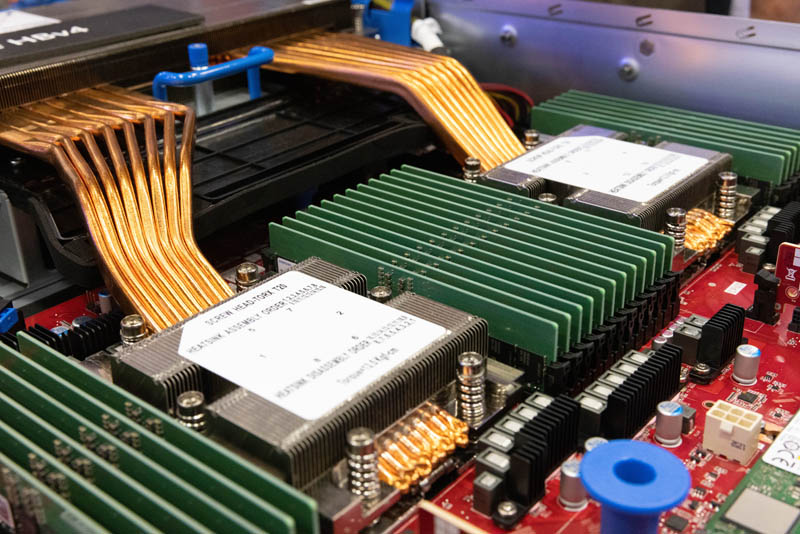

As CPU sockets get bigger, fitting even these 12 memory channels becomes difficult, so we are now at a point where server designers need a new way to expand memory bandwidth beyond just adding more DDR5 slots. Physically, adding more slots is near its limit.

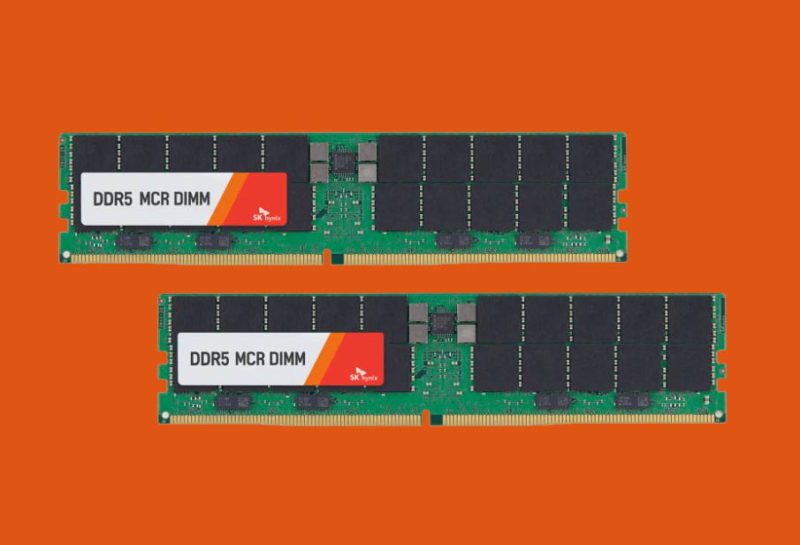

As a result, the next generation of DDR5 memory will be MCR DIMMs. SK hynix announced its DDR5 MCR DIMM at the end of 2022, but the impact is going to be felt in 2024/2025 and later.

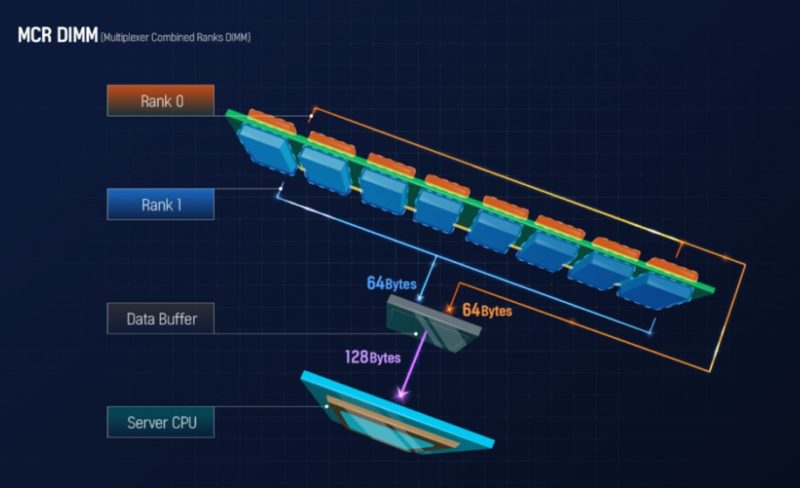

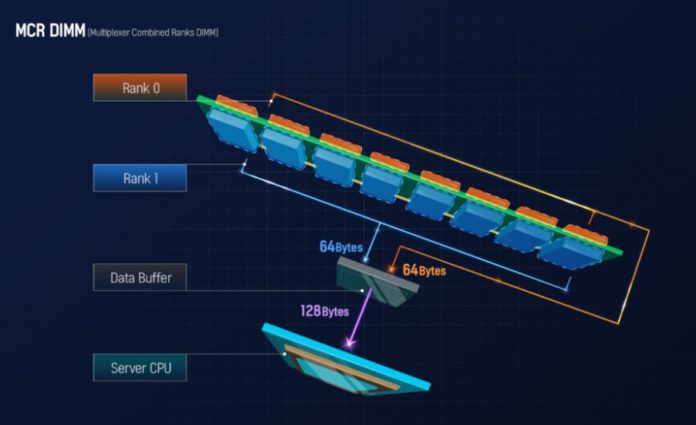

MCR DIMMs are able to operate two ranks simultaneously and deliver 128 bytes to a CPU (64 bytes from each rank), roughly doubling the performance per DIMM. The MCR DIMM utilizes a data buffer on the module to help combine transfers from each rank.
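To make the combining idea concrete, here is a toy Python model of what a multiplexing buffer does with two 64-byte bursts. It is only a sketch under simplifying assumptions: the names `mux_ranks` and `demux_host`, the 8-byte beat size, and the strict rank-by-rank alternation are illustrative choices, not details of the SK hynix design.

```python
from typing import Tuple

BURST_BYTES = 64  # bytes each rank supplies per access (from the description above)
BEAT_BYTES = 8    # assumed bytes per host-side beat; illustrative only


def mux_ranks(rank0: bytes, rank1: bytes) -> bytes:
    """Interleave one 64-byte burst from each rank into a single 128-byte transfer."""
    if len(rank0) != BURST_BYTES or len(rank1) != BURST_BYTES:
        raise ValueError("each rank must supply exactly 64 bytes")
    out = bytearray()
    for offset in range(0, BURST_BYTES, BEAT_BYTES):
        # Alternate beats: one from rank 0, then one from rank 1.
        out += rank0[offset:offset + BEAT_BYTES]
        out += rank1[offset:offset + BEAT_BYTES]
    return bytes(out)


def demux_host(transfer: bytes) -> Tuple[bytes, bytes]:
    """Host-side view: split the combined transfer back into the two bursts."""
    rank0 = bytearray()
    rank1 = bytearray()
    for offset in range(0, len(transfer), 2 * BEAT_BYTES):
        rank0 += transfer[offset:offset + BEAT_BYTES]
        rank1 += transfer[offset + BEAT_BYTES:offset + 2 * BEAT_BYTES]
    return bytes(rank0), bytes(rank1)


burst_a = bytes(range(64))        # stand-in data from rank 0
burst_b = bytes(range(64, 128))   # stand-in data from rank 1
combined = mux_ranks(burst_a, burst_b)
print(len(combined))              # 128
```

The point of the model is that each rank still works at its own pace on 64 bytes, while the host-facing side carries both bursts in the same window, which is where the roughly doubled per-DIMM throughput comes from.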

Current server DDR5 runs at 4.8Gbps, while the MCR DIMM is being targeted at 8Gbps as a starting point, going up from there. We will get an interim step in DDR5 speeds in 2023 and early 2024, but this is going to be an enormous jump for servers in late 2024 and into 2025.
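As a rough back-of-the-envelope check on those two data rates, the Python sketch below converts a per-pin rate into theoretical peak bandwidth per DIMM. It assumes the standard 64 data bits per DDR5 DIMM (two 32-bit subchannels, ECC bits excluded); the function name `peak_gb_per_s` is made up for this example, and real-world throughput will be lower than these peak figures.

```python
DATA_BUS_BYTES = 8  # 64 data bits per DDR5 DIMM (two 32-bit subchannels), ECC excluded


def peak_gb_per_s(mega_transfers_per_s: int, bus_bytes: int = DATA_BUS_BYTES) -> float:
    """Theoretical peak bandwidth in GB/s for one DIMM on one channel."""
    return mega_transfers_per_s * bus_bytes / 1000


ddr5_4800 = peak_gb_per_s(4800)  # 4.8Gbps per pin
mcr_8000 = peak_gb_per_s(8000)   # 8Gbps per pin

print(f"DDR5-4800: {ddr5_4800:.1f} GB/s")          # DDR5-4800: 38.4 GB/s
print(f"MCR-8000:  {mcr_8000:.1f} GB/s")           # MCR-8000:  64.0 GB/s
print(f"Uplift:    {mcr_8000 / ddr5_4800:.2f}x")   # Uplift:    1.67x
```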

Final Words

NVIDIA’s Grace Superchip features 144 cores, 960GB of RAM, and 128 PCIe Gen5 lanes along with almost 1TB/s of memory bandwidth, but it uses LPDDR5X memory and thus is limited in capacity. MCR DIMMs should allow standard server processors to continue increasing memory capacity while also achieving well over that 1TB/s of memory bandwidth in dual-socket servers.
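For a sense of scale on the dual-socket figure, the sketch below totals theoretical peak bandwidth across a whole platform. The 12 channels per socket are taken from the Genoa example earlier in the article; assuming a future MCR platform keeps that channel count is our assumption, as is the hypothetical function name `platform_peak_tb_per_s`.

```python
def platform_peak_tb_per_s(
    sockets: int,
    channels_per_socket: int,
    mega_transfers_per_s: int,
    bus_bytes: int = 8,  # 64 data bits per DDR5 channel, ECC excluded
) -> float:
    """Theoretical peak memory bandwidth for a platform, in TB/s."""
    mb_per_s = sockets * channels_per_socket * mega_transfers_per_s * bus_bytes
    return mb_per_s / 1_000_000


# Dual-socket, 12 channels per socket (Genoa-style layout, assumed to carry forward)
today = platform_peak_tb_per_s(2, 12, 4800)     # DDR5-4800
with_mcr = platform_peak_tb_per_s(2, 12, 8000)  # 8Gbps MCR DIMMs

print(f"DDR5-4800: {today:.2f} TB/s")     # DDR5-4800: 0.92 TB/s
print(f"MCR-8000:  {with_mcr:.2f} TB/s")  # MCR-8000:  1.54 TB/s
```

Under these assumptions, a dual-socket DDR5-4800 server sits just under the 1TB/s mark, while the same layout with 8Gbps MCR DIMMs lands around 1.5TB/s.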

Aside from MCR DIMMs, we expect CXL Memory Expansion to be more commonplace by 2025, adding memory bandwidth, albeit at a higher latency.

I’m assuming this requires revised memory controllers at the host SoC and improved signalling between host and DIMM?

Makes me think of FB-DIMMs back in the DDR2 days. The memory capacities and bandwidth we’ll see in just a couple of years are staggering.

Starting at 8Gbps is not impressive; that is actually slower than the current 4.8Gbps. Remember that the MCR memory is transferring 2x as much data per cycle, so 8Gbps MCR = 4Gbps normal DDR5.

AFAIK, the problem with DDR frequencies is maintaining the frequency over the connection from the memory controller to the memory chips. It’s not how quickly the chips can spew out data. What does this do to address the purely electrical problem of running high frequency signals over MB traces and contacts on both sides? There are already 8GT/s DDR5 modules (ok, not for servers but it should be a matter of time). How is this different from using an 8GT/s module?

Also, it seems this doubles the burst length. What happens if the CPU needs to load a single cache line? What is it to do with the extra 64 bytes?

I think by the time this is available, regular DDR5 would have caught up enough to make it dead on arrival.

That’s pretty smart. LR DIMMs already have buffer chips but those just buffer.

These MCR buffers don’t just do a chip select like LR to choose one memory chip. They have two “virtual” memory channels for input and one fast channel for output. Signal routing will be more complicated on DIMMs though (every channel needs its own traces).

This could be extended to four “channels” when we look at how high-density DIMMs are populated. But PCB routing would get even more complicated with “four channels”.

3DS DRAM could also have multiple channels (like HBM) but die sizes and cost would go up slightly (not that much though).
