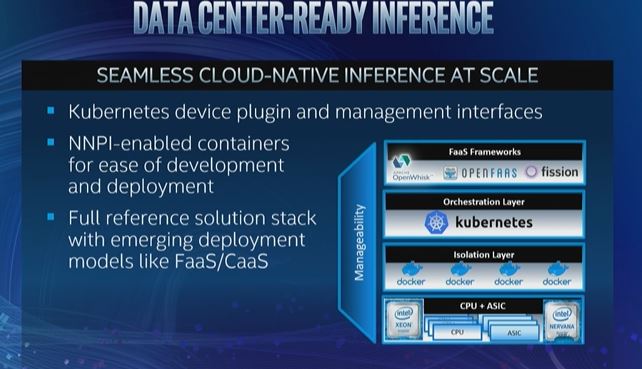

At the Intel AI Summit 2019, the company showed a number of new products coming out of its AI division, led by Naveen Rao. Today is a day of summits: as you may have seen, we are also covering the Dell Technologies Summit 2019 (incidentally, Intel is also sponsoring Dell’s event).

Intel AI Hardware Opportunity and Portfolio

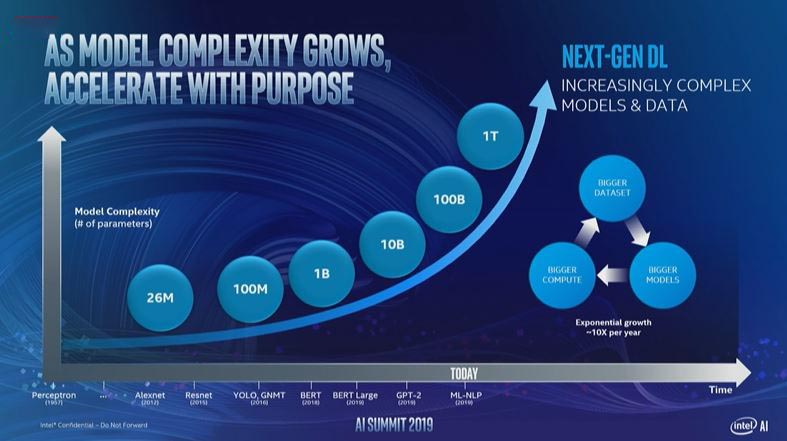

Intel sees AI models increasing in complexity at a rate of around 10x per year, and it expects a long runway ahead at this accelerated pace.

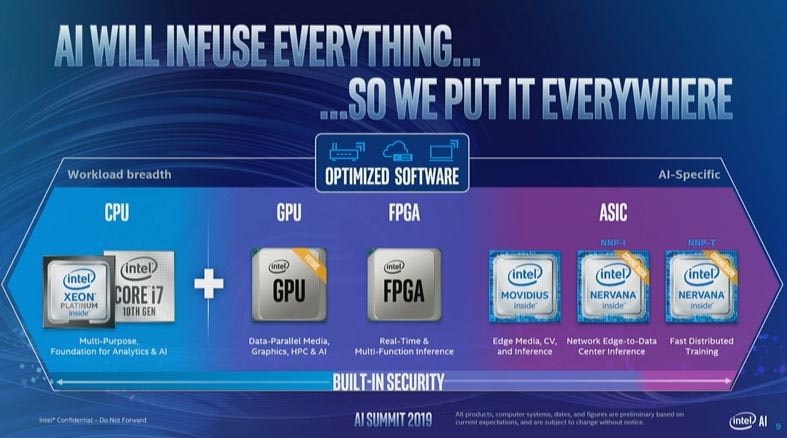

As a result, Intel is planning to offer a range of AI silicon. Even current-generation processors like the 2nd Gen Intel Xeon Scalable CPUs, the next-generation Cooper Lake (with bfloat16) and Ice Lake CPUs, and consumer chips are incorporating AI features.

During the event, Intel’s Naveen Rao covered the three Movidius and Nervana chips on the right side of that chart.

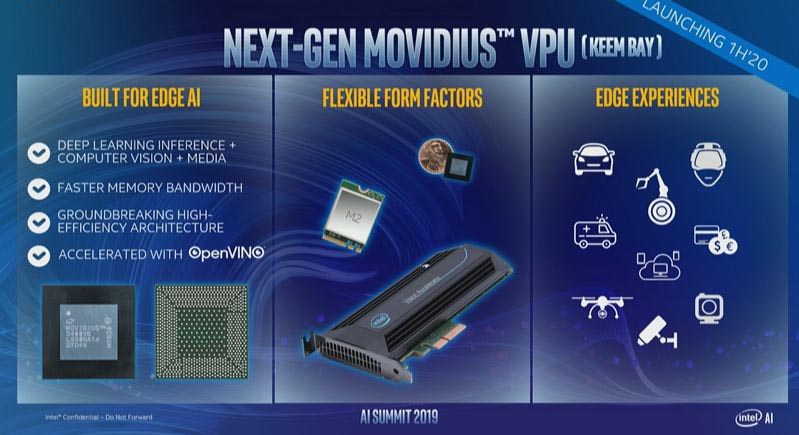

New Intel Movidius Keem Bay VPU

The first big announcement was the next-generation Movidius VPU, codenamed Keem Bay. It is designed to be faster, with more memory bandwidth, while also offering flexible form factors to meet a range of needs.

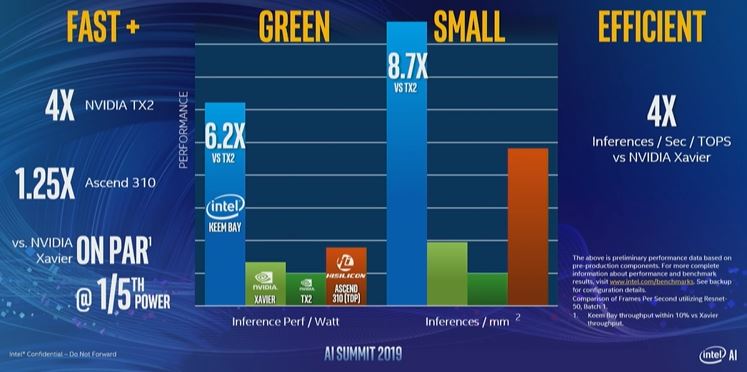

Intel is specifically positioning Keem Bay against the NVIDIA Jetson / Xavier products, although it did not discuss the new Jetson Xavier NX launched last week. Intel says Keem Bay is faster and more power-efficient than NVIDIA’s line.

Intel is also announcing the Intel Dev Cloud for the Edge today. This is a free program that lets users test their models on the Dev Cloud to find the best hardware “landing zone” for each model.

This is a huge market. Low-power devices that take in data and derive insights to trigger subsequent actions are a category we may look back on in a decade as ubiquitous table stakes.

“The Next Leap Forward Means Not Looking Back”

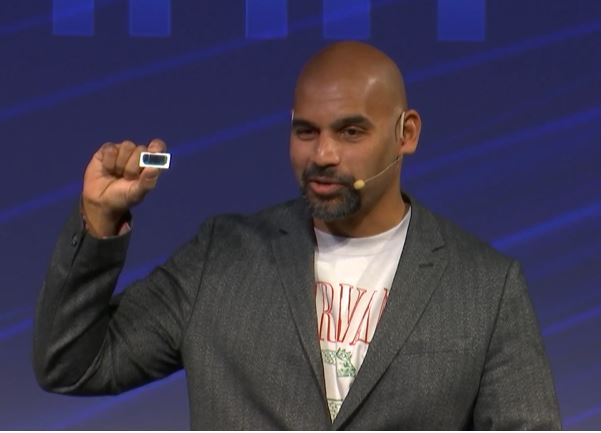

This line stuck with me from the Nervana NNP-I announcement (or re-announcement, if you saw Intel NNP-I 1000 Spring Hill Details at Hot Chips 31).

The NNP-I combines AI inferencing accelerators with x86 cores to bring AI acceleration to the edge, along with the ability to run more command and control logic on-device.

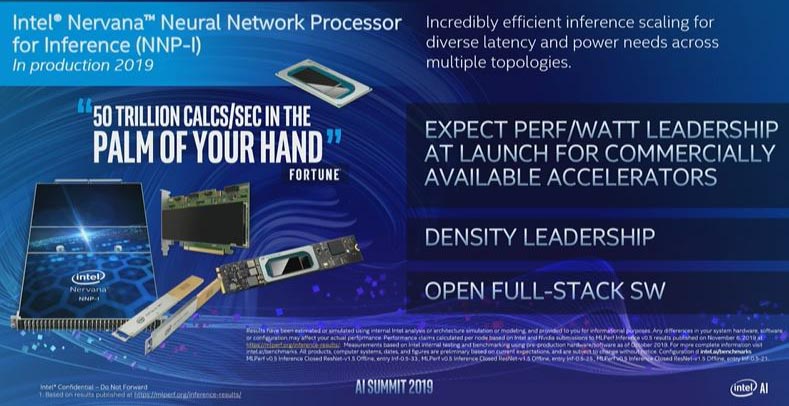

Intel showed off the NNP-I chips in a “ruler” form factor, which we saw being designed for companies like Facebook in last year’s Where Cloud Servers Come From Visiting Wiwynn in Taipei. Intel says that, with this form factor, it is getting 3.7x the compute density per U versus the NVIDIA Tesla T4. Ruler form factors offer better cooling and density for storage, and that seems to be the case for AI inferencing chips as well.

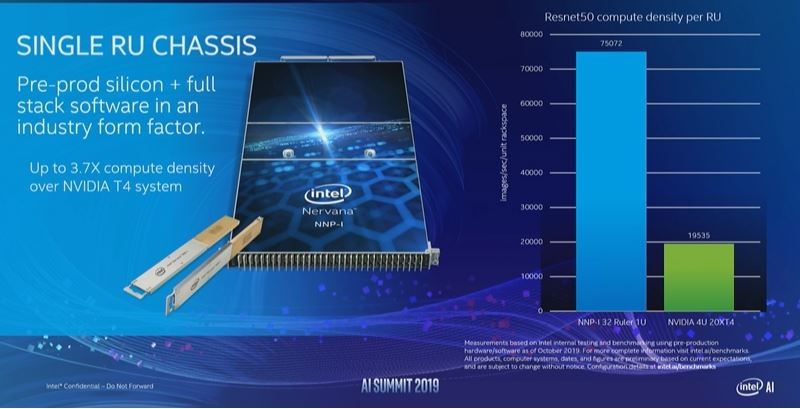

Intel also took a moment to note that the NNP-I and NNP-T are designed to be cloud-native and orchestrated with Kubernetes. To sell to large customers and to developers, Intel needs this type of support from day one.

Intel also said that the NNP-I is in production in 2019, which means we could start seeing these chips soon.

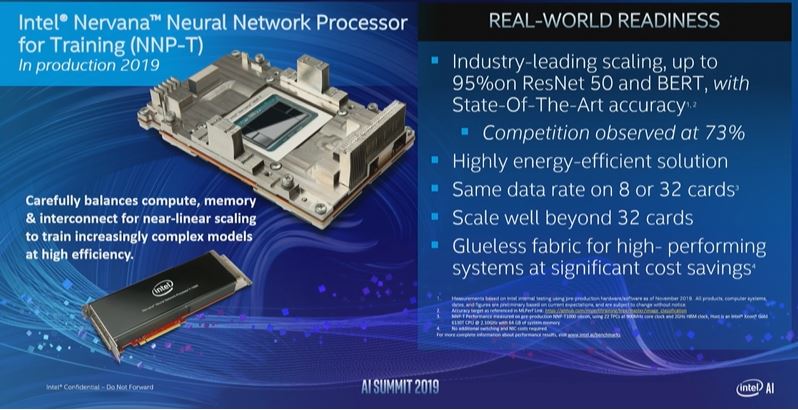

Intel NNP-T for Training

At the event, the Intel NNP-T was announced again. We are not quite sure how many times it has been announced at this point. At the same time, it looks like Intel is making a bigger commercialization push with not just Facebook but also Baidu using the new chips.

Intel says its new chip is designed for high scaling and better power savings versus what NVIDIA offers as the current standard in AI training chips.

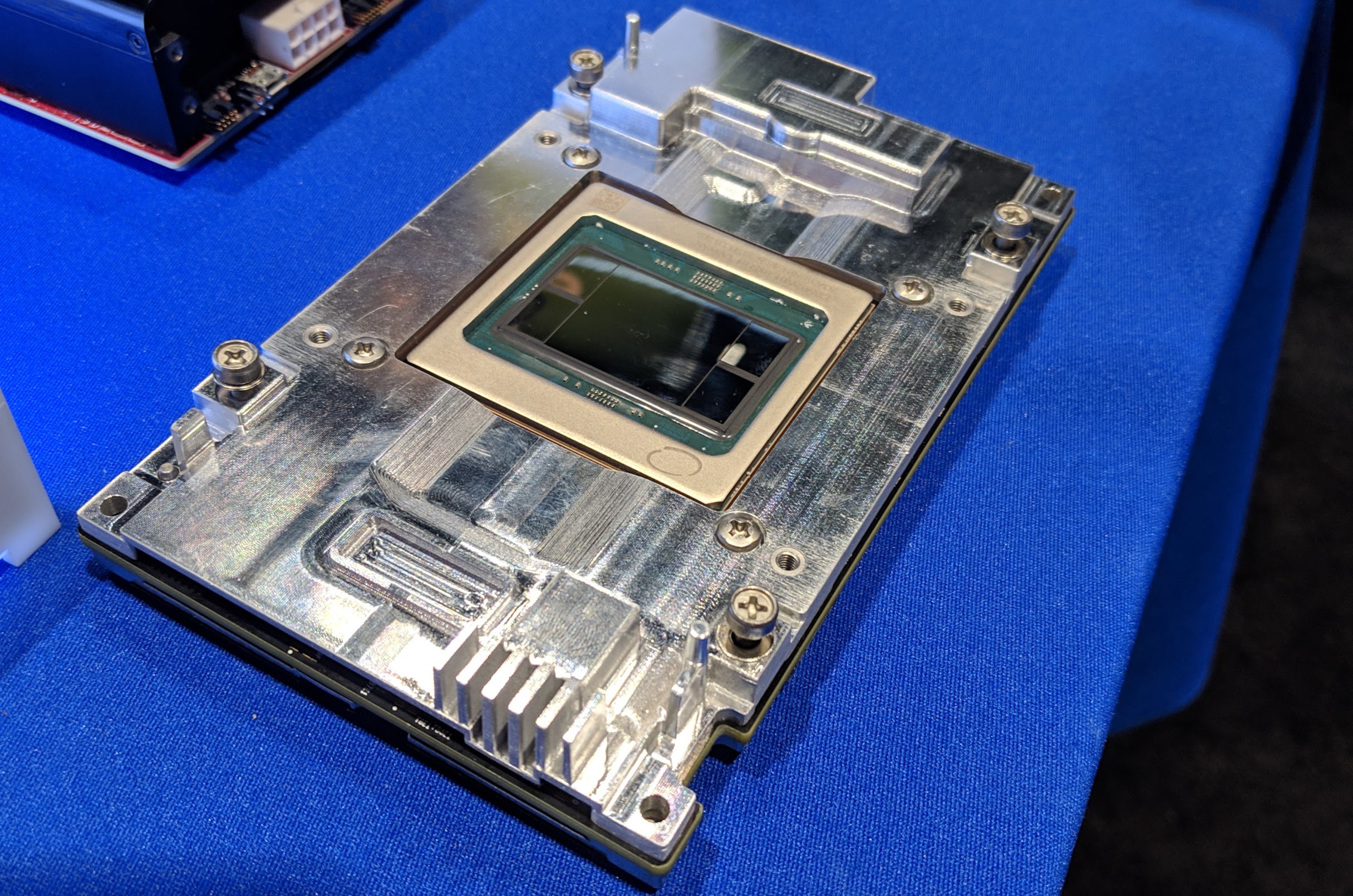

We saw the NNP in its OAM form factor at Intel Nervana NNP L-1000 OAM and at Hot Chips 31.

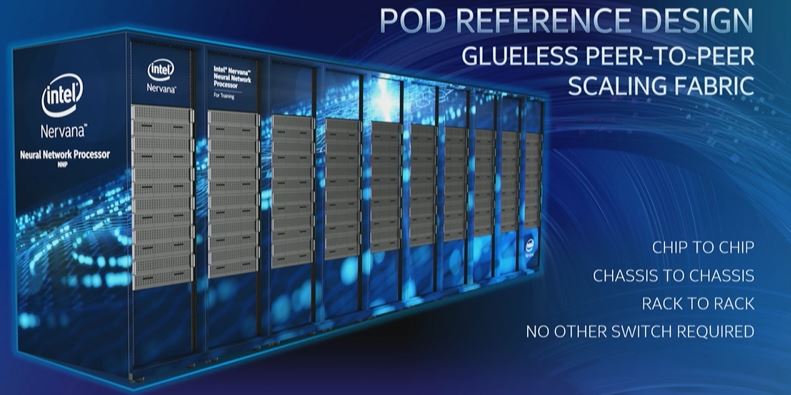

Intel wanted to show it can scale beyond just 32 chips. To do so, the company is showing off what it calls the Intel Nervana NNP Pod, a fairly direct name versus the NVIDIA DGX Superpod and DGX Pod solutions.

Intel also had Baidu on stage talking about how it is using the NNP-T with the Baidu X-MAN 4.0, which is an OAM/OAI platform based on the Inspur UBB.

We discussed the Baidu X-MAN 4 in our Interview with Alan Chang of Inspur on OCP Regional Summit 2019.

Final Words

Intel will have a seat at the table for AI in the future. Simply adding AI acceleration to its existing chips means that a range of applications, especially on the inferencing side, can utilize AI capabilities without requiring specialized hardware. Today’s event was a clear shot across NVIDIA’s bow, with the new Movidius chip targeting products like the new NVIDIA Jetson Xavier NX, the NNP-I targeting the NVIDIA Tesla T4, and the NNP-T targeting Tesla V100 systems.

It is still early days in the AI hardware world, and this is a space that will heat up in 2020 as more vendors get silicon out into the market. Intel pushing OAM with its hyper-scale partners means that NVIDIA will soon have to play in an open GPU form factor designed to work with others, beyond just PCIe devices. For its part, Intel has consistently spoken of software tooling and has a software development arm with more funding than many startups in the space. Competition that keeps this from being an NVIDIA-only market is welcome.
