Dell EMC PowerEdge XE7100 Management

The PowerEdge XE7100 offers Dell iDRAC 9. We are going to show a few screenshots from our recent Dell EMC PowerEdge C6525 review to demonstrate what the iDRAC 9 Enterprise level looks like. Logging into the web interface, one is greeted with a familiar login screen.

An important aspect of this system that many potential buyers will be interested in is its integration with existing Dell management tools. Since this is iDRAC 9, which Dell has been refining for several years across both Intel and AMD platforms, the XE7100 works just as one would expect from any other PowerEdge server. As we saw with the AMD-based C6525, iDRAC 9 also makes adding AMD nodes to an existing Xeon infrastructure transparent.

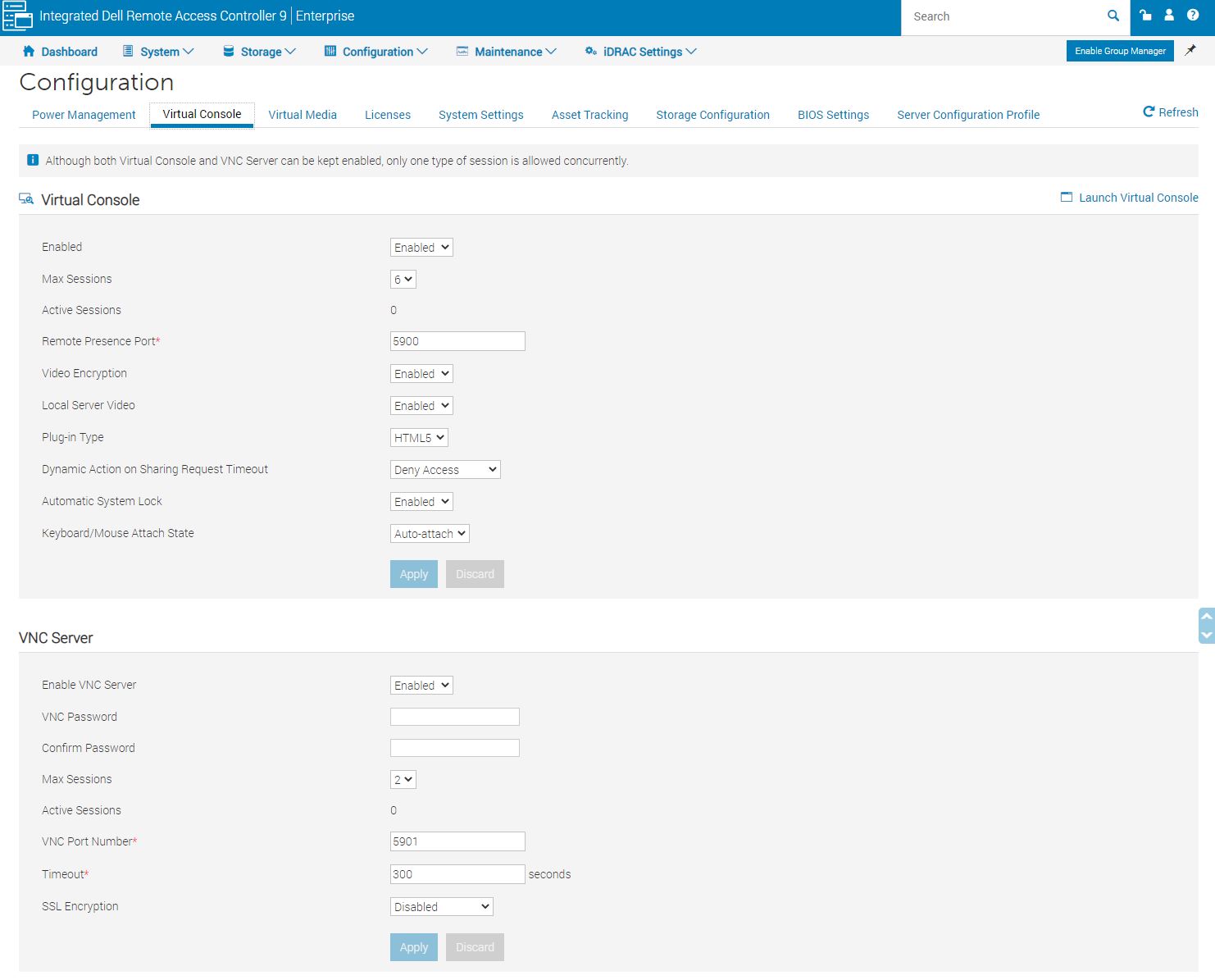

Another feature worth noting is the ability to set BIOS settings via the web interface. This is a feature we see from top-tier vendors such as Dell EMC, HPE, and Lenovo, but one that many other vendors in the market lack.

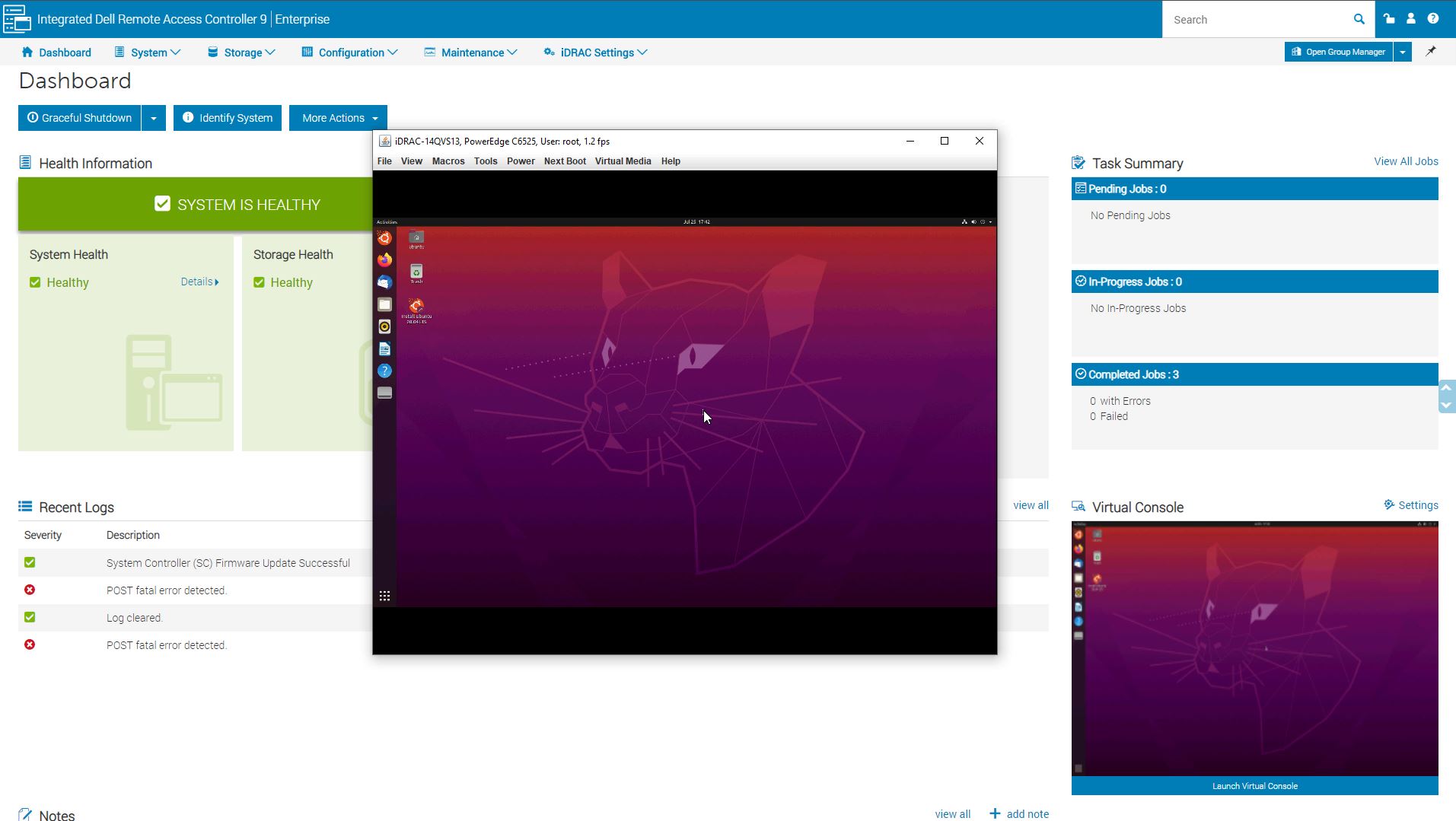

The iKVM feature is a must-have for any server today, as it offers one of the best ROIs when it comes to troubleshooting. iDRAC 9 features several iKVM console modes, including Java, ActiveX, and HTML5. Here we are pulling a view of the legacy Java version from our C6525 review.

Modern server management solutions such as iDRAC are essentially embedded IoT systems dedicated to managing bigger systems. As such, iDRAC has a number of configuration settings for the service module, for example to set up proper networking. This even includes detailed asset tracking to monitor what hardware is in clusters. Over time, servicing clusters often means configurations drift, so features like this help the business side of IT keep track of its assets.

We probably do not cover this enough, but Dell has other small features as well. One example is selecting the boot device for the next boot, which is important if you want to boot from iKVM virtual media for servicing. Dell's solution is extremely well developed and a step above white box providers in terms of breadth of features. Many providers offer the basic set, but Dell goes beyond it.
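For scripted workflows, a one-time boot override like this can also be set through the DMTF Redfish API that iDRAC 9 exposes. The following is a minimal sketch using only the Python standard library. It assumes the standard Redfish `Boot.BootSourceOverrideEnabled` and `Boot.BootSourceOverrideTarget` properties and Dell's usual `System.Embedded.1` system ID; the host address and credentials are placeholders, and it has not been validated against the XE7100, so check your firmware's Redfish documentation first.

```python
import base64
import json
import ssl
import urllib.request

# Dell's usual Redfish ID for the host system (assumption); check /redfish/v1/Systems on your iDRAC.
SYSTEM_PATH = "/redfish/v1/Systems/System.Embedded.1"


def one_time_boot_payload(target: str = "Cd") -> dict:
    """Redfish PATCH body that overrides the boot device for the next boot only.

    "Cd" selects an optical device, which is how a virtual-media ISO usually appears;
    other standard targets include "Pxe", "Hdd", and "BiosSetup".
    """
    return {
        "Boot": {
            "BootSourceOverrideEnabled": "Once",  # revert to the normal boot order afterwards
            "BootSourceOverrideTarget": target,
        }
    }


def build_request(host: str, user: str, password: str,
                  target: str = "Cd") -> urllib.request.Request:
    """Prepare, but do not send, an authenticated PATCH request to the iDRAC."""
    body = json.dumps(one_time_boot_payload(target)).encode("utf-8")
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        f"https://{host}{SYSTEM_PATH}",
        data=body,
        method="PATCH",
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Basic {token}",  # HTTP Basic auth
        },
    )


def send(req: urllib.request.Request, verify_tls: bool = True) -> int:
    """Send the request and return the HTTP status code."""
    ctx = ssl.create_default_context()
    if not verify_tls:
        # Many iDRACs use self-signed certificates; only skip verification on a trusted network.
        ctx.check_hostname = False
        ctx.verify_mode = ssl.CERT_NONE
    with urllib.request.urlopen(req, context=ctx) as resp:
        return resp.status
```

For example, `send(build_request("192.0.2.10", "root", "password"), verify_tls=False)` would request a one-time boot from virtual CD on a hypothetical iDRAC at that address. Building the request separately from sending it makes it easy to inspect the payload before touching a live system.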

Something we did not like is that the Enterprise license is an upgrade even on these higher-end servers. In 2020 and beyond, iKVM needs to be a standard server feature, especially with COVID restrictions limiting access to data centers; it has become a workplace safety feature. Perhaps at the lower end of the market, saving a few dollars on the Enterprise license makes sense. In this segment, the upgrade feels like “nickel and diming” Dell’s loyal customers.

Next, we are going to take a look at the performance followed by power consumption and our closing thoughts.

Dell EMC PowerEdge XE7100 Performance

In terms of performance, we had a fairly significant challenge. These systems are generally designed for scale-out storage solutions such as Ceph, deployed by the rack, with 100 disks per node or, in the dual-node configuration, 50 disks per node. Our challenge is that it is hard to test scale-out storage with a single system, and our lab’s Ceph cluster migrated to an all-flash setup in 2018. So instead, we benchmarked individual components and functions and compared the results to baseline figures we have already measured in other systems designed specifically around those components. This methodology shows whether the XE7100 performs at, or below, the level we expect from those components in other systems.

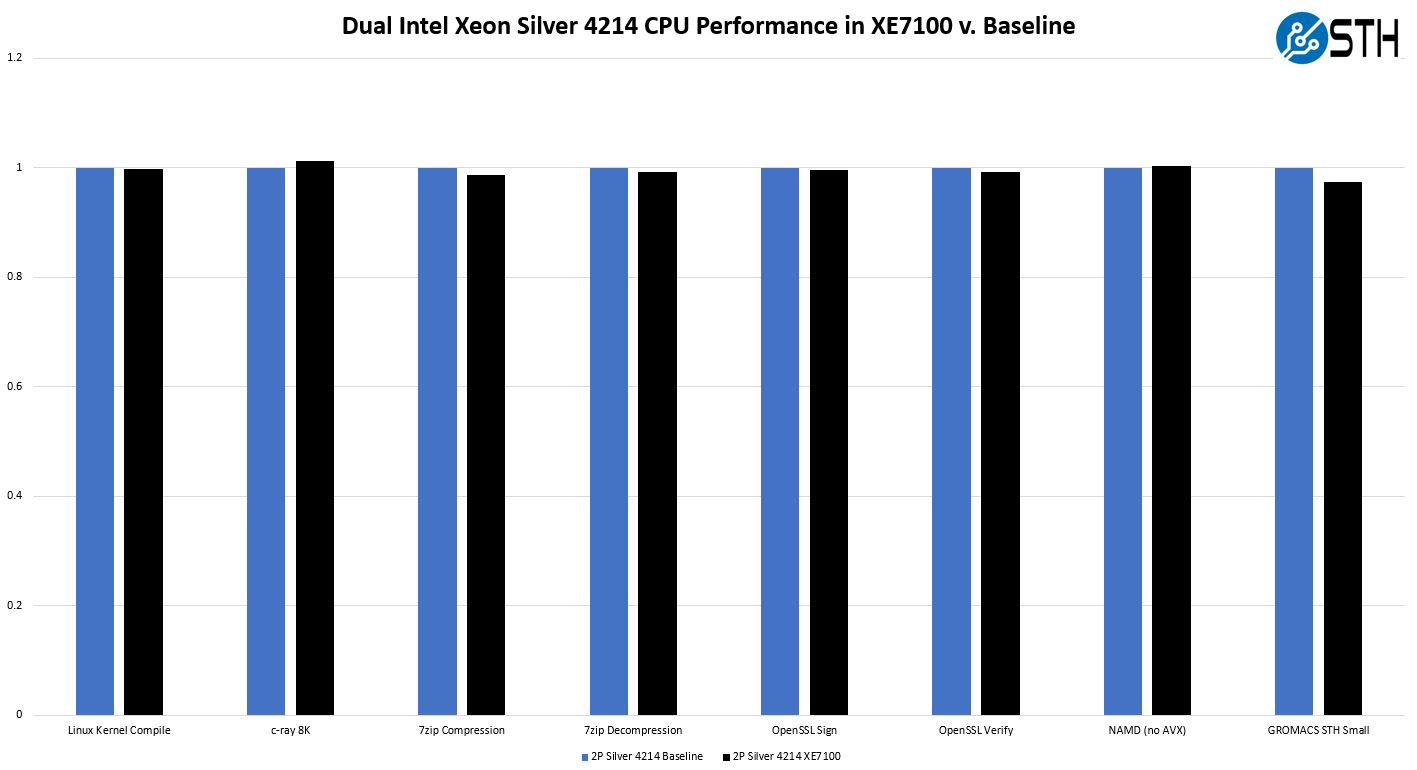

Dell EMC PowerEdge XE7100 CPU Compute Performance

Here we compared the compute performance of the dual Intel Xeon Silver 4214 CPUs to the baseline testing we do for CPUs at STH.

As you can see, this system differs little from our baseline. These are not the highest-wattage CPUs, but the results show that performance is about what we would expect and within what we consider testing variance.

Dell EMC PowerEdge XE7100 Network Storage Performance

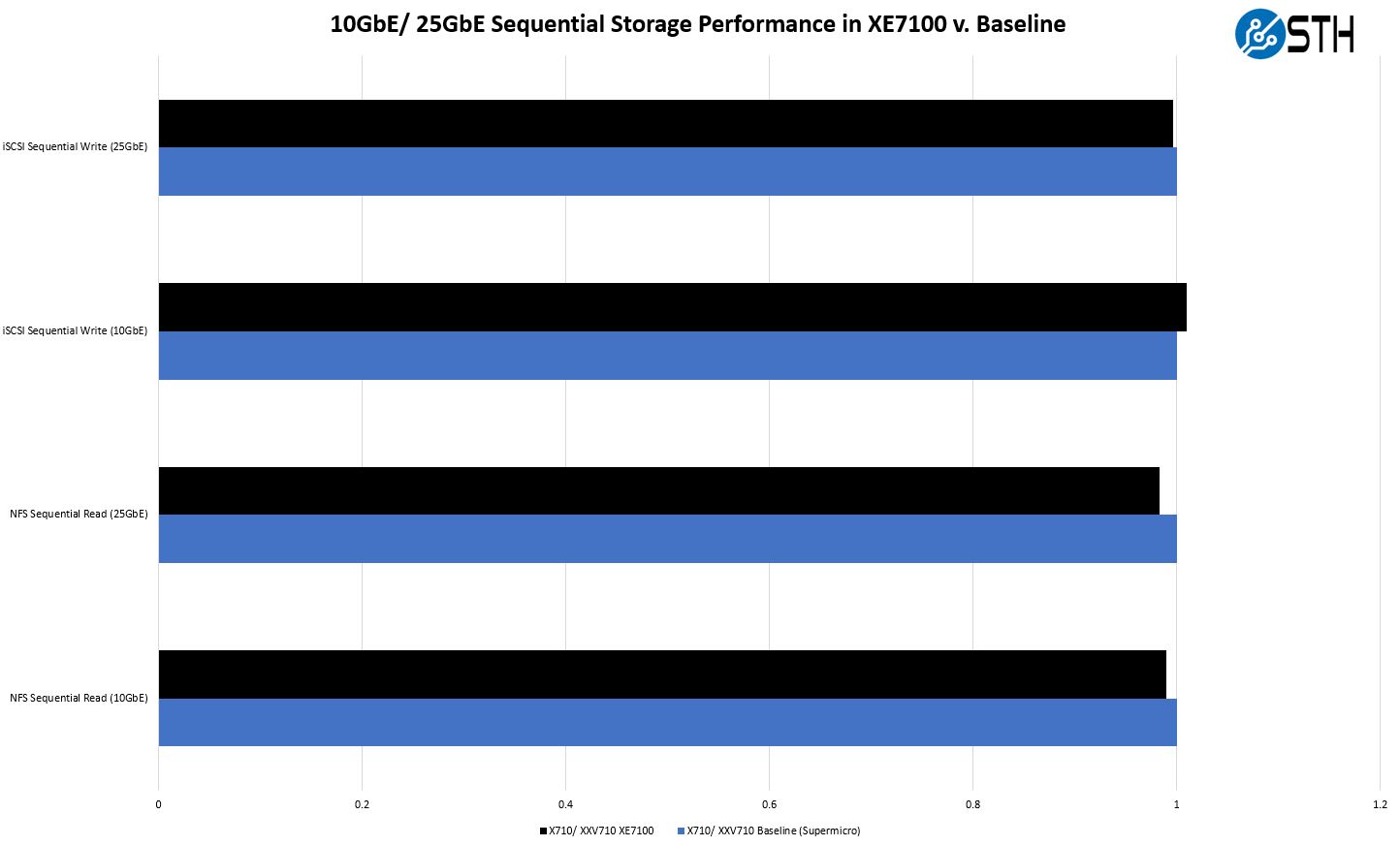

Since scaling out with our Ceph cluster was impractical, we set up the disks as targets and accessed them over NFS and iSCSI using both NIC types we had for the system: the 10GbE Intel X710 NIC in the mezzanine slot and, in the half-width nodes, the 25GbE XXV710.

Because we had far more storage bandwidth than network bandwidth, we essentially saturated the links with both NFS and iSCSI. The full chart was very repetitive, so we streamlined the results into these four categories.
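To put that saturation in perspective, here is a rough back-of-the-envelope sketch of how few hard drives it takes to fill each link at raw line rate. The ~140 MB/s sustained per-drive figure is an assumption (a conservative number for a 7200 RPM HDD), and NFS, iSCSI, and TCP overhead are ignored, so in practice the links saturate even sooner.

```python
import math

PER_DRIVE_MB_S = 140.0  # assumption: conservative sustained rate for one 7200 RPM HDD


def link_mb_per_s(gbit_per_s: float) -> float:
    """Raw Ethernet line rate in MB/s (decimal units), ignoring protocol overhead."""
    return gbit_per_s * 1000 / 8


def drives_to_saturate(gbit_per_s: float, per_drive_mb_s: float = PER_DRIVE_MB_S) -> int:
    """Smallest number of drives whose combined sequential throughput reaches the link rate."""
    return math.ceil(link_mb_per_s(gbit_per_s) / per_drive_mb_s)


if __name__ == "__main__":
    for nic, gbps in [("10GbE X710", 10), ("25GbE XXV710", 25)]:
        print(f"{nic}: {link_mb_per_s(gbps):.0f} MB/s, "
              f"saturated by about {drives_to_saturate(gbps)} drives")
    # Even a half-width node's 50 drives far exceed either link.
    print(f"50 drives: {50 * PER_DRIVE_MB_S:.0f} MB/s aggregate")
```

Under these assumptions, about 9 drives fill a 10GbE link and 23 fill a 25GbE link, a small fraction of the 50 or 100 drives per node.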

Dell EMC PowerEdge XE7100 NVIDIA Tesla V100 Training Performance

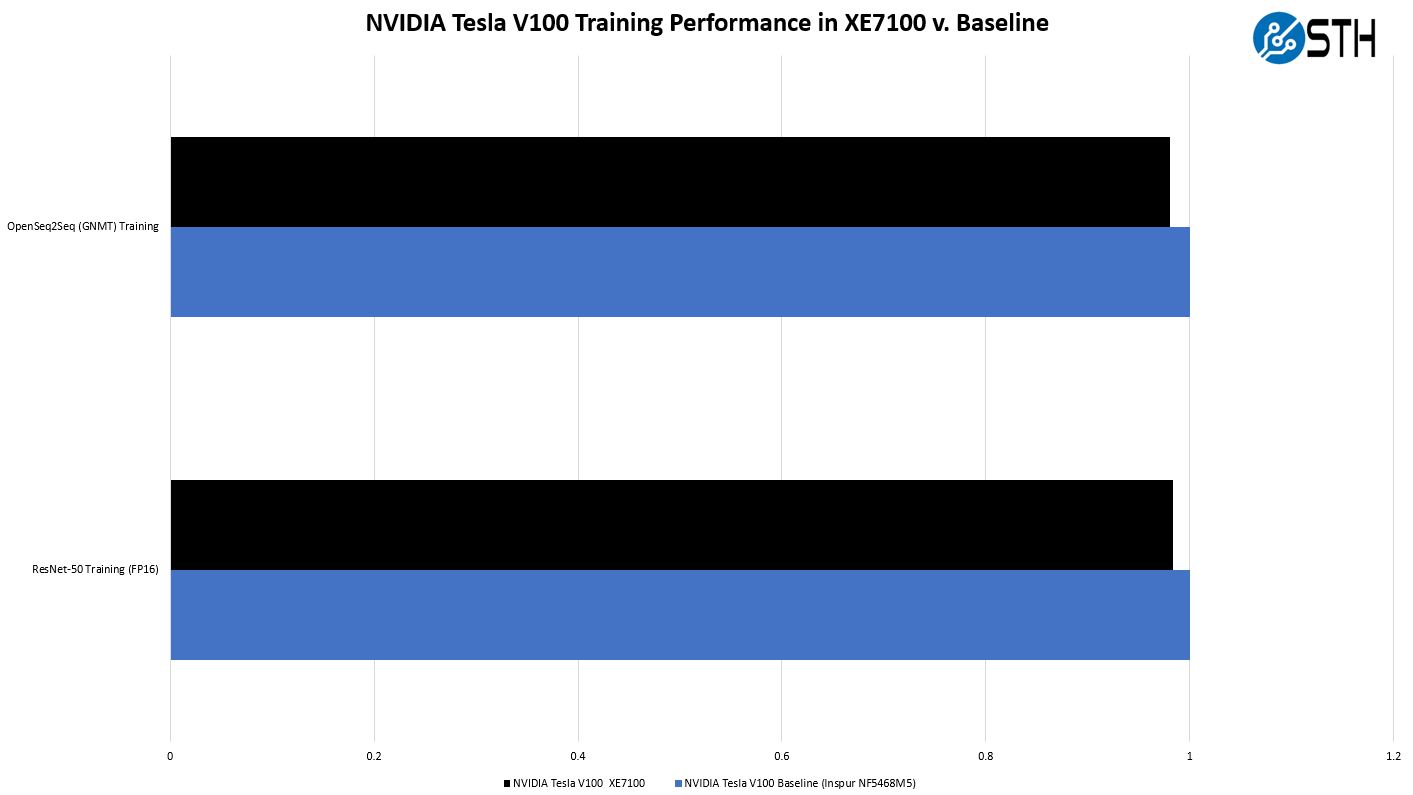

We compared the NVIDIA Tesla V100 in the XE7100 to a single-GPU result from a dedicated training server. Clearly, an 8x or 10x GPU server is going to be faster, but we wanted to see whether the system could adequately cool an NVIDIA V100.

Here we saw perhaps the biggest drop-off, but it is only around 2% versus the dedicated training server. Realistically, if one is training on only a single GPU, training locally within the node, without having to send data over the network, will more than make up for that 2%.

Dell EMC PowerEdge XE7100 NVIDIA T4 Inference Performance

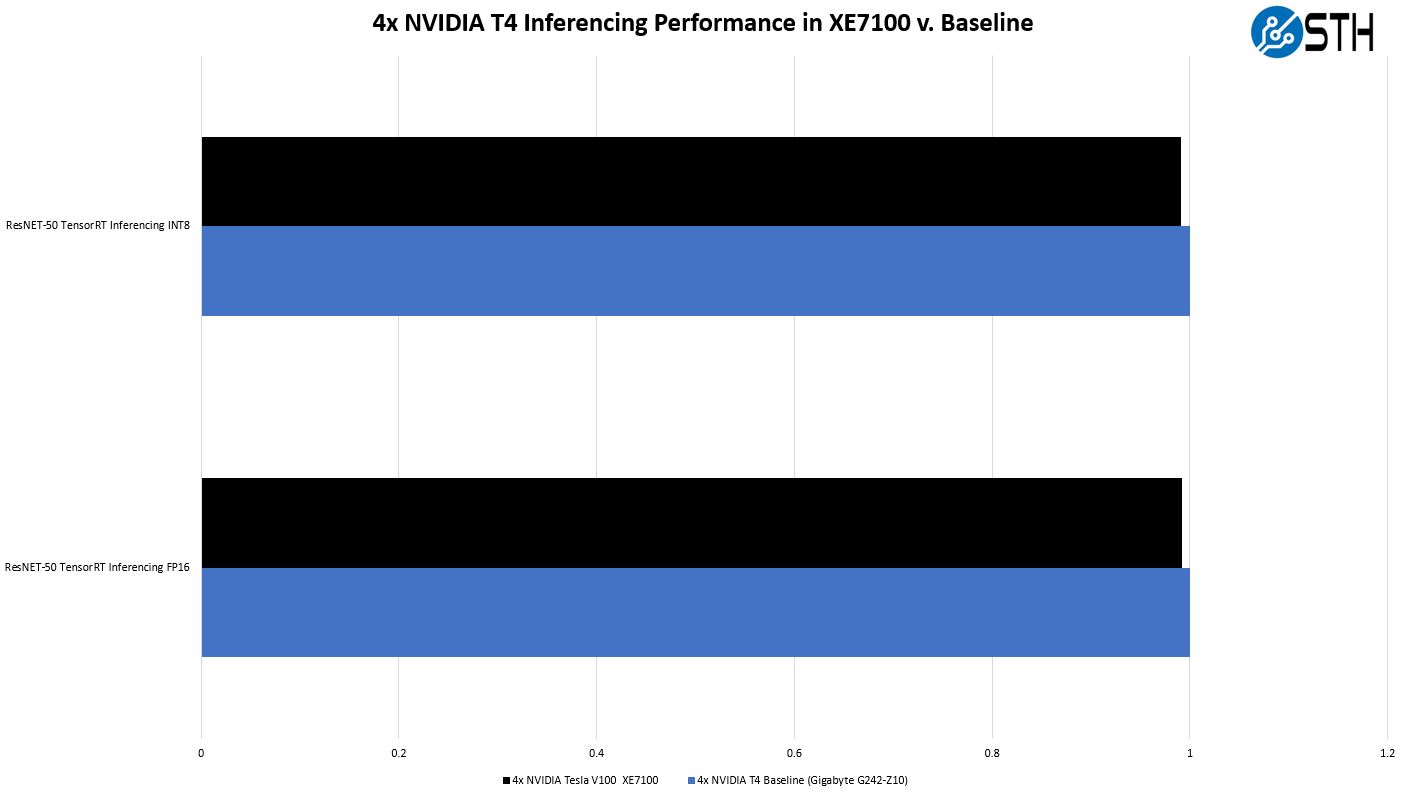

Likewise, we wanted to see the NVIDIA T4 performance using a similar methodology.

Again, we see very similar performance, which is why it can make sense to run inference locally rather than over the network. If performance were significantly lower, we would not call this a worthwhile feature, but Dell has made it work.

Petabyte Scale

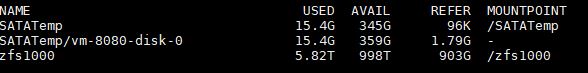

For our readers wondering, yes, we did make a petabyte ZFS array with this system.

Since we had only 1.2PB of raw capacity, we would not recommend this configuration for production, but we just wanted to see 1PB of storage.
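A rough sketch of why 1.2PB raw is tight for 1PB usable: with 100 drives, 1.2PB implies 12TB drives, and any redundancy eats into that quickly. The layouts below are hypothetical (the review does not say which vdev layout was used), and the function ignores ZFS metadata, slop space, and RAIDZ padding, so real figures come out lower.

```python
import math

RAW_PB = 1.2
DRIVES = 100
DRIVE_TB = RAW_PB * 1000 / DRIVES  # implied per-drive capacity: 12 TB


def usable_pb(vdevs: int, width: int, parity: int, drive_tb: float = DRIVE_TB) -> float:
    """Idealized usable PB for `vdevs` vdevs, each `width` drives wide with `parity` parity drives.

    Ignores ZFS metadata, slop space, and RAIDZ padding, so real usable space is lower.
    """
    if vdevs * width > DRIVES:
        raise ValueError("layout needs more drives than the chassis holds")
    return vdevs * (width - parity) * drive_tb / 1000


def pb_to_pib(pb: float) -> float:
    """Convert decimal petabytes (10**15 bytes) to binary pebibytes (2**50 bytes)."""
    return pb * 10**15 / 2**50


if __name__ == "__main__":
    layouts = {  # hypothetical layouts for illustration only
        "100 single-drive vdevs (no redundancy)": (100, 1, 0),
        "4 x 25-wide RAIDZ2": (4, 25, 2),
        "10 x 10-wide RAIDZ2": (10, 10, 2),
    }
    for name, (vdevs, width, parity) in layouts.items():
        pb = usable_pb(vdevs, width, parity)
        print(f"{name}: {pb:.3f} PB ({pb_to_pib(pb):.3f} PiB)")
```

Under these assumptions, 10-wide RAIDZ2 already falls short of 1PB, and even very wide RAIDZ2 vdevs clear it only in decimal units.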

Next, we are going to get to power consumption and our final thoughts.

Just out of curiosity, when a drive fails, what sort of information are you given regarding the location of said drive? Does it have LEDs on the drive trays, or are you told “row x, column y” or ???

On the PCB backplane and on the drive trays you can see the infrastructure for two status LEDs per drive. The system is also laid out to have all of the drive slots numbered and documented.

Unless you are into Ceph or the like and are okay with huge failure domains (the only way to make Ceph economical for capacity), I just don’t understand this product. The lack of dual-path (HA) access makes it a no-go. Perhaps there are places where a PB+ outage is no biggie.

I'm not sure what software you would run on this. Most clustered file systems and object storage systems I know of recommend smaller nodes, primarily because of rebuild times; one vendor I know recommends 10 to 15 drives per node, with drives no larger than 8TB.

I enjoyed your review of this system. It definitely has cool features, and I love the flexibility of the GPU/SSD options. As you mentioned, this would not be an individual setup but rather integrated into a clustered setup. I’d also imagine it has the typical iDRAC functionality and all the Dell basics for monitoring.

After all the fun of putting it together, do you get to keep it for a while and play with it, or, once you have reviewed it, do you just pack it up and ship it out?

Hans Henrik Hape, even with Ceph this is too big: losing 1.2PB because one server fails means rebalancing, plus network and CPU load on the remaining nodes. It’s just a bad idea, not from a data-loss point of view but from the point of view of maintaining operations during a failure.
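The rebalancing concern can be roughly quantified. This sketch estimates the best-case time to move a failed node's data at a given aggregate network rate. It assumes the node was full (1.2PB) and that recovery runs at full line rate, which real recovery traffic, usually throttled to protect client I/O, is unlikely to achieve; the `efficiency` parameter is a hypothetical knob for that.

```python
def transfer_hours(data_tb: float, gbit_per_s: float, efficiency: float = 1.0) -> float:
    """Best-case hours to move `data_tb` terabytes (decimal) at `gbit_per_s` aggregate throughput.

    `efficiency` (0 < efficiency <= 1) scales the rate to account for protocol
    overhead and recovery throttling; 1.0 means full line rate.
    """
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    bits = data_tb * 1e12 * 8
    seconds = bits / (gbit_per_s * 1e9 * efficiency)
    return seconds / 3600


if __name__ == "__main__":
    # Assumption: the failed node was full, i.e. 1.2PB = 1200 TB must be re-replicated.
    for gbps in (25, 100):
        hours = transfer_hours(1200, gbps)
        print(f"{gbps} Gbps: {hours:.1f} h ({hours / 24:.1f} days)")
```

Even at a full 25 Gbps, re-replicating 1.2PB takes over 100 hours (about 4.4 days), and at 100 Gbps it is still more than a day.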

Great job STH and Patrick, you never stop surprising us with these kinds of reviews. Since you configured the system as a raw 1.2PB, can you check the available internal bandwidth using something like:

iozone -t 1 -i 0 -i 1 -r 1M -s 1024G -+n

I would love to know: with no parity drives, is it possible for the bandwidth to reach 250MBps × 100 HDDs, i.e. 25GBps? I’m sure there will be bottlenecks, maybe the PCIe bus speed for the HBA controller or a CPU limitation. But since you have it, it would be nice to know.

Again, thanks for the great reviews.

“250MBpsx100HDD i.e. 25GBps”: any 7200 RPM drive is capable of ~140 MB/sec sustained, so 100 of them would be ~14 gigaBYTES per second. Ergo, a 100 Gbps connection would then propel this server to hypothetical ~10 GB/sec transfers. As it is, 25 Gbps would limit it quite quickly.
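The two estimates above fit a simple bottleneck model: end-to-end throughput is capped by the slowest stage. In this sketch the per-drive rates (140 and 250 MB/s) come from the comments above, while the HBA's PCIe 3.0 x8 link is a hypothetical assumption, since the review does not state how the drives are attached; SAS expanders and CPU limits are not modeled.

```python
from typing import Optional, Tuple


def pcie3_gb_s(lanes: int) -> float:
    """Approximate PCIe 3.0 bandwidth per direction in GB/s: 8 GT/s per lane, 128b/130b encoding."""
    return lanes * 8 * (128 / 130) / 8


def bottleneck(drives: int, per_drive_mb_s: float, hba_lanes: int,
               network_gbit_s: Optional[float] = None) -> Tuple[str, float]:
    """Return the slowest stage and its throughput in GB/s.

    Leave `network_gbit_s` as None for a local test such as iozone on the host.
    """
    stages = {
        "disks": drives * per_drive_mb_s / 1000,
        "HBA PCIe 3.0 link": pcie3_gb_s(hba_lanes),
    }
    if network_gbit_s is not None:
        stages["network"] = network_gbit_s / 8  # raw line rate, no protocol overhead
    name = min(stages, key=stages.get)
    return name, stages[name]


if __name__ == "__main__":
    HBA_LANES = 8  # hypothetical: the actual HBA attachment is not stated in the review
    for per_drive in (140, 250):
        print(per_drive, "MB/s per drive, local:", bottleneck(100, per_drive, HBA_LANES))
        print(per_drive, "MB/s per drive, over 25GbE:", bottleneck(100, per_drive, HBA_LANES, 25))
```

With this hypothetical x8 link, a local test would top out near 7.9 GB/s whether the drives do 140 or 250 MB/s, and over 25GbE the network caps everything at about 3.1 GB/s.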

A great review of this awesome server! I wish I had a few hundred K$ to spare and needed a couple of them!

Happy wise (wo)men who’ve got to play with such grownup toys.

There is a typo in the conclusion section: compeition -> competition.

Otherwise, a really cool review of a nice “toy” I sadly will never get to play with.

>AMD CPUs tend to have more I/O and core count which also means more power consumption

Oh really?

Perfect for my budget conscious Plex

James, if you look at Milan as an example, AMD does not even offer a sub-155W TDP CPU in the EPYC 7003 series. We discussed why in that launch review; it comes down to the I/O die using so much power.

While nothing was mentioned about prices, Dell typically charges at least a 100% premium for hard drives with its logo (none of which are made by Dell), despite a much shorter warranty than the normal 5 years. So a combination of a 1U dual-CPU (AMD EPYC) server and a 102- or 106-bay 4U SAS JBOD from WD or Seagate will be MUCH cheaper, faster (in the right configuration), and much easier to upgrade if needed (the server part improves faster than SAS HDDs do).

Color me unimpressed by this product despite impressive engineering.

Definitely getting one for my homelab

I just managed to purchase the 20x M.2 DSS FE1 card for my Dell R730xd. I did not do too much testing, but it works (in both ESXi and TrueNAS). And the cost of this card is an absolute bargain. Feel free to contact me about the card.