Dell EMC PowerEdge XE7100 Compute Options Overview

Recall that at the rear of the system we have three large fan modules and two power supplies at the top of the chassis. That is all typical of 4U JBODs and systems. What the Dell engineers have done is create a flexible compute solution to go along with all of this storage. That compute solution effectively occupies the bottom of the chassis behind the expander modules.

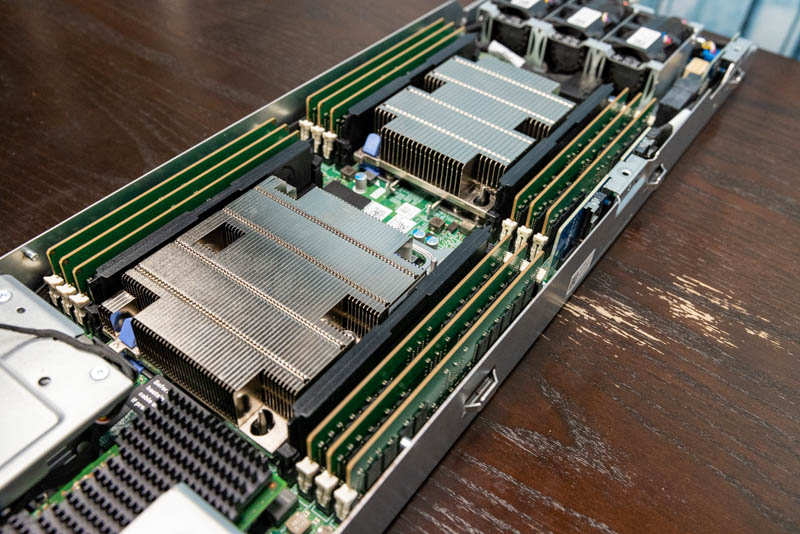

The unit we are using for photos is the 2x half-width compute node solution. These modules may look familiar as the motherboard is very similar to what is found in the C6425 chassis. Each node here has two Intel Xeon Scalable CPUs, whereas we reviewed an EPYC version in our Dell EMC PowerEdge C6525 2U4N server review. Still, Dell is utilizing a high-volume part, which tends to increase quality.

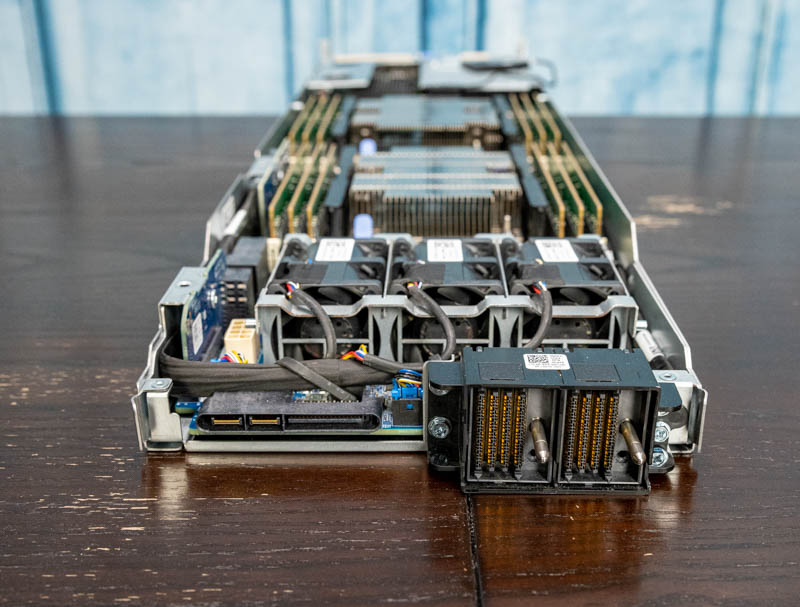

The rear of the nodes has our I/O. We have dual USB 3.0 ports, a DisplayPort output, and a management port for iDRAC duties. Along the bottom we can also see a dual SFP+ Intel X710 10GbE NIC in the mezzanine card slot.

The top part of the rear I/O has one slot for storage and one for networking. One can see the battery pack on the left side. It normally slots into the airflow guide between the CPUs, which we have removed for photos. The right side is a low-profile slot for additional expansion.

Each CPU socket is limited to a 150W TDP, which may be why we do not see the EPYC solution here. AMD CPUs tend to have more I/O and higher core counts, which also means more power consumption. We will, however, note that this platform utilizes 8-channel memory and, as we have shown with our Installing a 3rd Generation Intel Xeon Scalable LGA4189 CPU and Cooler piece, Intel’s new Ice Lake Xeon SKUs will utilize a package that is a similar width but about 1.5mm longer than the CPUs in here. It seems like Dell has thought ahead for its common platform here. Still, for a storage server like this, we may never see an update to the Ice Lake Xeon / Milan EPYC generation, or it may be delayed. These types of systems tend to be less CPU intensive.

The half-width node has its own fans. That is important because otherwise, the fans would be in the middle of the chassis and hard to service. We can also see more high-density connectors.

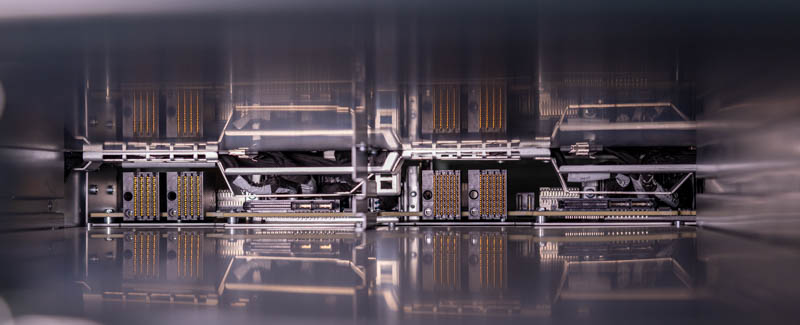

Here is a look inside the chassis to where these connectors mate. One quick note here is that when the two controller nodes are installed, we saw two 50-bay nodes. We were told this is used to double the node count relative to the chassis count, which can be advantageous in scale-out storage deployments.

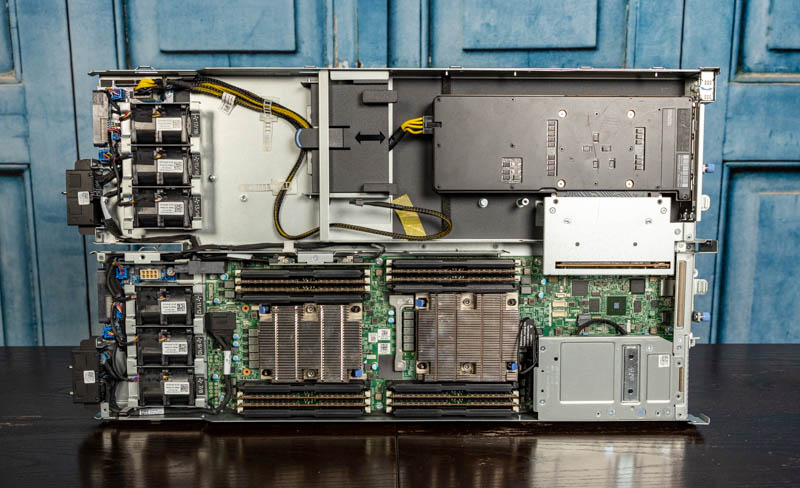

The half-width compute node is fairly standard, but there is a lot more going on here. The Dell EMC engineers made a configurable platform. Here, we have a GPU compute node with an NVIDIA Tesla V100 dual-width GPU installed.

While the dual Xeon compute node is still present, on the dual-sled design the low-profile PCIe riser’s connectivity is exposed to the other side. Here we get a riser that supports a double-width GPU.

That side of the chassis also provides GPU power and its own cooling. We are not showing ducting since this is already a large piece, but Dell is ducting airflow from the fans to the GPU.

This side can also house four NVIDIA T4s. For those who prefer smaller and lower-power accelerators, we get the ability to run up to four low-profile cards. In this shot, we left the airflow guides on both the top and bottom. A quick note is that one can see the battery module in its proper place, lodged in the airflow guide between the two CPU heatsinks.

What is not pictured is that Dell has a DSS FE1 card. This card has a PCIe switch chip and small risers that each hold up to four M.2 drives. That means the FE1 can have up to 20x M.2 SSDs on a single card.

In summary, the Dell EMC engineers did not create the highest-density storage platform on the market. Dell can qualify it by saying 5U and by citing a “standard” rack dimension of sub-36 inches. In terms of density per U, the ruler SSD units are at 1PB/U and there are 4U systems with more than 100 drives out there that are deeper, but denser per U.
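To put the per-U comparison in concrete terms, here is a minimal Python sketch. The 100 bays in 5U come from this system. The 102-bay 4U chassis is only an illustrative assumption for a "more than 100 drives" design, and the function name is ours.

```python
def drives_per_u(bays: int, rack_units: int) -> float:
    """Return drive bays per rack unit for a chassis."""
    if rack_units <= 0:
        raise ValueError("rack_units must be positive")
    return bays / rack_units


# XE7100: 100 bays in 5U (from the review).
xe7100 = drives_per_u(100, 5)

# Hypothetical deeper 4U chassis with 102 bays (illustrative assumption).
dense_4u = drives_per_u(102, 4)

print(f"XE7100: {xe7100:.1f} drives/U")
print(f"Hypothetical 4U: {dense_4u:.1f} drives/U")
```

This only counts bays per rack unit. It says nothing about chassis depth, which is the trade-off the denser 4U designs make.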

What the engineers created instead was a 5U 100x bay storage platform with a surprising amount of customizability. One can not only get single or dual compute nodes, but also has options to add GPUs and storage in a number of configurations. That practically allows architects to design solutions where AI training/inferencing happens on the storage node itself, instead of the storage node being only the source of data that must be pushed over the network, processed on another GPU server, and then potentially returned. Keeping everything local improves latency and also lowers the networking and data center power requirements. That is the true innovation and benefit of what the Dell EMC engineers accomplished.

Next, we are going to look at the management and performance before getting to our final words.

Just out of curiosity, when a drive fails, what sort of information are you given regarding the location of said drive? Does it have LEDs on the drive trays, or are you told “row x, column y” or ???

On the PCB backplane and on the drive trays you can see the infrastructure for two status LEDs per drive. The system is also laid out to have all of the drive slots numbered and documented.

Unless you are into Ceph or the like and you are okay with huge failure domains (the only way to make Ceph economical for capacity), I just don’t understand this product. No dual path (HA) makes it a no go. Perhaps there are places where a PB+ outage is no biggie.

Not sure what software you will run on this. Most clustered file systems or object storage systems that I know recommend going for smaller nodes, primarily for rebuild times. One vendor that I know recommends 10 – 15 drives, and drives with no more than 8TB.

I enjoyed your review of this system, definitely cool features. I love the flexibility with the GPU/SSD options and, as you mentioned, this would not be an individual setup but rather integrated in a clustered setup. I’d also imagine it has the typical iDRAC functionality, and all the Dell basics for monitoring.

After all the fun putting it together, do you get to keep it for a while and play with or once you reviewed it….just pack it up and ship it out?

Hans Henrik Hape, even with Ceph this is too big: losing 1.2 PB because one server fails, rebalancing, network and CPU load on nodes. It’s just a bad idea, not from a data loss point of view but from maintaining operations during a failure.

Great job STH, Patrick, you never stop surprising us with these kinds of reviews. Since you configured the system as a raw 1.2PB, can you check the internal bandwidth available using something like

iozone -t 1 -i 0 -i 1 -r 1M -s 1024G -+n

I would love to know, since with no parity HDD, is it possible the bandwidth will be 250MBps x 100 HDDs, i.e. 25GBps? I’m sure there will be bottlenecks, maybe the PCIe bus speed for the HBA controller or a CPU limitation. But since you have it, it would be nice to know.

Again thanks for the great reviews

“250MBpsx100HDD i.e. 25GBps” Any 7200 RPM drive is capable of ~140 MB/sec sustained, so 100 of them would be ~14 gigabytes per second… Ergo, a 100 Gbps connection would then propel this server to hypothetical ~10 GB/sec transfers… As it is, 25 Gbps would limit it quite quickly.
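The back-of-the-envelope numbers in this exchange can be reproduced with a short Python sketch. It assumes ideal linear scaling across drives and raw network line rate with no protocol overhead, and it ignores the HBA, PCIe, and CPU limits mentioned above. The function names are ours, and the per-drive rates are simply the two figures quoted in the comments.

```python
def aggregate_hdd_gb_per_s(drives: int, mb_per_s_per_drive: float) -> float:
    """Ideal aggregate sequential throughput in GB/s (decimal units)."""
    return drives * mb_per_s_per_drive / 1000.0


def link_gb_per_s(link_gbps: float) -> float:
    """Raw line rate of a network link in GB/s (8 bits per byte, no overhead)."""
    return link_gbps / 8.0


def bottleneck_gb_per_s(drives: int, mb_per_s_per_drive: float, link_gbps: float) -> float:
    """The slower of the disks and the network link bounds external transfers."""
    return min(aggregate_hdd_gb_per_s(drives, mb_per_s_per_drive),
               link_gb_per_s(link_gbps))


# The two per-drive estimates from the comments: 250 MB/s and ~140 MB/s.
for rate in (250, 140):
    disks = aggregate_hdd_gb_per_s(100, rate)
    print(f"{rate} MB/s x 100 drives = {disks:.1f} GB/s from disks")

# Raw line rates for the two link speeds mentioned.
for gbps in (25, 100):
    print(f"{gbps} Gbps link = {link_gb_per_s(gbps):.3f} GB/s raw")
```

At raw line rate a 100 Gbps link carries 12.5 GB/s, so the ~10 GB/sec figure above is plausible once protocol overhead is accounted for, while a 25 Gbps link tops out near 3 GB/s regardless of which per-drive estimate is used.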

A great review of this awesome server! I wish I had a few hundred K$ to spare, and, needed a couple of them!

Happy wise (wo)men who’ve got to play with such grownup toys.

There is a typo in the conclusion section compeition -> competition.

But otherwise a really cool review of a nice “toy” I sadly will never get to play with.

>AMD CPUs tend to have more I/O and core count which also means more power consumption

Ohrealy?

Perfect for my budget conscious Plex

James – if you look at Milan as an example, AMD does not even offer a sub 155W TDP CPU in the EPYC 7003 series. We discussed in that launch review why and it comes down to the I/O die using so much power.

While nothing was mentioned about prices, Dell typically charge at least 100% premium for the hard drives with their logo (all made NOT by Dell) despite having much shorter than normal 5yr warranty – so combination of 1U 2 CPU (AMD EPYC) server and 102-106 4U SAS JBOD from WD or Seagate will be MUCH cheaper, faster (in a right configuration) and much easier to upgrade if needed (the server part which improves faster than SAS HDD).

Color me unimpressed by this product despite impressive engineering.

Definitely getting one for my homelab

I just managed to purchase the 20 M.2 DSS FE1 card for my Dell R730xd. I did not do too much testing, but it works (both in ESXi and TrueNAS). And the cost for this card is an absolute bargain. Feel free to contact me for the card.