ASRock Rack 2U4G-ROME/2T Internal Hardware Overview

We are going to start our internal overview with the front and work our way back. We noted in our external overview that the front 2U faceplate was mostly dedicated to airflow. That is because three accelerators are in the front area of the system.

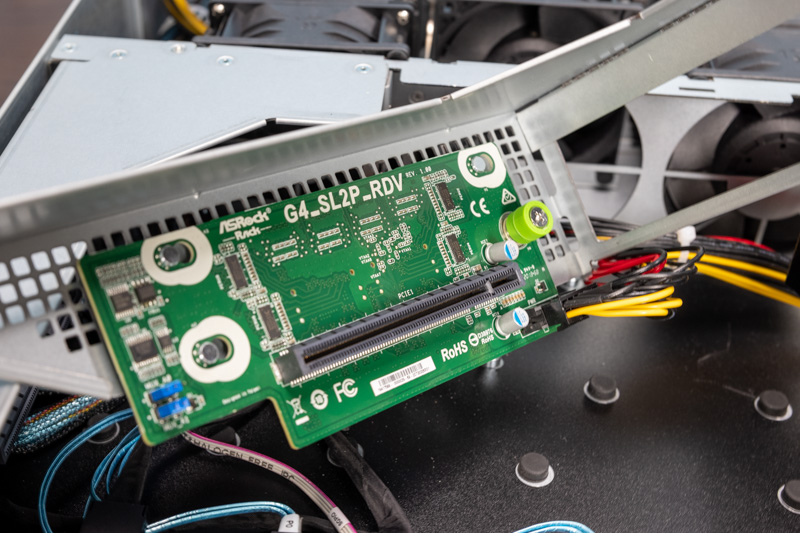

In this front area, there are three PCIe Gen4 x16 risers.

Each riser is removable for easy access to the GPUs/accelerators installed in it. Removing a riser requires undoing two screws. We wish that in a future revision ASRock Rack finds a way to make this tool-less for easier service. In comparison, the Dell EMC PowerEdge R750xa requires a lot more work to get to the GPUs, but that work can be done without a screwdriver. ASRock Rack’s implementation was faster to service, but this is a small area that can be improved.

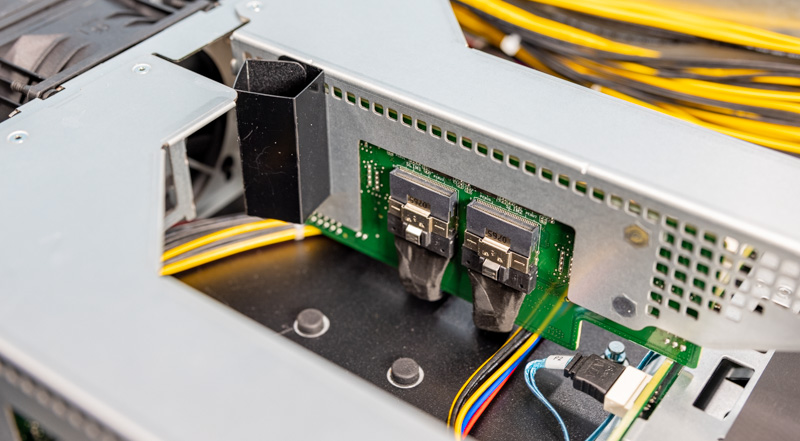

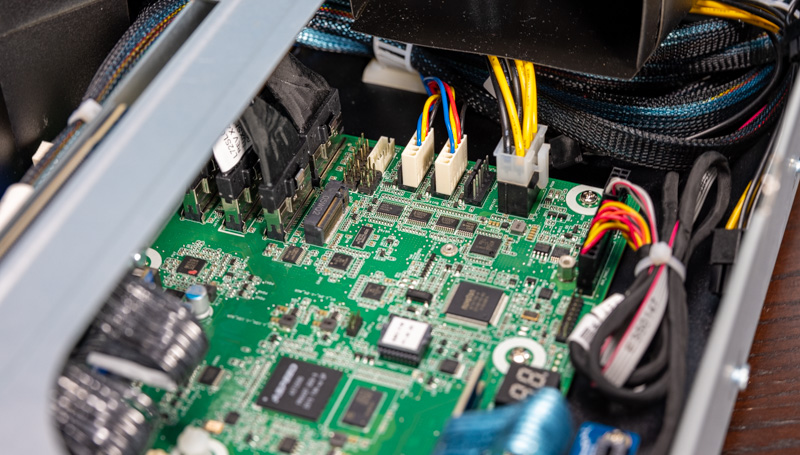

Since these are front-mounted PCIe risers, PCIe must be brought to the risers using cables. Each riser has two cabled connections, each bringing in eight PCIe Gen4 lanes (x8), for a total of 16 lanes per riser.
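As a quick sanity check on the riser lane math, here is a minimal Python sketch, assuming two x8 PCIe Gen4 cables per riser and three front risers. The bandwidth figure uses the PCIe 4.0 signaling rate of 16 GT/s per lane with 128b/130b encoding and ignores packet and protocol overhead, so real-world throughput will be lower. The helper names are hypothetical, for illustration only.

```python
# Lane and bandwidth arithmetic for the front risers.
# Assumptions (illustrative): 2 cables x 8 lanes per riser, 3 front risers.

GEN4_GT_PER_S = 16.0   # PCIe 4.0 raw signaling rate per lane (GT/s)
ENCODING = 128 / 130   # 128b/130b line encoding used by PCIe 3.0 and later

def lanes_per_riser(cables: int = 2, lanes_per_cable: int = 8) -> int:
    """Total PCIe lanes delivered to one riser by its cabled connections."""
    return cables * lanes_per_cable

def gen4_bandwidth_gb_s(lanes: int) -> float:
    """Approximate one-direction PCIe 4.0 bandwidth in GB/s (no protocol overhead)."""
    return lanes * GEN4_GT_PER_S * ENCODING / 8  # divide by 8: bits -> bytes

riser_lanes = lanes_per_riser()
front_lanes = 3 * riser_lanes  # three front risers
print(riser_lanes, front_lanes, round(gen4_bandwidth_gb_s(riser_lanes), 1))
```

In this simplified model, each front riser gets a full x16 link worth roughly 31.5 GB/s per direction, and the three front risers together consume 48 of the CPU's PCIe lanes.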

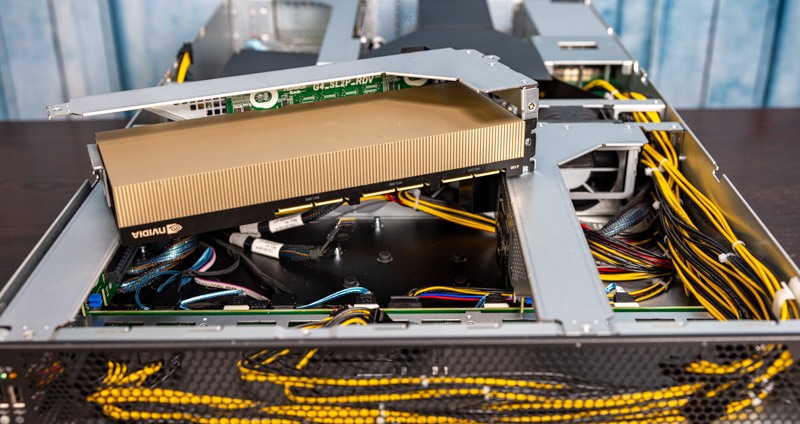

Here is an NVIDIA A100 installed in a riser. With all four GPUs installed, it was harder to see the server itself, which is the focus of this review, so hopefully this helps our readers understand how the server is built up.

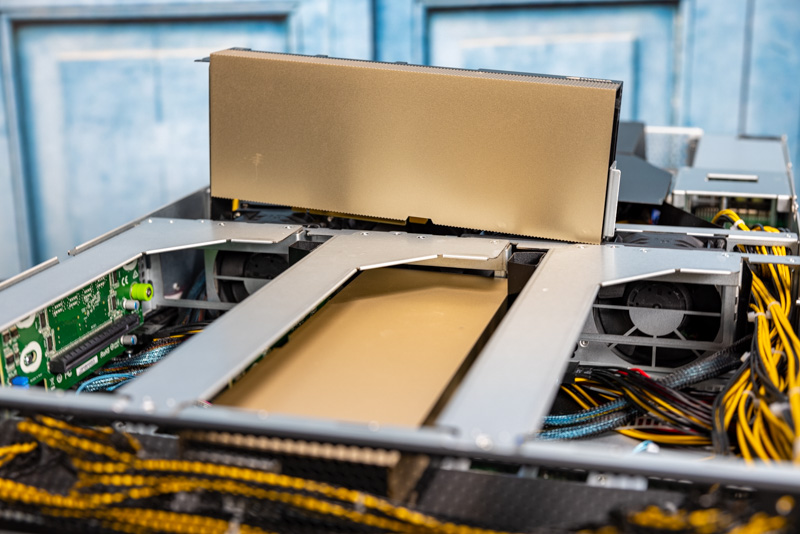

We have that riser back in the server with empty risers on either side. Here is another A100 for size comparison. Something that was immediately apparent is that the risers leave a lot of room around a standard GPU. It is almost as if they were designed to potentially take larger accelerators, or accelerators whose PCIe power input sits atop the card rather than on its front edge.

Even though we have a double-width GPU installed, there is a lot of room atop the A100 in this design.

With three GPUs and five 2.5″ bays up front, there is a major need to get power to the front of the system. ASRock Rack has a cable channel along the side of the chassis that carries a large number of power cables. This cabled design is not as clean as designs using PCB power distribution; however, it makes for straightforward service. It is also likely less expensive than designing an additional power distribution PCB just to get shorter cables, which fits the cost optimization theme of this system.

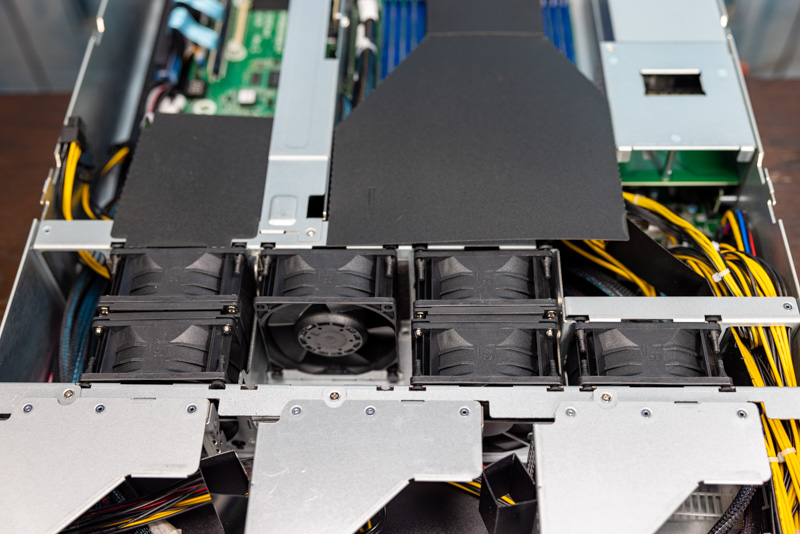

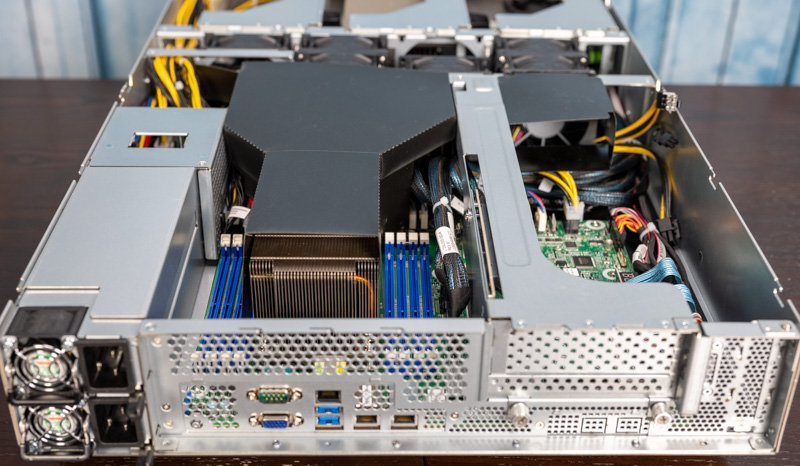

The fan situation is interesting. There are six chassis fans, but they are in a seemingly strange 2-1-2-1 configuration. During our testing, this managed to keep everything cool; however, the GPU on the right side has only a single chassis fan. Fans are reliable, but a fan failure there would be troublesome for that accelerator.

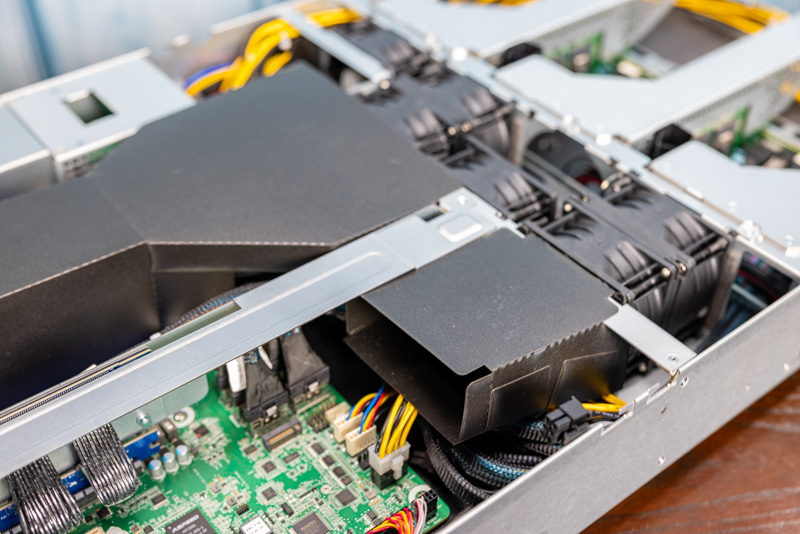

Something longtime readers know I am not a fan of is flexible airflow guides. In 2U 4-node and 1U servers, they are sometimes needed, but in 2U servers I tend to prefer rigid guides. Here ASRock Rack has guides to direct airflow over the CPU and to the rear GPU.

In the external overview, we mentioned the rear accelerator expansion slot. Like the low-profile x16 slot, it uses a riser setup, and again there is a lot of room around the accelerator.

The airflow guide directs air through this GPU from the two chassis fans on this side. ASRock Rack also has GPU power connectors available here.

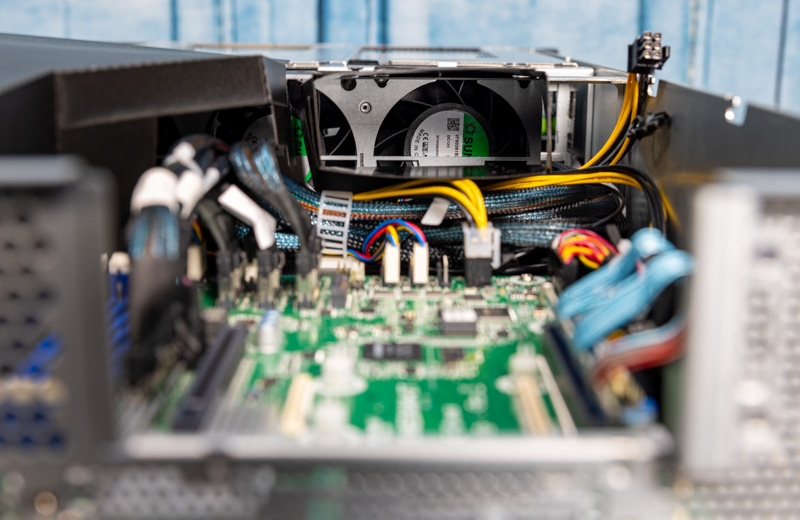

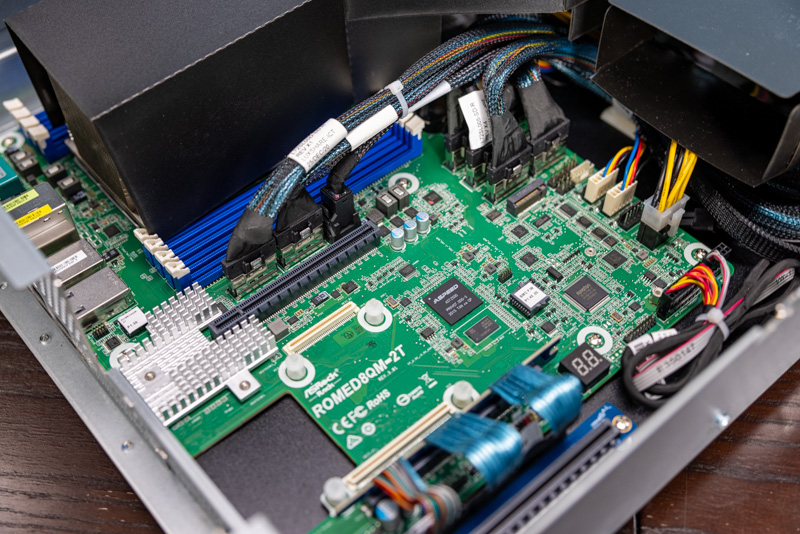

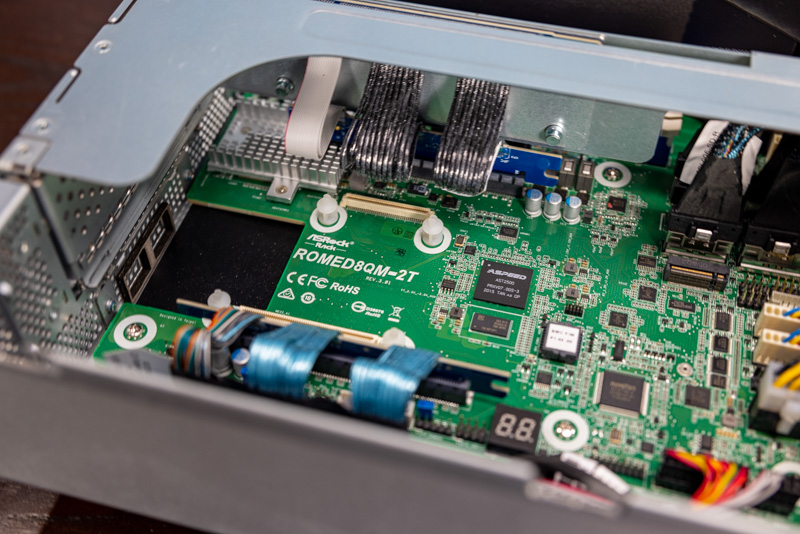

The motherboard here is the ASRock Rack ROMED8QM-2T. This is a standard ATX motherboard, and it is quite a feat that it can power a system of this complexity. Aside from the rear risers, one can see cables for the other PCIe connections coming from the center area of the motherboard, used to bring PCIe Gen4 connectivity to the GPUs/accelerators and NVMe drives.

In these photos, the small silver heatsink covers the Intel X550 dual 10GBase-T NIC. If this motherboard looks familiar to STH readers, that is because it is the same one used in the ASRock Rack 1U10E-ROME/2T we reviewed. Using the same motherboard across systems helps drive volumes up, which decreases cost and increases quality.

One other feature we wanted to mention is the internal M.2 drive slot. Despite all of the cabled connectivity, this slot and the OCP NIC 2.0 slots provide the on-motherboard PCIe connectivity for devices in this server.

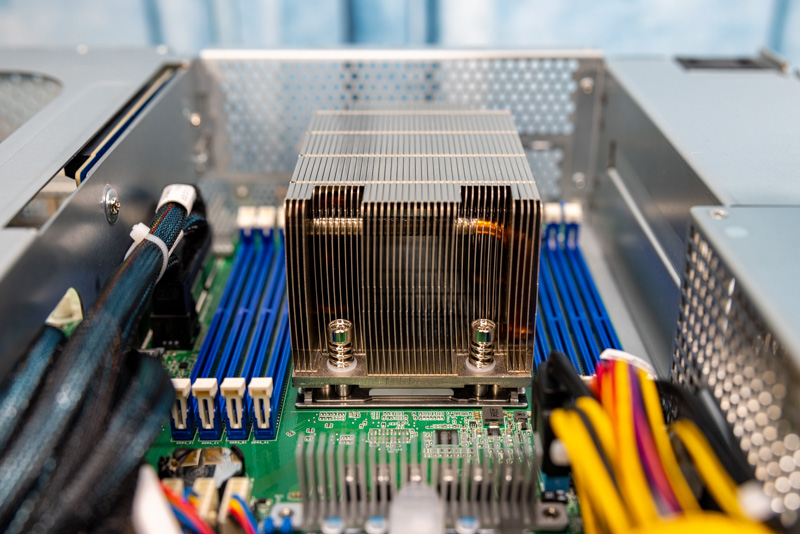

The system is powered by a single AMD EPYC 7003 (Milan) or EPYC 7002 (Rome) processor; here we have a Milan CPU installed. The system supports 8-channel DDR4-3200, but it gives up two DIMMs per channel (2DPC) support, so one gets only half of the maximum memory capacity of an EPYC CPU. That is a reasonable trade-off for this type of platform.
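To put the 1DPC trade-off in numbers, the Python sketch below assumes 256GB DIMMs as the largest module size (consistent with the 4TB-per-socket maximum AMD quotes for these CPUs at 2DPC) and DDR4-3200 on all eight channels. The constants and helper names are illustrative assumptions, not ASRock Rack specifications.

```python
# Memory capacity and theoretical peak bandwidth for one EPYC socket.
# Assumptions (illustrative): 8 channels, 256GB max DIMM, DDR4-3200.

CHANNELS = 8
MAX_DIMM_GB = 256          # assumed largest supported DDR4 module
DDR4_3200_MT_S = 3200      # mega-transfers per second
BYTES_PER_TRANSFER = 8     # 64-bit data bus per channel

def max_capacity_gb(dimms_per_channel: int) -> int:
    """Maximum memory capacity in GB for a given DIMMs-per-channel population."""
    return CHANNELS * dimms_per_channel * MAX_DIMM_GB

def peak_bandwidth_gb_s(mt_s: int = DDR4_3200_MT_S) -> float:
    """Theoretical peak bandwidth in GB/s with every channel populated."""
    return CHANNELS * mt_s * BYTES_PER_TRANSFER / 1000

print(max_capacity_gb(1), max_capacity_gb(2), peak_bandwidth_gb_s())
```

In this simple model, peak bandwidth depends only on the channel count and transfer rate, so running 1DPC gives up capacity (2TB instead of 4TB) but not theoretical peak bandwidth.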

We mentioned the Dell EMC PowerEdge R750xa, and it is a great point of contrast. To power four accelerators, Dell EMC uses two 3rd generation Intel Xeon “Ice Lake” processors. Here, ASRock Rack uses a single CPU on a standard ATX motherboard, which lowers costs.
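For a rough sense of why one EPYC can stand in for two Xeons here, the sketch below compares platform PCIe Gen4 lane counts: 128 lanes for a single-socket EPYC 7002/7003 and 64 lanes per 3rd generation Xeon “Ice Lake” socket. It assumes all four GPU slots (three front risers plus the rear accelerator slot) are x16, and it does not model lanes used by the NVMe bays, M.2, OCP NIC, or onboard NIC.

```python
# Rough PCIe Gen4 lane comparison: single-socket EPYC vs dual-socket Ice Lake.
# Assumptions (illustrative): four x16 GPU slots; other devices not modeled.

PLATFORM_LANES = {
    "1x AMD EPYC 7002/7003": 1 * 128,   # 128 lanes per socket
    "2x Intel Xeon Ice Lake": 2 * 64,   # 64 lanes per socket
}

GPU_SLOTS = 4        # three front risers + one rear accelerator slot (assumed)
LANES_PER_GPU = 16   # assumed x16 electrical for every GPU slot

def lanes_left_after_gpus(total_lanes: int) -> int:
    """Lanes remaining for storage, NICs, and other devices after the GPUs."""
    return total_lanes - GPU_SLOTS * LANES_PER_GPU

for platform, lanes in PLATFORM_LANES.items():
    print(f"{platform}: {lanes} lanes total, {lanes_left_after_gpus(lanes)} left after GPUs")
```

Both platforms land at the same 128-lane total in this simplified count; the single-socket approach reaches it with one CPU on one standard ATX board.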

Something one will have noticed throughout is that the airflow guides were not seated perfectly in some of the photos. When setting the system up for testing, then tearing it down for photos, a key challenge was getting these guides seated properly. It was not necessarily difficult, but with all of the cables, ensuring everything was correctly aligned took effort. The system was fast to access, but switching to a rigid plastic design would make it significantly faster to service. It is a small detail, but one we notice from reviewing so many servers.

Next, we are going to get into operating the server.
