Power Consumption

The redundant power supplies in the server are 2kW 80Plus Platinum-rated units. Most power supplies we see in this class of server are now 80Plus Platinum-rated; almost none are 80Plus Gold, and a few are Titanium-rated.

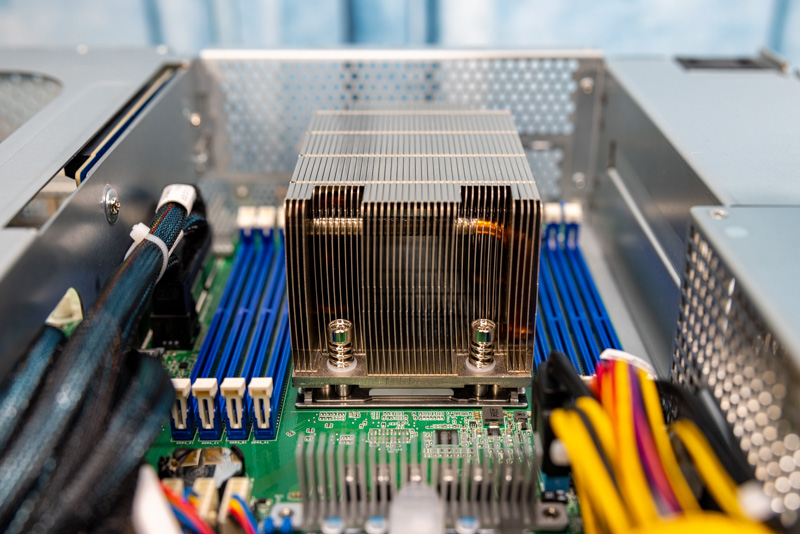

We wanted to get a sense of how much power the system uses with an AMD EPYC 7443 CPU and 256GB of memory, which we imagine will be a common configuration. Idle power was around 0.44kW. Maximum power consumption was around 1.5kW, but most of our workloads kept the system at or below 1.2kW because not all parts of the system were usually stressed at the same time. This is a fairly solid power savings over dual-socket systems, since a second modern CPU with 8x DIMMs for full memory bandwidth adds several hundred watts of power consumption to a platform.

Note that these results were taken using a 208V Schneider Electric / APC PDU at 17.5C and 71% RH. During the testing window shown here, temperature varied by +/- 0.3C and humidity by +/- 2% RH.
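To put these numbers in context for capacity planning, here is a quick back-of-the-envelope sketch. It uses the rounded figures above and assumes things we did not measure: 1+1 PSU redundancy (so a single 2kW unit must be able to carry the whole load), single-phase 208V, a power factor of 1.0, and a node running 24x365 at a constant load.

```python
# Back-of-the-envelope power math from the rounded measurements above.
# Assumptions (not measured): 1+1 redundant PSUs, so one 2kW unit must be
# able to carry the whole load; single-phase 208V; power factor of 1.0;
# and the node running 24x365 at a constant load.
PSU_RATING_W = 2000
VOLTS = 208.0
POWER_FACTOR = 1.0
HOURS_PER_YEAR = 24 * 365

MEASURED_W = {"idle": 440, "typical": 1200, "max": 1500}


def psu_load_fraction(load_w: float) -> float:
    """Share of one PSU's rating in use if it carries the full load alone.

    The PDU measures AC input, which includes conversion losses, so this
    slightly overstates the PSU's actual DC output load.
    """
    return load_w / PSU_RATING_W


def amps(load_w: float) -> float:
    """Current on the 208V feed: I = P / (V * PF)."""
    return load_w / (VOLTS * POWER_FACTOR)


def annual_kwh(load_w: float) -> float:
    """Energy used if the load were held constant for a full year."""
    return load_w * HOURS_PER_YEAR / 1000


for name, watts in MEASURED_W.items():
    print(f"{name:>7}: {psu_load_fraction(watts):.0%} of one PSU, "
          f"{amps(watts):.1f} A, {annual_kwh(watts):,.0f} kWh/year")
```

Under these assumptions, the measured maximum would put a single PSU at about 75% of its rating, with the node drawing roughly 7.2A at 208V.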

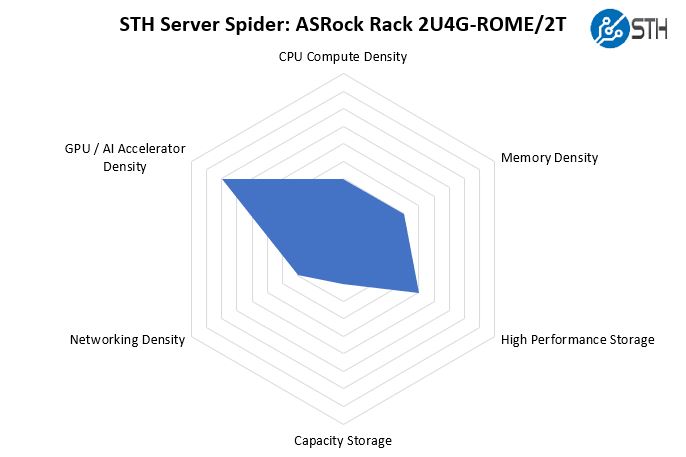

STH Server Spider: ASRock Rack 2U4G-ROME/2T

In the second half of 2018, we introduced the STH Server Spider as a quick reference to where a server system’s aptitude lies. Our goal is to give a quick visual depiction of the types of parameters a server is targeted at.

The main features of this server are the single AMD EPYC CPU and the four double-width accelerator slots, and the design is tightly focused on that combination. ASRock could have made a larger chassis with a second CPU, but the focus here was on cost optimization, which makes sense: a single CPU with four accelerators lowers the overall cost of a GPU compute node by thousands of dollars.

Final Words

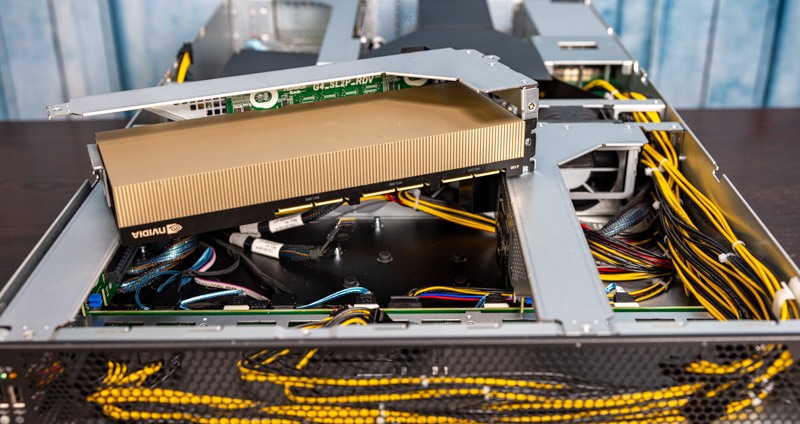

Overall, this is a very interesting system. It is certainly not one that, next to other modern servers, leaves one awestruck at its beauty. Instead, the ASRock Rack 2U4G-ROME/2T is a system built for a specific purpose and designed to excel at it: providing a cost-optimized 2U platform for GPU compute.

There are certainly some aspects we would like to see changed in future versions. For example, it would be interesting if ASRock Rack oriented the GPUs in two stacks of two so that NVLink could be used in higher-end configurations. There also seems to be an opportunity to add fan redundancy in some places, and some of the air baffles could be made from a more rigid material, which would improve the quality feel. On the other hand, the Dell PowerEdge R750xa we are reviewing has what looks like a nicer layout, but the ASRock Rack’s GPUs are easier to service and clearly designed with more clearance. Dell touts its cooling, yet its system is costlier and performs effectively the same with four top-end NVIDIA A100 GPUs running independently (no NVLink).

There is likely a segment of the market focused on cost savings, and in many configurations this ASRock Rack and AMD EPYC platform can save about as much as another accelerator costs. The savings come both from the lower initial purchase price of a lower-cost system design and from ongoing operating costs. Dropping to a single-CPU, four-accelerator design also reduces power consumption, which lowers operating costs and means one can fit more GPUs in a power-limited rack. That makes it more attractive for those trying to maximize the number of accelerators within a given budget.
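The rack-density point is easiest to see with a simple power budget. In this illustrative sketch, only the ~1.2kW typical figure comes from our measurements; the 15kW rack budget, the 42U of usable space, and the extra 300W we use to model "several hundred watts" for a hypothetical dual-socket node at the same GPU load are all assumptions.

```python
# Illustrative only: how a lower per-node draw translates into GPUs per rack.
# Assumptions: a hypothetical 15kW rack power budget, 42U of usable space,
# 2U per node, four GPUs per node, and a hypothetical dual-socket node that
# draws an extra 300W ("several hundred watts") at the same GPU load.
RACK_BUDGET_W = 15_000
RACK_UNITS = 42
NODE_HEIGHT_U = 2
GPUS_PER_NODE = 4


def gpus_per_rack(node_w: float) -> int:
    """Node count is limited by whichever runs out first: power or rack space."""
    by_power = int(RACK_BUDGET_W // node_w)
    by_space = RACK_UNITS // NODE_HEIGHT_U
    return min(by_power, by_space) * GPUS_PER_NODE


single_socket_w = 1200                 # typical load we measured
dual_socket_w = single_socket_w + 300  # hypothetical second CPU plus its DIMMs

print("single-socket:", gpus_per_rack(single_socket_w), "GPUs")
print("dual-socket:  ", gpus_per_rack(dual_socket_w), "GPUs")
```

Under these assumptions, the single-socket node fits 48 GPUs per rack versus 40 for the dual-socket node, because power, not rack space, is the binding limit. A different rack budget changes the absolute numbers but not the direction of the gap.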

We plan to use this chassis for our new AI stations.

Would the front GPU risers provide enough space to also fit a 2.5-slot-wide GPU card with the fans on top, like an RTX3090?

I just like the fact that STH has honest feedback in its reviews.

Thanks, Patrick!

Markus,

I was wondering the same thing as you!

The HH riser rear cutout also looks like it could take two single-width cards.

In fact, I will be surprised if this chassis doesn’t attract a lot of attention from intrepid modders changing the riser slots for interesting configurations.

jetlagged,

I think I have to order one and test it myself.

At the moment we are designing the servers for our new AI GPU cluster, and since it looks like NVIDIA has successfully removed all of the 2-slot-wide turbo versions of the RTX3090 with the radial blower design from the supply chain, it has become very difficult to build affordable, space-saving servers with lots of “inexpensive” consumer GPU cards.

Neither the ASRock website nor this review specifies the type of NVMe front drive bays supplied or the PCIe generation supported. This is important information for making a sourcing decision. AFAIK, there are only M.2, M.3, U.2, and U.3 (backwards compatible with U.2). Most other manufacturers colour code their NVMe bays so that you can quickly identify which generation and interface are supported. What does ASRock support with their green NVMe bays?