When we started the review process for the ASRock Rack 1U10E-ROME/2T we were immediately surprised. The system is highly focused on providing a solid AMD EPYC 7002 series single-socket platform in a cost-optimized manner. Our initial thought was that this is a minimalistic server, but as we started to work through the system, we realized it has a lot more to offer than we expected, including a small but important undocumented feature.

ASRock Rack 1U10E-ROME/2T Hardware Overview

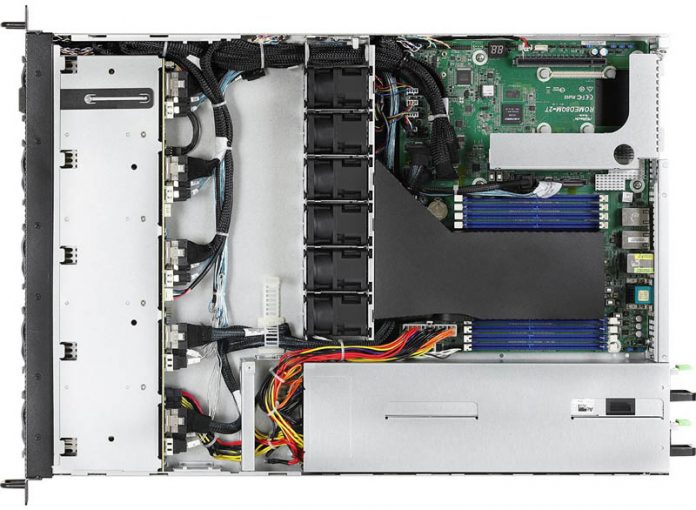

Since this is a more complex system, we are first going to look at the system chassis. We are then going to focus our discussion on the design of the internals.

ASRock Rack 1U10E-ROME/2T External Overview

First off, the 1U10E-ROME/2T is noticeably shorter than many competitive servers. It measures 625mm deep or 24.6 inches. Many of the servers we review are over 725mm so this was a noticeably smaller package that will fit in more racks.

Looking at the front of the system, we can see a fairly standard 1U solution. There is a USB 3.0 port, basic power and reset buttons along with status LEDs as one would expect. We get 10x 2.5″ bays and they offer a few surprises for us.

First, the drive bays accept SATA or NVMe, so one can use lower-power, lower-cost drives for boot media, then high-performance NVMe for primary storage. The second pleasant surprise was the speed.

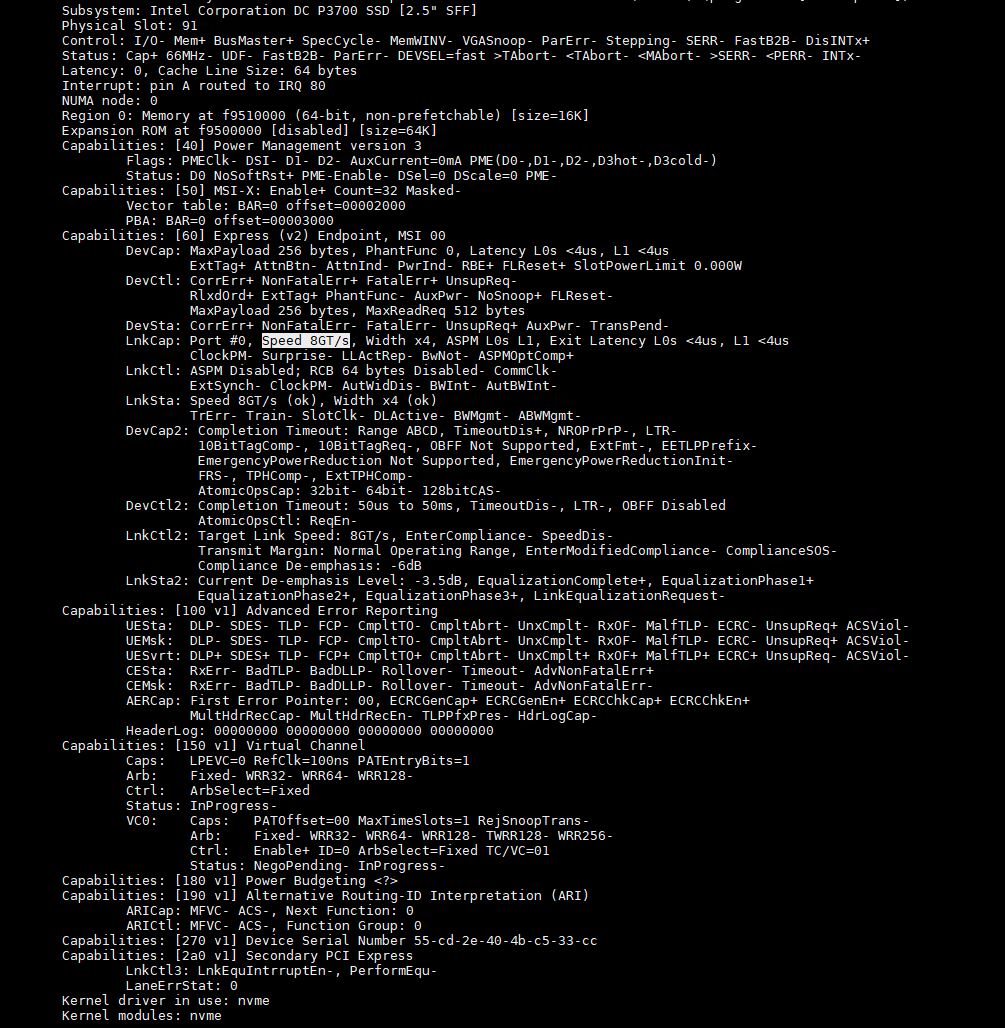

Here is an Intel DC P3700 SSD in the system. This is a PCIe Gen3 device so you will see multiple references here to 8GT/s in the link speed. Many AMD EPYC 7002 systems only support PCIe Gen3 speeds on their U.2 drive bays so this is what we expected.

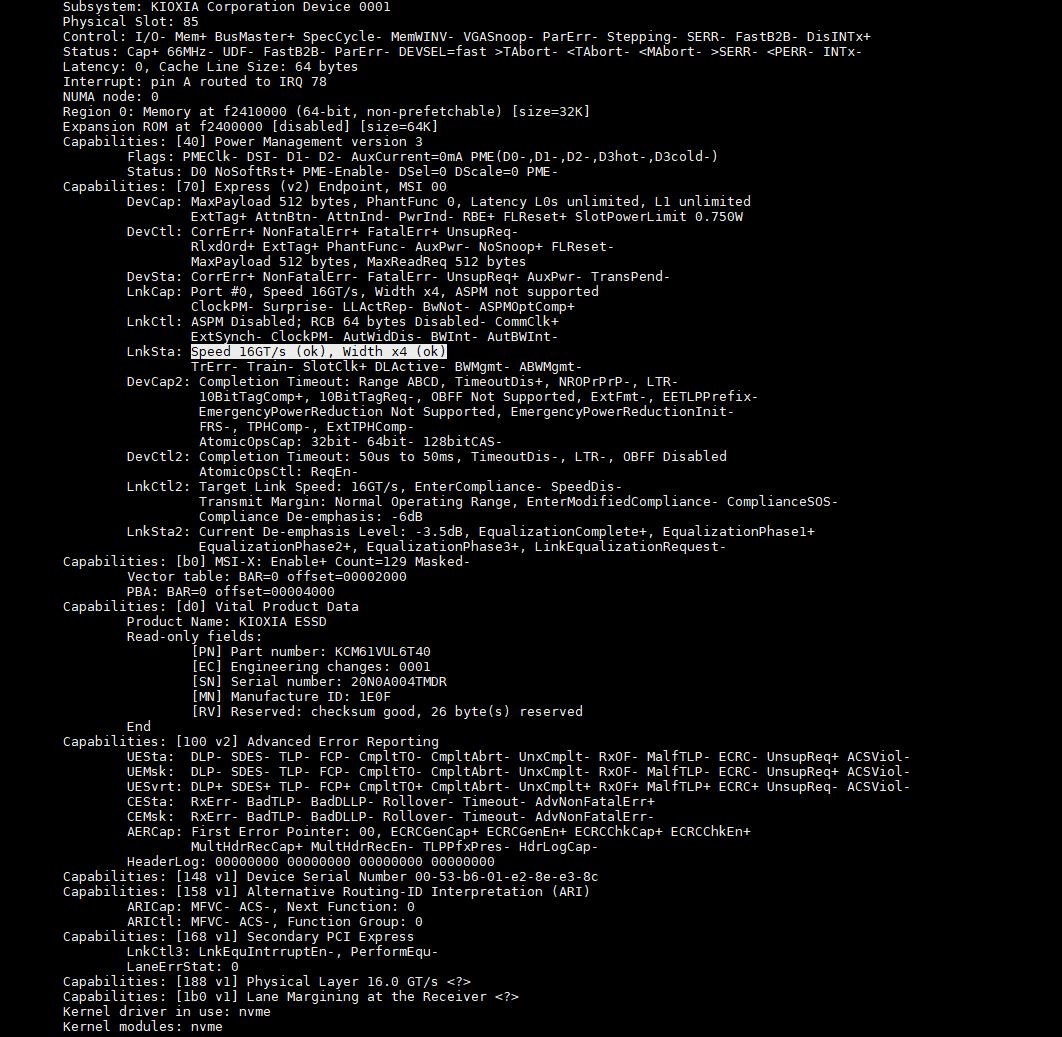

We tried the Kioxia CM6 that we reviewed to see if the system would link at PCIe Gen4 speeds.

We were pleasantly surprised that this system linked without issue at PCIe Gen4 16GT/s speeds. When we put a load on the drive, we saw performance in line with our initial benchmarks of the drive, validating that it was indeed working at PCIe Gen4 speeds. This is an absolutely great feature.
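For readers who want to repeat this check on their own unit, here is a minimal sketch of reading the negotiated link from Linux sysfs. It assumes a Linux host that exposes the standard `current_link_speed` and `current_link_width` attributes for PCI devices. The function names and the example device address are ours for illustration, not something from ASRock Rack.

```python
import re
from pathlib import Path

# Per-lane transfer rate (GT/s) mapped to PCIe generation.
GEN_BY_RATE = {2.5: 1, 5.0: 2, 8.0: 3, 16.0: 4, 32.0: 5}


def parse_link_speed(text):
    """Return (rate_gt_s, generation) from a string such as '16.0 GT/s PCIe'."""
    match = re.search(r"(\d+(?:\.\d+)?)\s*GT/s", text)
    if match is None:
        raise ValueError(f"unrecognized link speed: {text!r}")
    rate = float(match.group(1))
    # Generation is None if the rate is not in the table above.
    return rate, GEN_BY_RATE.get(rate)


def read_link(bdf, sysfs_root="/sys/bus/pci/devices"):
    """Read the negotiated speed and width for one device, e.g. '0000:41:00.0'."""
    dev = Path(sysfs_root) / bdf
    speed = (dev / "current_link_speed").read_text().strip()
    width = (dev / "current_link_width").read_text().strip()
    rate, gen = parse_link_speed(speed)
    return {"rate_gt_s": rate, "gen": gen, "width": int(width)}
```

Calling `read_link` with the address of the NVMe drive (a hypothetical `0000:41:00.0` here; yours will differ) should report generation 3 for a drive like the P3700 and generation 4 for a drive like the CM6 if the bay negotiates as it did for us.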

We are going to note that while this worked, the spec sheet online says “10 Bay 2.5″ SATA (6Gb/s) or NVMe (8Gb/s) Drives.” If that is meant to be 8GB/s, then that likely means PCIe Gen4, since a PCIe Gen4 x4 link carries roughly 8GB/s while a Gen3 x4 link carries about half that. Still, it may be worth validating with ASRock Rack if you plan to use PCIe Gen4 NVMe SSDs.
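To show why an 8GB/s figure would point to Gen4, here is a rough worked calculation. It assumes each bay is wired as an x4 link, which is typical for U.2 but is our assumption rather than something the spec sheet states. It counts only the 128b/130b line encoding, so real drive throughput will be somewhat lower.

```python
# 128b/130b line encoding is used by PCIe Gen3 and later.
ENCODING = 128 / 130


def link_bandwidth_gb_s(rate_gt_s, lanes=4):
    """Approximate one-direction bandwidth in GB/s, before protocol overhead.

    lanes=4 is an assumed U.2 link width, not a figure from the spec sheet.
    """
    return rate_gt_s * lanes * ENCODING / 8


gen3 = link_bandwidth_gb_s(8.0)   # PCIe Gen3 runs at 8 GT/s per lane
gen4 = link_bandwidth_gb_s(16.0)  # PCIe Gen4 runs at 16 GT/s per lane

print(f"Gen3 x4: {gen3:.2f} GB/s")
print(f"Gen4 x4: {gen4:.2f} GB/s")
```

Under those assumptions a Gen3 x4 link comes out just under 4GB/s and a Gen4 x4 link just under 8GB/s, so "8GB/s" on a spec sheet only makes sense for Gen4.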

Moving to the rear of the system, we can see a relatively simple layout. Although the 10x SATA and PCIe Gen4 NVMe SSD bays are a decidedly higher-end feature, this is actually a relatively cost-optimized platform. We have two 750W 80Plus Platinum power supplies on one side, and two expansion slots on the other. Some higher-end (and more costly) 1U AMD EPYC servers have 3 PCIe expansion slots at the rear of the chassis, but here we have 1-2.

The rear I/O shows legacy serial and VGA ports. There are also two USB 3.2 Gen1 ports on the rear of the system. We also see a standard IPMI management port just above the USB ports.

The other, perhaps surprising, feature in this system is the networking. One may assume that the onboard RJ45 networking ports are 1GbE, but instead, they are 10Gbase-T ports. The dual 10GbE ports are powered by an Intel X550 NIC. Although this is a cost-optimized platform, we really like this basic networking. Compared to other servers in this class it is a differentiator. If you are using SFP+ networking in a rack, it is now fairly inexpensive to add SFP+ to 10Gbase-T adapters to switches in order to convert media, making this feature far more useful. We still may prefer dual 10Gb SFP+ ports plus a single 1GbE NIC, but this is a good option as well.

Next, we are going to proceed with our internal overview.

Now just add a low-power Arm CPU and we have our silent server.

What low power Arm CPU? Once they have similar features and performance, they use about the same power as Intel, and AMD’s power use is lower than Intel’s.

Very nice review, very detailed information. Nice to see no vendor lock-in like Dell or HPE.

What is the firmware lifecycle experience like for the server? For example, Dell and HP release firmware updates not just for the BIOS and BMC but for drives, add-in cards, and even power supplies. If you go with ASRock Rack servers, does the owner have to track down all these firmware updates, or are they consolidated and delivered similarly by ASRock Rack?

Typo:

“The redundant power supplies in the server are 0.75W units” (top of the last page)

You meant to write:

“The redundant power supplies in the server are 0.75*K*W units”, or

“The redundant power supplies in the server are *750*W units”

Jared – you are likely tracking them down since this is being sold (as reviewed) as a barebones server. On the other hand, you do not have updates behind a support agreement wall. I know some VARs will also manage this. ASRock Rack is also using fairly standard components. For example, to get NIC drivers one can use the standard Intel drivers. You are 100% right that there is a difference here.

BinkyTo – someone on Twitter got that one too. We are changing servers to kW from W so still working on getting everything on the new kW base. Fixed now.

Hello Patrick,

Long-time reader here. Great review, and tbh it steered me toward buying this.

I wanted to ask you a couple of questions if this is no problem.

1. What kind of Mellanox ConnectX-5 can I install in the mezzanine slot? Only Type 1, or can it support Type 2 with Belly to Belly QSFP28 (MCX546A-EDAN)? I was thinking of utilizing Host Chaining instead of resorting to buying a very expensive 100GbE switch.

2. How noisy is it, and can the fans be controlled effectively through the IPMI GUI (besides IPMI terminal commands)? Is it bearable in an office environment if adjusted?

3. Do you think the Samsung PM1733 is a great fit for it? I am thinking of about 6 to 10 drives in ZFS striped mirrored vdevs (RAID 10), served as NFS over RDMA (RoCE).

Thank you !

Does anyone know where this can be sourced from? NewEgg version only has a single PSU.

I am unable to get it working using a 7003 series CPU. Specifically a 7713P.

I am using the 01.49.00 BMC and 3.03 BIOS that claim to support 7003 CPUs.

The server boots, but some sensors, including the CPU temp, are not recognized by the BMC. The result is that the fans are always on low and the CPU performance is really low (perhaps thermal throttling or something else).

Does anyone have a solution to this?

Hello Joe

Are you still having this issue? I ran into the same kind of trouble and received a 3.09 BIOS which, besides the temp issue, also fixed various others. However, despite the temp now being shown in the BMC, the trouble with the fans not spinning up under load persists, resulting in speed throttling as the temp reaches 95 degrees Celsius.

Regards