Power Consumption

The redundant power supplies in the server are 0.75kW units with an 80Plus Platinum rating. Most power supplies we see in this class of server are now 80Plus Platinum rated. Almost none are 80Plus Gold at this point, and a few are now Titanium rated.

For this review, we wanted to get a sense of how much power the system uses with a 64-core EPYC 7702P CPU and 256GB of memory. We thought it was important to give a range.

- Idle: 0.13kW

- STH 70% CPU Load: 0.35kW

- 100% Load: 0.38kW

- Maximum Recorded: 0.49kW

There is room to expand the configuration further, which would push these numbers higher than what we achieved, but the 750W PSU seems reasonable.
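To put the PSU sizing in context, here is a minimal sketch that expresses each reading above as a fraction of a single 0.75kW power supply. It assumes the worst case for a redundant pair, where one PSU carries the whole load. The function names are our own for illustration. Also note that the readings were taken at the PDU (AC input) while a PSU rating refers to output, so these fractions are only a rough guide.

```python
# Power readings from the list above, in kW (measured at the PDU).
READINGS_KW = {
    "Idle": 0.13,
    "STH 70% CPU Load": 0.35,
    "100% Load": 0.38,
    "Maximum Recorded": 0.49,
}

# Rating of one PSU in the redundant pair, in kW.
PSU_RATING_KW = 0.75


def psu_utilization(load_kw: float, rating_kw: float = PSU_RATING_KW) -> float:
    """Return the load as a fraction of a single PSU's rating."""
    return load_kw / rating_kw


def headroom_kw(load_kw: float, rating_kw: float = PSU_RATING_KW) -> float:
    """Return the remaining capacity, in kW, on a single PSU."""
    return rating_kw - load_kw


if __name__ == "__main__":
    for label, kw in READINGS_KW.items():
        print(
            f"{label}: {kw:.2f} kW, "
            f"{psu_utilization(kw):.0%} of one PSU, "
            f"{headroom_kw(kw):.2f} kW headroom"
        )
```

At the maximum recorded draw, a single PSU would be running at roughly two-thirds of its rating, which leaves some margin for additional drives or add-in cards.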

Note these results were taken using a 208V Schneider Electric / APC PDU at 17.5C and 71% RH. Our testing window shown here had a +/- 0.3C and +/- 2% RH variance.

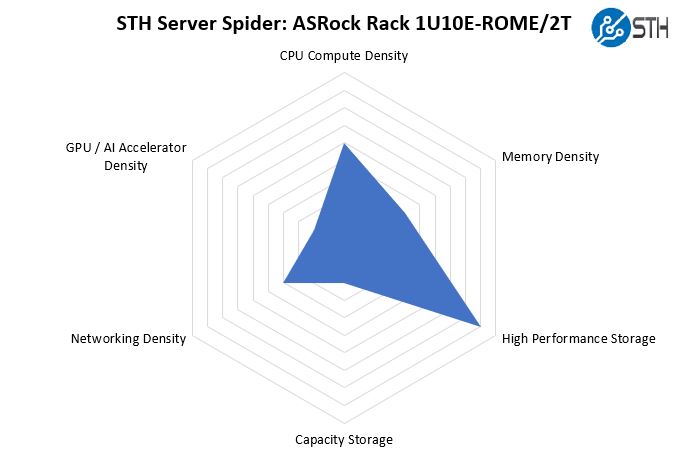

STH Server Spider: ASRock Rack 1U10E-ROME/2T

In the second half of 2018, we introduced the STH Server Spider as a quick reference to where a server system’s aptitude lies. Our goal is to start giving a quick visual depiction of the types of parameters that a server is targeted at.

Although the ten front panel slots are configured for SATA as well, the ability to move to NVMe SSDs across the front means that we get a lot of storage performance. The single AMD EPYC CPU in the 1U10E-ROME/2T means that it is not as dense as dual-socket or 2U4N platforms. Still, it is a nice balance that ASRock Rack managed to achieve with the server.

Final Words

When we first saw the 1U10E-ROME/2T was heading our way, we thought that this was going to be a heavily cost-optimized platform. Against that backdrop, it both met and exceeded our expectations.

There are aspects to the server such as the PCIe riser design and using a standard form factor motherboard that are clear nods to cost optimization. Another example is that there is not a printed service guide sticker on the chassis or inside the cover which is something we are seeing more vendors adopt. The front and rear chassis designs do not have the fancy ornamentation that high-end servers have. OCP NIC 2.0 designs are plentiful and lower-cost, but we know OCP NIC 3.0 will be the future. Using an OCP NIC 2.0 slot here is being done to lower total system costs. Frankly, that is the point of a server like this in the market.

Where the server exceeded our expectations was in terms of features. 10GBase-T networking is something that we really like on the server since it adds more flexibility to deployment. One does not need to add an extra NIC to get 10GbE, which lowers costs. Make no mistake, the X550 is more expensive than putting dual Intel i210 NICs in the system, so this is a performance and feature versus cost trade-off. We also really like the front panel storage configuration and that we saw PCIe Gen4 speeds from the front bays, which can also support SATA. It is nice to not have to re-wire the system to add NVMe or SATA functionality.

Overall, the key impression this left us with is that this would be just about the ideal server for us to use in the STH hosting infrastructure. We tend to use Optane SSDs for databases and NVMe SSDs for other VMs. The ASRock Rack 1U10E-ROME/2T has the right storage configuration while also having the onboard and OCP networking that we would use immediately. Most of our new hosting nodes are AMD EPYC 7002 based with 8x 32GB DIMMs per CPU, so this system profile fits well. Perhaps it is a high compliment from a site like STH that a server we reviewed is one we would be willing to use in our own hosting infrastructure.

Now just a low power consuming ARM cpu and we have our silent server.

What low power Arm CPU? Once they have similar features and performance, they’re using the same power as Intel, and AMD’s is lower than Intel’s.

Very nice review, very detailed information. Nice to see no vendor lock-in like Dell or HPE.

What is the firmware lifecycle experience like for the server? For example, Dell and HP release firmware updates not just for the BIOS and BMC but for drives, add-in cards, and even power supplies. If you go with ASRock Rack servers, does the owner have to track down all these firmware updates, or are they consolidated and delivered similarly by ASRock Rack?

Typo:

“The redundant power supplies in the server are 0.75W units” (top of the last page)

You meant to write:

“The redundant power supplies in the server are 0.75*K*W units”, or

“The redundant power supplies in the server are *750*W units”

Jared – you are likely tracking them down since this is being sold (as reviewed) as a barebones server. On the other hand, you do not have updates behind a support agreement wall. I know some VARs will also manage this. ASRock Rack is also using fairly standard components. For example, to get NIC drivers one can use the standard Intel drivers. You are 100% right that there is a difference here.

BinkyTo – someone on Twitter got that one too. We are changing servers to kW from W so still working on getting everything on the new kW base. Fixed now.

Hello Patrick,

Long-time reader here. Great review, and tbh it steered me toward buying this.

I wanted to ask you a couple of questions if this is no problem.

1. What kind of Mellanox ConnectX-5 can I install in the mezzanine slot? Only Type 1, or can it support Type 2 with belly-to-belly QSFP28 (MCX546A-EDAN)? I was thinking of using Host Chaining instead of buying a very expensive 100GbE switch.

2. How loud is it, and can the fans be controlled effectively through the IPMI GUI (besides IPMI terminal commands)? Is it bearable in an office environment if adjusted?

3. Do you think the Samsung PM1733 is a good fit for it? I am thinking of about 6 to 10 drives in ZFS striped mirrored vdevs (RAID 10), served as NFS over RDMA (RoCE).

Thank you !

Does anyone know where this can be sourced from? The Newegg version only has a single PSU.

I am unable to get it working with a 7003 series CPU, specifically a 7713P.

I am using the 01.49.00 BMC and 3.03 BIOS, which claim to support 7003 CPUs.

The server boots, but some sensors, including the CPU temp, are not recognized by the BMC. The result is that the fans are always on low and CPU performance is really low (perhaps thermal throttling or something else).

Does anyone have a solution to this?

Hello Joe

Are you still having this issue? I ran into the same kind of trouble and received a 3.09 BIOS which, besides the temp issue, also fixed various others. However, despite the temp now being shown in the BMC, the trouble with the fans not spinning up under load persists, resulting in speed throttling as the temp reaches 95 degrees Celsius.

Regards