ASRock Rack 1U10E-ROME/2T Internal Overview

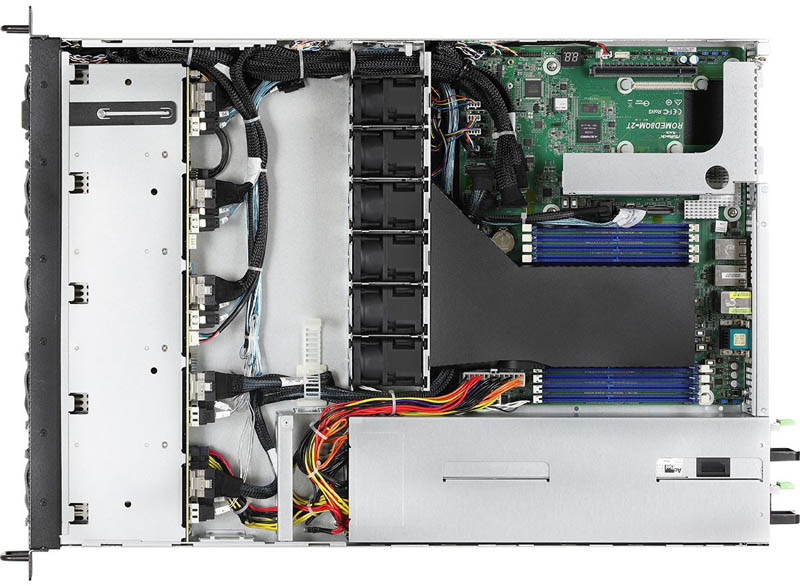

Inside the server, we see a fairly standard layout. At the front of the system, we have the ten drive bays, then we have a fan partition, followed by the motherboard. Something we found intriguing here is that this design seems to have a lot of space between the drive backplane and the fans. Our sense is that the chassis is designed to potentially take larger drives. Looking forward, as more designs become 2.5″ only, that could shave 1-2 inches from the length of a system like this.

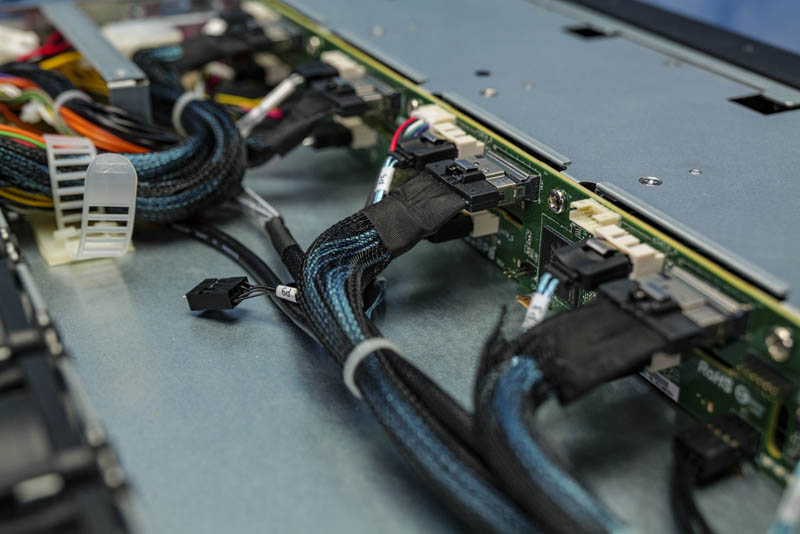

The backplane is particularly intriguing as it offers both SATA and NVMe connectivity. In our external overview, we showed PCIe Gen4 linkage using this setup. Many servers are not wired for both SATA and NVMe in order to save costs. This flexible solution is enabled by ASRock Rack’s use of the configurable AMD EPYC SerDes: ASRock Rack is using some I/O lanes as SATA, which allows for this configuration. That is a wise move since in a 1U system we are practically limited in terms of how many PCIe lanes can be exposed and used.

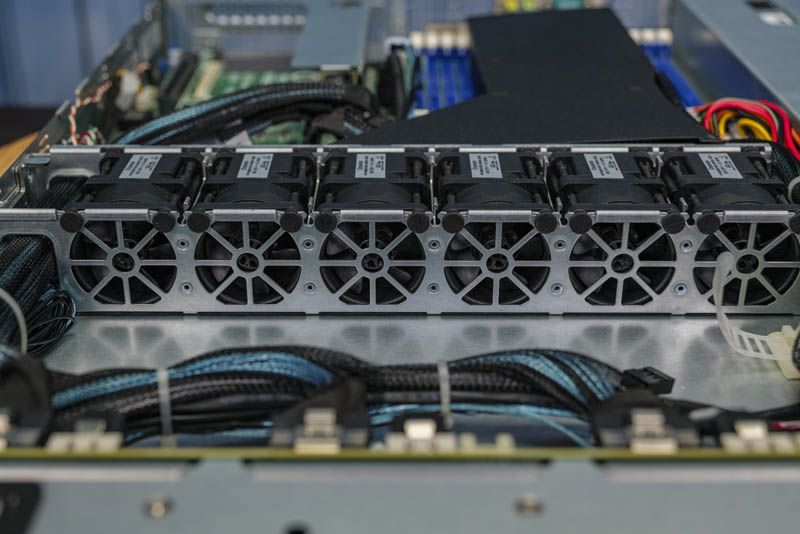

After the drive backplane, we get an array of six 1U fan modules to keep the system cool. There are two items of note. First, ASRock Rack is using a flexible cutout for CPU cooling. We generally prefer the hard plastic airflow guides, but the solution works. Second, the fan partition can be removed using a lever to unlock the entire assembly. That can help ease service times in a tight 1U system.

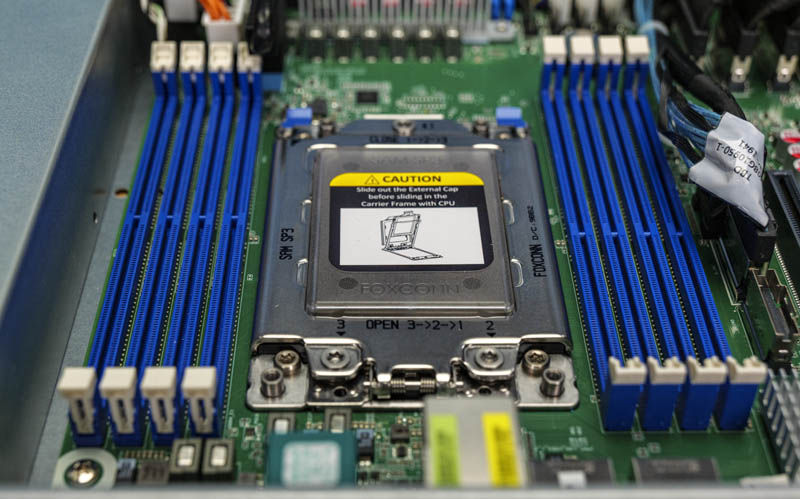

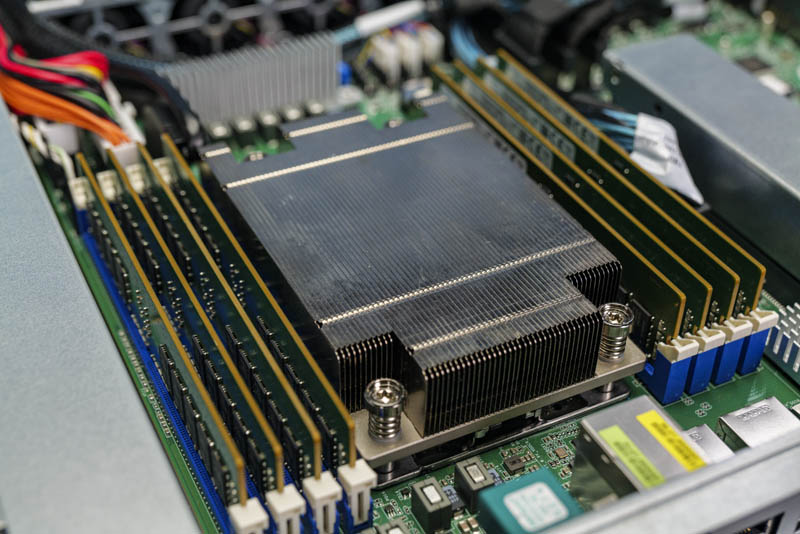

The CPU socket is a standard AMD EPYC 7002 series SP3 socket flanked by eight DDR4-3200 DIMM slots in total, four on either side. Using 128GB LRDIMMs, as an example, one can get up to 1TB of memory. For lower-cost hosting nodes, one can use 16GB DIMMs for 128GB in the machine.
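As a quick check of those capacity figures, here is a minimal sketch of the arithmetic. It assumes eight DIMM slots in total, which is what the 1TB and 128GB figures imply; the function and constant names are ours and purely illustrative.

```python
def total_memory_gb(dimm_slots: int, dimm_size_gb: int) -> int:
    """Total capacity when every slot holds a DIMM of the same size."""
    return dimm_slots * dimm_size_gb


# Assumption: eight slots in total, one DIMM per memory channel.
DIMM_SLOTS = 8

for size_gb in (16, 128):
    total = total_memory_gb(DIMM_SLOTS, size_gb)
    print(f"{DIMM_SLOTS} x {size_gb}GB DIMMs = {total}GB")
```

With 128GB modules the total is 1024GB, which is the 1TB figure quoted above.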

The AMD EPYC 7002 series has a number of options. Perhaps the most intriguing are the “P” series of processors that are single-socket only parts. AMD has 16, 24, 32, and 64 core versions of these optimized CPUs that carry lower costs as well. We think that the P series in this system is going to be the most popular due to pricing.

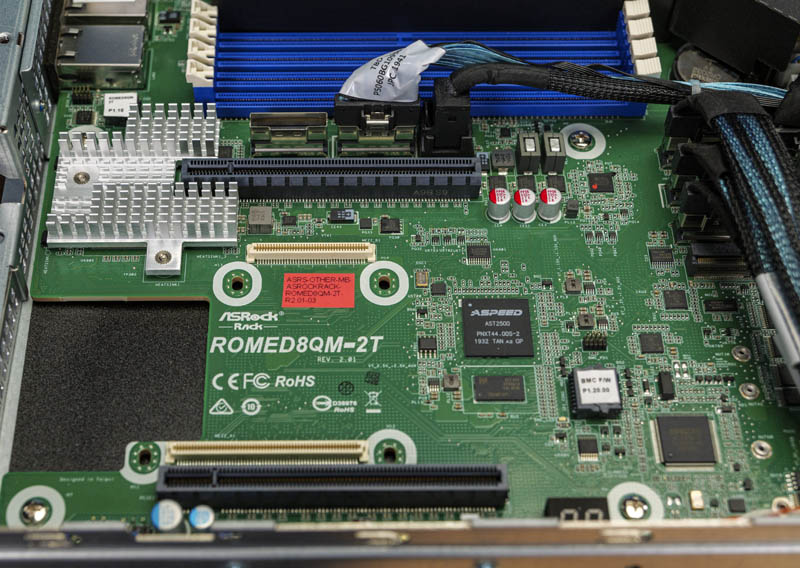

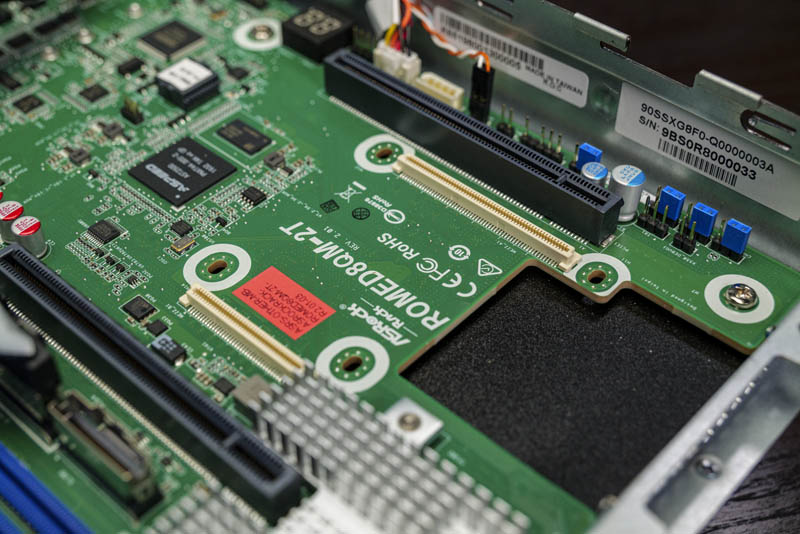

The CPU and memory area takes up the majority of the top portion of the server motherboard’s PCB. Below the DIMMs we have the PCIe expansion solution. Here we can see the ASRock Rack ROMED8QM-2T motherboard with its onboard ASPEED AST2500 BMC. On the right of this photo, we have a single M.2 slot that can handle up to M.2 22110 (110mm) SSDs. For the primary expansion area, we have two PCIe Gen4 x16 slots.

The top of these two slots has a PCIe riser. We confirmed this is operating at PCIe Gen4 speeds using an NVIDIA-Mellanox ConnectX-5 NIC. The bottom slot does not have room for a riser.
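For readers who want to check link speed on their own unit, one common approach on Linux is to read the `LnkSta` line that `lspci -vv` prints for the device. The sketch below only parses such a line. The sample string is a hypothetical example of that output format, not a capture from our test system, and the helper name is ours.

```python
import re

# PCIe per-lane transfer rate (GT/s) mapped to generation.
GEN_BY_SPEED = {2.5: 1, 5.0: 2, 8.0: 3, 16.0: 4, 32.0: 5}


def parse_link_status(line: str):
    """Return (generation, lane_width) from an lspci LnkSta line, or None."""
    match = re.search(r"Speed\s+([\d.]+)GT/s.*?Width\s+x(\d+)", line)
    if match is None:
        return None
    speed = float(match.group(1))
    width = int(match.group(2))
    # An unrecognized speed yields None for the generation.
    return GEN_BY_SPEED.get(speed), width


# Hypothetical sample line in the format lspci -vv uses.
sample = "LnkSta: Speed 16GT/s (ok), Width x16 (ok)"
print(parse_link_status(sample))
```

A negotiated speed of 16GT/s corresponds to PCIe Gen4, so a Gen4-capable card in the riser slot should report that rate when the link trains at full speed.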

Between the two slots, we see an OCP NIC 2.0 slot. We tried our normal Broadcom 25GbE NICs in this slot and they worked well. We will note that the slot is an A/B slot so one can put faster NICs in here. At the same time, in the next year or so we expect most of the market to transition to the OCP NIC 3.0 form factor since that is designed for a newer generation with PCIe Gen4 and better serviceability.

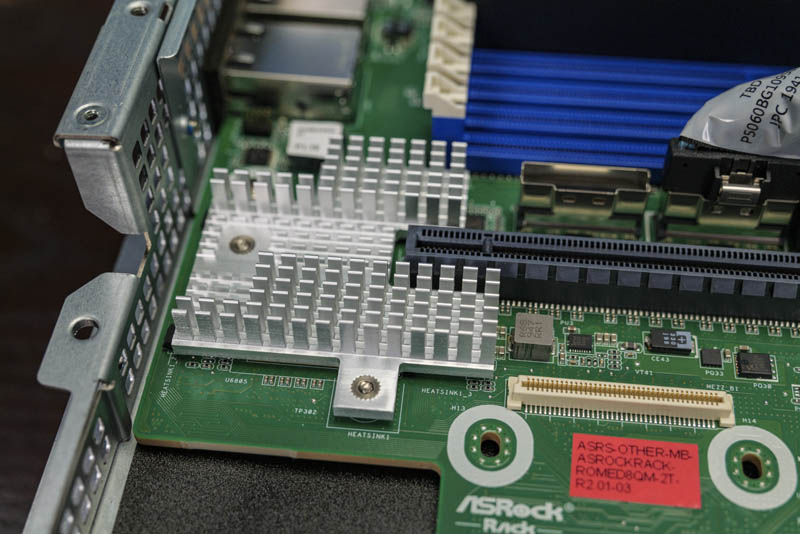

One nice small touch we wanted to show is the custom heatsink near the PCIe slot that holds the riser. The heatsink extends around the PCIe slot and is indented to allow the riser to fit. It may not seem like much, but this is a nice custom touch in the system to cool the onboard Intel X550 10GbE controller.

We did want to discuss one area that we dubbed “the corner.” This corner shows examples of two parts of the system that we wish were changed. First, the DIMM slots are single latch units with a fixed side. We have failed to fully seat a DIMM on the fixed side of this kind of slot more than a few times, having encountered them on a number of workstation motherboards previously. A number of STH readers have also run into issues getting proper seating in this DIMM slot design. We wish these were dual latch solutions. However, this is a well-known design in the industry.

Behind the DIMM slots, we have the power and USB cables. ASRock Rack did a good job of making these connectors work and relatively easy to service in a small space, but it is still harder to do so than on larger, less compact motherboards. That is simply due to this board needing a relatively high connector density to make the platform work. Still, it can be hard to get to some connectors that are surrounded by other components.

In our hardware overview, one can see an array of high-density cables bringing NVMe and SATA connectivity to the front of the chassis. The chassis is dense with cables. ASRock Rack here is using a compact PCB and cables to extend PCIe range to various components. As more systems support PCIe Gen4, and then PCIe Gen5 in the 2022 era, we expect to see more motherboards that take a similar approach. We are already starting to see this happen.

Next, we are going to get to our test configuration and management before getting to performance, power consumption, and our final words.

Now just a low power consuming Arm CPU and we have our silent server.

What low power Arm CPU? Once Arm CPUs have similar features and performance, they use the same power as Intel, and AMD’s power consumption is lower than Intel’s.

Very nice review, with very detailed information. Nice to see no vendor lock-in like Dell or HPE.

What is the firmware lifecycle experience like for the server? For example, Dell and HP release firmware updates not just for the BIOS and BMC but for drives, add-in cards, and even power supplies. If you go with ASRock Rack servers, does the owner have to track down all of this firmware, or is it consolidated and delivered similarly by ASRock Rack?

Typo:

“The redundant power supplies in the server are 0.75W units” (top of the last page)

You meant to write:

“The redundant power supplies in the server are 0.75*K*W units”, or

“The redundant power supplies in the server are *750*W units”

Jared – you are likely tracking them down since this is being sold (as reviewed) as a barebones server. On the other hand, you do not have updates behind a support agreement wall. I know some VARs will also manage this. ASRock Rack is also using fairly standard components. For example, to get NIC drivers one can use the standard Intel drivers. You are 100% right that there is a difference here.

BinkyTo – someone on Twitter got that one too. We are changing servers to kW from W so still working on getting everything on the new kW base. Fixed now.

Hello Patrick,

long time reader here. Great review and tbh it steered me in buying this.

I wanted to ask you a couple of questions if this is no problem.

1. What kind of Mellanox ConnectX-5 can I install in the mezzanine slot? Only Type 1, or can it support Type 2 with belly-to-belly QSFP28 (MCX546A-EDAN)? I was thinking of utilizing Host Chaining instead of resorting to buying a very expensive 100GbE switch.

2. What is the noise of it, and can it be controlled by the IPMI GUI effectively (besides ipmi terminal commands) – Is it bearable in an office environment if adjusted?

3. Do you think the Samsung PM1733 is a great fit for it? I am thinking of about 6 to 10 drives in ZFS striped mirrored vdevs (RAID 10), served as NFS over RDMA (RoCE).

Thank you !

Does anyone know where this can be sourced from? NewEgg version only has a single PSU.

I am unable to get it working using a 7003 series CPU. Specifically a 7713P.

I am using the 01.49.00 BMC and 3.03 BIOS that claim to support 7003 CPUs.

The server boots, but some sensors, including the CPU temperature, are not recognized by the BMC. The result is that the fans are always on low and CPU performance is really low (perhaps thermal throttling or something else).

Does anyone have a solution to this?

Hello Joe

Are you still having this issue? I ran into the same kind of trouble and received a 3.09 BIOS which, besides the temperature issue, also fixed various others. However, despite the temperature now being shown in the BMC, the trouble with the fans not spinning up under load persists, resulting in speed throttling as the temperature reaches 95 degrees Celsius.

Regards