As we were editing the AMD EPYC 7281 dual CPU benchmark piece, along with a number of other AMD and Intel benchmark pieces, one thing became clear: AMD EPYC pricing is extremely aggressive. So much so that it breaks a trend Intel uses in its CPU pricing, and not by a small degree. While there has been a lot of attention on the AMD EPYC 7601 as the flagship 32 core / 64 thread part, the story gets considerably more interesting in the high-volume segments, sub $1,100 USD. Today we want to explore the extraordinarily aggressive AMD EPYC single socket mainstream pricing model and show how AMD is attacking a segment of the market. We will also show how Intel views the mainstream market differently and has a competitive response based on introducing lower-power parts.

Background on Analysis

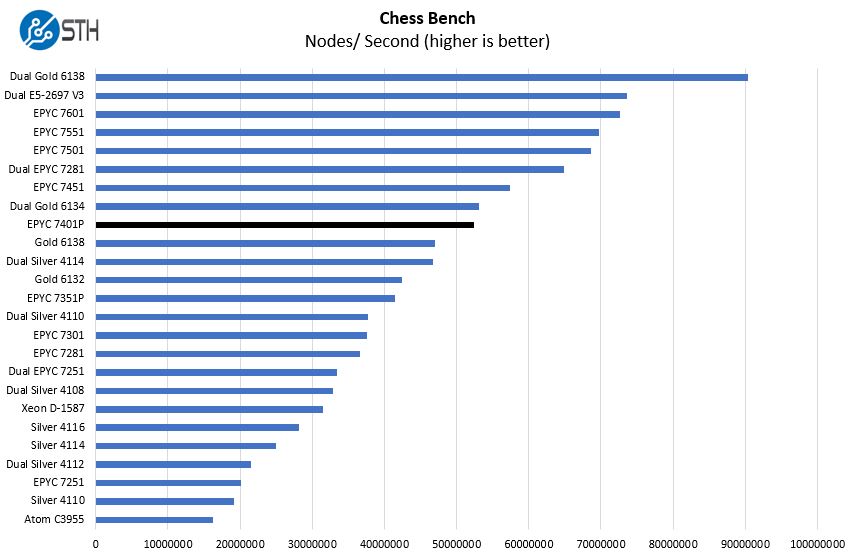

As background, at STH we have already finished benchmarking the entire Intel Xeon Silver and Bronze lines in both single and dual socket configurations and are working now on the Gold and Platinum lines. We also have finished benchmarking all AMD EPYC 7000 series CPUs in at least single socket configurations. Between the public data set we have and will share on STH, the private data set of our workloads we share with our clients, and the client workloads we have run on behalf of other companies, we have a fairly good sense of how this generation of CPUs will perform. Here is an example from our AMD EPYC 7401P review that shows every single socket EPYC combination.

Although there is understandably a lot of interest in dual AMD EPYC 7601 configurations, the volume side of the market is considerably more interesting. Using a car analogy, the BMW M3 may be the iconic flagship model, but the majority of sales are more pedestrian 3-series models.

We have been focused on the server industry for years, and one consistent trend we have seen is that CPU manufacturers charge more for greater capabilities. That makes perfect sense. If you have eight cores instead of four at the same clock speeds, you may be able to do twice as much work. Pricing models evolved over time, and as core count deltas rose, Intel started charging more for savings on per-core / per-socket software licensing as well as for consolidating to fewer machines. Intel Xeon Scalable models range from 4 to 28 cores, or up to 7x. In the Intel Xeon E5 (V1) generation, that ratio was from 2 to 8 cores, or 4x. As consolidation ratio TCO cases were made, Intel priced adding additional high-performance cores at a premium.

As a new re-entrant into the market, AMD is largely bucking this trend, especially in one key segment of the market: the volume segment. While the dual AMD EPYC 7251 benchmarks we posted show a solid chip for the price, the narrative undertone favored the EPYC 7281 or 7351P. We wanted to put a chart together showing how AMD EPYC pricing in the volume segment diverges from Intel’s precedent.

AMD’s Aggressive Volume Play with EPYC

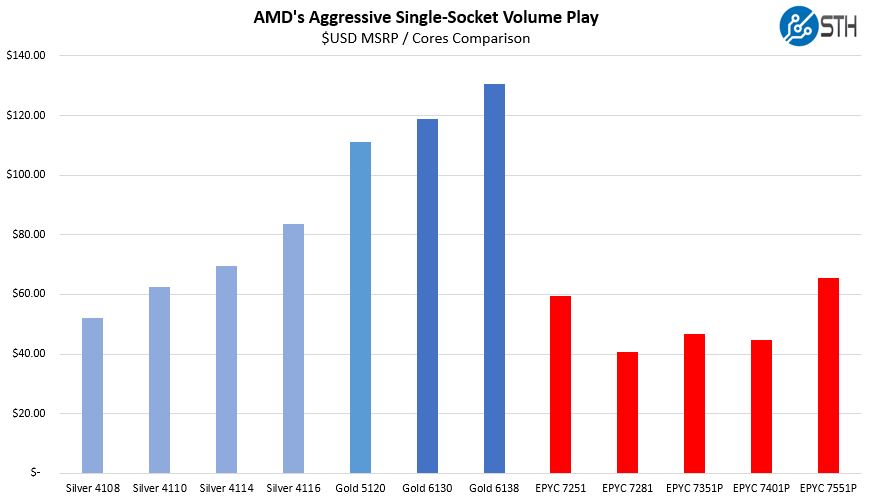

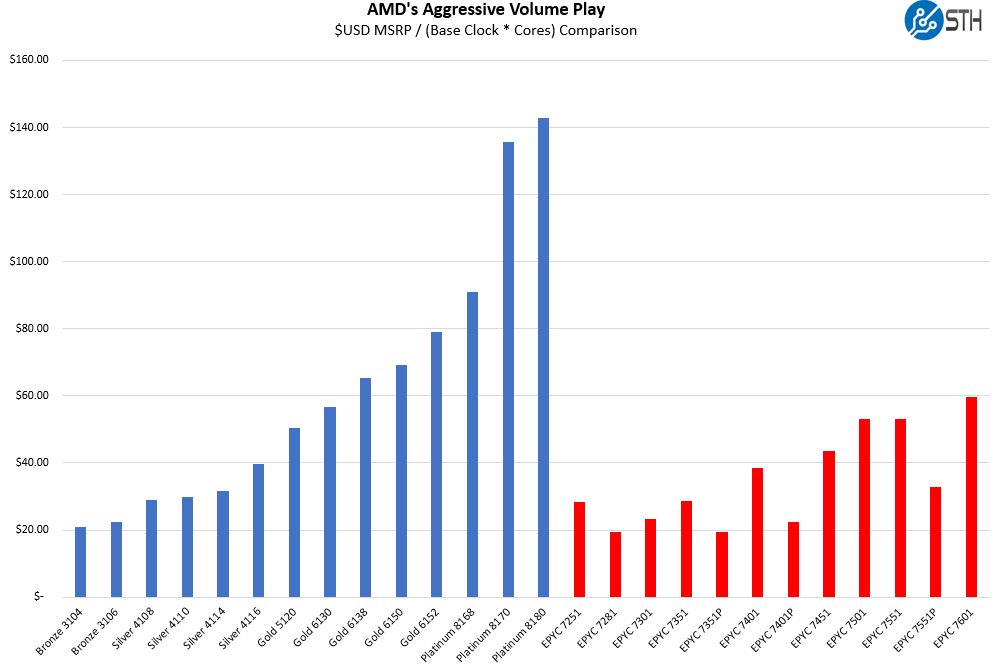

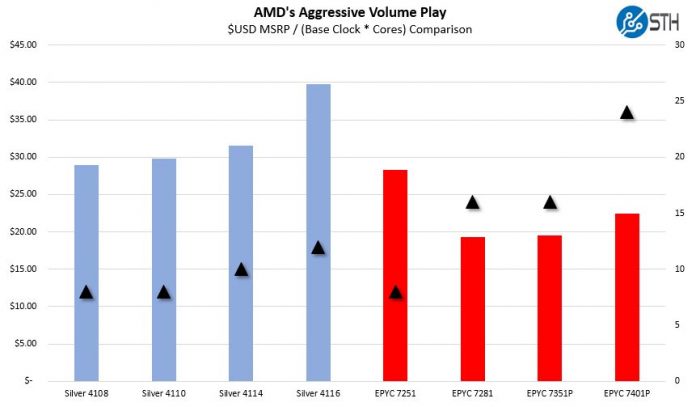

For this chart, we took the main CPU competition of Intel Xeon Silver and AMD EPYC between $400 and $1002 MSRP. We are using MSRP numbers; however, we have paid over MSRP several times to get CPUs into our lab. Still, the general pricing trends hold if you are looking at street pricing.

Although AMD to Intel IPC is not equal, it is not off by an enormous margin especially in the sub $1100 price points that make up the mainstream market. We previously showed an Intel Xeon Silver versus the lower-cost AMD EPYC parts for comparison. Today we are going to go a bit deeper.

AMD EPYC 1P Pricing Trends

For over a decade, the server industry has been obsessed with cores. There are reasons that companies deploying software with a per server or per socket licensing model want more cores in a given server, so it makes sense that chip vendors use a value pricing model for squeezing more cores into a server. Indeed, for some underlying technical reasons, more physical cores are quite beneficial in virtualization servers.

If we look at how AMD and Intel are pricing core growth, we have this chart showing select points for Intel Xeon Silver and Gold 8 to 20 core pricing per core. On the same chart, we have AMD EPYC 8 core through 32 core pricing.

The Gold 5120 series has a slightly different feature set compared to both the Silver series and the Gold 6100 series, which is why there are three color gradients on the Intel side. AMD P parts are single socket only parts but have the full feature set. $USD MSRP / core simply shows per core pricing, but it is missing a key component: clock speed. Clock speed determines how many clock cycles each core has to process data and has a major bearing on performance.

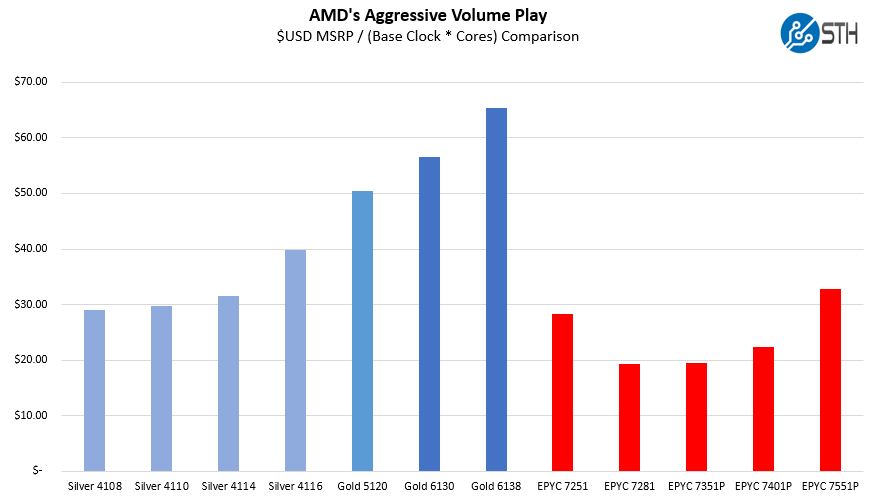

Although the idea of base clock * cores as being a performance metric is rudimentary, it is used more often than many would imagine. The next step in our process used the base clock for each CPU multiplied by the core count. This excludes turbo frequencies, hyper-threading impacts, cache per core deltas of the EPYC 7351P as an example, and the IPC differences between the two architectures. What we were trying to see is how AMD and Intel are pricing their chips in the mainstream single-socket CPU market on a relative basis.
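As a rough sketch of the metric described above, the ratio can be computed as MSRP divided by the product of base clock and core count. The `Cpu` class and `usd_per_ghz_core` helper are hypothetical names for illustration. The EPYC 7401P is assumed to share the 2.0GHz base clock of the EPYC 7401, and the 2.1GHz base clock for the Xeon Silver 4116 is an assumption that should be checked against the vendor spec sheet.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Cpu:
    name: str
    msrp_usd: float
    cores: int
    base_ghz: float


def usd_per_ghz_core(cpu: Cpu) -> float:
    """MSRP / (base clock * cores): dollars per aggregate base GHz.

    Ignores turbo, SMT, cache, and IPC differences, as the text notes.
    """
    return cpu.msrp_usd / (cpu.base_ghz * cpu.cores)


cpus = [
    # Base clock assumed equal to the non-P EPYC 7401.
    Cpu("AMD EPYC 7401P", 1075, 24, 2.0),
    # Base clock is an assumption, not stated in the article.
    Cpu("Intel Xeon Silver 4116", 1002, 12, 2.1),
]

# Lower is cheaper per unit of nominal base-clock throughput.
for cpu in sorted(cpus, key=usd_per_ghz_core):
    print(f"{cpu.name}: ${usd_per_ghz_core(cpu):.2f} per GHz-core")
```

Sorting by the ratio rather than by model name mirrors how a chart of this kind is usually read: the cheapest nominal throughput comes first.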

We are going to note that the Gold 6100 series has Intel’s full RAS feature set, the full execution pipeline including dual FMA AVX-512, higher Turbo frequencies, and full DDR4-2666 support. Suffice to say, the Gold 6100 series is in a different league versus the Silver and Gold 5100 series, so this comparison is unfair. The fact that the Intel Xeon Gold 6100 series cores are faster is likely factoring into the pricing model above, and that makes sense.

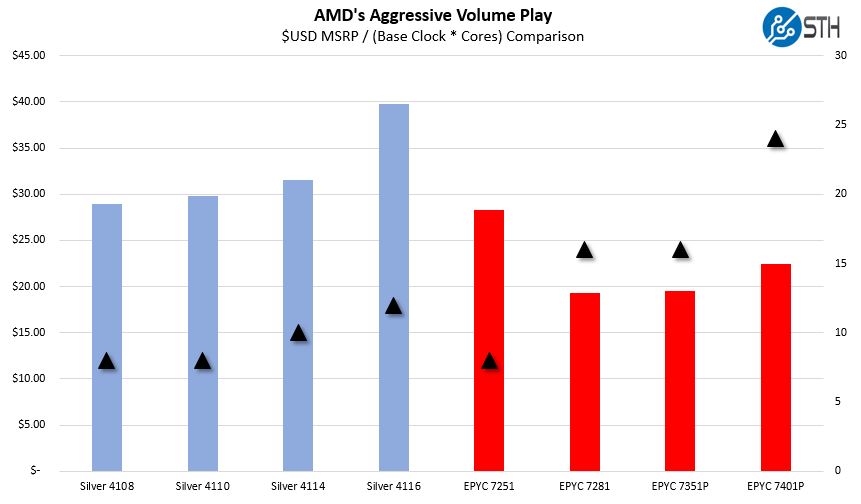

If we focus on the mainstream $400-$1100 per CPU segment and chart Intel and AMD offerings we get an interesting pattern. We have a composite of $USD MSRP / (Base Clock * Cores) represented as bars with the core count represented by the triangle plot:

The trend is as simple as it is shocking. Intel is charging a 37% per core premium to go from an 8 core part to a $1002 12 core part. AMD at $1075 is essentially offering a 20% discount on the $USD MSRP / (Base Clock * Cores) ratio moving from 8 cores to 24 cores. At the low-end of the 8-core range ($400-500 price), both AMD and Intel are very close on pricing. From there, the paths diverge.
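The percentages above can be approximated with a few lines of arithmetic. This is only a sketch: the article does not name the 8-core parts or their specs, so the 8-core inputs below (a $417, 1.8GHz Xeon Silver 4108 and a $475, 2.1GHz EPYC 7251) and the 2.1GHz base clock for the 12-core Intel part are assumptions chosen for illustration. They happen to land close to the quoted figures, but they should be verified before being relied upon.

```python
def usd_per_ghz_core(msrp_usd: float, cores: int, base_ghz: float) -> float:
    """MSRP / (base clock * cores)."""
    return msrp_usd / (base_ghz * cores)


def relative_change(start: float, end: float) -> float:
    """Fractional change from start to end (positive = premium)."""
    return (end - start) / start


# Assumed 8-core entry points (not stated in the article).
intel_8c = usd_per_ghz_core(417, 8, 1.8)
amd_8c = usd_per_ghz_core(475, 8, 2.1)

# Upper parts: prices and core counts from the article; the Intel base
# clock is an assumption, the AMD base clock follows the EPYC 7401.
intel_12c = usd_per_ghz_core(1002, 12, 2.1)
amd_24c = usd_per_ghz_core(1075, 24, 2.0)

intel_change = relative_change(intel_8c, intel_12c)
amd_change = relative_change(amd_8c, amd_24c)

print(f"Intel 8c -> 12c: {intel_change:+.1%}")
print(f"AMD   8c -> 24c: {amd_change:+.1%}")
```

Under these assumed inputs the Intel ratio rises by roughly 37% while the AMD ratio falls by roughly 20%, which is the divergence the paragraph describes.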

Looking at Broader Pricing Trends

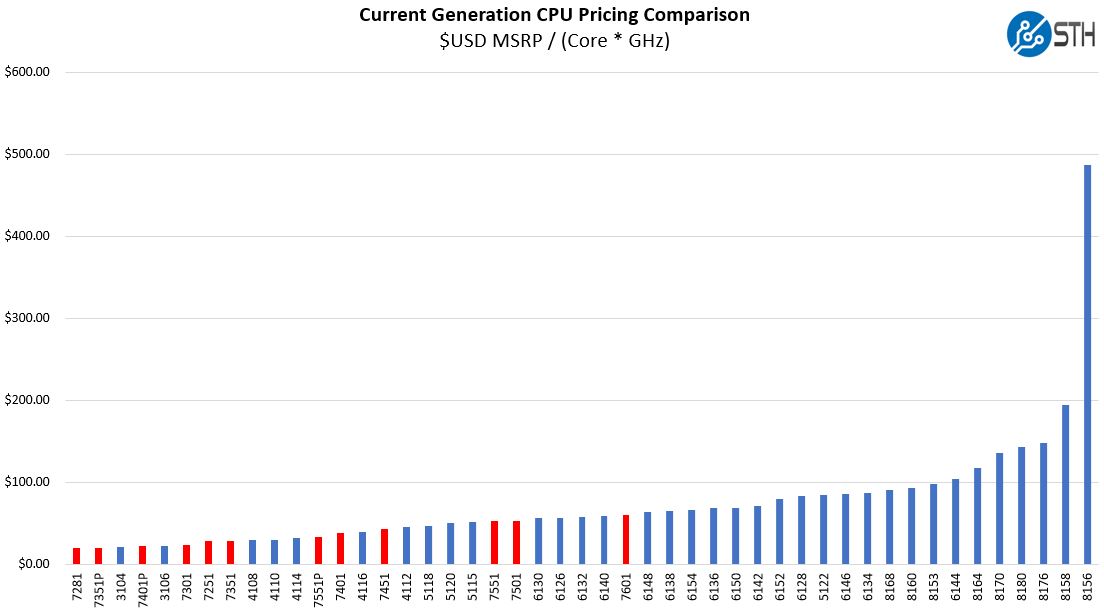

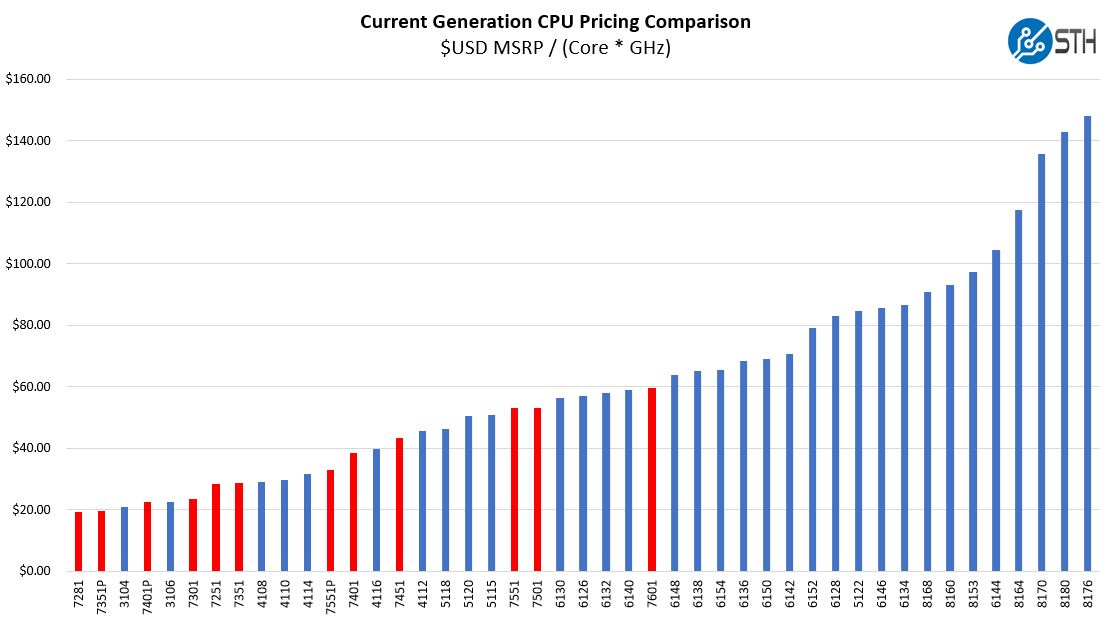

One may ask how these pricing trends fit into the broader ranges. After all, AMD has around 12 EPYC 7000 series launch parts while Intel has at least three dozen core / clock speed variants (excluding memory, fabric, and other special parts). Here is what the broader view of AMD v. Intel pricing looks like when we use $USD MSRP / (Base Clock * Cores):

During our previous 4P Intel Xeon Platinum 8180 coverage, we mentioned that we did not think that the EPYC 7601 and Platinum 8180 were direct competitors. We also mentioned that we see the AMD EPYC as a direct competitor to the Intel Xeon Gold series.

This chart shows a trend but is misleading in a key area. The Intel Xeon Gold 6100 and Platinum 8100 series have much higher all-core turbo speeds. Take the Xeon Gold 6138 for example. It is a 20 core part with a 2.0GHz base non-AVX speed. Turbo with up to two cores is 3.7GHz, something AMD EPYC has no match for. Likewise, the all-core maximum non-AVX turbo frequency is 2.7GHz. The AMD EPYC 7401, for example, has a 2.0GHz base and a maximum turbo frequency of just 3.0GHz. The chart is skewed for this reason, plus IPC differences, but using base frequencies gives us a worst-case scenario view.
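To see how much the choice of clock changes the denominator of the metric, here is a small sketch using only the frequencies quoted above. The `aggregate_ghz` helper is a hypothetical name. Note that the EPYC 7401 figure of 3.0GHz is its maximum turbo, not a stated all-core turbo, so the second AMD number is an optimistic ceiling rather than a like-for-like comparison.

```python
def aggregate_ghz(cores: int, ghz: float) -> float:
    """Nominal aggregate clock: cores multiplied by per-core frequency."""
    return cores * ghz


# Xeon Gold 6138: 20 cores, 2.0GHz base, 2.7GHz all-core non-AVX turbo.
gold_6138_base = aggregate_ghz(20, 2.0)
gold_6138_all_core = aggregate_ghz(20, 2.7)
gold_6138_uplift = gold_6138_all_core / gold_6138_base - 1

# EPYC 7401: 24 cores, 2.0GHz base, 3.0GHz maximum turbo.
# The 3.0GHz value is NOT an all-core figure, so treat this as a ceiling.
epyc_7401_base = aggregate_ghz(24, 2.0)
epyc_7401_ceiling = aggregate_ghz(24, 3.0)

print(f"Gold 6138 base: {gold_6138_base:.0f} GHz-cores, "
      f"all-core turbo: {gold_6138_all_core:.0f} ({gold_6138_uplift:+.0%})")
print(f"EPYC 7401 base: {epyc_7401_base:.0f} GHz-cores, "
      f"max-turbo ceiling: {epyc_7401_ceiling:.0f}")
```

A 35% larger denominator on the Intel side would lower its $USD MSRP / (Clock * Cores) figure by about a quarter, which is why the base-clock chart is best read as a worst case for Intel.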

Moving to a broader set of CPUs, and excluding the Omni-Path fabric, high-memory, and other special CPUs from Intel, here is what current generation $USD MSRP / (Base Clock * Cores) looks like sorted across the range rather than by model hierarchy:

You can see that the Intel Xeon Platinum 8156 skews this chart heavily, as does the Intel Xeon Platinum 8158. The Intel Xeon Platinum 8156 is a 4 core / 8 thread $7000 CPU that is meant to be deployed in cases with extremely high license costs. Removing those two Platinum license-cost-optimized SKUs, here is how current generation CPUs stack up:

Again, this should be taken in the context that the Intel Xeon Gold and Platinum turbo frequencies will be higher. They also have dual FMA AVX-512 which is an absolute monster in terms of performance.

At the same time, one can see that AMD is pricing its EPYC CPUs largely in the domain of the Intel Xeon Bronze, Silver and Gold 5100 CPUs. Essentially, AMD is targeting high-performance chips in a pricing zone where Intel is optimizing on power consumption instead of performance.

Calculating TCO Impact

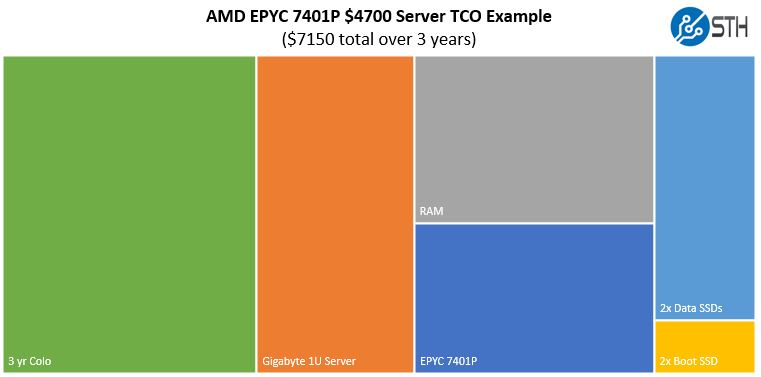

At STH, we operate labs in several data centers. That allows us to provide demos to our clients along with test gear in real-world conditions. We have gear colocated in data centers next to production clusters of applications our readers use every day. That means we have to do actual capacity planning.

Like most organizations that lease data center space, our primary cost driver is based on power. Power consumed generates data center utility costs and most operators have a cooling factor that allows a per kW figure to be calculated for deployments. If you build your own data centers, you will have different metrics, but we can use actual all-in costs to determine how much it costs to run a server in a data center for three years. If we assume the same rack footprint and the same networking, it is easy to calculate an all-in cost for housing a server for three years.
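The all-in calculation described above can be sketched as purchase price plus power-based hosting charges over the term. Every number in the example is hypothetical, including the per-kW rate, and the `three_year_cost` name is made up for illustration. The rate is assumed to be a monthly all-in charge per kW that already folds in the operator's cooling factor.

```python
def three_year_cost(
    purchase_usd: float,
    avg_power_w: float,
    usd_per_kw_month: float,
    months: int = 36,
) -> float:
    """Purchase price plus power-based colocation cost over the term.

    Assumes the same rack footprint and networking for every option,
    so those costs cancel out and are left out of the sum.
    """
    power_kw = avg_power_w / 1000.0
    hosting_usd = power_kw * usd_per_kw_month * months
    return purchase_usd + hosting_usd


# Hypothetical inputs: a $4,000 server drawing 200W at $300 per kW-month.
example_total = three_year_cost(
    purchase_usd=4000.0, avg_power_w=200.0, usd_per_kw_month=300.0
)
print(f"Three-year all-in cost: ${example_total:,.0f}")
```

Keeping the rate as a single all-in parameter makes it easy to rerun the comparison for a more expensive facility by changing one number.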

Any Intel v. AMD comparison not looking at this type of metric is extremely misleading. Above we were looking at pure CPU prices, but CPU prices are a small portion of the overall cost to purchase and operate a server for three years in one of our colocation facilities. We generally use lower-cost providers for the lab racks and assume no license costs. One can easily spend twice as much on a kW basis at a data center down the street from where we are in Silicon Valley. Likewise, Silicon Valley real estate, labor, and electricity are expensive, so other locations can be significantly less expensive.

Low-End Server TCO Comparison

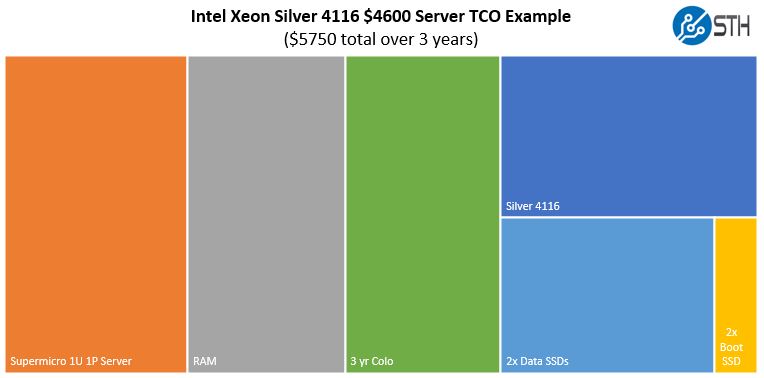

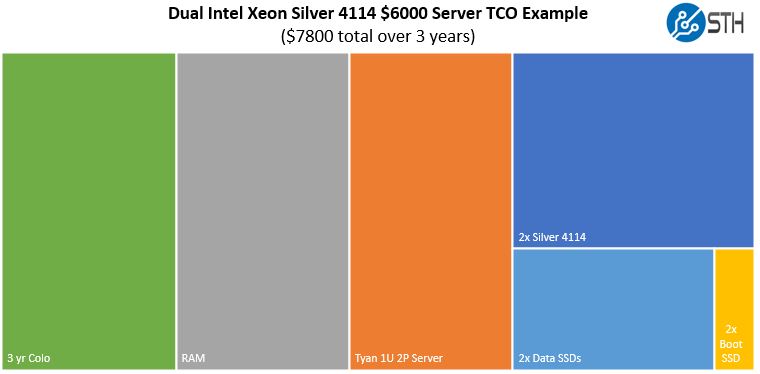

For the low-end server comparison, we are going to make an assumption that there are two SATA DOMs for boot, and two data SSDs. We are using 8x 16GB DIMMs for the 1P EPYC and 1P Xeon Scalable servers and 12x 16GB for the 2P Xeon Scalable servers. This is essentially a web hosting node with mirrored boot and data SSDs.

We are using platforms we have in the lab specifically because we have actual power data from running them. We provision for 100% load instead of peak loads. Many internal IT planners use power supply size to estimate power consumption. Those are the same organizations that are effectively paying $1000/kW or more because of poor planning.
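A short sketch shows how provisioning by power supply size inflates the effective rate. The contract rate, the 750W power supply rating, and the 225W measured draw are all hypothetical values picked for illustration, and `effective_usd_per_kw` is a made-up helper name. The idea is simply that you pay for provisioned power but only benefit from consumed power.

```python
def effective_usd_per_kw(
    contract_usd_per_kw: float,
    provisioned_w: float,
    measured_w: float,
) -> float:
    """Rate actually paid per kW consumed when provisioning exceeds draw."""
    if measured_w <= 0:
        raise ValueError("measured_w must be positive")
    return contract_usd_per_kw * provisioned_w / measured_w


contract_rate = 300.0   # hypothetical $/kW for the billing period
psu_rating_w = 750.0    # hypothetical power supply nameplate
measured_load_w = 225.0  # hypothetical measured draw at 100% load

by_nameplate = effective_usd_per_kw(contract_rate, psu_rating_w, measured_load_w)
by_measurement = effective_usd_per_kw(contract_rate, measured_load_w, measured_load_w)

print(f"Provisioned by PSU size:      ${by_nameplate:,.0f}/kW consumed")
print(f"Provisioned by measured load: ${by_measurement:,.0f}/kW consumed")
```

With these illustrative inputs, planning around the nameplate rating more than triples the effective cost of each kW the server really uses.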

The AMD EPYC 7401P is a very fast part optimized for speed, but it is also a part with up to a 170W TDP. The Intel Xeon Silver 4116 is in the same $1000-$1100 MSRP range but has a paltry 85W TDP. TDP does not equal power consumption but based on our data, there is a significant power consumption difference and therefore a data center power and cooling budget impact. Over a three-year horizon, power and cooling costs will be the largest portion.

Looking at single-socket Intel Xeon Silver, we can see a different strategy at work.

In this example, our 8x 16GB RDIMMs along with the Supermicro 1U 1P server are the biggest cost drivers of our platform, followed by power and cooling. Intel’s target market with the Intel Xeon Silver is low power consumption and a low cost to operate rather than performance. Indeed, this configuration was using 8 DIMMs with only six memory channels so two DDR4 memory channels were running in 2 DPC mode.

The Intel Xeon Silver 4114 has the same TDP but is a lower power part than the 4116. It also costs less. One can argue that two Intel Xeon Silver 4114 CPUs are a better comparison than a single Xeon Silver 4116, despite their higher purchase price. For our dual socket example, we have 12x 16GB DIMMs, a more expensive CPU combination, and a more expensive dual socket server platform.

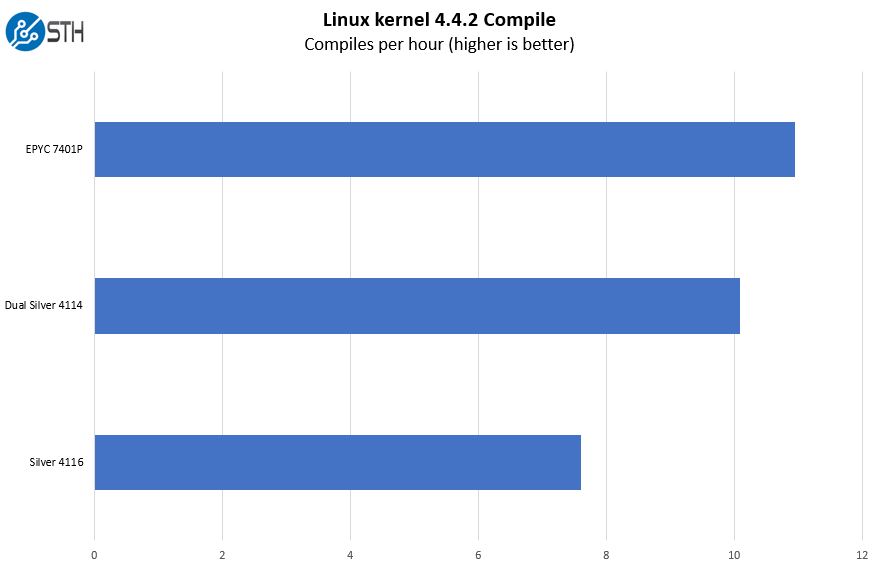

Despite the purchase price differential, the trade-off is approximately $650 over three years in favor of the AMD EPYC 7401P. One benefits from 50% more RAM installed for that price differential (192GB v. 128GB) but also gets more CPU power. For example here is our Linux kernel compile benchmark for the three configurations:

The bottom line here is that AMD EPYC is meeting its target of providing single socket platforms with dual socket performance. AMD also wins slightly on cost (albeit in our configurations with less RAM). If you run VMware with per-socket licensing versus using an open-source alternative such as KVM, the AMD option will win hands-down. If you have low software license costs, one can see where Intel and AMD are optimizing.

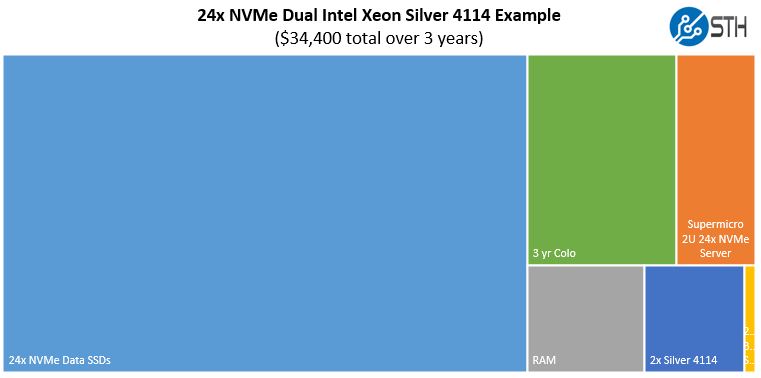

24x NVMe TCO Comparison

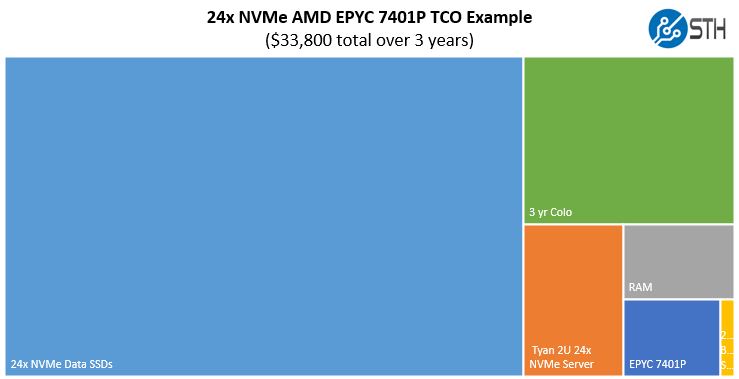

One area that many analysts who do not deal directly with hardware purchasing will harp on is that AMD EPYC is considerably more expandable in single socket configurations. That is completely true. You will spend less for more PCIe lanes with AMD EPYC. With that said, we wanted to show why it is important to look beyond simple CPU costs in that scenario.

Here we are assuming a server platform with 24x $1,000 1TB NVMe SSDs. As one can see, the cost of adding SSDs completely dominates the three-year cost to purchase and operate the AMD EPYC platform:

The power consumption with 24x NVMe drives will go up, adding to ongoing expenses.

On the Intel side, we are going to assume that we are not using a single-socket design, which would essentially be a purely archival solution. Instead, we are using a dual-socket design for more PCIe lanes.

The AMD option comes out ahead again in this scenario, but the additional cost of the NVMe drives makes up an enormous part of the equation. When one compares 768GB of RAM per CPU on most Intel Xeon CPUs of this generation to 2TB of RAM per CPU on AMD EPYC, the picture becomes even more drastic than the above, with CPU costs becoming relatively minimal in terms of overall purchase costs.
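To put the drive cost in proportion, here is a minimal sketch of each component's share of the purchase price. The 24x $1,000 NVMe figure and the $1,075 CPU price come from the text; the $3,000 figure for the chassis, motherboard, and RAM is a hypothetical placeholder, and `cost_shares` is a made-up helper name.

```python
def cost_shares(components: dict[str, float]) -> dict[str, float]:
    """Return each component's fraction of the total purchase cost."""
    total = sum(components.values())
    return {name: cost / total for name, cost in components.items()}


purchase = {
    "cpu": 1075.0,                 # EPYC 7401P MSRP from the article
    "nvme_ssds": 24 * 1000.0,      # 24x $1,000 1TB drives from the article
    "platform_and_ram": 3000.0,    # hypothetical placeholder
}

shares = cost_shares(purchase)
for name, share in sorted(shares.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name:>16}: {share:6.1%}")
```

Even with a generous placeholder for the rest of the platform, the CPU ends up as a low single-digit percentage of the purchase price in this configuration.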

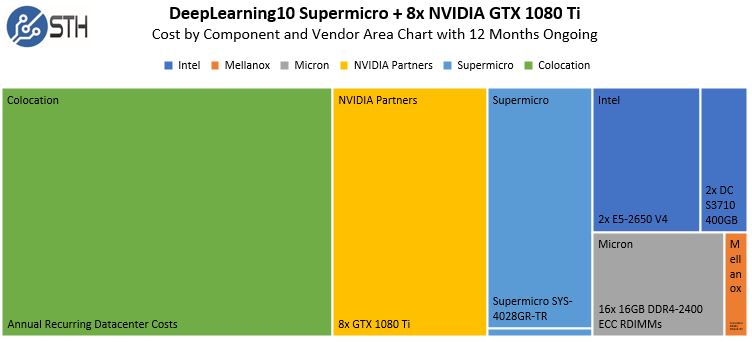

Turning to GPUs, another oft-cited example of why one needs many PCIe lanes, the ongoing power and cooling costs dominate the equation. Here is an example that we did earlier this year for DeepLearning10 where we used a 12-month ongoing cost.

Even using inexpensive GPUs, and assuming they would be swapped out for newer models quickly, the 12-month costs were completely dominated by power and cooling which make the CPU differences negligible.

There are other important benefits to the 128 PCIe lane single-socket EPYC configuration. Primarily, the PCIe layout allows for expansion using 96 PCIe lanes for NVMe drives or GPUs along with a PCIe 3.0 x16 path for 100GbE or EDR InfiniBand.
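The lane budget described above works out as simple integer arithmetic. This sketch assumes x4 links per NVMe drive and x16 links per GPU, which are typical but not stated in the text; the constant names are hypothetical.

```python
TOTAL_LANES = 128       # single-socket EPYC, per the article
DEVICE_LANES = 96       # reserved for NVMe drives or GPUs, per the article
NIC_LANES = 16          # PCIe 3.0 x16 for 100GbE or EDR InfiniBand

LANES_PER_NVME = 4      # assumption: x4 link per NVMe drive
LANES_PER_GPU = 16      # assumption: x16 link per GPU

remaining_lanes = TOTAL_LANES - DEVICE_LANES - NIC_LANES
max_nvme_drives = DEVICE_LANES // LANES_PER_NVME
max_x16_gpus = DEVICE_LANES // LANES_PER_GPU

print(f"NVMe drives at x4: {max_nvme_drives}")
print(f"GPUs at x16:       {max_x16_gpus}")
print(f"Lanes left over:   {remaining_lanes}")
```

Under the x4 assumption, 96 lanes map directly onto the 24-drive NVMe configuration discussed earlier, with lanes still left over for boot devices or other I/O.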

Final Words

Through this exercise we showed a number of important points:

- AMD has extremely aggressive pricing in the single socket market, pricing its CPUs against the Intel Xeon Bronze, Silver and Gold 5100 series

- AMD is positioning performance parts in the sub $1100 single socket market where Intel is positioning low power parts with the Intel Xeon Silver series

- AMD’s positioning of the single socket AMD EPYC with aggressive pricing against dual socket Intel Xeon Silver has merit both on initial purchase price and on a TCO basis

- On a TCO basis, software licensing being equal, Intel can service the low-end node market extremely well with its low power offerings.

- Intel can deliver lower absolute platform TCO using the Intel Xeon Silver 4108 and Bronze series CPUs for those looking simply for a low-cost single node with minimal configurations and performance (e.g. a DNS server)

- Per-socket licensing will see TCO favor the AMD EPYC platform in this segment of the market

- For per-core licensing TCO models, Intel has a strong stable of specific parts to address the market

As we have been generating performance data for our clients, and doing capacity planning for STH, we have a unique perspective on this generation of processors in terms of performance and TCO. AMD is clearly positioning lower-price CPUs to exploit a hole in the Intel lineup: Intel has no performance Intel Xeon Scalable CPUs in the sub-$1100 segment, but it does have lower power parts. This is certainly an exciting time in the server market as we are seeing clear divisions based on product strategies.

Given the fact that we have almost half of all 1P and 2P combinations from Intel and AMD in the lab, you may be wondering what we are buying. The answer is both. It depends on the need and software licensing model, along with what we need to integrate into our existing infrastructure.

TL;DR Intel low power. AMD performance. TCO close.

Thanks for not using all bar charts at least.

I’m not gonna lie to you. I read the title and thought: this is a crap on Intel go AMD article. It’s a way more balanced tone. I’d have liked to see more on the AVX512 performance. You need to address vMotion compatibility between legacy Xeon E5 and the new gens. You’re costs are only valid up to a few racks but if you’re buying 10k nodes you shouldn’t be getting TCO comparisons off of the interwebies.

Not perfect but I appreciate the TCO numbers. You’re right. Everything I read is CPU costs especially on consumer facing pubs. That’s a better way to look at it.

You aren’t looking at compute & RAM total capacity so your TCO is focused on nodes. That gets rid of a lot of wash costs like management licenses that are per server and networking. Most of our servers don’t run 100% capacity anyways so it isn’t a dumb way to do it. A rack of this or a rack of that do I care if I just want 40 nodes in the rack and they’re running at 42 or 35%?

Title is provocative tone for STH but it’s a good read.

In the Netherlands:

Consumer price electricity € 0.22/kWh

(incl. everything tax, enviroment, special tax. other special tax, etc..)

Google price green-electricity € 0.08/kWh

(also incl. everything).

(Steel factories pay around € 0.03/kWh).

I would like to see a performance/Wh (or kWh), how much Wh does a certain task consume.

TDP is worth nothing these days, Dual EPYC 7601 (2×180 watt TDP+system use) maxed at 480 Watt, quad 8180(4×205 watt TDP+system use) maxed at 1336 Watt.

Core i7-8700k TDP 95 Watt actual use during torture loop on stock speed 159.5 Watt, that is 68% more then the TDP.

Very good article sir.

Not many do tco.

I use with clients in future.

That 8156 chart tho!

TCO differentiates STH

TCO is workload dependant.

1st. the system has to be able to handle it’s workload in the given time.

Different workloads may require different system configurations, the interresting part for me is to look for an optimum.

When not compiling linux and/or using AVX512 a lot the whole system requirement might change with intel XEON’s (and yes the XEON’s will also use a lot less power when not using AVX512, but you might need a faster one). I really appreciate is when an article makes you start thinking at more levels.

I’d been expecting a simple table with dollars. That’s useful perspective. Long read.

I read an article on anandtech and it was in their first review of the EPYC, it said that in doing data there was latency issues. Could you get back on that if you would please.

Bruce

–

Like this? AMD EPYC Infinity Fabric DDR4-2400 v DDR4-2666

I find this more useful than micro benchmarks. Friday morning reads ftw

+DanRyanIT is right. I read this article and we still bought a quarter rack of dual 4108s for low power applications and 192gb.

Trying to convince my colleagues we should add epyc to the mix but they’re not onboard yet.

until there is cheap 2nd hand or amd es/qs that can compete with the current xeon 2nd hand and es/qs market, looks like I won’t be getting my hand on epyc anytime soon..

Spelling error. You wrote>A: There is. In a single socket server, the AMD EPYC essentially has 32x PCIe lanes per die, and for die per package.

You meant to writer 4 or four, not for.

I usually don’t bother to mention thinks like that, but because STH is a rather engineered oriented site, accuracy and clearness counts. Many, less engineered oriented sites, can get away with poor wording. STH needs to leverage its superior detailed engineer approach to master simple, clear, precise writing. Please take the time to review your writings. One, incremental idea per short sentence. Spelled correctly :-)!

The emperor has not clothes :-). “You meant to write 4 or four, not for.”

@iq100 thank you for taking the time to point find that error. The text is not in this article except in your comment. You may have been referring to a different article, the recent FAQ and that was fixed just after it went live. Please check your cache.

Good article but availability of these parts seems to be an issue.

@Emril Briggs – You can buy EPYC CPUs and servers from several vendors at this point. Availability started getting much better by the end of August and was good in September. While they are not on enthusiast sites like Newegg and Amazon, you can get systems and CPUs without an issue as of October 2017.