While the 8x NVIDIA A100 GPU “Delta” platform with NVSwitch got a lot of airtime during the Ampere launch, it was not the only platform being launched today by NVIDIA. The 4x GPU “Redstone” platform is a smaller NVLink mesh platform that is designed to be a lower-cost option.

NVIDIA A100 4x GPU HGX Redstone Platform

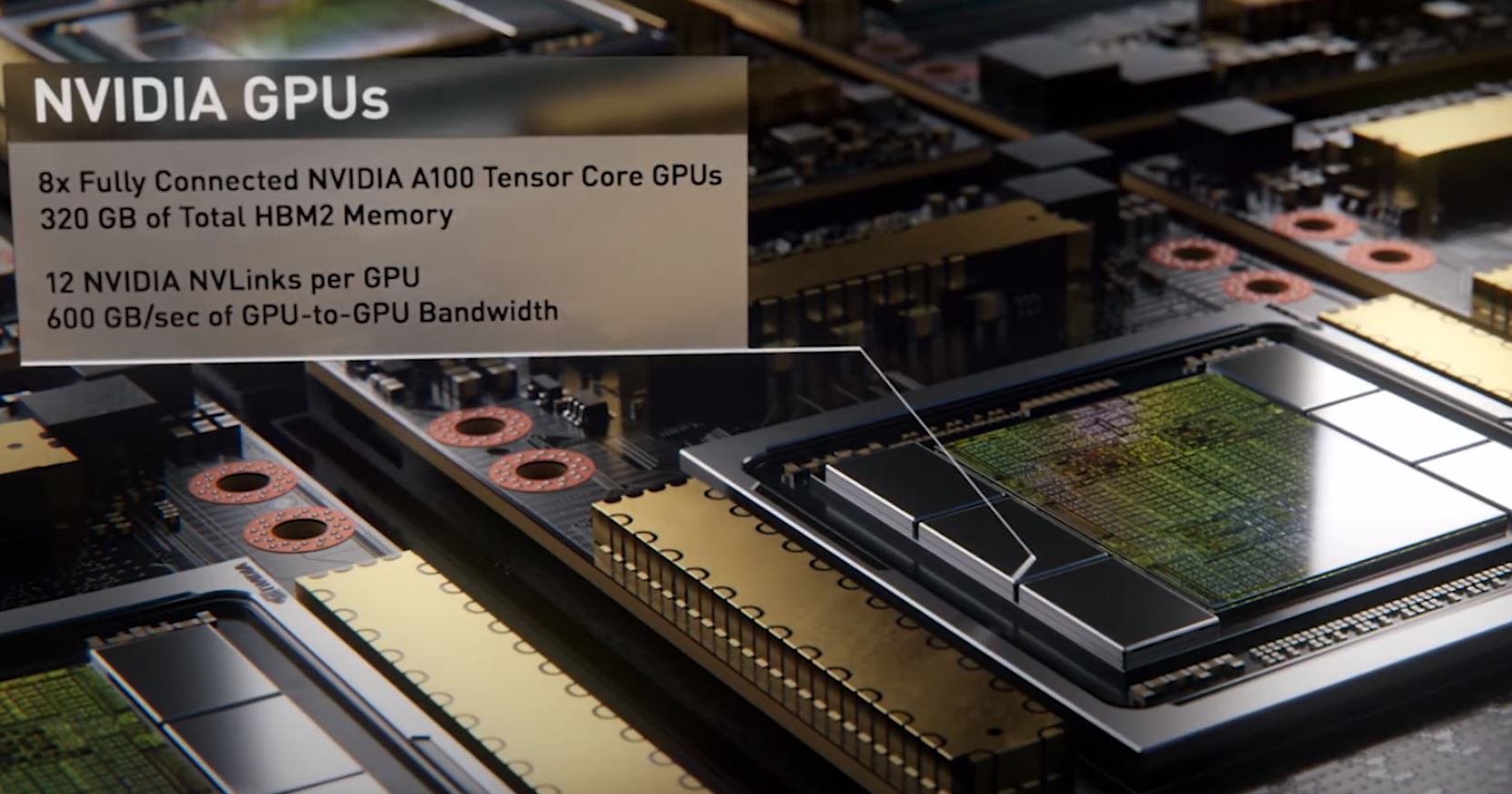

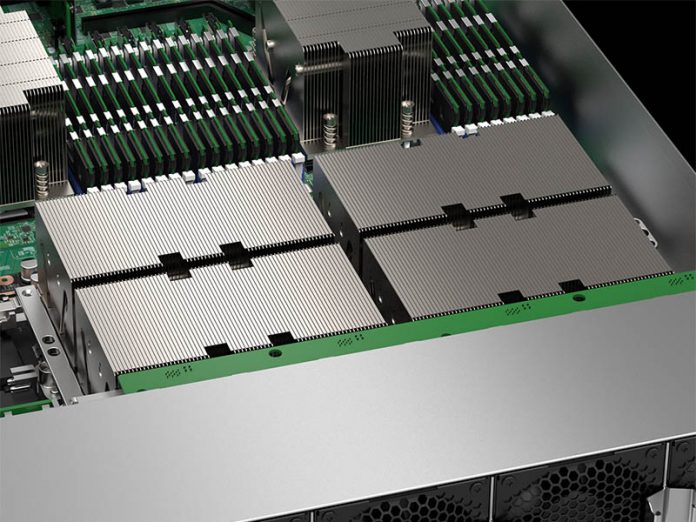

The NVIDIA A100 “Redstone” HGX platform is important since it is a smaller and less complex version of the HGX A100 platform. The Redstone platform incorporates 4x SXM NVIDIA A100 GPUs onto a PCB. As we saw with the Tesla A100 overview, the new GPUs have 12x NVLinks per GPU. Each NVLink provides 50GB/s of GPU-to-GPU bandwidth, for 600GB/s total per GPU.
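As a quick sanity check on the bandwidth figure above, here is a minimal sketch of the arithmetic. The constant and function names are illustrative only; the two input numbers are the ones quoted in the text.

```python
# Figures quoted in the article: 12 NVLinks per A100, 50 GB/s per link.
NVLINKS_PER_GPU = 12
GB_PER_SEC_PER_LINK = 50


def total_nvlink_bandwidth(links=NVLINKS_PER_GPU, per_link=GB_PER_SEC_PER_LINK):
    """Return the aggregate GPU-to-GPU NVLink bandwidth of one GPU in GB/s."""
    return links * per_link


if __name__ == "__main__":
    print(f"{total_nvlink_bandwidth()} GB/s per GPU")
```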

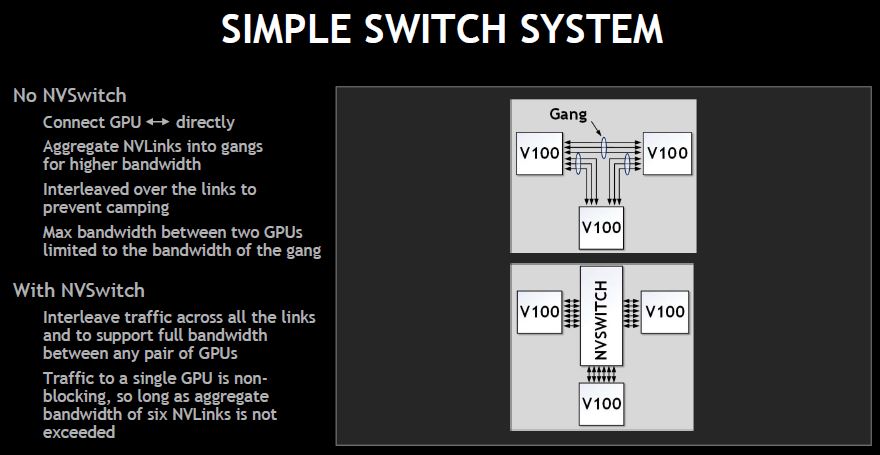

Redstone takes those 12 NVLinks and splits them into three groups, one for each of the other three GPUs on the board. Instead of the NVIDIA NVSwitch solution we see on the HGX A100 platform, we get a mesh topology without switching. NVIDIA has offered both switched and non-switched systems for some time.
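To make the mesh concrete, the sketch below models a 4-GPU full mesh in which each GPU's links are divided evenly among its peers. The even split (four links per peer) is an assumption that follows from 12 links and three groups, not a figure stated in the text, and `mesh_pair_bandwidth` is a hypothetical helper name.

```python
from itertools import combinations


def mesh_pair_bandwidth(num_gpus=4, links_per_gpu=12, gb_per_sec_per_link=50):
    """Map each GPU pair in a switchless full mesh to its direct bandwidth (GB/s).

    Assumes every GPU divides its links evenly among all other GPUs.
    """
    peers = num_gpus - 1
    if links_per_gpu % peers != 0:
        raise ValueError("links cannot be split evenly among peers")
    links_per_peer = links_per_gpu // peers
    return {
        pair: links_per_peer * gb_per_sec_per_link
        for pair in combinations(range(num_gpus), 2)
    }


if __name__ == "__main__":
    for (a, b), bandwidth in mesh_pair_bandwidth().items():
        print(f"GPU{a} <-> GPU{b}: {bandwidth} GB/s")
```

Under these assumptions there are six direct GPU-to-GPU connections, so no pair needs a switch or an intermediate hop to communicate.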

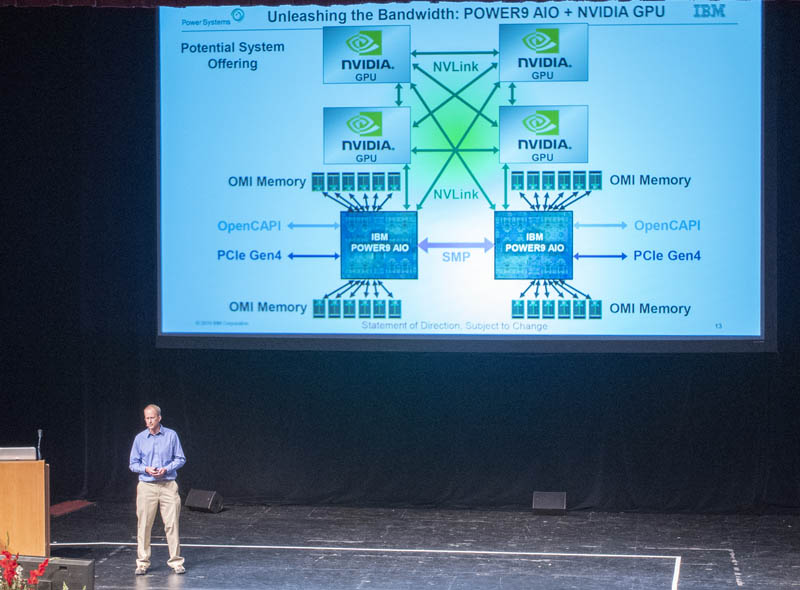

NVIDIA has been using this type of topology for years, and it is the basis for many important compute nodes. For example, Summit uses NVLink directly attached between Tesla V100 GPUs. With four GPUs per node, each GPU can talk directly to every other GPU.

The importance of Redstone is that the smaller HGX A100 4 GPU board uses much less power due to having fewer GPUs and omitting NVSwitch. Leaving NVSwitch out also means one saves on per-node system costs. If you simply want to run anything from 7 MIG instances per Tesla A100 up to 4 Tesla A100s per instance, then this topology can make a lot more sense. Supermicro, along with other vendors, is adding A100 4 GPU systems to its portfolio.
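For a rough sense of the partitioning range mentioned above, this sketch counts the maximum number of MIG instances on one board. It uses the 7-per-GPU and 4-GPU figures from the text; the function name is hypothetical and nothing here calls a real NVIDIA API.

```python
def max_mig_instances(gpus_per_board=4, mig_per_gpu=7):
    """Return the upper bound on MIG instances for a single board."""
    return gpus_per_board * mig_per_gpu


if __name__ == "__main__":
    # Smallest unit: one MIG slice of one GPU. Largest unit: all four GPUs together.
    print(f"Up to {max_mig_instances()} MIG instances per Redstone board")
```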

As with the larger HGX A100 option, these GPUs have PCIe Gen4 connectivity to their hosts. One can use the HGX A100 4 GPU Redstone board with Intel Xeon Scalable. However, to get full PCIe Gen4 performance, one needs to use the AMD EPYC 7002 family or potentially an emerging Arm or POWER option.

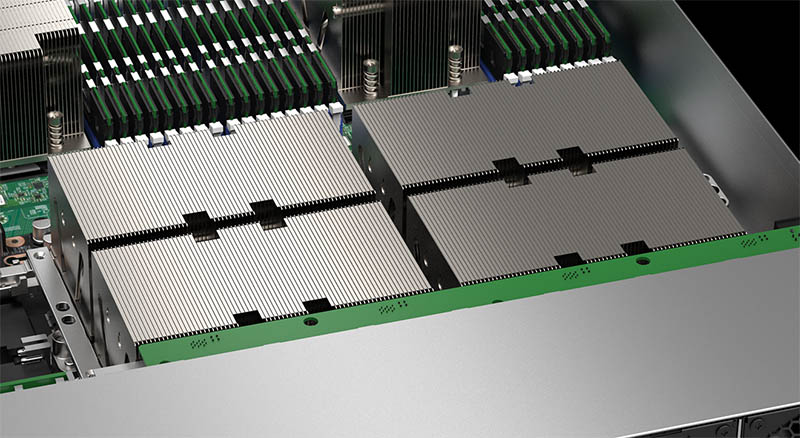

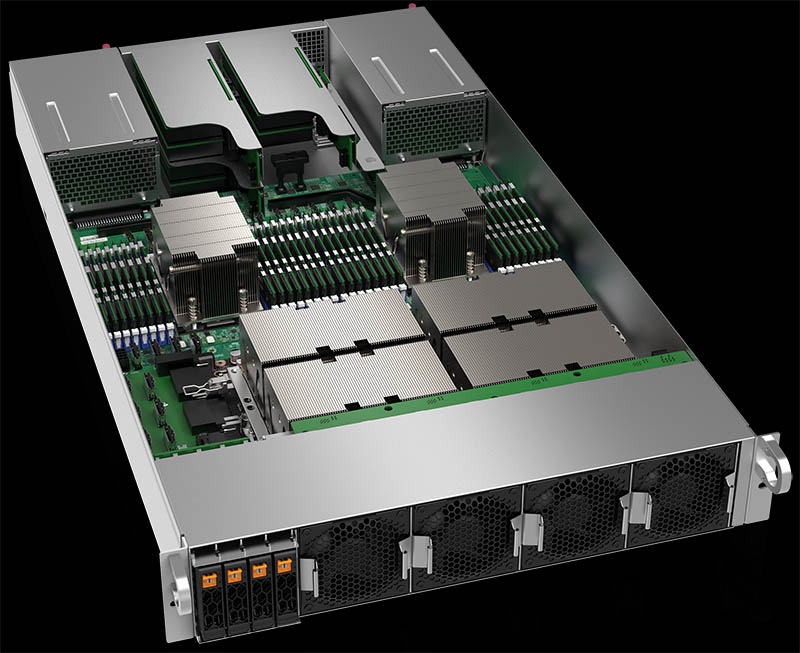

Supermicro has shown a Redstone platform based on the AMD EPYC 7002 Rome series.

This is a great example of how server OEMs can take the HGX A100 4 GPU platform and innovate to provide their own feature sets around the new Ampere generation.

Final Words

For many organizations, the 4x GPU mesh architecture has made a lot of sense. The new HGX A100 4 GPU Redstone platform makes integration of these solutions much easier but also moves some of the design differentiation away from NVIDIA’s partners. Still, this seems to make sense from an industry perspective. Other companies, such as Dell, have focused on these 4x Tesla GPU compute nodes for their customers instead of pushing larger solutions. For customers who want the smaller, less costly, and less complex form factor, Redstone makes a lot of sense.

6 HBM stack but only 5120-bit? so 5 stack + 1 for ECC?

Jon

Not a fully enabled product yet – and would be my guess that yes the 6th stack would be for sideband/native HBM ECC.

Any ideas on how much it costs? The HGX-2 costs $200k. Wonder if Redstone is in the realm of small startup budget.