We do take reader feedback, and one request we get a few times per week relates to our DeepLearning01 and DeepLearning02 builds, specifically the value comparison chart of GPUs we published in Q4 2016. The thesis of those two builds was this: when you are just starting out in the world of GPU compute, it is unlikely that you are going to fully utilize a $500+ GPU. Instead, it makes sense to optimize on cost, to some degree. You can then save money for the inevitable newer/ cheaper cards that will come out as your skills improve. In short: value-based deep learning/ AI purchases. We are constraining ourselves to models under $1000, so cards like the Titan Xp fall outside that range and are likely beyond what a newcomer to the field needs in a learning GPU.

NVIDIA Deep Learning / AI GPU Value Comparison Q2 2017 Update

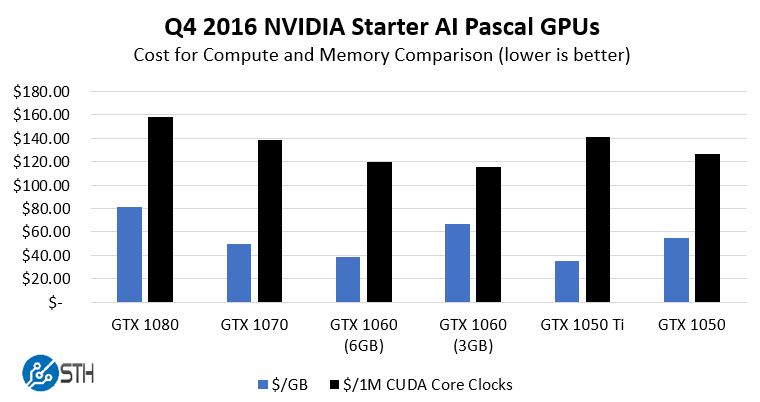

We are going to start with the last chart we published in Q4 2016. This was before the NVIDIA GTX 1080 Ti was launched and totally changed the landscape.

The NVIDIA GTX 1080 price moved significantly when the GTX 1080 Ti launched, and other cards saw price decreases as well.

We have always used reference specs and base clocks since we want to show conservative figures. Also, the reference blower designs are very popular because their rear exhaust works well in higher-density workstation/ server designs.

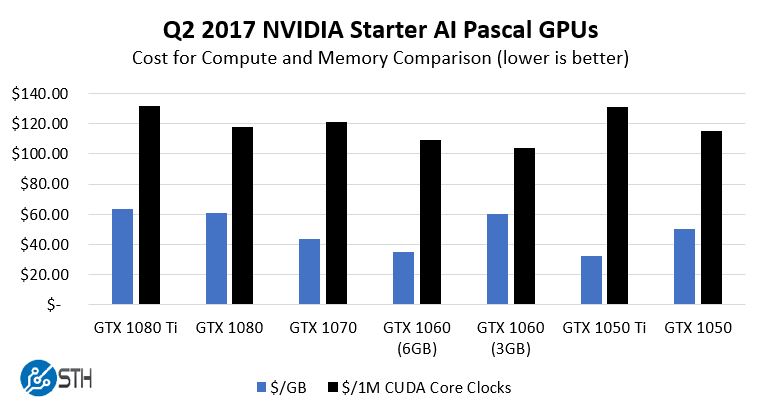

Here is the current view as of publication, using retail prices we saw on Amazon the morning of May 12, 2017:

A key takeaway is that with the introduction of the 1080 Ti, pricing has normalized and the cost per CUDA Core Clock has dropped significantly. While the max value in Q4 2016 was around $159/ 1M CUDA Core Clocks, the max on our chart today is under $132. Likewise, the population average has moved from over $133 to under $119. In terms of memory, our cost per GB has fallen by about 10% on average.
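To make the chart metrics concrete, here is a minimal sketch of how a dollars per 1M CUDA Core Clocks figure and a dollars per GB figure can be computed. It assumes the core-clock metric is the card price divided by (CUDA cores × reference base clock in MHz), scaled to millions. Core counts, base clocks, and memory sizes are NVIDIA reference specs; the prices are placeholders for illustration, not the Amazon prices behind our chart.

```python
from dataclasses import dataclass


@dataclass
class Card:
    name: str
    cuda_cores: int
    base_clock_mhz: int
    memory_gb: int
    price_usd: float  # placeholder price, NOT the chart's Amazon price


def dollars_per_million_core_clocks(card: Card) -> float:
    """Price divided by (CUDA cores x base clock in MHz), scaled to 1M."""
    core_clocks = card.cuda_cores * card.base_clock_mhz
    return card.price_usd / (core_clocks / 1_000_000)


def dollars_per_gb(card: Card) -> float:
    """Price divided by onboard memory in GB."""
    return card.price_usd / card.memory_gb


# Reference specs (cores, base clock, memory) with illustrative prices.
cards = [
    Card("GTX 1080 Ti", 3584, 1480, 11, 699.0),
    Card("GTX 1080", 2560, 1607, 8, 499.0),
    Card("GTX 1070", 1920, 1506, 8, 379.0),
    Card("GTX 1060 6GB", 1280, 1506, 6, 229.0),
]

for card in cards:
    print(
        f"{card.name}: ${dollars_per_million_core_clocks(card):.2f} per 1M core clocks, "
        f"${dollars_per_gb(card):.2f} per GB"
    )
```

Swapping in current street prices is all it takes to redo the comparison. Using base clocks rather than boost clocks keeps the figures conservative, in line with our use of reference specs.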

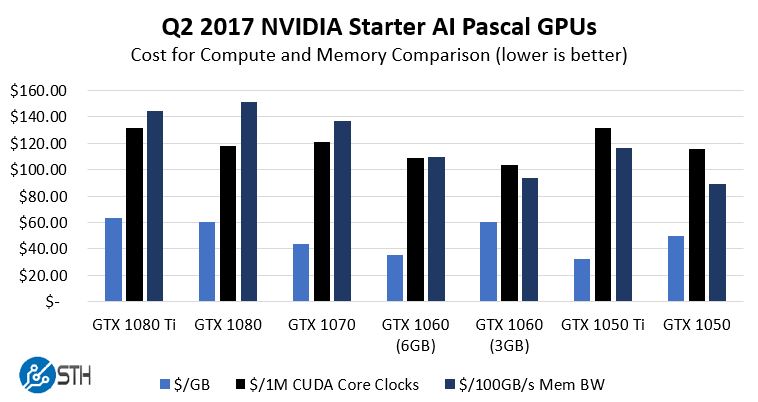

One of the other requests we have received a few times was to add memory bandwidth to the mix, so we created a metric of dollars per 100GB/s of memory bandwidth. We use 100GB/s as the unit so that the values land on a scale that is visible on our chart.
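The bandwidth metric works the same way. A minimal sketch, assuming it is simply the card price divided by reference memory bandwidth in units of 100GB/s; the bandwidth figures are NVIDIA reference specs and the prices are illustrative placeholders.

```python
def dollars_per_100gbps(price_usd: float, bandwidth_gbps: float) -> float:
    """Price per 100GB/s of memory bandwidth (lower is better value)."""
    return price_usd / (bandwidth_gbps / 100.0)


# (placeholder price in USD, reference memory bandwidth in GB/s)
bandwidth = {
    "GTX 1080 Ti": (699.0, 484.0),
    "GTX 1080": (499.0, 320.0),
    "GTX 1070": (379.0, 256.0),
    "GTX 1060 6GB": (229.0, 192.0),
}

for name, (price, gbps) in bandwidth.items():
    print(f"{name}: ${dollars_per_100gbps(price, gbps):.2f} per 100GB/s")
```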

Here the GTX 1060 actually performs very well from a value perspective. The GTX 1070 is also a compelling value with more compute, memory and memory bandwidth.

Which NVIDIA GPUs to Get For Starting Deep Learning/ AI

There are a lot of nuances to this, but we wanted to provide some guidance on what to get. Right now, if you are budget constrained, the NVIDIA GTX 1060 6GB cards are where the value is. The NVIDIA GTX 1060 3GB and GTX 1050 (2GB) cards have such small memory footprints that we suggest skipping them for this purpose. For a handful of dollars more, the 6GB GTX 1060 is the clear choice, since it offers significantly more headroom for fitting models in memory.
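A back-of-the-envelope estimate shows why memory headroom matters. The sketch below uses a common rule of thumb, not something we measured: it assumes 32-bit weights and an optimizer like Adam that keeps two extra buffers per parameter, and it ignores activations, which grow with batch size and can be substantial. The function name is hypothetical.

```python
def training_state_gb(num_params: int, bytes_per_param: int = 4, copies: int = 4) -> float:
    """Rough memory for model state during training, in GB (1e9 bytes).

    copies=4 assumes FP32 weights + gradients + two optimizer moment
    buffers (as with Adam). Activations, batch size, and framework
    overhead are NOT included, so real usage will be higher.
    """
    return num_params * bytes_per_param * copies / 1e9


for params in (25_000_000, 100_000_000, 300_000_000):
    print(f"{params:>11,} params -> ~{training_state_gb(params):.1f} GB before activations")
```

Even before activations, a few hundred million parameters can outgrow a 3GB card, which is where the extra memory of the 6GB variant pays off.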

We suggest that if you are buying a GPU today, just get Pascal. Let the gamers get older-generation (Maxwell and older) cards. Not only are NVIDIA’s architectures getting better for AI with each generation, but they also have better power consumption characteristics. Tools and applications will also deprecate older architectures over time as those architectures lack newer features. That is part of the reason Fermi Tesla cards are so inexpensive.
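If you are not sure which generation a card belongs to, its CUDA compute capability tells you: Pascal parts report 6.x, Maxwell 5.x, Kepler 3.x, and Fermi 2.x. The deviceQuery sample that ships with the CUDA toolkit prints this value. The helper below is a hypothetical sketch that takes that value as a string; it is not part of any NVIDIA tool.

```python
# Compute capability major version -> NVIDIA architecture, up to Pascal.
ARCH_BY_MAJOR = {2: "Fermi", 3: "Kepler", 5: "Maxwell", 6: "Pascal"}


def architecture(compute_capability: str) -> str:
    """Map a compute capability string like "6.1" to an architecture name."""
    major = int(compute_capability.split(".")[0])
    if major >= 7:
        return "newer than Pascal"
    return ARCH_BY_MAJOR.get(major, "unknown")


def is_pascal_or_newer(compute_capability: str) -> bool:
    """True for compute capability 6.0 and above."""
    return int(compute_capability.split(".")[0]) >= 6


for cc in ("2.0", "5.2", "6.1"):
    print(cc, architecture(cc), is_pascal_or_newer(cc))
```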

Within the current generation here is our best guidance:

- $690+ to spend: NVIDIA GTX 1080 Ti

- $475-$550 to spend: NVIDIA GTX 1080

- $350-$400 to spend: NVIDIA GTX 1070

- $210-$250 to spend: NVIDIA GTX 1060 6GB

- $130-$150 to spend: NVIDIA GTX 1050 Ti 4GB

- Under $130 to spend: Beg, borrow, search couches, hold a bake sale to get more funding

You may notice there are some big holes in the above list. Remember, prices have fallen over the past six months and will likely fall again when Volta is released in a few quarters. AMD Vega will also likely put pressure on some price segments of NVIDIA’s lineup. If you cannot hit the minimum for the next performance tier, our suggestion is to put money away. Later, selling your current GPU and combining the proceeds with the money you saved will let you move up to a higher performance tier on the next generation, keeping your hardware aligned with your abilities.
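The tiers in our list, including the gaps between them, can be written as a small lookup. This is a hypothetical helper for illustration: it picks the highest tier whose minimum your budget meets and reports how far you are from the next tier up, which is the amount we would suggest putting away. The tier minimums come from the list above and will go stale as prices move.

```python
from typing import Optional, Tuple

# Minimum budget (USD) per tier from our Q2 2017 guidance, highest first.
TIERS = [
    (690, "NVIDIA GTX 1080 Ti"),
    (475, "NVIDIA GTX 1080"),
    (350, "NVIDIA GTX 1070"),
    (210, "NVIDIA GTX 1060 6GB"),
    (130, "NVIDIA GTX 1050 Ti 4GB"),
]


def recommend(budget_usd: float) -> Tuple[str, Optional[float]]:
    """Return (card, dollars short of the next tier up).

    The shortfall is None when the budget already reaches the top tier.
    """
    next_min = None
    for minimum, card in TIERS:
        if budget_usd >= minimum:
            shortfall = None if next_min is None else next_min - budget_usd
            return card, shortfall
        next_min = minimum
    # Below every tier: report how far we are from the cheapest one.
    return "Save up (beg, borrow, hold a bake sale)", TIERS[-1][0] - budget_usd


print(recommend(300))  # lands in the gap between the GTX 1060 6GB and GTX 1070
```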

An Imperfect Example: Training an Adversarial Network

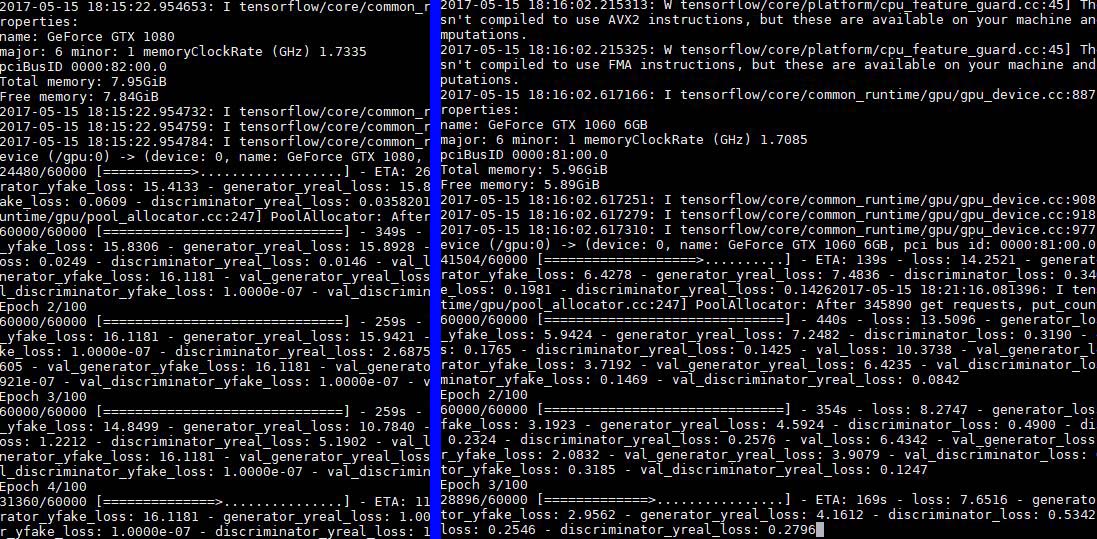

Here is an imperfect example of why the advice we often hear is simply to spend more on a better GPU. We are training an adversarial network using CUDA-enabled TensorFlow via nvidia-docker over 100 epochs. While this is not the most optimized example, it is the kind of thing you will likely do in the early days of learning.

We had this running on several GPUs at once, but here we compare the least expensive GTX 1060 6GB we could find against the least expensive GTX 1080 8GB we could find:

It turns out that in this model, the extra 2GB helps quite a bit. The jump from the GTX 1070 to the GTX 1080 is not as large as we would hope. Still, the net benefit is about a 30% gain in training time, mostly stemming from the additional memory (again, this is a poorly optimized model). That is almost a 3-hour difference in training time, which in terms of real-user impact is huge. There are clearly software optimizations possible here, but one has to ask whether saving $400 by buying a GTX 1060 6GB over a GTX 1080 Ti is even worth it if you can get hours back and more iterations in.
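One way to frame that question is to spread the price gap over the training time it saves. A minimal sketch, assuming the roughly $400 gap and roughly 3 hours saved per run from this example stay constant across runs (real savings vary with the model and how well it is optimized); the run counts are hypothetical inputs.

```python
def dollars_per_hour_saved(price_gap_usd: float, hours_saved_per_run: float, runs: int) -> float:
    """Extra GPU cost divided by the total training hours it saves."""
    return price_gap_usd / (hours_saved_per_run * runs)


# ~$400 price gap and ~3 hours saved per run, as in the example above.
for runs in (1, 10, 50):
    print(f"{runs:>2} runs: ${dollars_per_hour_saved(400, 3, runs):.2f} per hour saved")
```

The more often you retrain, the cheaper each saved hour becomes, which is the argument for buying more GPU than a first model seems to need.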

A Word on Multiple GPUs

If you are thinking about multiple GPUs, get the GTX 1080 Ti, or potentially the GTX 1080 depending on what you are doing and your budget. If you are able to scale past one GPU, then you are probably not in your first few days of machine learning/ deep learning/ AI work. At that point you are also likely thinking about InfiniBand or Omni-Path networking, or at minimum 40GbE with RDMA support.

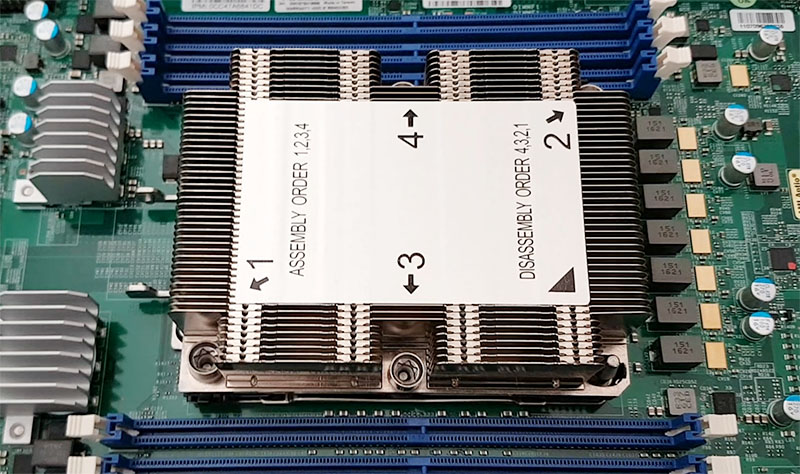

If you think you will expand to multiple GPUs, we do suggest looking at reference cooler “blower” models. When it comes time to fit them into rackmount servers, they are better optimized for airflow. Furthermore, some cards (e.g. the ASUS STRIX GTX 1070 and GTX 1080) have taller PCBs that may not fit in some GPU compute servers.

In short, multi-GPU setups are out of scope for this piece, and by that stage you likely know much more about the hardware you need.

What about AMD GPUs?

If you are starting out, skip them for machine learning, deep learning, and AI. They game well, and they are great for cryptocurrency mining. However, if you are getting into this field, you are likely to be using tools that others have already provided and following publicly available guides. TensorFlow support is a great example. If you want to run TensorFlow on a CPU, it works out of the box. If you then install an NVIDIA GPU, even using nvidia-docker, it works out of the box. If you try using an AMD GPU, you are going to spend a lot of time just getting things working rather than training or using models.

Our advice: AMD is not what you want to start with. Start with NVIDIA, then consider switching to AMD if/ when it makes sense for you.

What about Knights Landing/ Intel Xeon Phi x200?

We did a review of the Intel / Supermicro Knights Landing Xeon Phi x200 development system. We would say KNL is much easier to use with many frameworks than AMD GPUs, simply because Intel is pouring money into tooling. Intel knows it is behind, but it is certainly trying, with efforts such as the Intel MKL.

Our take is that if you are using one system, go NVIDIA. When you start moving to clusters, the Intel Xeon Phi x200 series makes a lot more sense, in no small part because Omni-Path is inexpensive for a 100Gbps interconnect and because the resources/ expertise you likely have available at cluster scale are much greater.

Our advice: an interesting option for clusters, but if you are getting your feet wet with your first machine learning system, skip the $5000 option that will require additional work to optimize.

Final Words

Someone once told me that the rule for getting into AI/ deep learning is basically to get the biggest NVIDIA GPU you can reasonably afford. The reason is that while your initial models may take seconds to train, larger models will take many hours. If you are a casual learner (e.g. someone spending 4-8 hours/ week), then the GTX 1070 is likely the biggest GPU you will need before Volta, unless you have a very good starting foundation. If you are spending many hours per day on AI/ deep learning, the GTX 1080 Ti is a good option.

Timely article. I just shared that around the office.

We’re launching a program to update some of our team’s desktops with GPUs and giving 5% time to learning deep learning. If even one person builds something useful it’ll pay for the whole outlay.

Your example may not be the best scaling, but I’ve seen enough cases where you’re running something in a less-than-ideal way and the choice at 5PM is to run it overnight and go home or to work until 8 optimizing it. You’re forgetting that oftentimes those learning won’t know how to optimize. Since there are so many newbies in the field, I can see how it’s valid to start by overbuying on GPU too.

-AW

Which one is best:

2x GTX 1070 (2 × 1920 CUDA cores) or a GTX 1080 Ti (3584 CUDA cores)?

Get a GTX 1080 Ti over 2x 1070s. It is easier to scale a model bigger on one GPU before going to multiple GPUs.

Will 2x GTX 1080 Ti have a bottleneck because of the 28 PCI Express lanes of the Intel Core i7-7820X, since they will run in a 16+8 lane configuration?

It is not about value; money does not matter.