Let us get down to business. The Gigabyte H261-Z61 is a 2U 4-node (2U4N) AMD EPYC platform and a very close cousin of the Gigabyte H261-Z60 we reviewed previously. There is, however, one major benefit to this new design: more NVMe storage in each node. With eight 32-core AMD EPYC 7001 “Naples” generation CPUs per chassis and an upgrade path to the upcoming 64-core “Rome” parts, compute density is excellent. Once the Rome generation launches, 2U4N chassis filling 40U of rack space (leaving some room for additional networking) will be able to hold up to 10,240 cores and 20,480 threads. With the Gigabyte H261-Z61, that same footprint will also hold 320 U.2 NVMe SSDs.
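The density figures above follow from simple multiplication. The sketch below reproduces them under stated assumptions: 40U of usable rack space, 64-core Rome CPUs with two threads per core (SMT), and four U.2 NVMe bays per node, which is the per-node count the 320-drive total implies. The constant names are illustrative, not taken from any Gigabyte tool.

```python
# Rack-density arithmetic for 2U4N chassis (a sketch; all constants are assumptions
# chosen to match the figures quoted in the review, not values from a spec sheet).

RACK_UNITS = 40          # usable space, leaving room for networking gear
CHASSIS_HEIGHT_U = 2     # 2U4N chassis
NODES_PER_CHASSIS = 4
SOCKETS_PER_NODE = 2
CORES_PER_SOCKET = 64    # upcoming "Rome" parts
THREADS_PER_CORE = 2     # SMT enabled
NVME_BAYS_PER_NODE = 4   # assumption implied by the 320-drive figure


def rack_density(rack_units: int = RACK_UNITS) -> dict:
    """Return chassis, node, core, thread, and NVMe SSD counts for a rack height."""
    chassis = rack_units // CHASSIS_HEIGHT_U
    nodes = chassis * NODES_PER_CHASSIS
    cores = nodes * SOCKETS_PER_NODE * CORES_PER_SOCKET
    return {
        "chassis": chassis,
        "nodes": nodes,
        "cores": cores,
        "threads": cores * THREADS_PER_CORE,
        "nvme_ssds": nodes * NVME_BAYS_PER_NODE,
    }


if __name__ == "__main__":
    print(rack_density())
```

Changing `CORES_PER_SOCKET` to 32 gives the equivalent figures for today's Naples parts.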

In this review, we are going to show you around the system. There are many similarities between the Gigabyte H261-Z61 and the H261-Z60, which is good: systems integrators and customers can use either platform in a familiar form factor. After touching upon the hardware, we are going to discuss management. Finally, we will show some performance figures and give our final thoughts.

Gigabyte H261-Z61 Hardware Overview

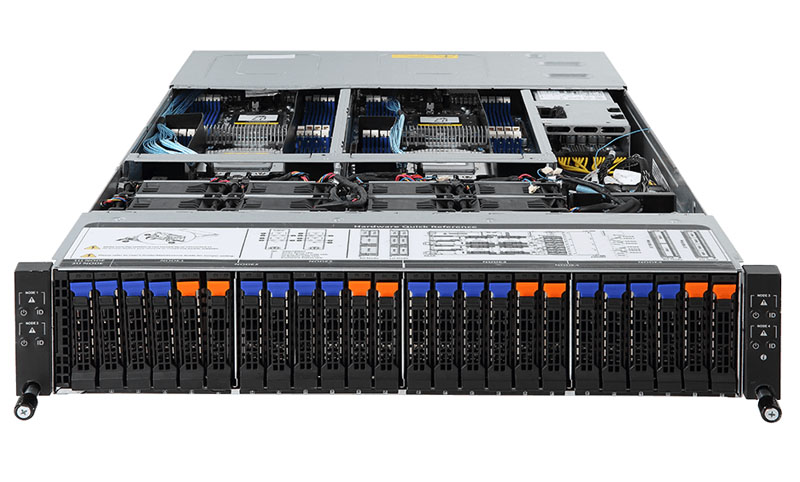

The Gigabyte H261-Z61 is a 2U chassis. From the front, it looks like many other 2U 24-bay storage chassis on the market, with a few differences. On each rack ear, there is a pair of node power and ID buttons. Our configuration provided each node with 4x U.2 2.5″ NVMe front panel bays and 2x SATA III 6.0Gbps front drive bays. That means there are a total of 8x SATA III bays (or optionally SAS with SAS RAID cards or HBAs) as well as 16x U.2 NVMe drives per chassis. We think most will opt for the SATA configuration.

Just above the front of the chassis, Gigabyte affixes a sticker with basic node information, allowing one to navigate the system quickly. This type of on-chassis documentation was previously found only on servers from large traditional vendors such as HPE and Dell EMC. Gigabyte has taken a massive step forward in recent years by paying attention to details as small as these labels.

As a note, we tried hot-plugging U.2 NVMe SSDs from Intel and Samsung into running nodes. AMD and Intel handle NVMe hot-swap differently. Unlike some AMD EPYC systems we have seen in the past, Gigabyte’s system did a great job of not freezing. On the other hand, the nodes needed a reboot with every drive we tried (Intel DC P3600, P3700, and P4510, and Samsung PM983, PM1715, and PM1725).

Since this is a 2U 4-node design, there are four long nodes, each of which pulls out and can be hot-swapped from the rear of the chassis. This makes servicing extremely easy. With a rack of these servers, one can move a node into another chassis without having to deal with rack rails. From a servicing perspective, the 2U4N design works very well.

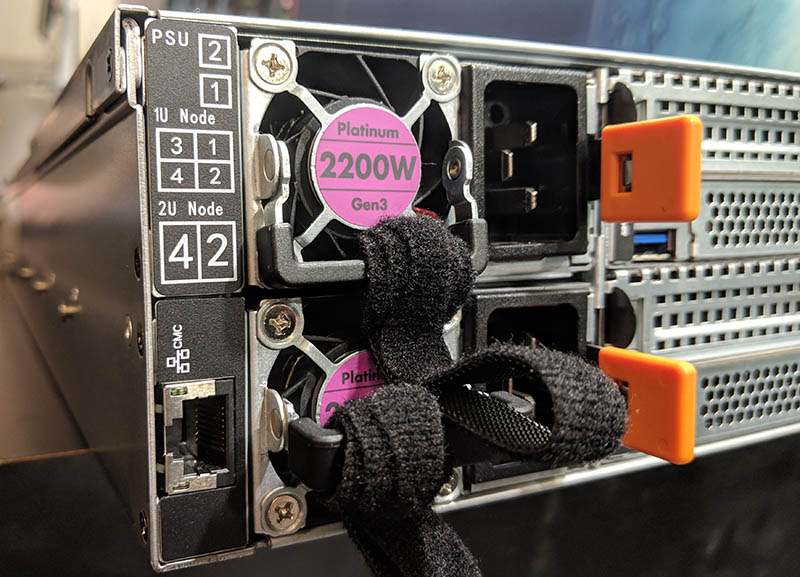

Power is provided to the chassis by dual 80 PLUS Platinum rated 2.2kW power supplies. These are the same power supplies we saw in our Gigabyte G481-S80 8x NVIDIA Tesla GPU Server Review. One of the big benefits of the 2U4N design is that two power supplies provide redundancy for all four nodes, instead of the eight power supplies that four individual 1U servers would need. These power supplies can run at higher efficiency, which lowers overall power consumption for the four servers. Using a 2U4N server like the Gigabyte H261-Z61 also reduces cabling from eight power cables to two.
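One plausible reason for the efficiency gain is load fraction: power supply efficiency typically falls off at light load, and a redundant pair splits the load so each unit runs lightly. The sketch below compares per-PSU load for four 1U servers and one 2U4N chassis. `NODE_WATTS` and `PSU_1U_WATTS` are hypothetical values for illustration only, not measurements from our testing, and even load sharing across each redundant pair is assumed.

```python
# Hypothetical per-PSU load comparison: four 1U servers vs. one 2U4N chassis.
# NODE_WATTS and PSU_1U_WATTS are illustrative assumptions, not measured values.
# Both designs are assumed to share load evenly across a redundant PSU pair.

NODES = 4
NODE_WATTS = 400        # hypothetical per-node draw under load
PSU_1U_WATTS = 800      # hypothetical rating of a 1U server's PSU
PSU_2U4N_WATTS = 2200   # the 2.2kW units used in the H261-Z61


def per_psu_load(total_watts: float, psu_count: int, psu_rating: float) -> float:
    """Fraction of rated capacity each PSU carries under even load sharing."""
    return total_watts / psu_count / psu_rating


one_u_psus = NODES * 2  # every 1U server brings its own redundant pair
one_u_load = per_psu_load(NODE_WATTS, 2, PSU_1U_WATTS)
chassis_load = per_psu_load(NODE_WATTS * NODES, 2, PSU_2U4N_WATTS)

print(f"4x 1U: {one_u_psus} PSUs, each at {one_u_load:.0%} load")
print(f"2U4N:  2 PSUs, each at {chassis_load:.0%} load")
```

With these assumed numbers, each 2U4N supply runs at a higher fraction of its rating than each 1U supply; where that lands on a real efficiency curve depends on the specific PSUs and workload.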

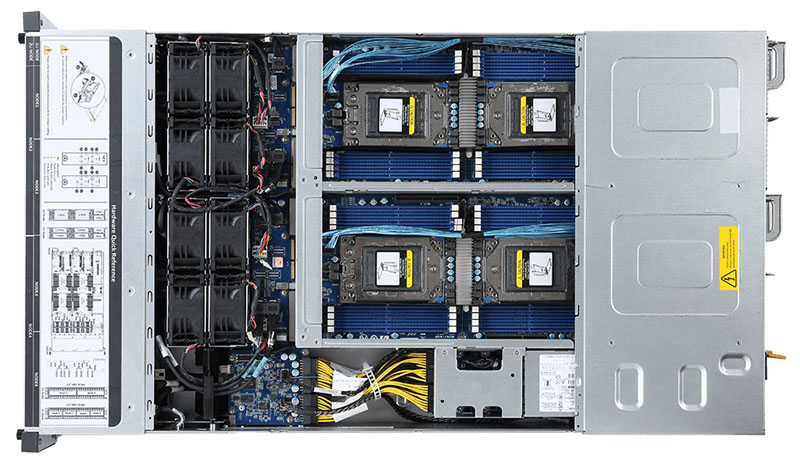

With the top of the server opened up, one can see four pairs of chassis fans cooling the dual-processor nodes. With only eight fans per chassis, there are fewer parts to fail than in four traditional 1U servers, and the airflow from the fans can be used more effectively, leading to power savings over less dense options. We have also found that this design uses less power than 2U4N versions with 40mm fans affixed to the node trays. If you are trying to realize power savings, this is the design you want.

For those wondering why there is not a fifth set of fans: the power supplies pull a ton of air through the chassis. The drives in front of this airflow section use under 100W, while each of the other sections has several times as much thermal load to cool. We tested the design, and it worked even with hot NVMe SSDs.

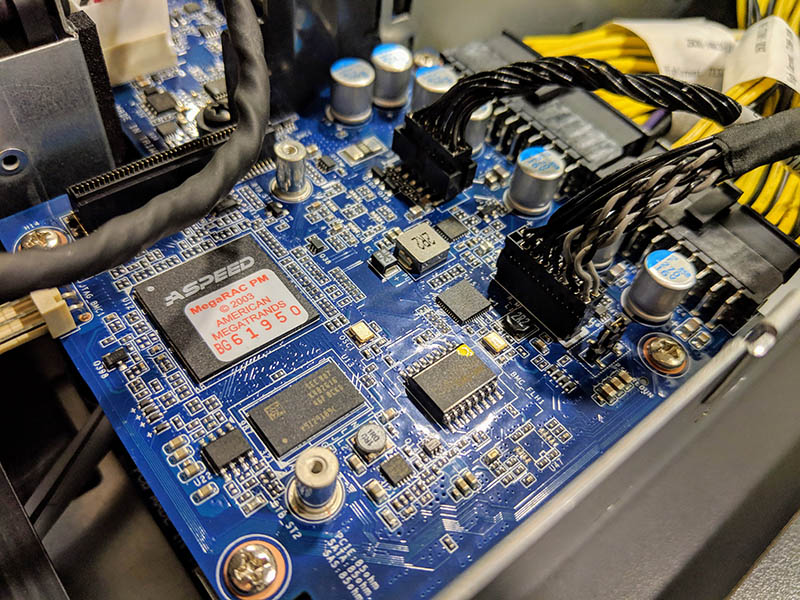

Our test Gigabyte H261-Z61 includes a Central Management Controller (CMC). The CMC has its own baseboard management controller and lets a single connection reach all four node BMCs, reducing cabling further. We think Gigabyte would do well to continue developing this concept as it expands its multi-node portfolio.

From a chassis design perspective, we think that the Gigabyte H261-Z61, like the H261-Z60, is well designed and shows Gigabyte’s evolution as a manufacturer.

Gigabyte H261-Z61 Nodes

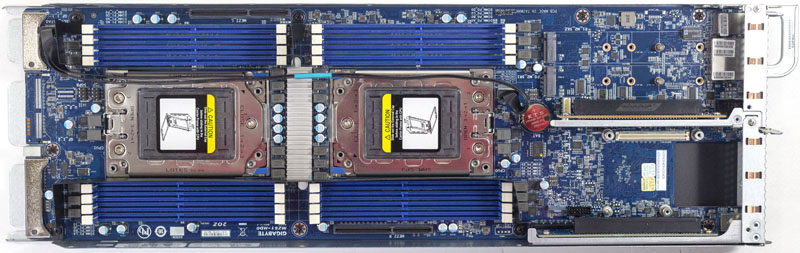

Each Gigabyte H261-Z61 node is very similar to the H261-Z60 nodes we have seen before. The hot-swap tray contains a Gigabyte MZ61-HD0 motherboard for dual AMD EPYC processors, with 8 DIMMs per CPU and 16 DIMMs total.

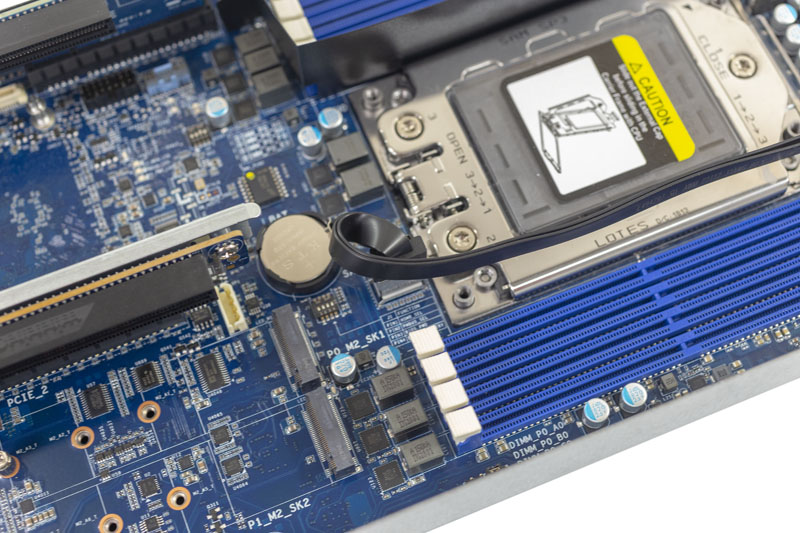

Aside from the front-panel NVMe SSD bays, each MZ61-HD0 node has room onboard for five PCIe-based expansion devices: two low-profile, half-length PCIe x16 slots, an OCP mezzanine card slot, and two M.2 slots for NVMe SSDs up to 22110 (110mm) in size.
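M.2 size codes such as 22110 encode the module dimensions: the first two digits are the width in millimeters and the remaining digits are the length. The small helper below decodes them; `parse_m2_size` is a hypothetical name used only for illustration.

```python
# Decode an M.2 form-factor size code such as "2280" or "22110".
# Convention: first two digits = module width (mm), remaining digits = length (mm).


def parse_m2_size(code: str) -> tuple[int, int]:
    """Return (width_mm, length_mm) for an M.2 size code like '22110'."""
    if not code.isdigit() or len(code) not in (4, 5):
        raise ValueError(f"unexpected M.2 size code: {code!r}")
    return int(code[:2]), int(code[2:])


for code in ("2242", "2280", "22110"):
    width, length = parse_m2_size(code)
    print(f"M.2 {code}: {width} mm wide, {length} mm long")
```

A 22110 slot therefore accepts drives 30mm longer than the common 2280 size, which is what leaves room for power-loss-protection capacitors on enterprise M.2 SSDs.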

We hope that Gigabyte can eventually make the PCIe x16 expansion slots tool-less, since installing cards currently requires several screws. Most 2U4N nodes like this use screws primarily for structural rigidity, ensuring the node stays the proper size to fit neatly into the larger chassis. Otherwise, nodes can get caught while sliding in and out of the chassis.

Here is a test node shot we did earlier with a Mellanox 10GbE OCP mezzanine card installed, as well as an SK hynix M.2 22110 NVMe SSD. We also set up a node with a Broadcom 25GbE dual-port OCP NIC and an Intel Optane 905P M.2 380GB SSD. Given the air pre-heated by the CPUs, we found that the Optane drive required a heatsink here to run reliably with higher-wattage CPUs like the AMD EPYC 7601. Offering two full M.2 22110 (110mm) slots means that Gigabyte can support two power-loss-protected NVMe SSDs, as well as these Optane drives, which many competitors cannot support in 2U4N designs. This is a differentiating design feature for the Gigabyte H261-Z61.

Each node also has two USB 3.0 ports, a VGA port, dual 1GbE networking, and a built-in management port.

As with the H261-Z60, the Gigabyte H261-Z61 retains the same SATA cable running through the chassis. The MZ61-HD0 node did not require additional cabling for the NVMe configuration. We wish Gigabyte did not use this cabled solution, since it adds something to watch out for during installation.

Like its SAS/SATA sibling, we were able to configure the Gigabyte H261-Z61 with everything from 32-core “Naples” generation processors to even the new high-frequency AMD EPYC 7371 processors.

Next, we are going to take a look at the system topology before we move on to the management aspects. We will then test these servers to see how well they perform.
