Gigabyte H261-Z61 Power Consumption to Baseline

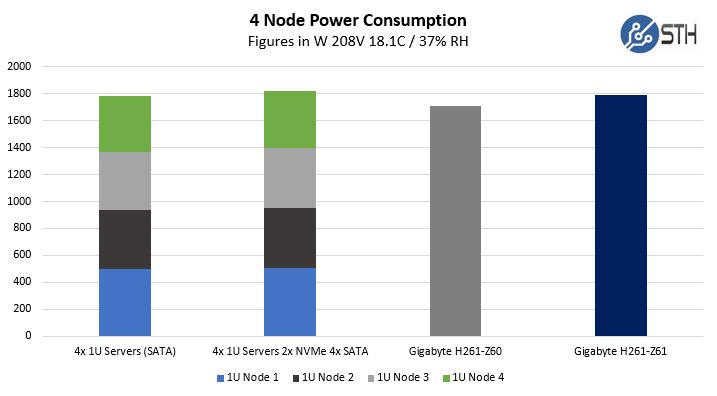

One of the other, sometimes overlooked, benefits of the 2U4N form factor is power consumption savings. We ran our standard STH 80% CPU utilization workload, which is a common figure for a well-utilized virtualization server, and ran it in our "sandwich" comparison between the four 1U servers and the Gigabyte H261-Z61.

Here we saw only about a 2% decrease in power consumption versus the four 1U servers once we equipped them with two NVMe SSDs and four SATA SSDs each. The nominal difference was close to what we saw between the H261-Z60 and four SATA SSD-equipped 1U servers. However, with slightly higher overall power consumption and a slightly smaller delta, the relative benefit decreased slightly here.

Putting this in context, the Gigabyte H261-Z61 delivers virtually identical performance to four 1U servers while reducing rack space by 50%, power consumption by 2%, the number of power supplies by 75%, and the number of base power, 1GbE, and management cables by 56% (7 vs. 16).
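The percentages above follow from simple before/after counts. As a minimal sketch, the Python below recomputes them. The rack-unit and cable counts come from the text; the power-supply counts (two per 1U server, two in the 2U4N chassis) are our assumption, chosen to be consistent with the quoted 75% figure.

```python
def pct_reduction(before: float, after: float) -> float:
    """Percentage reduction going from `before` to `after`."""
    return (before - after) / before * 100


# (four 1U servers, one 2U4N chassis)
comparisons = {
    "rack units": (4, 2),  # 4x 1U vs. one 2U chassis
    "power supplies": (8, 2),  # ASSUMPTION: two PSUs per 1U server, two per chassis
    "power/1GbE/management cables": (16, 7),  # counts from the text
}

for name, (before, after) in comparisons.items():
    print(f"{name}: {before} -> {after} ({pct_reduction(before, after):.0f}% fewer)")
```

The cable figure works out to 56.25%, which the text rounds to 56%.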

In the hyperscale community, we have heard figures of about $6 in savings per watt. This comparison is under load rather than at idle, but using that figure we can still see roughly $100-260 in operating cost savings from using this over four 1U servers, just from the reduced power consumption. That is exactly why 2U4N systems are so popular.
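To put the $6 per watt rule of thumb in perspective, the sketch below works backwards from the quoted savings range to the power reduction it implies. It uses only the $6/W figure from the text; the function names are hypothetical and chosen for illustration.

```python
DOLLARS_PER_WATT = 6.0  # rule-of-thumb figure quoted from the hyperscale community


def savings_usd(watts_saved: float) -> float:
    """Operating cost savings for a given reduction in power draw."""
    return watts_saved * DOLLARS_PER_WATT


def implied_watts(savings: float) -> float:
    """Power reduction needed to reach a given dollar savings."""
    return savings / DOLLARS_PER_WATT


for dollars in (100, 260):
    print(f"${dollars} implies about {implied_watts(dollars):.1f} W saved")
```

In other words, the $100-260 range corresponds to roughly 17-43 W less draw under load.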

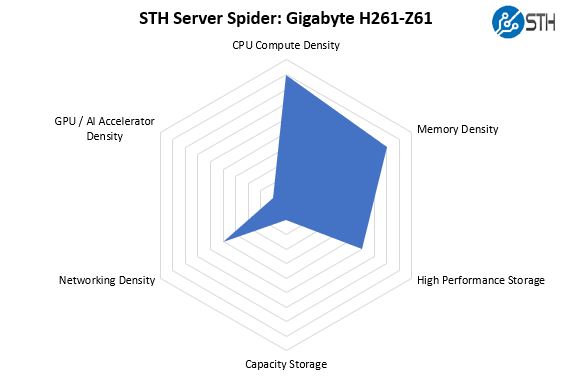

STH Server Spider: Gigabyte H261-Z61

In the second half of 2018, we introduced the STH Server Spider as a quick reference to where a server system’s aptitude lies. Our goal is to give a quick visual depiction of the types of parameters a server is targeted at.

Adding eight NVMe devices to the chassis means that the Gigabyte H261-Z61 has significantly more storage performance than the H261-Z60. Combine that with eight AMD EPYC CPUs and 64 DIMM slots in 2U and one gets an extremely dense configuration.

Final Words

We found the Gigabyte H261-Z61 to be an excellent platform. The addition of eight front NVMe bays, two per node, is a welcome upgrade. Fitting 256 cores, 512 threads, and 8TB of RAM into a 2U4N chassis is impressive in 2019. AMD EPYC “Rome” will further expand core counts, giving this system a strong roadmap.
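The headline totals are straightforward multiplication. In the sketch below, the node, CPU, and DIMM slot counts come from the review, while the 32 cores per CPU, two threads per core, and 128GB per DIMM are not stated directly; they are implied by dividing 256 cores across eight CPUs, 512 threads across 256 cores, and 8TB across 64 DIMM slots.

```python
NODES = 4
CPUS_PER_NODE = 2
DIMM_SLOTS_PER_NODE = 16  # 64 DIMM slots / 4 nodes
CORES_PER_CPU = 32        # implied: 256 cores / 8 CPUs
THREADS_PER_CORE = 2      # implied: 512 threads / 256 cores (SMT)
DIMM_GB = 128             # implied: 8TB / 64 DIMMs

cpus = NODES * CPUS_PER_NODE
cores = cpus * CORES_PER_CPU
threads = cores * THREADS_PER_CORE
ram_tb = NODES * DIMM_SLOTS_PER_NODE * DIMM_GB / 1024  # binary units: 1TB = 1024GB

print(f"{cpus} CPUs, {cores} cores, {threads} threads, {ram_tb:.0f}TB RAM")
```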

Gigabyte’s engineers did a great job of maintaining a high level of expansion capability while keeping the 2U4N form factor. Aside from the CPUs and RAM, one can fit 8x U.2 and 8x M.2 NVMe SSDs, 16x SATA SSDs, 4x OCP NICs, and 16x PCIe x16 half-length low profile cards. Divide those figures by four for the per-node totals. For context, that is mid-range expansion capability compared to the 1U AMD EPYC servers we have seen, which is impressive at twice the density. Intel platforms simply cannot match this.
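Following the suggestion to divide by four, here is a short sketch of the per-node breakdown. The chassis totals are the figures listed above, and the even split across the four nodes is the same assumption the text makes.

```python
NODES = 4

# Chassis-level expansion totals from the text
chassis_totals = {
    "U.2 NVMe SSDs": 8,
    "M.2 NVMe SSDs": 8,
    "SATA SSDs": 16,
    "OCP NICs": 4,
    "PCIe x16 half-length low profile cards": 16,
}

# Each of the four nodes gets an equal share of every resource
per_node = {name: total // NODES for name, total in chassis_totals.items()}

for name, count in per_node.items():
    print(f"{name}: {count} per node")
```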

We also like that the nodes are interchangeable with the Gigabyte H261-Z60 SATA-based system. For those deploying racks of these servers, being able to use the same nodes in either chassis can significantly improve service times.

Our biggest area for improvement would be for the Central Management Controller to continue gaining features. Being able to log in via a single management interface per chassis would be an enormous benefit, and it is an option we would love to see. Even as it stands, the Central Management Controller is a great feature simply for reducing cabling.

Overall, we think that users will be very happy with the Gigabyte H261-Z61 2U4N platform for higher-density AMD EPYC deployments.

Where are the Gigabyte H261-Z61 and H261-Z60 available? I’m having difficulty sourcing them in the US.

Brandon, Gigabyte has several US VARs and distributors. If you still cannot find one shoot me an e-mail patrick@ this domain and I can forward it on.

Can the management controller be configured to expose a low traffic management network to the host operating system?