ASRock Rack 1U2N2G-ROME/2T Internal Hardware Overview

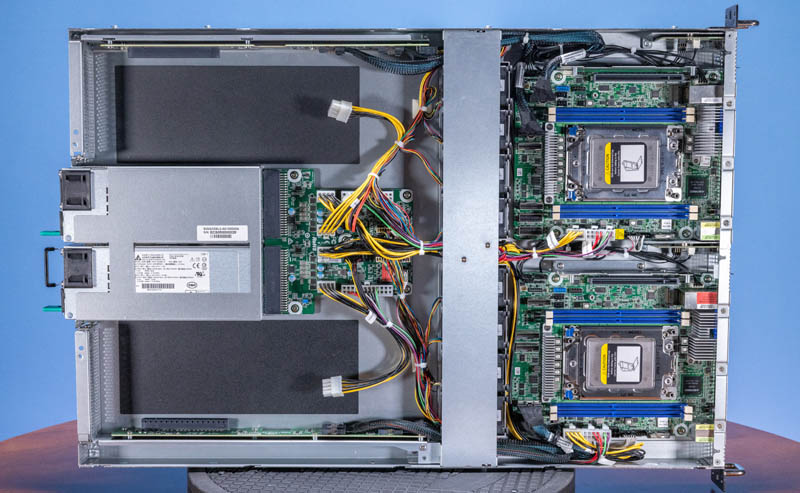

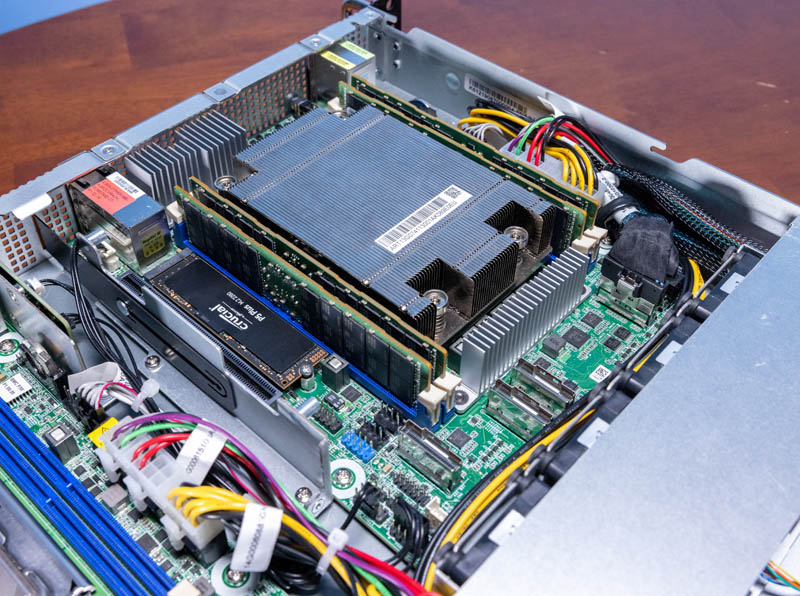

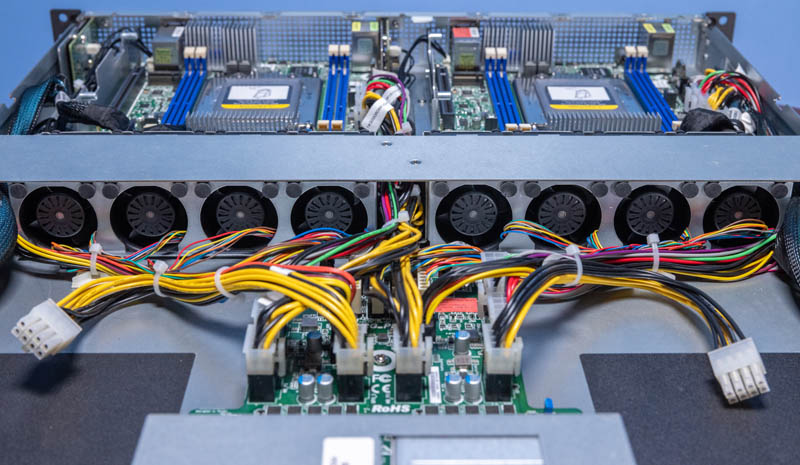

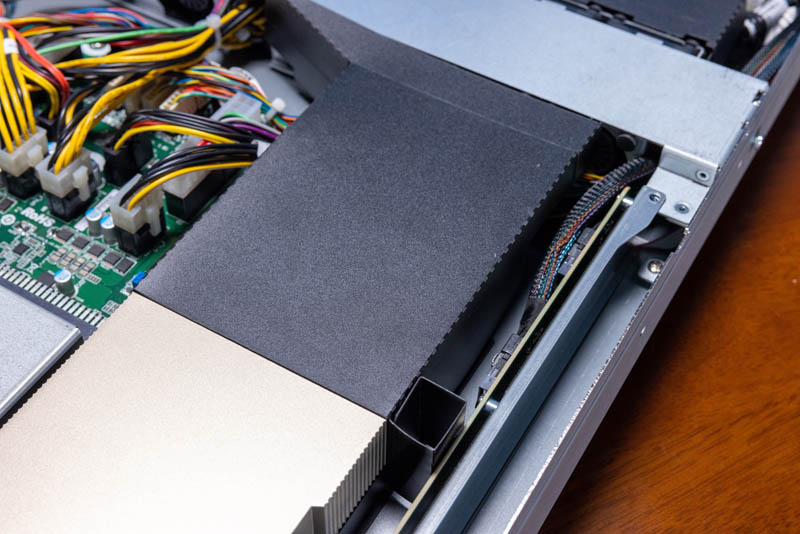

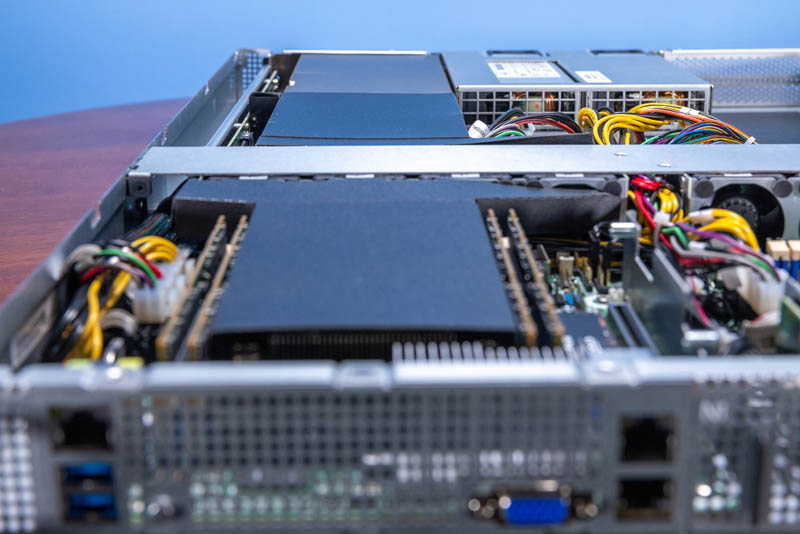

Inside, we get the two independent nodes. Here is an overview without the GPU and CPU shrouds to show how everything is laid out. We are going to focus our energy on Node 1, the node at the bottom of this photo, since the two nodes are virtually identical.

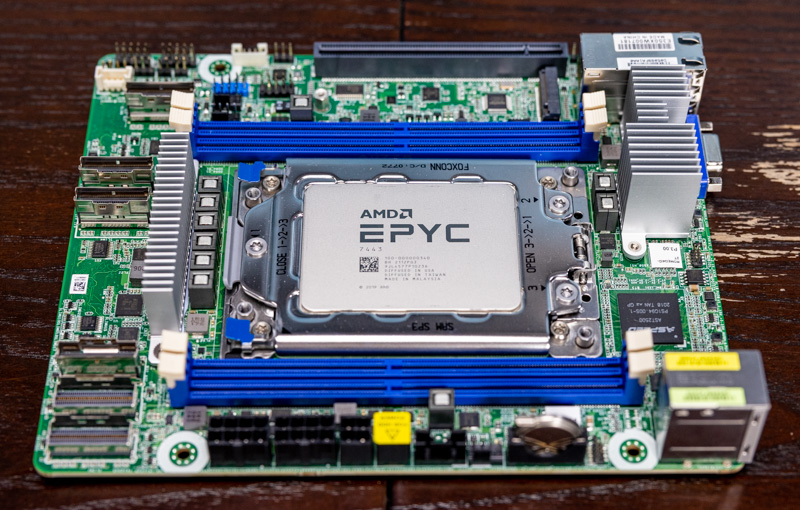

Each node is powered by its own motherboard and has its own AMD EPYC 7002/7003 socket along with memory and storage.

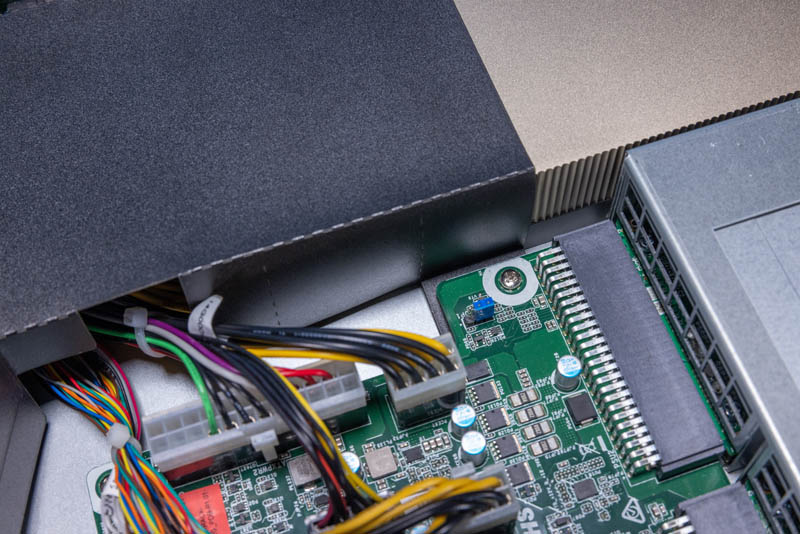

The motherboard may look familiar. This is the ASRock Rack ROMED4ID-2T motherboard that Will reviewed over a year ago.

This is a mITX-ish form factor that is the smallest AMD EPYC “Rome” and “Milan” motherboard we have seen to date. As you can see from the photos, even though the system and motherboard have “ROME” in their names, they work with the Milan and even Milan-X parts.

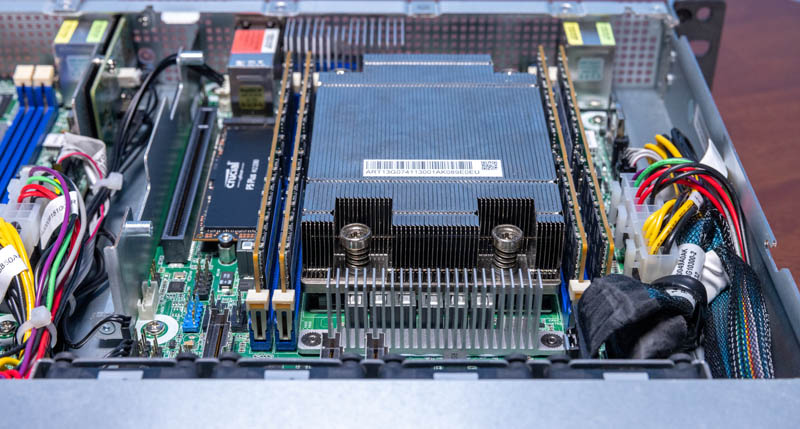

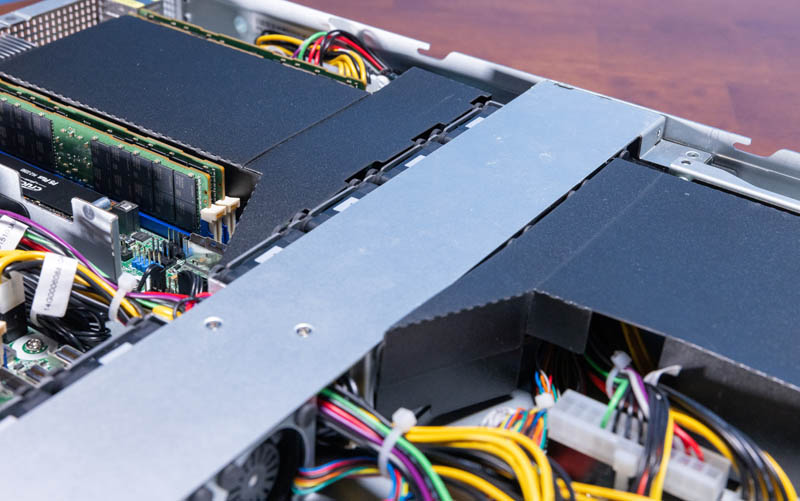

Here is a look at the fully configured node. One can see the large passive heatsink with four DIMMs. To achieve such a small motherboard size, ASRock Rack could only fit four DIMMs even though these are eight-channel memory CPUs.

Our sense is that this system will be filled either with larger 24-64 core CPUs, which offer more cores than AM4 parts, in the form of single-socket, price-optimized “P” SKUs, or with lower-cost 4-channel optimized parts. We covered the EPYC 4-channel memory optimization in a separate piece.
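To put the four-DIMM limit in perspective, here is a rough sketch of the theoretical peak memory bandwidth with four versus eight populated channels. It assumes DDR4-3200 with one DIMM per channel and a 64-bit data bus per channel. The memory speed is our assumption for illustration, the function name is hypothetical, and sustained real-world bandwidth will be lower than these peak figures.

```python
# Theoretical peak DRAM bandwidth for a DDR4 configuration.
# Assumptions (not from the review): DDR4-3200, one DIMM per channel,
# 64-bit (8-byte) data bus per channel. Peak numbers only.

def peak_bandwidth_gbs(channels: int,
                       mega_transfers_per_s: int = 3200,
                       bus_bytes: int = 8) -> float:
    """Return theoretical peak bandwidth in GB/s (decimal gigabytes)."""
    # MT/s * bytes per transfer = MB/s per channel; divide by 1000 for GB/s.
    return channels * mega_transfers_per_s * bus_bytes / 1000


four_channel = peak_bandwidth_gbs(4)   # the four DIMM slots on this board
eight_channel = peak_bandwidth_gbs(8)  # a fully populated eight-channel socket

print(f"4 channels populated: {four_channel:.1f} GB/s")
print(f"8 channels populated: {eight_channel:.1f} GB/s")
print(f"Fraction of full-socket peak: {four_channel / eight_channel:.0%}")
```

Under these assumptions, each node tops out at half of the socket's theoretical peak memory bandwidth, which is part of why the 4-channel optimized parts seem like a natural fit here.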

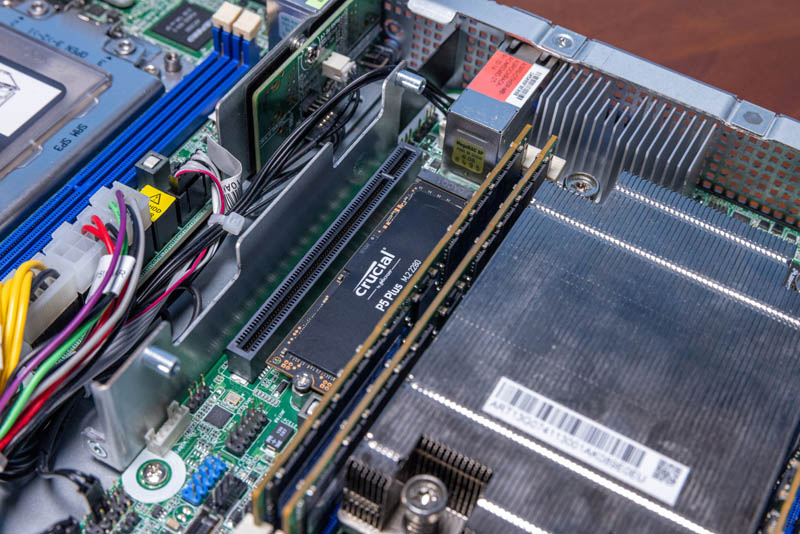

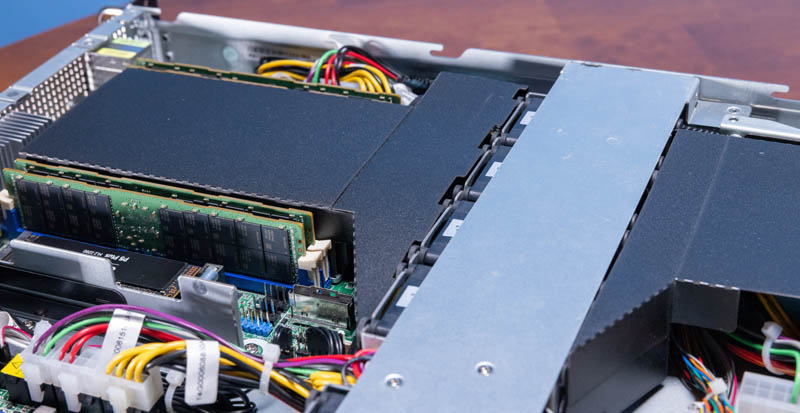

The only storage in this node is via the onboard M.2 slot. We installed a Crucial P5 Plus 2TB NVMe SSD because it was the only 2280 M.2 SSD we had on hand. This platform is compact, so it does not support 22110 NVMe SSDs, or at least not gracefully.

There is a metal mounting point above the PCIe Gen4 x16 slot. We could see some sort of riser being made and held using that mounting point for a second SSD, but the space is very cramped. We sense that, along with the cost optimization theme, this is a single M.2 SSD-only server node.

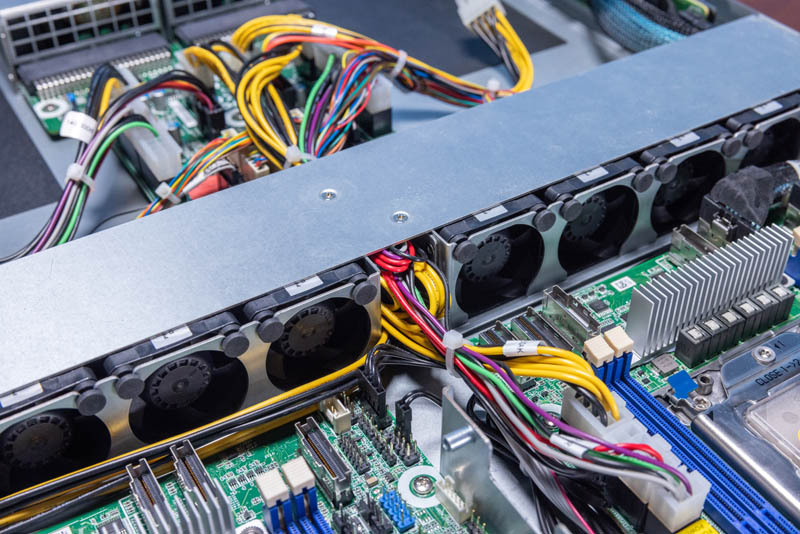

Directing airflow in a chassis like this is important. As a result, we get air baffles for the CPU and the GPU. Each node takes four of the dual fan modules and directs air using them.

The fan partition is very interesting. First, there is a metal bar that holds all of the fans in place while also cross-bracing the chassis. This makes the fans harder to service but might be required to keep everything in place. Four of the fan modules cool each node, which provides redundancy.

In the middle is the power cable pass-through. We can see the center channel crammed with cables running between the power distribution board and the motherboards.

A fun note here is that the fans are actually powered by the power distribution board, not the motherboards, and they are quite loud.

This power distribution board also powers the GPUs.

The GPUs have an air baffle to ensure proper airflow to the units.

An interesting small feature is that these GPU airflow guides hook into the screws holding the power distribution board down. That makes them easy to service. Often these guides are screwed down, making them harder to service. ASRock Rack’s design here is better than we were anticipating.

Here we can see the rear of the node with an NVIDIA A40 installed. The passive GPU worked well. We also tried an NVIDIA RTX A4500 as an active blower-style GPU, and with careful placement of the airflow guide, it worked. We did not get to test the NVIDIA RTX A6000 because it had to go back. That is one item we wish we could have tested with this system, as it is a high-power blower-style GPU.

Here is a look at the two airflow guides around four fan modules.

Here is a look from another direction.

Even though this is a 1U 2-node dense server, it is actually quite simple in its design. Each node has a CPU, four DIMM slots, an M.2 slot for storage, and a GPU slot. That simplicity helps keep costs down. The power supplies and the chassis are shared between the two nodes, which lowers the cost versus two separate 1U platforms with redundant power.

Next, let us get to the topology.

Any idea when these will be for sale anywhere?

Because the PSUs are redundant, that would seem to suggest that you can’t perform component level work on one node without shutting down both?

I’m a little surprised to see 10Gbase-T rather than SFPs in something that is both so clearly cost optimized and which has no real room to add a NIC. Especially for a system that very, very much doesn’t look like it’s intended to share an environment with people, or even make a whole lot of sense as a small business/branch server (since hypervisors have made it so that only very specific niches would prefer more nodes vs. a node they can carve up as needed).

Have the prices shifted enough that 10Gbase-T is becoming a reasonable alternative to DACs for 10Gb rack level switching? Is that still mostly not the favored approach; but 10Gbase-T provides immediate compatibility with 1GbE switches without totally closing the door on people who need something faster?

I see a basic dual compute node setup there.

A dual port 25GbE, 50GbE, or 100GbE network adapter in that PCIe Gen4 x16 slot and we have an inexpensive resilient cluster in a box.