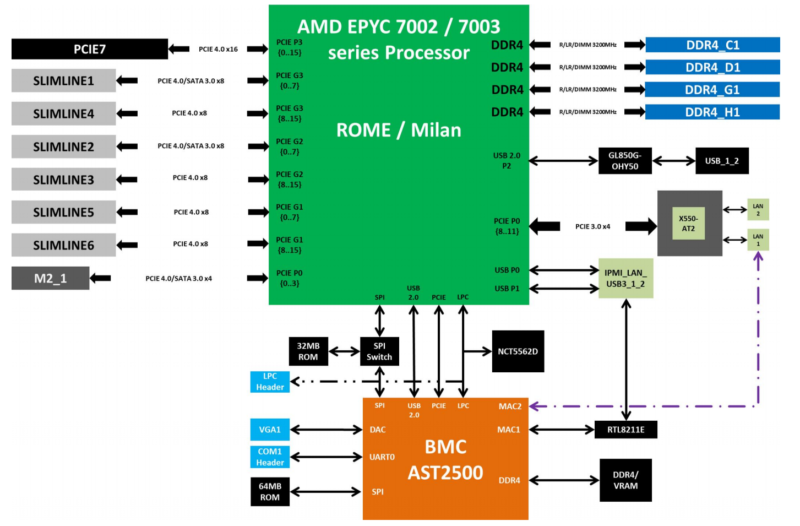

ASRock Rack 1U2N2G-ROME/2T Topology

We showed the topology in our previous review of the motherboard. Here is that view of each node:

This is a per-node view. The two nodes are independent and share only power, the chassis, and perhaps cooling. The main difference between the two nodes is which Slimline connectors are used.

Functionally, that should make little difference, but it does mean that, by default in our system, each node uses different PCIe controllers on the CPU. If the cables were connected to the same ports on both nodes, this difference would not exist. Still, the vast majority of users will not notice it.

Next, let us discuss management.

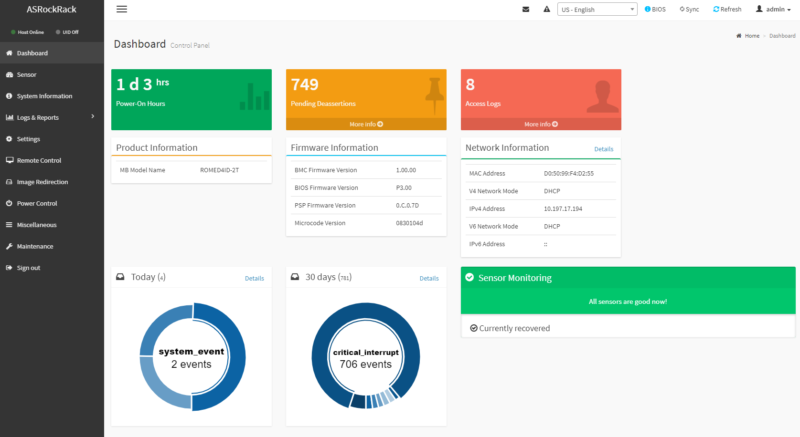

ASRock Rack 1U2N2G-ROME/2T Management

In terms of management, we get the standard ASRock Rack IPMI management interface via the ASPEED AST2500 controller. Will went over the management of the motherboard in his review.

As he noted, we have gone over the exact feature set of the ASRock Rack AST2500 BMC implementation in previous reviews like the X570D4U-2L2T and ROMED6U-2L2T. Everything written in those reviews applies here; we get full HTML5 iKVM, including remote media, along with BIOS and BMC firmware updates.

Will noted that one challenge with this platform is slow remote media performance, and we saw the same behavior again with the motherboards built into this server.

Overall, the management works as we would expect, except that remote media can load slowly via the HTML5 iKVM viewer.

Next, let us get to performance.

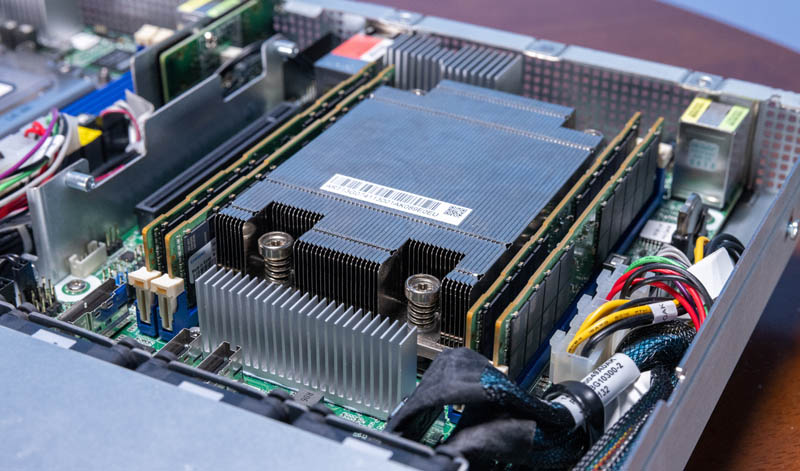

ASRock Rack 1U2N2G-ROME/2T Performance

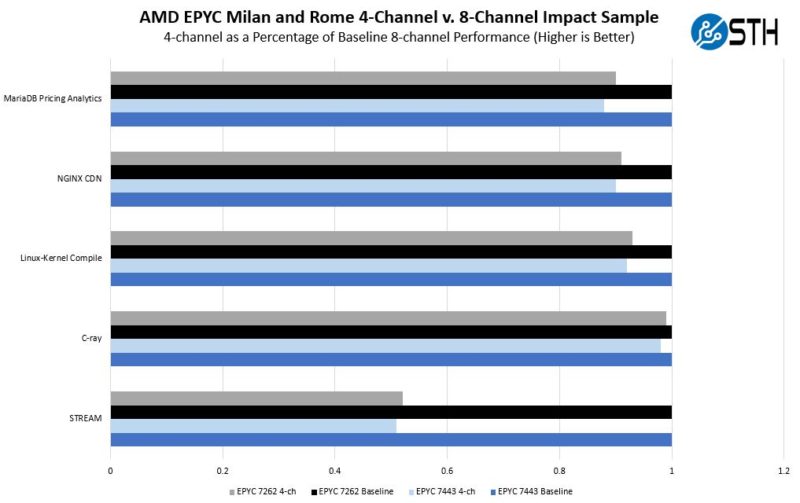

Performance matched the results we saw when testing this motherboard in a desktop configuration in 2021. That is an accomplishment, since cooling in 1U servers can be challenging. We again ran an AMD EPYC 7262 and an AMD EPYC 7443 in the ASRock Rack platform and compared our results to the 8-channel data we had for both CPUs.

One can clearly see the impact of having four-channel memory. We will again note that, at the lower end, AMD has EPYC “Rome” generation CPUs (also for use in Milan platforms) designed specifically for this market. Typically, these are lower core count (8-16 core) parts. Memory can be a large share of the system cost alongside those lower core count CPUs, so AMD offers parts optimized for four-channel memory deployments.
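To put the four-channel penalty in rough numbers, here is a minimal sketch of the theoretical peak memory bandwidth arithmetic. It assumes DDR4-3200 on every channel (the DIMM speed is an assumption, not a figure from our test setup) and a 64-bit (8-byte) data bus per channel. Under those assumptions, each channel peaks at 25.6 GB/s, so eight channels give 204.8 GB/s and four channels give 102.4 GB/s. The core counts are the 8-core EPYC 7262 and the 24-core EPYC 7443. These are theoretical ceilings only; measured bandwidth will be lower.

```python
# Theoretical peak DRAM bandwidth = channels x transfer rate x bus width.
# Assumption: DDR4-3200 on every channel; the actual DIMM speed may differ.

DDR4_BUS_WIDTH_BYTES = 8  # 64-bit data bus per channel (ECC bits excluded)


def peak_bandwidth_gbs(channels: int, mega_transfers: int = 3200) -> float:
    """Theoretical peak bandwidth in GB/s (decimal, 10**9 bytes per second)."""
    return channels * mega_transfers * 1e6 * DDR4_BUS_WIDTH_BYTES / 1e9


def per_core_gbs(channels: int, cores: int, mega_transfers: int = 3200) -> float:
    """Theoretical peak bandwidth divided evenly across all cores."""
    return peak_bandwidth_gbs(channels, mega_transfers) / cores


if __name__ == "__main__":
    # Compare four-channel (this platform) with eight-channel configurations.
    for name, cores in (("EPYC 7262", 8), ("EPYC 7443", 24)):
        for channels in (4, 8):
            print(
                f"{name}: {channels}-channel peak "
                f"{peak_bandwidth_gbs(channels):.1f} GB/s, "
                f"{per_core_gbs(channels, cores):.2f} GB/s per core"
            )
```

Because peak bandwidth scales linearly with channel count, halving the channels halves the ceiling. Dividing that ceiling by core count shows why the higher core count EPYC 7443 is more exposed to the four-channel limit than a low core count part.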

Overall, performance is what we would expect.

Next, let us get to the power consumption.
